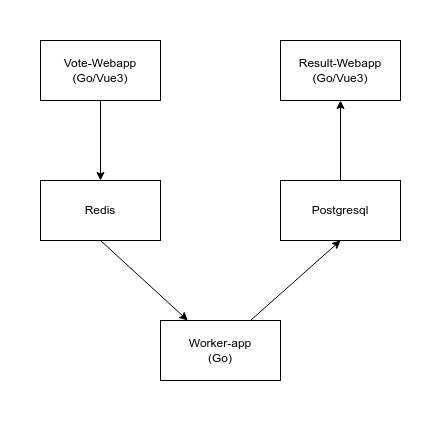

☸️ Example of a distributed voting app running on Kubernetes. Written in Golang with Terraform definitions to deploy to AWS

This repository provides a complete, modern, ready-to-deploy example of a dockerized, distributed app. It can be deployed with Docker-Compose, Kubernetes templates, or a Helm Chart.

k8s-voting-app-aws/

├─ .github/ # Github workflows

├─ docs/

│ ├─ app-architecture.jpg # App's architecture diagram

│ ├─ README-FR.md # French translation of the readme

├─ helm/ # Helm Chart definitions

├─ k8s-specifications/ # K8s Templates files

├─ voting-app/ # Result, Vote and Worker source code

├─ *.tf # terraform specs files

├─ *.tfvars # terraform values files

├─ *.yml # docker-compose files

- Download and install Docker and Docker-Compose

- Clone this repository:

  `git clone git@github.com:hbollon/k8s-voting-app-aws.git` (you can alternatively clone over HTTPS)

- Open a terminal inside the cloned repository folder and build the Docker images:

  `docker-compose build`

- Start all services:

  `docker-compose up -d`

The result app should now be accessible at localhost:9091 and the vote app at localhost:9090.

To stop all deployed resources, run: `docker-compose down`
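The containers can take a few seconds to start answering after `docker-compose up -d`. As a rough sketch, assuming `curl` is installed, a small polling helper can confirm that both ports respond. The function name `wait_for_url` and its defaults are illustrative and not part of this repository.

```shell
# Poll a URL until it answers or the attempts run out.
# Arguments: URL, max attempts (default 30), delay in seconds (default 2).
wait_for_url() {
  url="$1"; attempts="${2:-30}"; delay="${3:-2}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -f: fail on HTTP errors, -s: silent, -o /dev/null: discard the body
    if curl -fs -o /dev/null --max-time 5 "$url"; then
      echo "up: $url"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "not reachable: $url" >&2
  return 1
}

# Usage (after `docker-compose up -d`):
#   wait_for_url http://localhost:9090 && wait_for_url http://localhost:9091
```

The helper returns a non-zero status when a service never comes up, so it can also gate later steps in a script.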

Before deploying the app, you must install Minikube and start a cluster:

- Install Minikube

- Start a Minikube cluster:

  `minikube start`

- Check that Minikube is fully up (`minikube status`) and that kubectl is successfully linked (`kubectl get pods -A`)

- Enable the Nginx Ingress Controller addon:

  `minikube addons enable ingress`

- Deploy all k8s resources:

  `kubectl apply -f k8s-specifications --namespace=voting-app-stack`

- Get your cluster IP using:

  `minikube ip`

- Enable ingress access:

  - On Linux: edit your hosts file, located at `/etc/hosts`, by adding `<minikube ip> result.votingapp.com vote.votingapp.com` to the end of it. Replace `<minikube ip>` with the real cluster IP.

  - On Windows: edit your hosts file, located at `c:\Windows\System32\Drivers\etc\hosts`, by adding `127.0.0.1 result.votingapp.com vote.votingapp.com` to the end of it. After that, start a Minikube tunnel: `minikube tunnel`

The result app should now be accessible at result.votingapp.com and the vote app at vote.votingapp.com.

To stop and destroy all deployed k8s resources, run `kubectl delete -f k8s-specifications --namespace=voting-app-stack`, then stop Minikube with `minikube stop`.
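The Linux hosts-file step described above can be scripted. The following is a minimal sketch, not something shipped with this repository: `hosts_entry` and `add_hosts_entry` are hypothetical helper names, and the second one only appends the line when it is not already present, so it is safe to run twice.

```shell
# Build the hosts-file line that maps both ingress hostnames to one IP.
hosts_entry() {
  printf '%s result.votingapp.com vote.votingapp.com\n' "$1"
}

# Append the line to a hosts file only if it is not already present.
# Arguments: IP address, hosts file path (default /etc/hosts).
add_hosts_entry() {
  entry="$(hosts_entry "$1")"
  file="${2:-/etc/hosts}"
  # -x: match the whole line, -F: fixed string (no regex)
  if grep -qxF "$entry" "$file" 2>/dev/null; then
    echo "already present in $file"
  else
    printf '%s\n' "$entry" >> "$file"
    echo "added to $file"
  fi
}

# Usage on Linux (writing /etc/hosts needs root):
#   hosts_entry "$(minikube ip)" | sudo tee -a /etc/hosts
```

Passing a different file as the second argument makes it possible to try the helper on a scratch copy before touching the real hosts file.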

- Update Helm repositories and download dependencies:

  `helm dependency update ./helm/voting-app`

- Deploy the Helm Chart:

  `helm template voting-app ./helm/voting-app --namespace=voting-app-stack | kubectl apply -f -`

- Get your cluster IP using:

  `minikube ip`

- Enable ingress access:

  - On Linux: edit your hosts file, located at `/etc/hosts`, by adding `<minikube ip> result.votingapp.com vote.votingapp.com` to the end of it. Replace `<minikube ip>` with the real cluster IP.

  - On Windows: edit your hosts file, located at `c:\Windows\System32\Drivers\etc\hosts`, by adding `127.0.0.1 result.votingapp.com vote.votingapp.com` to the end of it. After that, start a Minikube tunnel: `minikube tunnel`

The result app should now be accessible at result.votingapp.com and the vote app at vote.votingapp.com.

To stop and destroy all deployed k8s resources, run `helm template voting-app ./helm/voting-app --namespace=voting-app-stack | kubectl delete -f -`, then stop Minikube with `minikube stop`.
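The deploy and destroy commands above differ only in the kubectl verb, so they can share one wrapper. This is an illustrative sketch; `chart_manifests` is a hypothetical name, and the chart path and namespace are simply the ones used in this guide.

```shell
# Render the chart and pipe the manifests to kubectl.
# Argument: the kubectl verb to run on the rendered manifests (apply or delete).
chart_manifests() {
  action="$1"
  case "$action" in
    apply|delete) ;;
    *) echo "usage: chart_manifests apply|delete" >&2; return 2 ;;
  esac
  # The exit status is kubectl's: in plain sh a helm failure in the first
  # half of the pipe is not reflected in the return code.
  helm template voting-app ./helm/voting-app --namespace=voting-app-stack \
    | kubectl "$action" -f -
}

# Usage:
#   chart_manifests apply    # deploy
#   chart_manifests delete   # tear down
```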

To deploy the app to AWS, you must first create an infrastructure based on EKS (Elastic Kubernetes Service). This repository includes all the Terraform definitions needed to do that through an IaC (Infrastructure as Code) workflow. You must have an AWS account to follow this guide. Be careful: although AWS offers a free tier for new accounts, this infrastructure can generate some costs, even if they are usually very limited. Costs can multiply in case of misconfiguration or misuse.

I am not responsible in any way for any resulting invoice.

- Clone this repo

- Create a new IAM User on your AWS Account:

  - Go to the IAM section and create a new user named "TerraformUser"

  - Add this user to a new group named "TerraformFullAccessGroup" with AdministratorAccess and AmazonEKSClusterPolicy rights

  - Once done, save the Access Key ID and the Secret Access Key: AWS shows the secret only once, at creation time

- Go to the VPC panel of the AWS Console and get two different subnet IDs from the default VPC. Add these two IDs to the `values.tfvars` file at the root of this project (replace `<subnet_id_1>` and `<subnet_id_2>`).

- For the following steps, you will need a credentials management method so that Terraform and the AWS CLI can use these keys. The easiest way is to set the AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY environment variables, but you can also use tools like Summon or AWS config files.

- Open a console at the root of this project directory and execute:

  - `terraform init`

  - `terraform plan -var-file=values.tfvars`: check that the output is generated without any errors.

  - `terraform apply -var-file=values.tfvars` (this operation can take a while, don't worry)

- If the previous commands ran well, you should now have a working EKS cluster. To link your kubectl installation to it, run `aws eks update-kubeconfig --region eu-west-3 --name eks_cluster_voting_app`. Change the region flag if you deployed the EKS cluster to another region. Once done, run `kubectl get pods -A`; if it works, your fresh EKS cluster is ready.

- Finally, deploy all the k8s resources:

  - With k8s templates: `kubectl apply -f k8s-specifications --namespace=voting-app-stack`

  - With the Helm Chart: `helm dependency update ./helm/voting-app` and then `helm template voting-app ./helm/voting-app --namespace=voting-app-stack | kubectl apply -f -`
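The credential and provisioning steps above can be chained in a script that stops at the first failure. The sketch below is one possible arrangement rather than a script provided by this repository: `check_aws_env` and `deploy_eks` are hypothetical names, and it assumes the credentials are passed through environment variables rather than Summon or AWS config files.

```shell
# Fail early if the credentials Terraform and the AWS CLI expect are missing.
check_aws_env() {
  missing=0
  for name in AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY; do
    # Indirect lookup of the variable called $name (POSIX-compatible).
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Run the provisioning steps in order and stop at the first failure.
# Argument: AWS region (defaults to eu-west-3, the region used in this guide).
deploy_eks() {
  region="${1:-eu-west-3}"
  check_aws_env || return 1
  terraform init || return 1
  terraform plan -var-file=values.tfvars || return 1
  # apply still asks for interactive confirmation before creating resources
  terraform apply -var-file=values.tfvars || return 1
  aws eks update-kubeconfig --region "$region" --name eks_cluster_voting_app || return 1
  kubectl get pods -A
}

# Usage:
#   export AWS_ACCESS_KEY_ID=... AWS_SECRET_ACCESS_KEY=...
#   deploy_eks            # or: deploy_eks eu-west-1
```

Checking the variables first avoids a long `terraform plan` run that would only fail on authentication.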

You can get the AWS endpoint linked to your EKS cluster by running `terraform output -json`. However, the ingress resource is not compatible with it at the moment.

You can destroy everything by running: `terraform destroy -var-file=values.tfvars`

Never delete the generated .tfstate file while the infrastructure is deployed! Without it, you will be unable to delete the AWS resources with Terraform and will be forced to do it manually with the AWS web console or the AWS CLI.
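One way to guard against losing the state is to copy it somewhere safe after each apply. This is only a sketch: it assumes the state is stored locally under Terraform's default file name `terraform.tfstate`, and both the helper name `backup_tfstate` and the `./tfstate-backups` directory are hypothetical.

```shell
# Copy the local Terraform state to a timestamped backup file.
# Arguments: state file (default terraform.tfstate), backup directory
# (default ./tfstate-backups, a hypothetical location).
backup_tfstate() {
  state="${1:-terraform.tfstate}"
  dir="${2:-./tfstate-backups}"
  if [ ! -f "$state" ]; then
    echo "no state file at $state" >&2
    return 1
  fi
  mkdir -p "$dir" || return 1
  dest="$dir/$(basename "$state").$(date +%Y%m%d-%H%M%S)"
  # -p keeps the original timestamps and permissions; print the backup path
  cp -p "$state" "$dest" && echo "$dest"
}

# Usage (from the project root, after `terraform apply`):
#   backup_tfstate
```

State files can contain sensitive values, so the backup directory should stay out of version control.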

Many additional features are coming, including:

- Ingress compatibility with AWS and domain customization

- Monitoring/Alerting/Dashboarding using kube-prometheus-stack

- Style webapps with CSS

- And many more to come!

Contributions are greatly appreciated!

- Fork the project

- Create your feature branch (`git checkout -b feature/AmazingFeature`)

- Commit your changes (`git commit -m 'Add some amazing stuff'`)

- Push to the branch (`git push origin feature/AmazingFeature`)

- Create a new Pull Request

Issues and feature requests are welcome! Feel free to check the issues page.

👤 Hugo Bollon

- GitHub: @hbollon

- LinkedIn: @Hugo Bollon

- Portfolio: hugobollon.me

Give a ⭐️ if this project helped you! You can also consider sponsoring me here ❤️

This project is under the MIT license.