This is the official repository of "MobileCLIP: Fast Image-Text Models through Multi-Modal Reinforced Training" (CVPR 2024) by Pavan Kumar Anasosalu Vasu, Hadi Pouransari, Fartash Faghri, Raviteja Vemulapalli, and Oncel Tuzel.

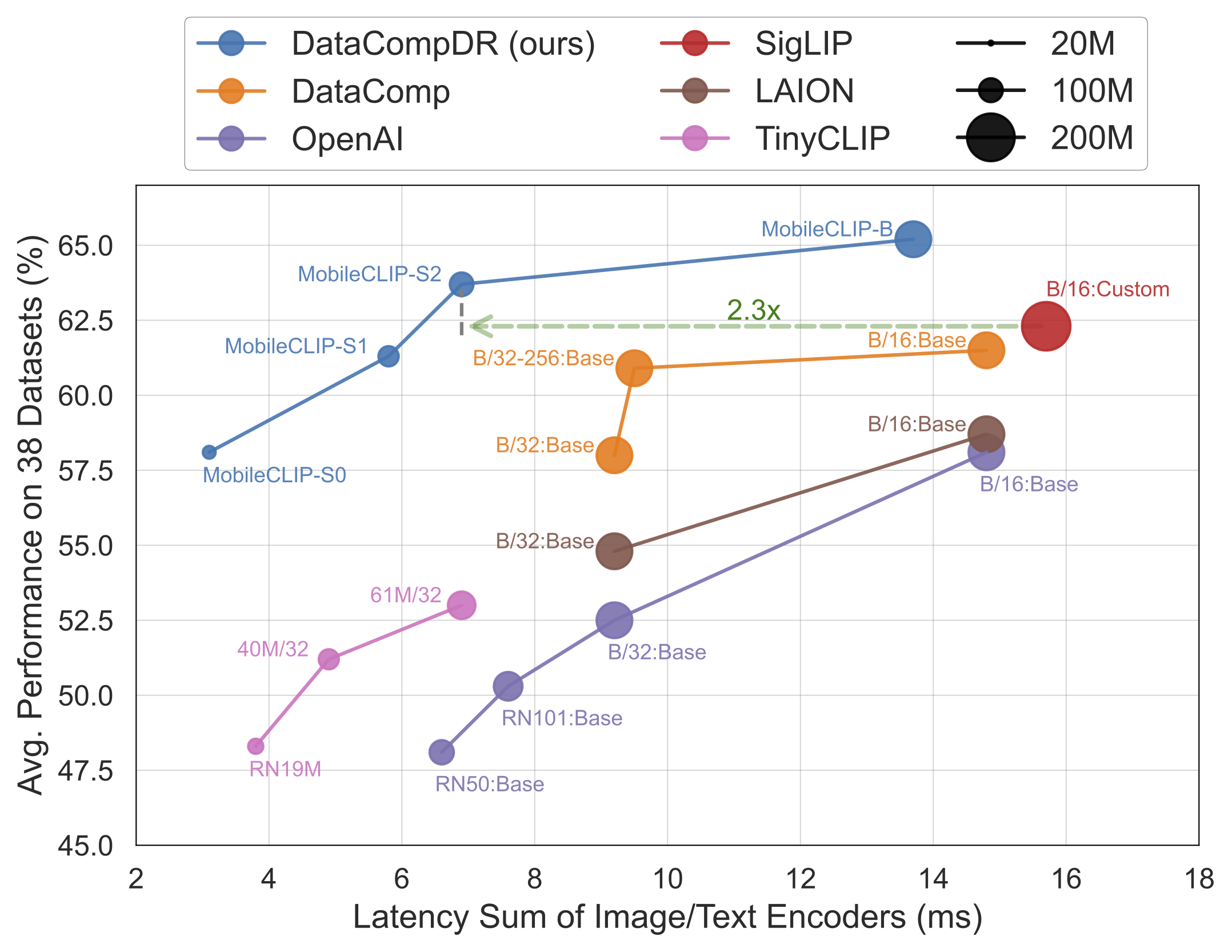

- Our smallest variant, MobileCLIP-S0, obtains zero-shot performance similar to OpenAI's ViT-B/16 model while being 4.8x faster and 2.8x smaller.
- MobileCLIP-S2 obtains better average zero-shot performance than SigLIP's ViT-B/16 model while being 2.3x faster and 2.1x smaller, and is trained with 3x fewer seen samples.
- MobileCLIP-B (LT) attains a zero-shot ImageNet performance of 77.2%, which is significantly better than recent works such as DFN and SigLIP with similar architectures, and even OpenAI's ViT-L/14@336.

Set up the environment and install the package:

    conda create -n clipenv python=3.10
    conda activate clipenv
    pip install -e .

To download pretrained checkpoints, follow the code snippet below:

    source get_pretrained_models.sh   # Files will be downloaded to `checkpoints` directory.

For easy adoption, our model has an API similar to that of open_clip models. To use our model, follow the code snippet below:

    import torch
    from PIL import Image
    import mobileclip

    # Load the model, its image preprocessing transform, and the matching tokenizer.
    model, _, preprocess = mobileclip.create_model_and_transforms('mobileclip_s0', pretrained='/path/to/mobileclip_s0.pt')
    tokenizer = mobileclip.get_tokenizer('mobileclip_s0')

    # Prepare a single-image batch and the candidate text labels.
    image = preprocess(Image.open("docs/fig_accuracy_latency.png").convert('RGB')).unsqueeze(0)
    text = tokenizer(["a diagram", "a dog", "a cat"])

    with torch.no_grad(), torch.cuda.amp.autocast():
        image_features = model.encode_image(image)
        text_features = model.encode_text(text)
        # L2-normalize so that the dot product is a cosine similarity.
        image_features /= image_features.norm(dim=-1, keepdim=True)
        text_features /= text_features.norm(dim=-1, keepdim=True)

        # Scale the similarities and convert them to probabilities over the labels.
        text_probs = (100.0 * image_features @ text_features.T).softmax(dim=-1)

    print("Label probs:", text_probs)

To reproduce results, we provide a script to perform zero-shot evaluation on the ImageNet-1k dataset. To evaluate on all 38 datasets, please follow the instructions in datacomp.

    # Run evaluation with single GPU
    python eval/zeroshot_imagenet.py --model-arch mobileclip_s0 --model-path /path/to/mobileclip_s0.pt

Please refer to Open CLIP Results to compare with other models.
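To make the scoring in the usage snippet and the top-1 metric used in zero-shot evaluation more concrete, the following is a minimal, dependency-free sketch. It is not the repository's evaluation code: the helper names (`l2_normalize`, `label_probs`, `top1_accuracy`) and the toy 3-dimensional embeddings are assumptions made purely for illustration, and real features would come from `model.encode_image` and `model.encode_text`.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def label_probs(image_feat, text_feats, scale=100.0):
    """Mirror the usage snippet: softmax(scale * cosine(image, text_k)) over labels k."""
    img = l2_normalize(image_feat)
    sims = [sum(a * b for a, b in zip(img, l2_normalize(t))) for t in text_feats]
    return softmax([scale * s for s in sims])


def top1_accuracy(image_feats, text_feats, targets):
    """Fraction of images whose highest-probability label equals the target index."""
    correct = 0
    for feat, target in zip(image_feats, targets):
        probs = label_probs(feat, text_feats)
        if probs.index(max(probs)) == target:
            correct += 1
    return correct / len(targets)


# Toy embeddings, made up for illustration (hypothetical values, not model outputs).
text_feats = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
image_feats = [[0.9, 0.1, 0.0], [0.2, 0.7, 0.1], [0.6, 0.5, 0.0]]
targets = [0, 1, 1]  # the third image is deliberately closer to label 0 than to its target

probs = label_probs(image_feats[0], text_feats)
accuracy = top1_accuracy(image_feats, text_feats, targets)
print("Label probs:", [round(p, 4) for p in probs])
print("Top-1 accuracy:", accuracy)
```

Because the features are normalized first, the factor of 100 acts as a temperature: it sharpens the softmax so that small differences in cosine similarity translate into clearly separated label probabilities.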

| Model | # Seen Samples (B) | # Params (M) (img + txt) | Latency (ms) (img + txt) | IN-1k Zero-Shot Top-1 Acc. (%) | Avg. Perf. (%) on 38 datasets | PyTorch Checkpoint (url) |
|---|---|---|---|---|---|---|
| MobileCLIP-S0 | 13 | 11.4 + 42.4 | 1.5 + 1.6 | 67.8 | 58.1 | mobileclip_s0.pt |
| MobileCLIP-S1 | 13 | 21.5 + 63.4 | 2.5 + 3.3 | 72.6 | 61.3 | mobileclip_s1.pt |
| MobileCLIP-S2 | 13 | 35.7 + 63.4 | 3.6 + 3.3 | 74.4 | 63.7 | mobileclip_s2.pt |
| MobileCLIP-B | 13 | 86.3 + 63.4 | 10.4 + 3.3 | 76.8 | 65.2 | mobileclip_b.pt |
| MobileCLIP-B (LT) | 36 | 86.3 + 63.4 | 10.4 + 3.3 | 77.2 | 65.8 | mobileclip_blt.pt |

Note: MobileCLIP-B (LT) is trained for 300k iterations with a constant learning rate schedule and 300k iterations with a cosine learning rate schedule.
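The table reports parameter counts and latencies as separate image and text components. As a small convenience sketch, the snippet below sums them per model. The numbers are copied from the table; the `MODELS` dictionary and `totals` helper are hypothetical names, and adding the two latencies assumes the image and text encoders run one after the other, which the table itself does not state.

```python
# (image params M, text params M, image latency ms, text latency ms), copied from the table.
MODELS = {
    "MobileCLIP-S0": (11.4, 42.4, 1.5, 1.6),
    "MobileCLIP-S1": (21.5, 63.4, 2.5, 3.3),
    "MobileCLIP-S2": (35.7, 63.4, 3.6, 3.3),
    "MobileCLIP-B": (86.3, 63.4, 10.4, 3.3),
    "MobileCLIP-B (LT)": (86.3, 63.4, 10.4, 3.3),
}


def totals(name):
    """Return (total params in M, total latency in ms) for one table row."""
    img_params, txt_params, img_ms, txt_ms = MODELS[name]
    # Round to one decimal to match the table's precision and hide float noise.
    return round(img_params + txt_params, 1), round(img_ms + txt_ms, 1)


for name in MODELS:
    params, latency = totals(name)
    print(f"{name}: {params} M params, {latency} ms (img + txt)")
```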

If you found this code useful, please cite the following paper:

    @InProceedings{mobileclip2024,
      author = {Pavan Kumar Anasosalu Vasu and Hadi Pouransari and Fartash Faghri and Raviteja Vemulapalli and Oncel Tuzel},
      title = {MobileCLIP: Fast Image-Text Models through Multi-Modal Reinforced Training},
      booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
      month = {June},
      year = {2024},
    }

Our codebase is built using multiple open-source contributions; please see ACKNOWLEDGEMENTS for more details.