[ Project Page ] [ ArXiv ]

Computer Vision Lab, ETH Zurich

- 2024.06: code will be released within June 2024 (stay tuned!).

- 2024.04: MASA was awarded a CVPR highlight!

This is the repository for MASA, a universal instance appearance model for matching any object in any domain. MASA can be added on top of any detection or segmentation model to help it track any object it has detected.

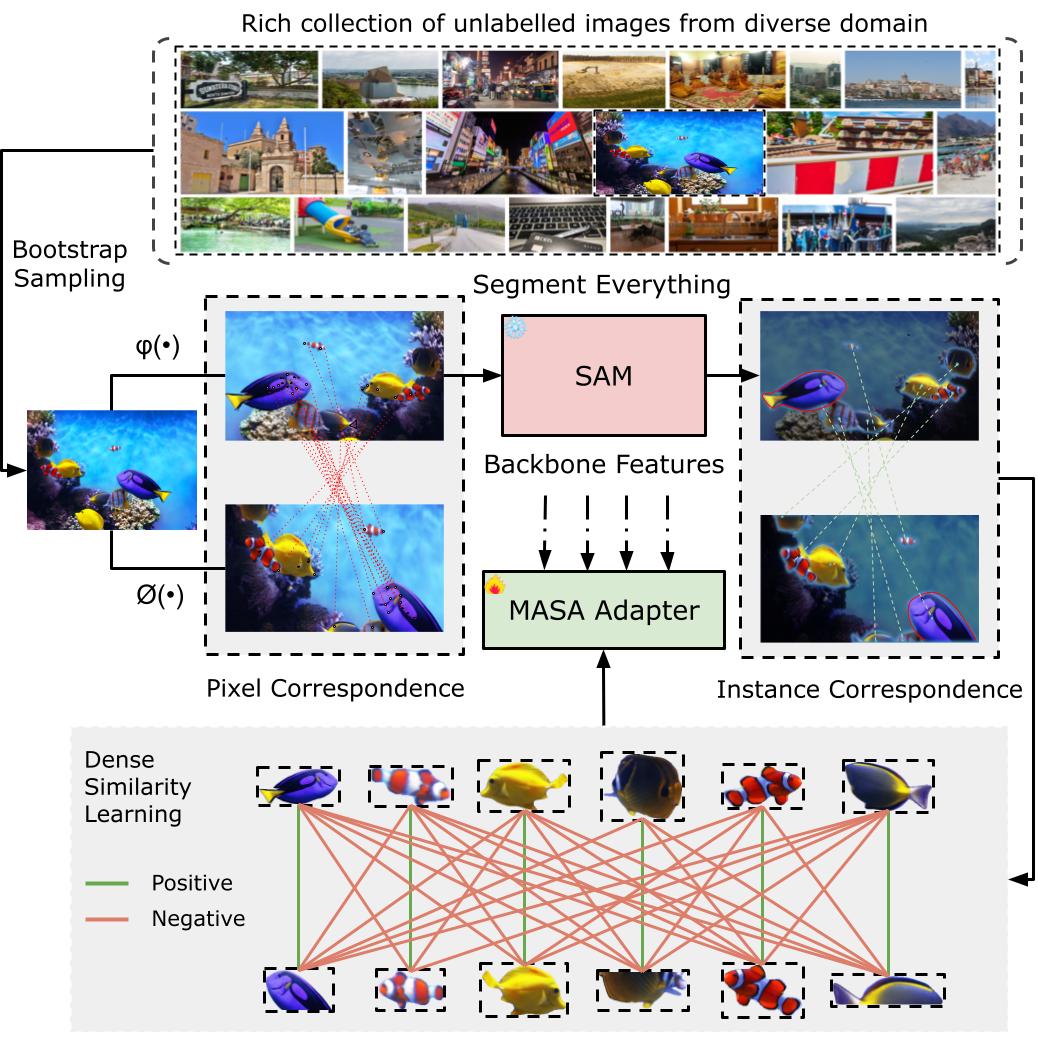

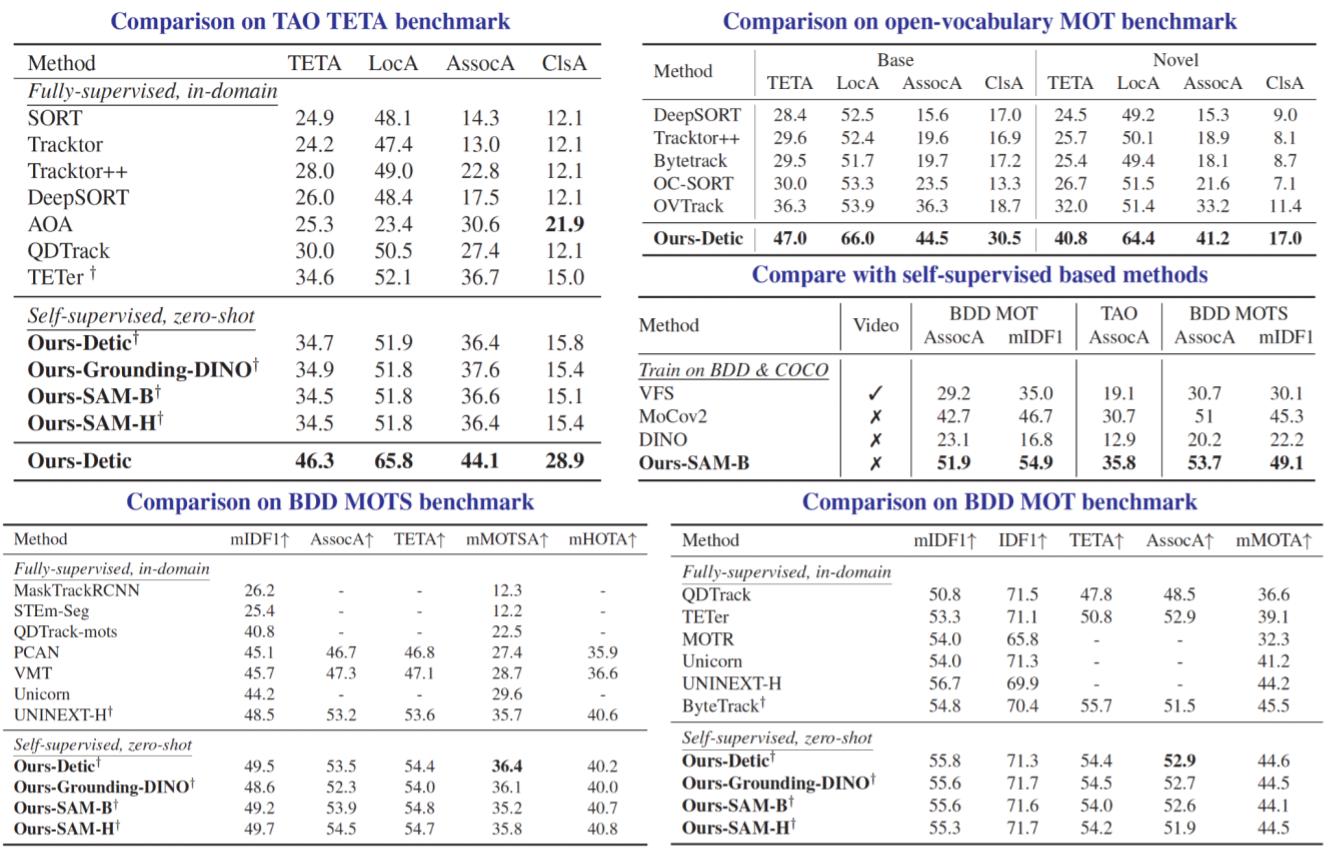

The robust association of the same objects across video frames in complex scenes is crucial for many applications, especially Multiple Object Tracking (MOT). Current methods predominantly rely on labeled domain-specific video datasets, which limits the cross-domain generalization of learned similarity embeddings. We propose MASA, a novel method for robust instance association learning, capable of matching any objects within videos across diverse domains without tracking labels. Leveraging the rich object segmentation from the Segment Anything Model (SAM), MASA learns instance-level correspondence through exhaustive data transformations. We treat the SAM outputs as dense object region proposals and learn to match those regions from a vast image collection. We further design a universal MASA adapter which can work in tandem with foundational segmentation or detection models and enable them to track any detected objects. Those combinations present strong zero-shot tracking ability in complex domains. Extensive tests on multiple challenging MOT and MOTS benchmarks indicate that the proposed method, using only unlabeled static images, achieves even better performance than state-of-the-art methods trained with fully annotated in-domain video sequences, in zero-shot association.
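To make the idea of association through similarity embeddings concrete, here is a minimal sketch of how per-object appearance embeddings could be used to link detections across two frames. It is an illustration only, not MASA's actual tracker: the function names `cosine` and `associate`, the greedy matching strategy, and the `min_sim` threshold of 0.5 are all assumptions made for this example, and the embeddings are toy 2-D vectors rather than outputs of a learned model.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def associate(track_embs, det_embs, min_sim=0.5):
    """Greedily match new detections to existing tracks by appearance similarity.

    track_embs: embeddings of objects tracked in the previous frame (hypothetical input).
    det_embs:   embeddings of objects detected in the current frame (hypothetical input).
    min_sim:    illustrative threshold below which a pair is never matched.
    Returns a sorted list of (track_index, detection_index) pairs.
    """
    # Score every track/detection pair and visit them from most to least similar.
    pairs = sorted(
        (
            (cosine(t, d), ti, di)
            for ti, t in enumerate(track_embs)
            for di, d in enumerate(det_embs)
        ),
        reverse=True,
    )
    used_tracks, used_dets, matches = set(), set(), []
    for sim, ti, di in pairs:
        if sim < min_sim:
            break  # all remaining pairs are even less similar
        if ti in used_tracks or di in used_dets:
            continue  # each track and each detection is matched at most once
        used_tracks.add(ti)
        used_dets.add(di)
        matches.append((ti, di))
    return sorted(matches)


# Toy example: two existing tracks, three detections in the next frame.
tracks = [[1.0, 0.0], [0.0, 1.0]]
detections = [[0.1, 0.9], [0.9, 0.1], [-1.0, -1.0]]
print(associate(tracks, detections))
```

In this toy example the third detection is dissimilar to both tracks and stays unmatched; a tracker would typically start a new track for it. Greedy matching is used here only for brevity, and an optimal assignment (for example, the Hungarian algorithm) is a common alternative.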

See more results on our project page!

For questions, please contact Siyuan Li.

    @article{masa,
      author = {Li, Siyuan and Ke, Lei and Danelljan, Martin and Piccinelli, Luigi and Segu, Mattia and Van Gool, Luc and Yu, Fisher},
      title = {Matching Anything By Segmenting Anything},
      journal = {CVPR},
      year = {2024},
    }

The authors would like to thank Bin Yan for help and discussion. Our code is built on mmdetection, OVTrack, TETA, and yolo-world. If you find our work useful, consider checking out their work.