Tengda Han, Weidi Xie, Andrew Zisserman. CVPR 2022 (Oral).

[project page] [PDF] [arXiv] [Video]

- [23.08.30] 📣 📣 We released WhisperX ASR output and InternVideo & CLIP-L14 visual features for HowTo100M here.

- [22.09.14] Fixed a bug that affected the ROC-AUC calculation on the HTM-Align dataset. Other metrics are not affected. Details

- [22.09.14] Fixed a few typos and some incorrect annotations in HTM-Align. This download link is up to date.

- [22.08.04] Released HTM-370K and HTM-1.2M here, the sentencified versions of HowTo100M. Thank you for your patience; I'm working on the rest.

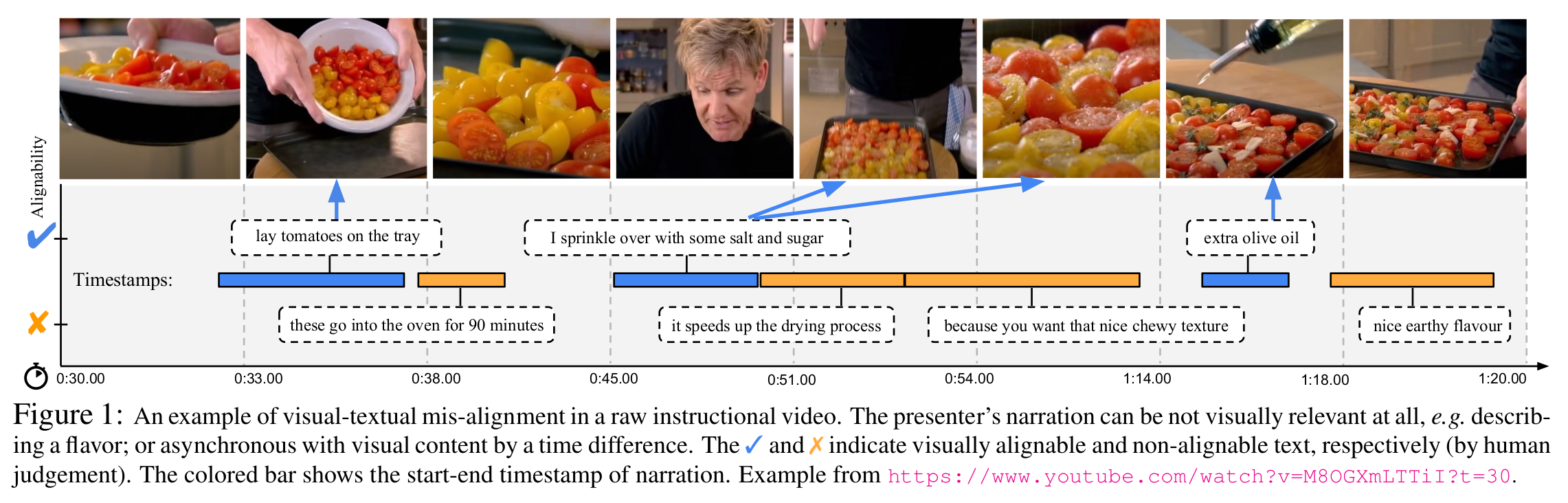

- Natural instructional videos (e.g. from YouTube) suffer from a visual-textual alignment problem: the narration often does not match what is on screen at that moment, which introduces a lot of noise and makes these videos hard to learn from.

- Our model learns to predict:

  - whether an ASR sentence is alignable with the video, and

  - if it is, the video timestamps that correspond to it most closely.

- Our model is trained without human annotation and can be used to clean up noisy instructional videos (as an output, we release the Auto-Aligned HTM dataset, HTM-AA).

- In our paper, we show that the auto-aligned HTM dataset can improve the quality of the backbone's visual representation compared with the original HTM.
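To make the two predictions above concrete, here is a minimal sketch of how per-sentence outputs could be turned into cleaned video-text pairs. It is not TAN's code: it assumes the model has already produced, for each ASR sentence, an alignability logit and a row of sentence-to-video similarity scores over fixed timestamps. The names `align_sentences`, `build_clean_pairs`, `sim`, `align_logits`, and the 0.5 threshold are all hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple


def sigmoid(x: float) -> float:
    """Map an alignability logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def align_sentences(
    sim: Sequence[Sequence[float]],   # hypothetical: [num_sentences][num_timestamps] similarity
    align_logits: Sequence[float],    # hypothetical: one alignability logit per sentence
    timestamps: Sequence[float],      # video time (seconds) of each similarity column
    threshold: float = 0.5,           # assumed cut-off on alignability probability
) -> List[Optional[float]]:
    """Return the best-matching timestamp for each alignable sentence, else None."""
    results: List[Optional[float]] = []
    for row, logit in zip(sim, align_logits):
        if sigmoid(logit) < threshold:
            results.append(None)  # judged unalignable (e.g. off-screen chatter)
            continue
        # Alignable: pick the timestamp with the highest similarity.
        best = max(range(len(row)), key=lambda t: row[t])
        results.append(timestamps[best])
    return results


def build_clean_pairs(
    sentences: Sequence[str],
    sim: Sequence[Sequence[float]],
    align_logits: Sequence[float],
    timestamps: Sequence[float],
    threshold: float = 0.5,
) -> List[Tuple[str, float]]:
    """Keep only (sentence, timestamp) pairs predicted to be alignable."""
    preds = align_sentences(sim, align_logits, timestamps, threshold)
    return [(s, t) for s, t in zip(sentences, preds) if t is not None]


if __name__ == "__main__":
    sentences = ["crack two eggs into a bowl", "don't forget to subscribe", "whisk until smooth"]
    timestamps = [0.0, 1.0, 2.0, 3.0]
    sim = [[0.9, 0.2, 0.1, 0.0], [0.3, 0.3, 0.2, 0.1], [0.1, 0.2, 0.8, 0.4]]
    logits = [2.0, -3.0, 1.5]
    print(build_clean_pairs(sentences, sim, logits, timestamps))
```

Dropping unalignable sentences and re-timing the rest is the kind of clean-up that produces an HTM-AA-style dataset; the real model computes these scores jointly from video and text, which this sketch takes as given.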

Datasets (see the project page for details)

- HTM-Align: A manually annotated 80-video subset for alignment evaluation.

- HTM-AA: A large-scale paired video-text dataset automatically aligned by our TAN without any manual annotation.

- Sentencified HTM: The original HTM dataset, with the ASR processed into full sentences.

- Sentencify-text: A pipeline that pre-processes ASR text segments into full sentences.
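As a rough illustration of what "sentencifying" ASR means, the sketch below merges timed ASR fragments into sentences and gives each sentence the span from its first fragment's start to its last fragment's end. It is a simplified stand-in, not the Sentencify-text pipeline: it assumes punctuation is already present in the text (raw YouTube ASR usually lacks it) and does not split a fragment that contains a sentence boundary in the middle. The function name `sentencify` and the `Segment` layout are hypothetical.

```python
from typing import List, Optional, Tuple

# Hypothetical segment layout: (start_sec, end_sec, text)
Segment = Tuple[float, float, str]

# Assumed sentence terminators; real pipelines restore punctuation first.
SENTENCE_END = (".", "?", "!")


def sentencify(segments: List[Segment]) -> List[Segment]:
    """Merge consecutive ASR fragments until one ends with sentence punctuation."""
    sentences: List[Segment] = []
    buf: List[str] = []
    start: Optional[float] = None
    end = 0.0
    for seg_start, seg_end, text in segments:
        text = text.strip()
        if not text:
            continue  # skip empty fragments
        if start is None:
            start = seg_start  # first fragment of a new sentence
        buf.append(text)
        end = seg_end
        if text.endswith(SENTENCE_END):
            sentences.append((start, end, " ".join(buf)))
            buf, start = [], None
    if buf and start is not None:
        # Flush a trailing fragment that never reached terminal punctuation.
        sentences.append((start, end, " ".join(buf)))
    return sentences


if __name__ == "__main__":
    segs = [
        (0.0, 2.0, "first, crack the"),
        (2.0, 4.0, "eggs into a bowl."),
        (4.0, 5.5, "Now whisk"),
        (5.5, 7.0, "them well!"),
        (7.0, 8.0, "and then"),
    ]
    for s in sentencify(segs):
        print(s)
```

The merged timestamps are only as precise as the fragment boundaries, which is one reason sentence-level timings still need the alignment step described above.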

- See instructions in [train]

- See instructions in [end2end]

@InProceedings{han2022align,
  title={Temporal Alignment Network for long-term Video},
  author={Tengda Han and Weidi Xie and Andrew Zisserman},
  booktitle={CVPR},
  year={2022}}