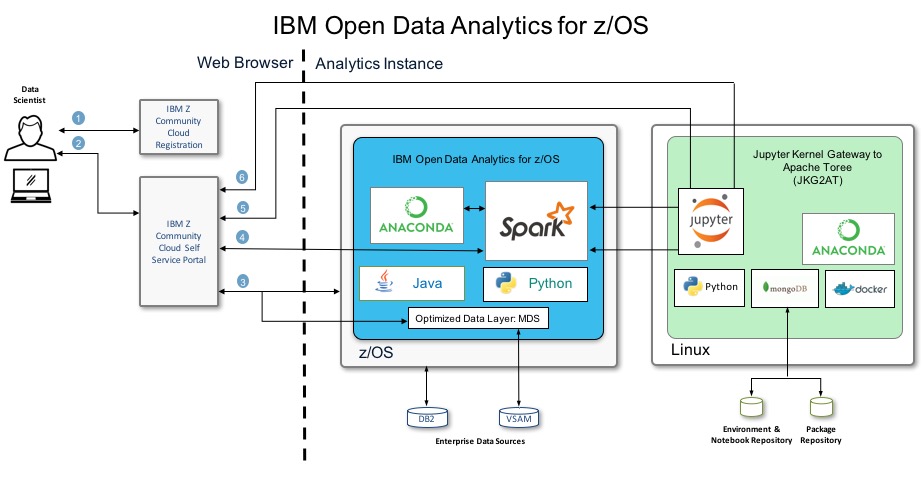

The following instructions show how to explore analytics applications using IBM Open Data Analytics for z/OS (IzODA). The examples use data stored in DB2 tables and VSAM data sets, and demonstrate machine learning algorithms such as random forest and logistic regression. You will use fictitious customer information and credit card transaction data to learn how a financial organization might analyze its enterprise data to evaluate customer retention. You may also upload your own data (DB2 tables, VSAM data sets, or CSV files) to explore IzODA further. Because resources are limited, do not upload files larger than 100MB.

1. The first example demonstrates the Spark-submit function with an application written in Scala.

2. The second example demonstrates a client retention analysis using a Python 3 notebook.

3. The third example demonstrates a client retention analysis using PySpark APIs in a Python 3 notebook.

- Sign up for an IBM Z Community Cloud account.

- Log in to the Self Service Portal.

- Configure your Analytics Instance and upload data.

- Use case #1: Run a Scala program in batch mode.

- Use case #2: Run a Python program with Jupyter Notebook.

- Use case #3: Run a PySpark program with Jupyter Notebook.

- If you have not done so already, go to IBM Z Community Cloud Registration page and register for a 30-day trial account.

- Fill out and submit the registration form.

- You will receive an email containing credentials to access the self-service portal. This is where you can start exploring all our available services.

Note: The Mozilla Firefox browser is recommended for these examples.

- Open a web browser and access the IBM Z Community Cloud self-service portal.

  1. Enter your Portal User ID and Portal Password.

  2. Click **'Sign In'**.

  You will see the home page for the IBM Z Community Cloud self-service portal.

- Select the Analytics Service.

  - Click 'Try Analytics Service'

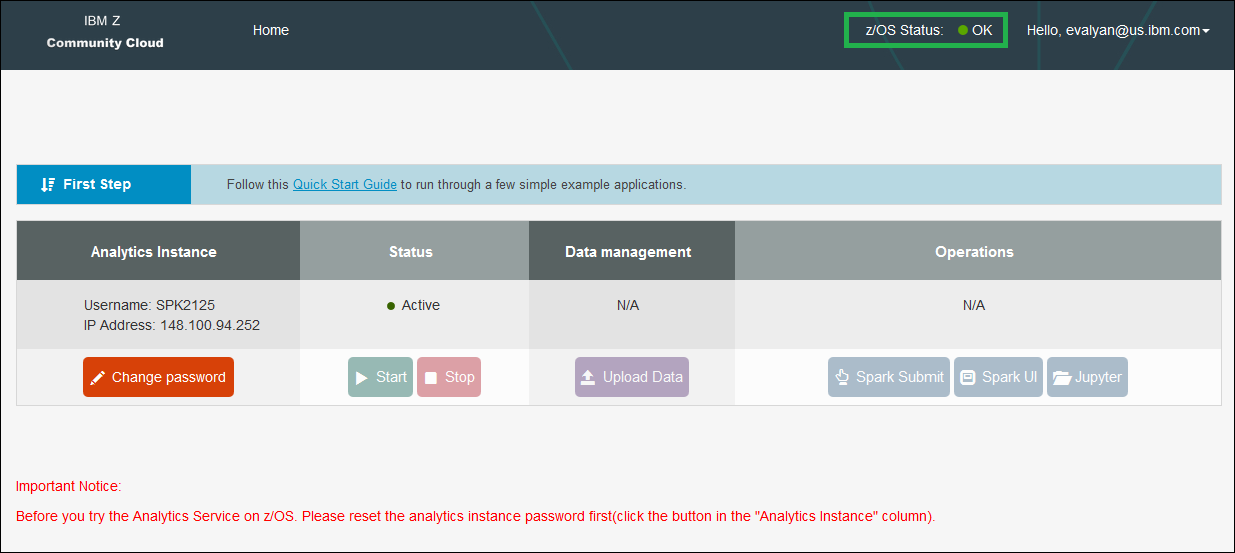

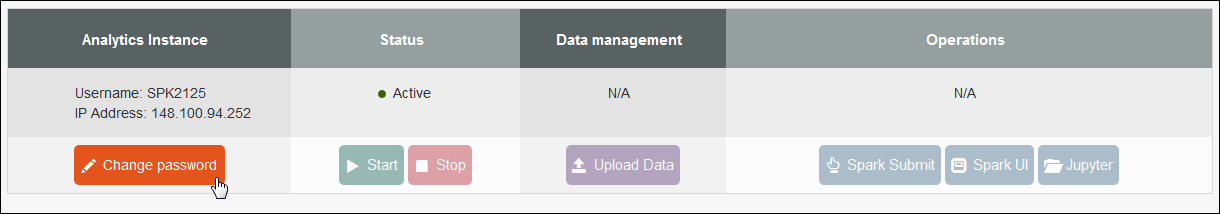

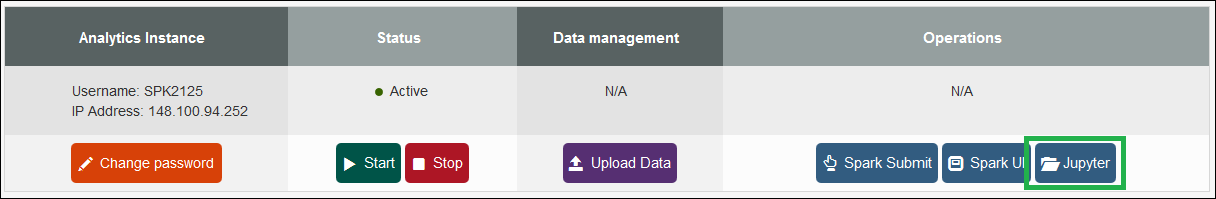

You will now see a dashboard, which shows the status of your Analytics instance.

- At the top of the screen, notice the 'z/OS Status' indicator, which should show the status of your instance as 'OK'.

- In the middle of the screen, the ‘Analytics Instance’, ‘Status’, ‘Data management’, and ‘Operations’ sections will be displayed. The ‘Analytics Instance’ section contains your individual 'Analytics Instance Username' and IP address.

- Below the field headings, you will see buttons for functions that can be applied to your instance.

The following table lists the operation for each function:

| Function | Operation |
|---|---|
| Change Password | Click to change your Analytics Instance password |
| Start | Click to start your individual Spark cluster |
| Stop | Click to stop your individual Spark cluster |
| Upload Data | Click to select and load your DDL and data file into DB2 |
| Spark Submit | Click to select and run your Spark program |
| Spark UI | Click to launch your individual Spark worker output GUI |
| Jupyter | Click to launch your individual Jupyter Notebook GUI |

-

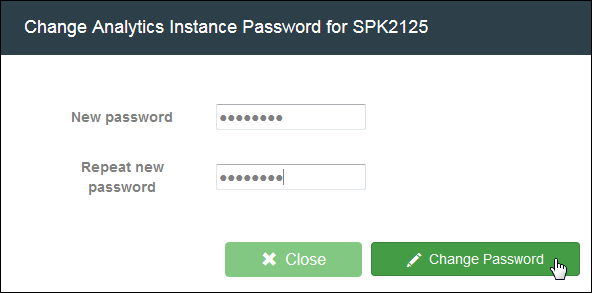

For logging in the first time, you must set a new Analytics Instance (Spark on z/OS) password.

- Click ‘Change Password’ in the ‘Analytics Instance’ section

- Enter a new password for your Analytics Instance (Spark on z/OS). The password must be alphanumeric and no longer than 8 characters.

- Repeat the new password for your Analytics Instance (Spark on z/OS).

- Click ‘Change Password’.

- This is your 'Analytics Instance Password' that will be used for subsequent steps
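The password rule stated in these steps (alphanumeric, no longer than 8 characters) can be checked locally before you submit the form. The following is a minimal sketch; the function name `is_valid_instance_password` is hypothetical, and it assumes that "alphanumeric" means ASCII letters and digits only. The portal may enforce additional rules that are not listed here.

```python
def is_valid_instance_password(password: str) -> bool:
    """Return True if the password meets the two rules stated in the steps.

    Assumption: "alphanumeric" means ASCII letters and digits only.
    """
    return (
        0 < len(password) <= 8      # non-empty and at most 8 characters
        and password.isascii()      # reject non-ASCII letters and digits
        and password.isalnum()      # letters and digits only
    )


if __name__ == "__main__":
    # Print each candidate together with the result of the check.
    for candidate in ["Spark123", "toolongpw1", "bad-pw!"]:
        print(candidate, is_valid_instance_password(candidate))
```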

-

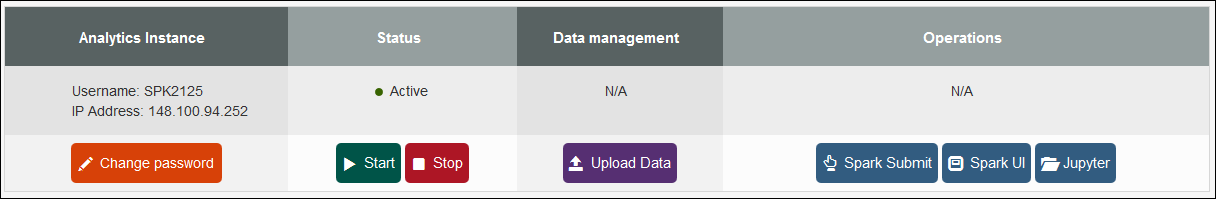

Confirm your instance is Active.

- If it is ‘Stopped’, click ‘Start’ to start it

-

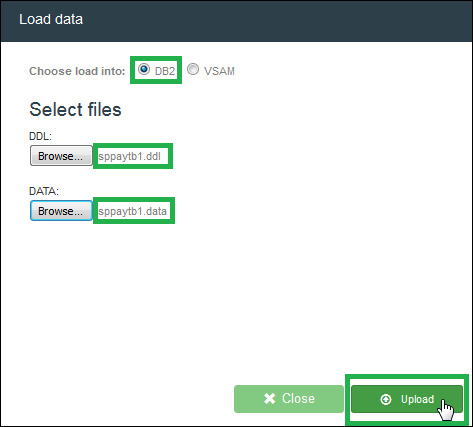

(Optional) The DB2 data for this exercise has already been loaded for you, no further action is required. The DDL and DB2 data file are provided in the zAnalytics Github repository for your reference.

- DB2 data file: sppaytb1.data

- DB2 DDL: sppaytb1.ddl

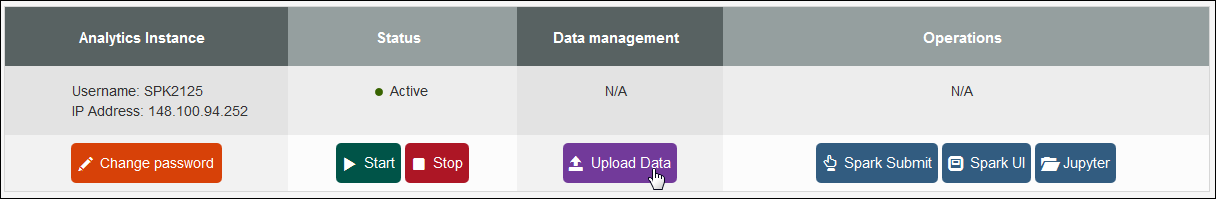

Follow these steps if you wish to upload your own DB2 data.

1. Click **‘Upload Data’**This process could take up to five minutes. You will see the status change from ‘Transferring’ to ‘Loading’ to ‘Upload Success’.

-

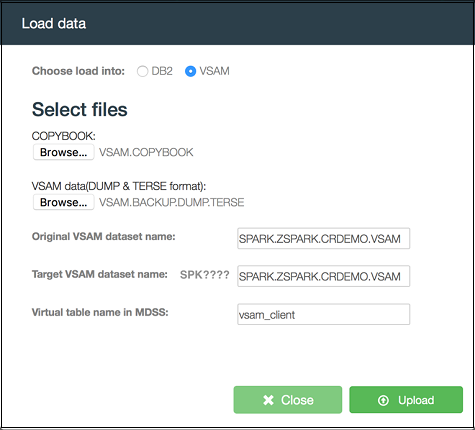

(Optional) The VSAM data for this exercise has already been loaded for you, no further action is required. The VSAM copybook and VSAM data file are provided in the zAnalytics Github repository for your reference.

- VSAM data file: VSAM.BACKUP.DUMP.TERSE

- VSAM copybook: VSAM.COPYBOOK

Follow these steps if you wish to upload the example VSAM data.

- Click ‘Upload Data’.

- Select 'VSAM'.

- Select the VSAM copybook.

- Select the VSAM data file.

- Enter the original VSAM data file name as shown in the figure below.

- Enter the target VSAM data file name as shown in the figure below.

- Enter the virtual table name for your target VSAM data file as shown in the figure below.

- Click ‘Upload’.

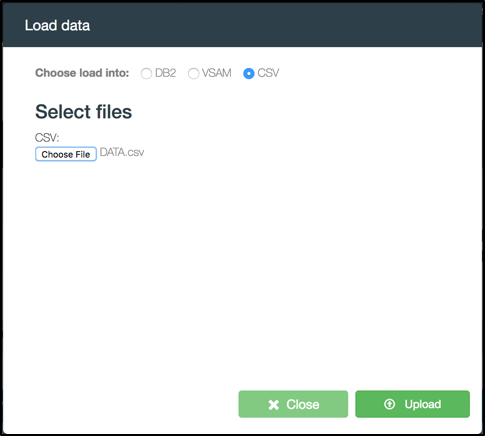

(Optional) You may upload your own Comma Separated Values (CSV) file, which will be stored in your analytics instance’s file system.

Follow these steps if you wish to upload a CSV file.

This process could take a few minutes or longer, depending on the size of the file. You will see the status change from 'Transferring' to 'Loading' to 'Upload Success'.

You have a file size limit of 200MB. Please note: when uploading files larger than 100MB, the upload window indicating that the upload is in progress will disappear, but the upload continues in the back end. After about 10 minutes, use the Python snippet `import glob; print(glob.glob("*.csv"))` to check the files uploaded to your z/OS instance.
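The two size thresholds mentioned here (100MB and 200MB) can be checked on your workstation before you start an upload. The sketch below is illustrative only: the function names are hypothetical, and it assumes that 1MB is counted as 1,048,576 bytes, which this guide does not specify. Note that the introduction recommends staying at or below 100MB.

```python
import os

MB = 1024 * 1024                 # assumption: 1MB = 1,048,576 bytes
BACKGROUND_THRESHOLD = 100 * MB  # above this, the progress window disappears
HARD_LIMIT = 200 * MB            # stated file size limit


def classify_upload(size_bytes: int) -> str:
    """Classify a file size against the limits described in this guide."""
    if size_bytes > HARD_LIMIT:
        return "too large"       # exceeds the 200MB limit
    if size_bytes > BACKGROUND_THRESHOLD:
        return "background"      # upload continues in the back end
    return "ok"                  # within the recommended 100MB


def classify_file(path: str) -> str:
    """Classify a local file by its size on disk."""
    return classify_upload(os.path.getsize(path))
```

For example, `classify_file("my_data.csv")` (a hypothetical file name) returns `"background"` for a 150MB file, which tells you to wait and then list the uploaded files rather than expect a progress window.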

The sample Scala program will access DB2 and VSAM data, perform transformations on the data, join these two tables in a Spark dataframe, and store the result back to DB2.

-

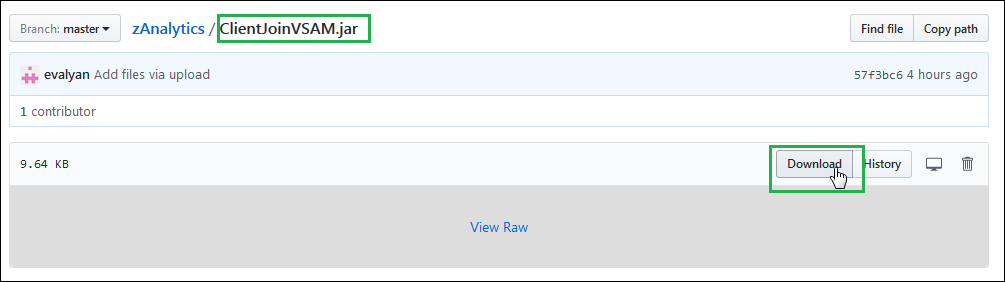

Download the prepared Scala program from the zAnalytics Github repository to your local workstation.

- Click the ClientJoinVSAM.jar file.

- Click Download.

-

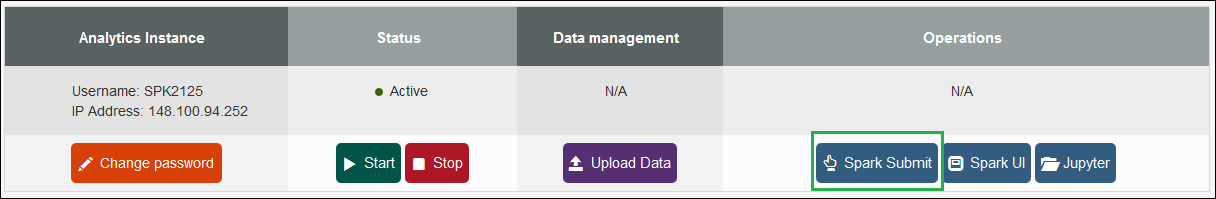

Submit the downloaded Scala program to analyze the data.

-

Click ‘Spark Submit’

-

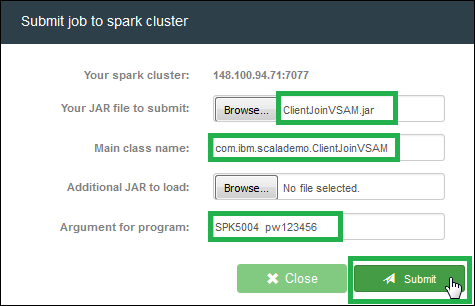

Select the ‘ClientJoinVSAM.jar’ file you just downloaded

-

Specify Main class name ‘com.ibm.scalademo.ClientJoinVSAM’

-

Enter the arguments: <Your 'Analytics Instance Username'> <Your 'Analytics Instance Password'> ( Please note this is NOT the username and password you used to login to the IBM Z Community Cloud! )

-

Click ‘Submit’

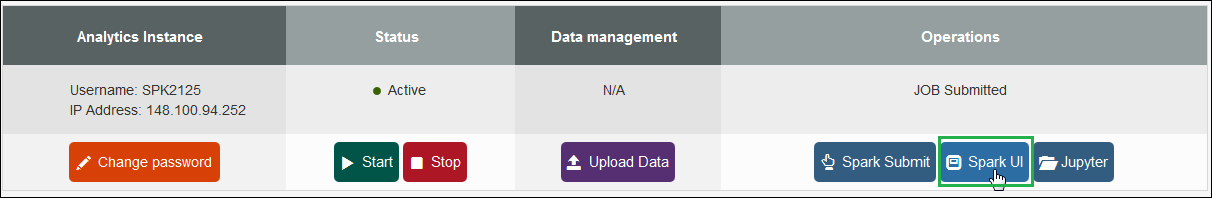

“JOB Submitted” will appear in the dashboard when the program is complete.

-

-

Launch your individual Spark worker output GUI to view the job you just submitted.

-

Click ‘Spark UI’

-

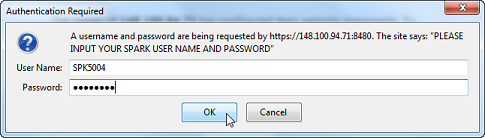

Authenticate with your 'Analytics Instance Username' and 'Analytics Instance Password' ( Please note this is NOT the username and password you used to login to the IBM Z Community Cloud! )

-

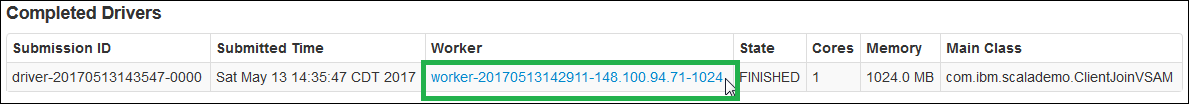

Click the ‘Worker ID’ for your program in the ‘Completed Drivers’ section

-

Authenticate with your 'Analytics Instance Username' and 'Analytics Instance Password' ( Please note this is NOT the username and password you used to login to the IBM Z Community Cloud! )

-

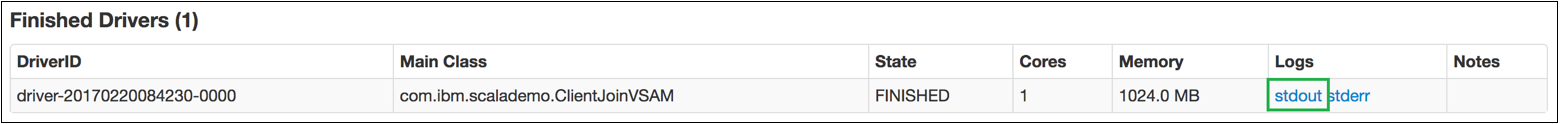

Click on ‘stdout’ for your program in the ‘Finished Drivers’ section to view your results

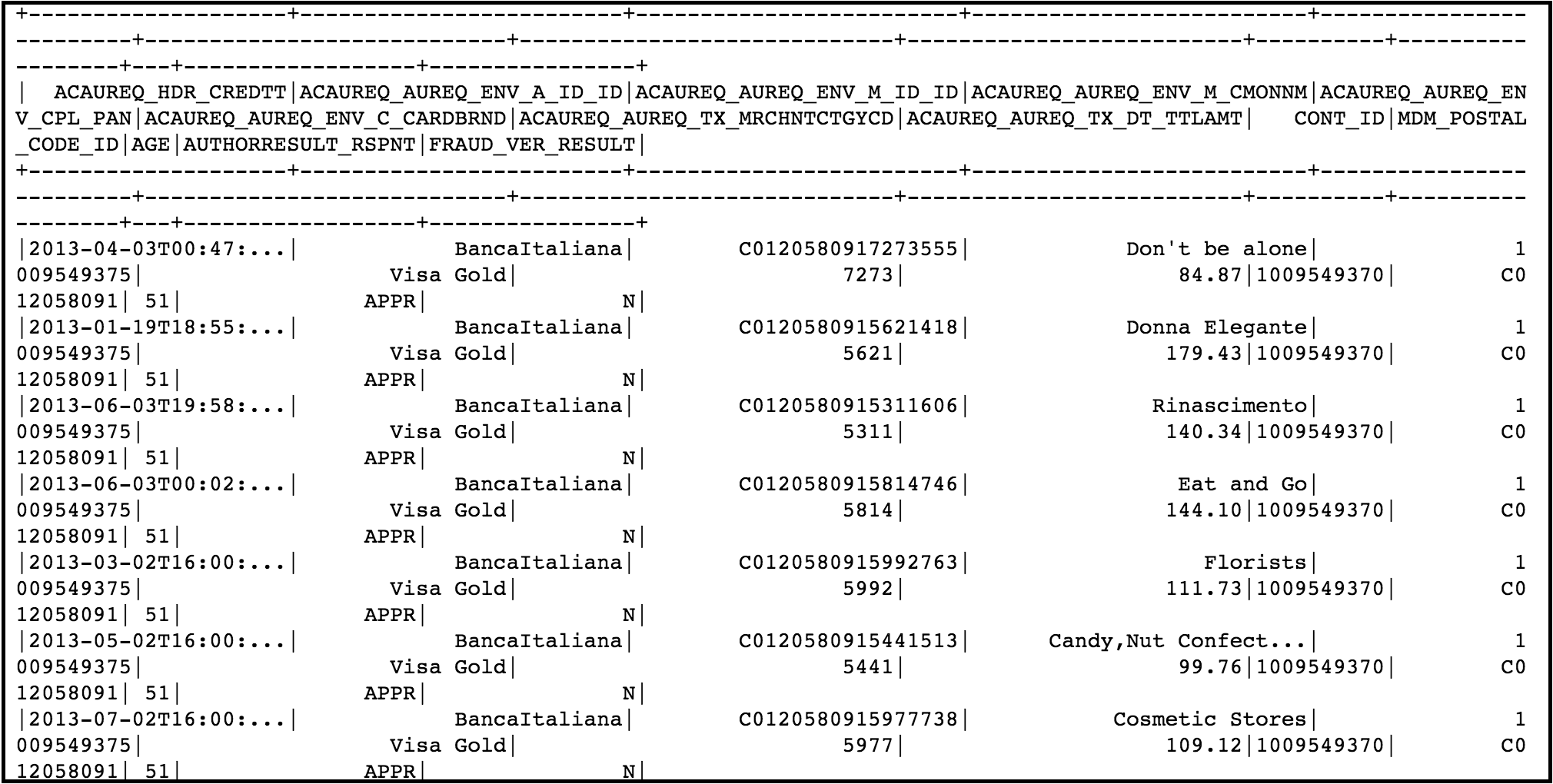

Your results will show:

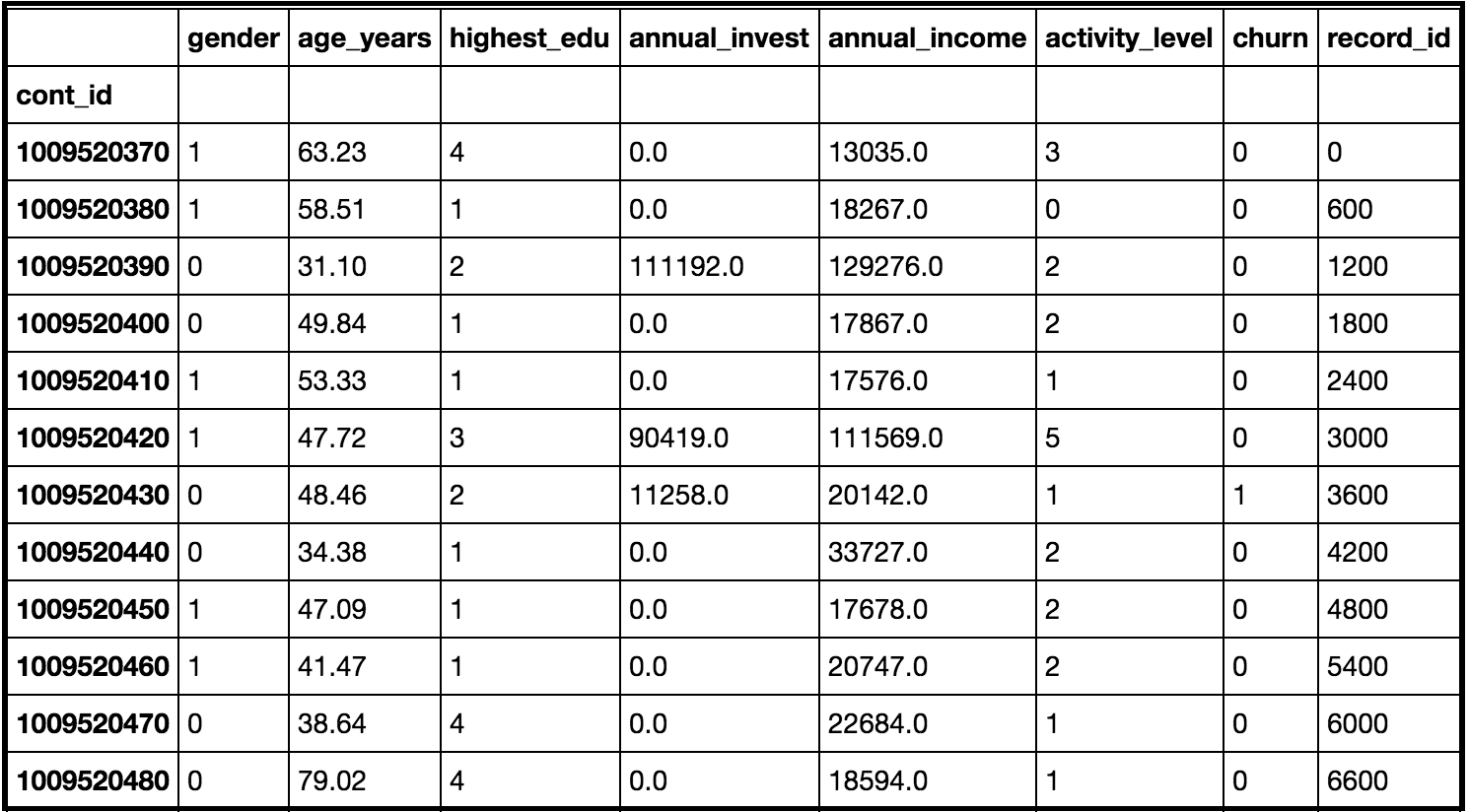

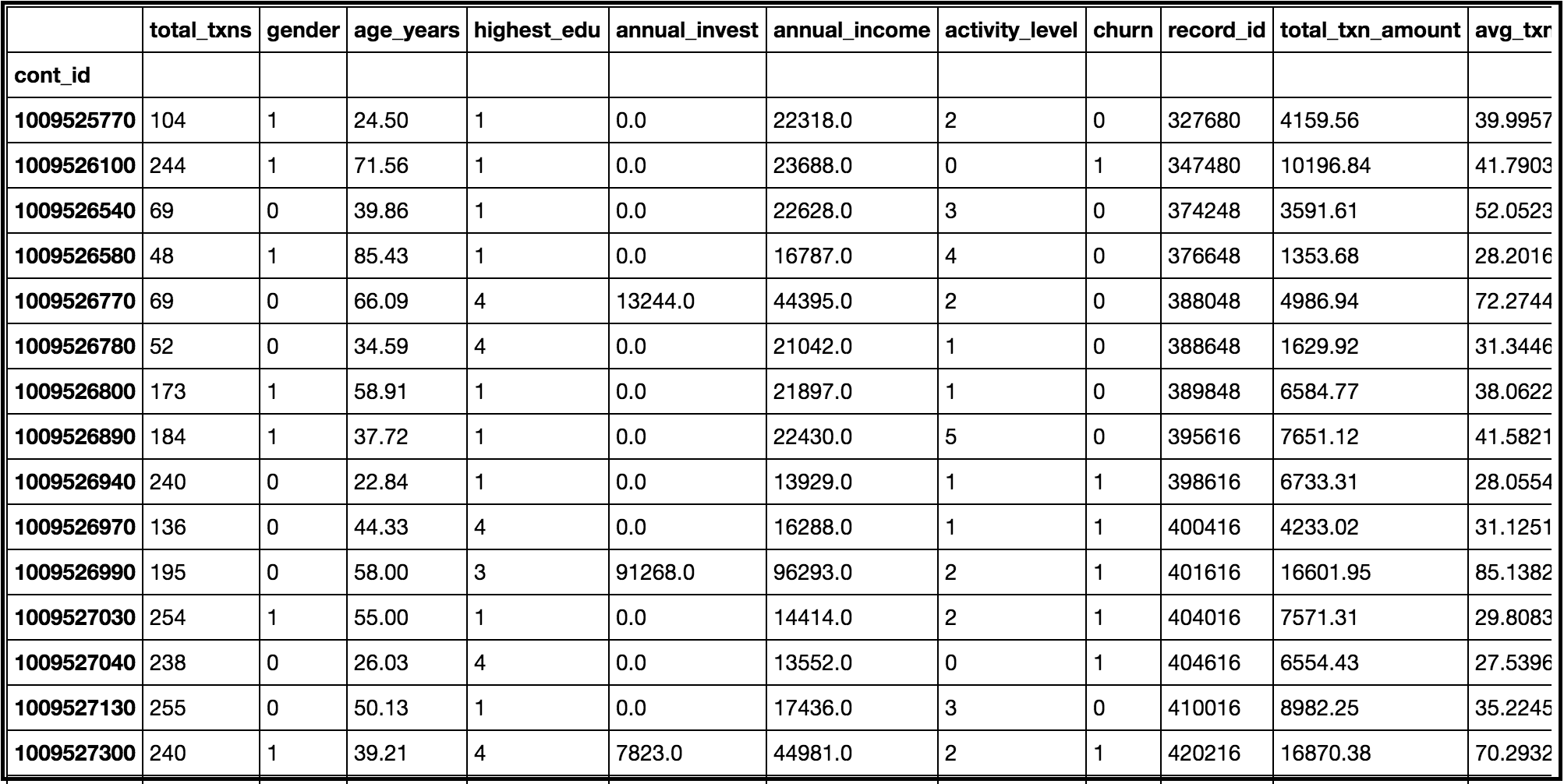

- the top 20 rows of the VSAM data (customer information) in the first table,

- the top 20 rows of the DB2 data (transaction data) in the second table, and

- the top 20 rows of the result ‘client_join’ table in the third table.


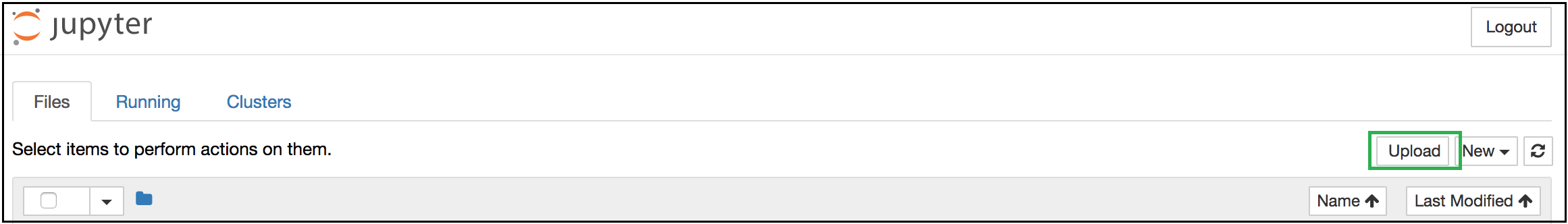

In this section, you will use the Jupyter Notebook tool that is installed in the dashboard. This tool allows you to write and submit Python code, and view the output within a web GUI.

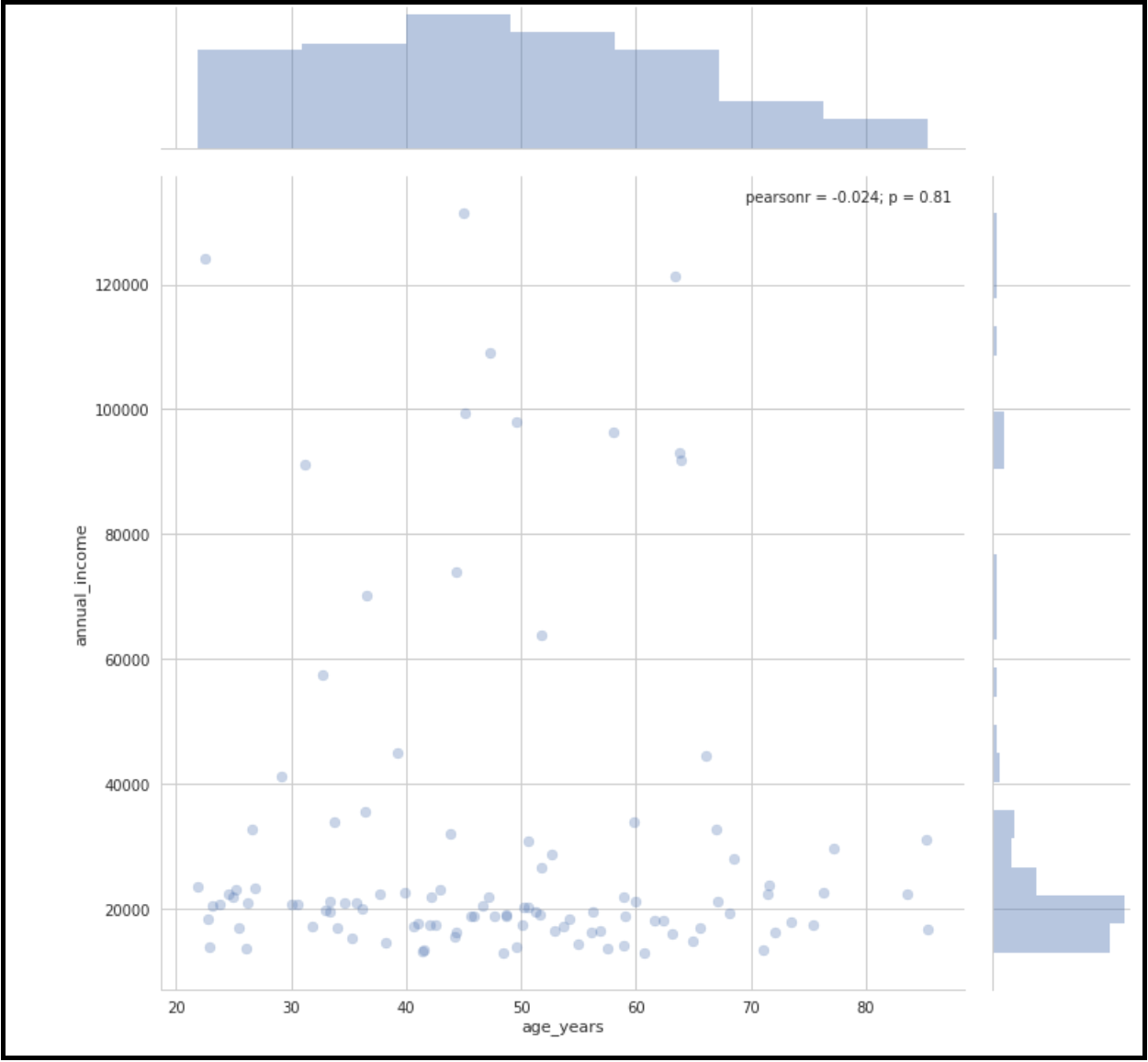

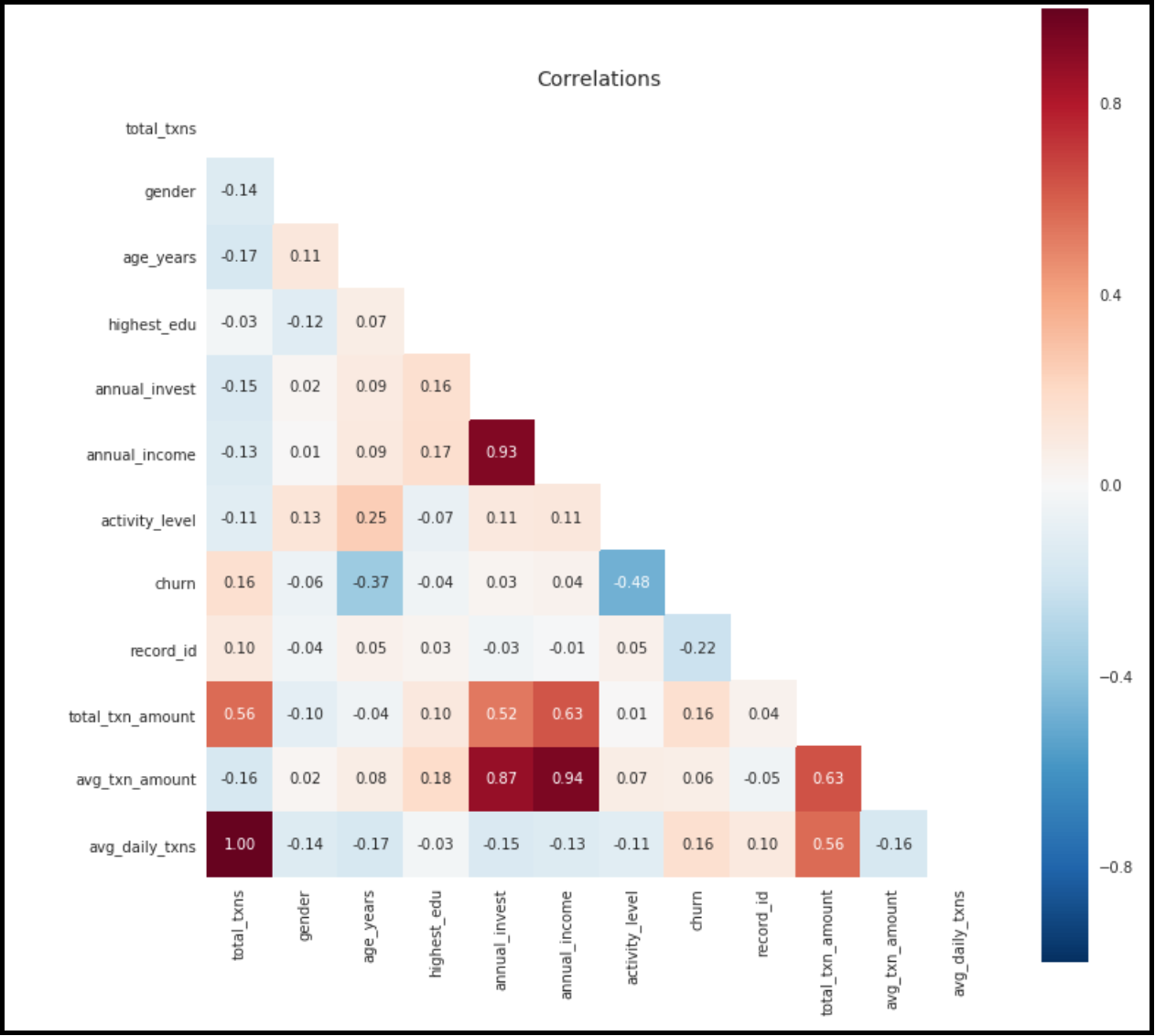

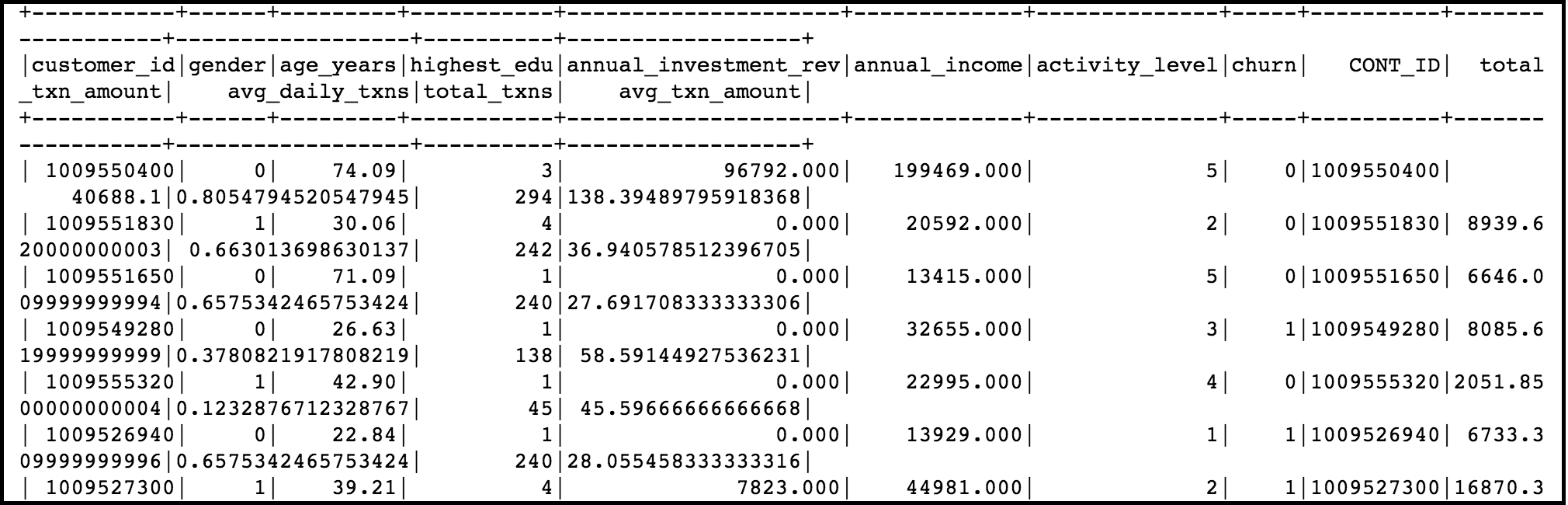

The prepared Python program will access DB2 and VSAM data using the dsdbc driver, perform transformations on the data, and join the two tables in a Pandas DataFrame using Python APIs. It will also perform a random forest regression analysis and plot several charts.

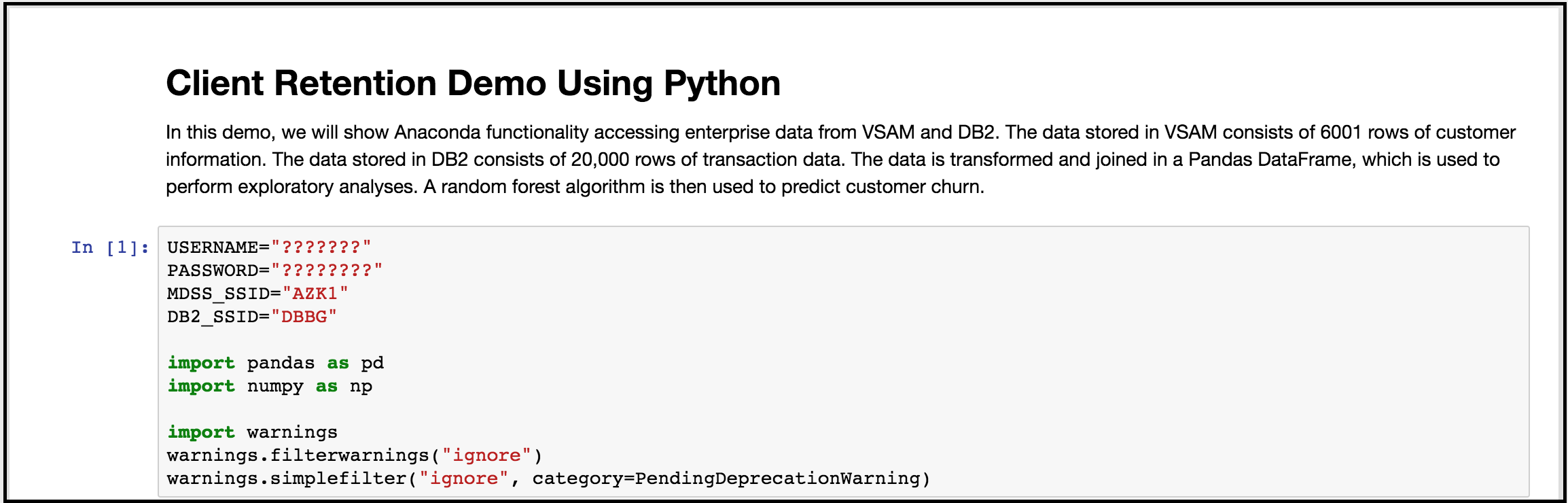

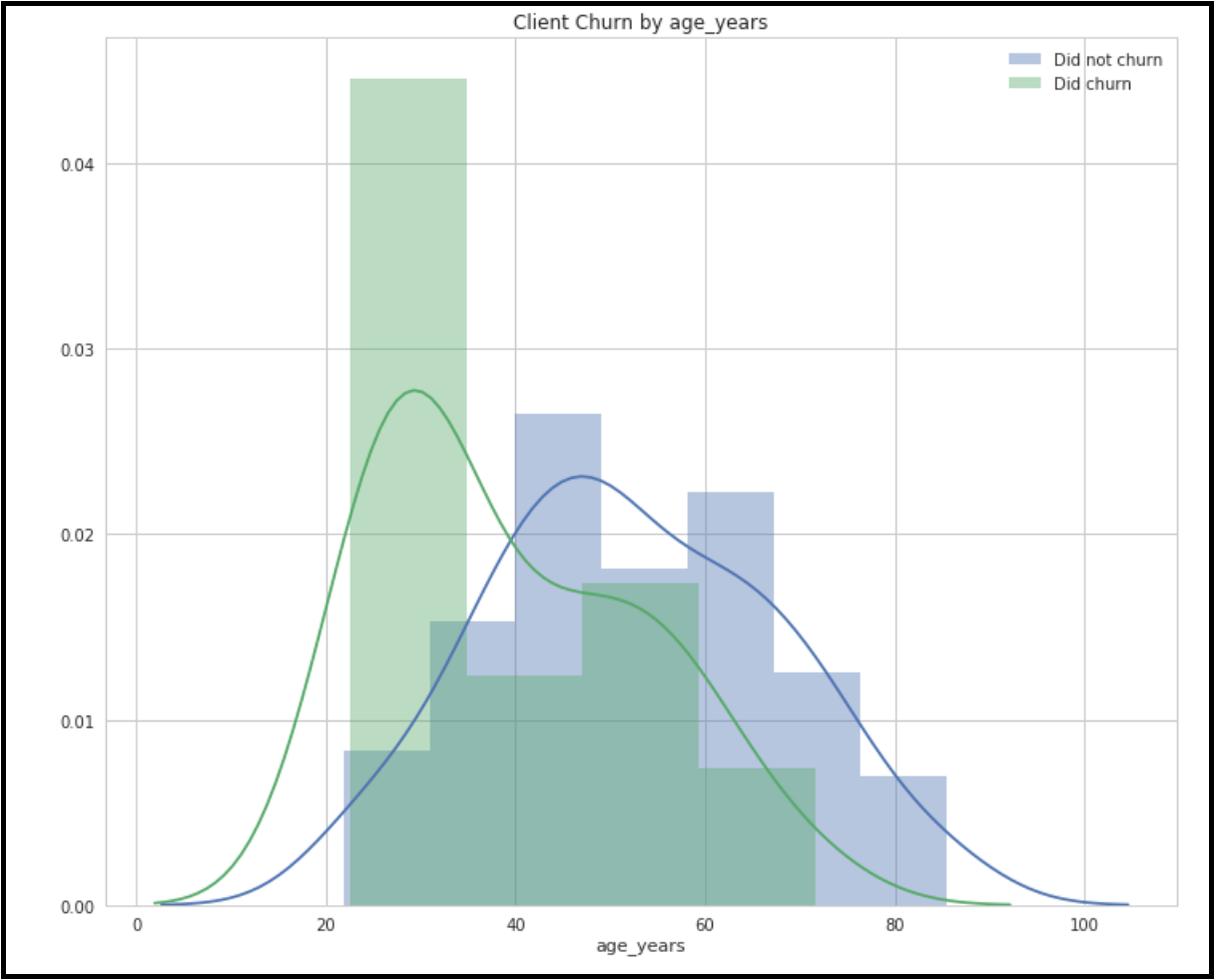

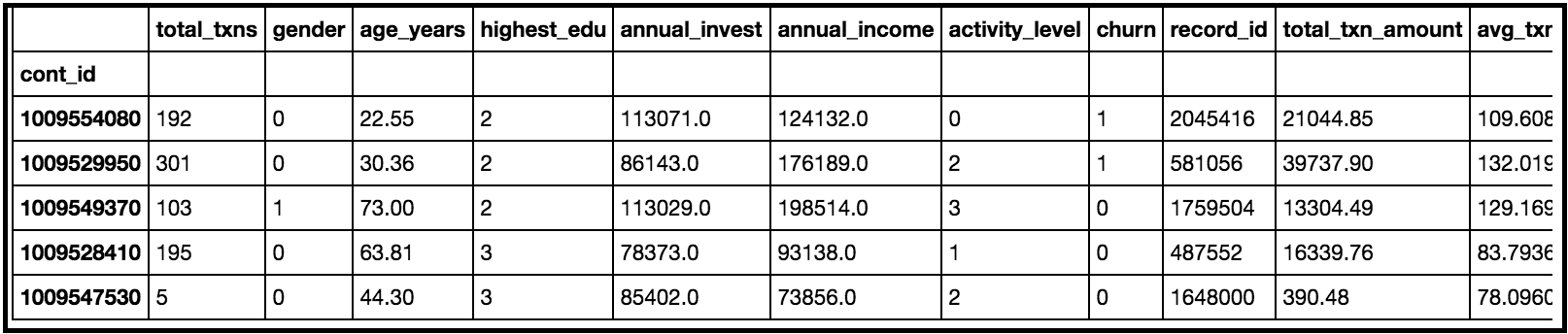

The data stored in VSAM consists of 6,001 rows of customer information. The data stored in DB2 consists of 20,000 rows of transaction data. The data is transformed and joined in a Pandas DataFrame, which is used to perform exploratory analyses. A random forest algorithm is then used to predict customer churn.
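To make the transform-and-join step concrete, here is a minimal sketch using Pandas with a few rows of made-up data. It is not the code in the prepared notebook: the column names (`cust_id`, `age`, `churn`, `amount`) and all values are hypothetical stand-ins for the VSAM customer information and the DB2 transaction data.

```python
import pandas as pd

# Hypothetical stand-in for the VSAM customer information.
customers = pd.DataFrame(
    {"cust_id": [1, 2, 3], "age": [34, 51, 27], "churn": [0, 1, 0]}
)

# Hypothetical stand-in for the DB2 transaction data.
transactions = pd.DataFrame(
    {
        "cust_id": [1, 1, 2, 3, 3, 3],
        "amount": [20.0, 35.5, 12.0, 8.0, 14.5, 60.0],
    }
)

# Transform: summarize the transactions to one row per customer.
totals = transactions.groupby("cust_id", as_index=False).agg(
    total_amount=("amount", "sum"),
    n_txn=("amount", "count"),
)

# Join: combine customer attributes with their transaction summary.
client_join = customers.merge(totals, on="cust_id", how="inner")
print(client_join)
```

Aggregating before the join keeps one row per customer, which is the shape a churn model typically expects; whether the notebook aggregates in the same way is an assumption of this sketch.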

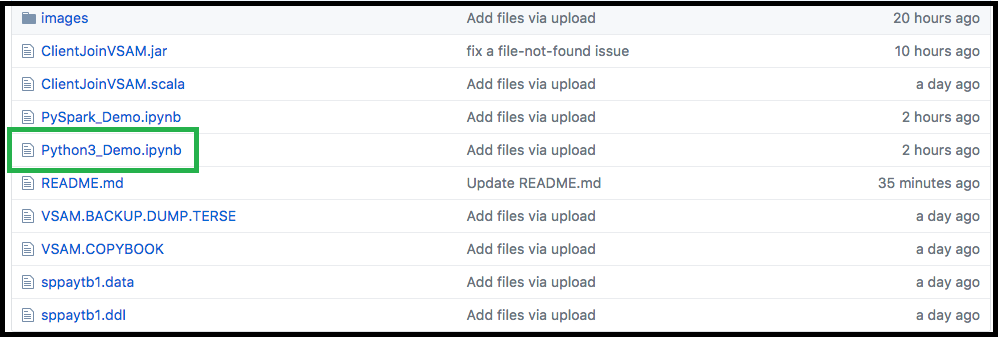

-

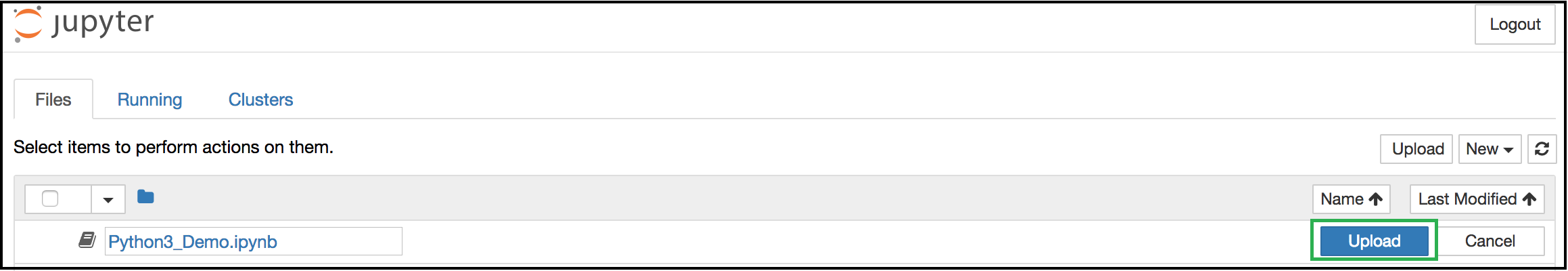

Download the prepared Python example from the zAnalytics Github repository to your local workstation.

- Click the Python3_Demo.ipynb file

- Click 'Raw'

- From your browser's 'File' menu, select 'Save Page As...', keep the file name 'Python3_Demo.ipynb', and click 'Save'

- Launch the Jupyter Notebook service from your dashboard in your browser.

- Upload the Jupyter Notebook from your local workstation.

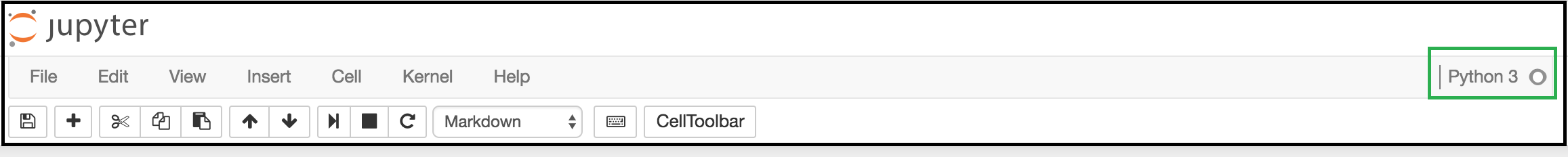

The Jupyter Notebook will connect to your Analytics Instance automatically and will be in the ready state when the Python 3 indicator in the top right-hand corner of the screen is clear.

The environment is divided into input cells labeled with ‘In [#]:’.

5. Execute the Python code in the first cell.

- Click on the first ‘In [ ]:’

The left border will change to blue when a cell is in command mode.

- Click in the cell to edit

The left border will change to green when a cell is in edit mode.

- Change the value of USERNAME to your ‘Analytics Instance Username’

- Change the value of PASSWORD to your ‘Analytics Instance Password’

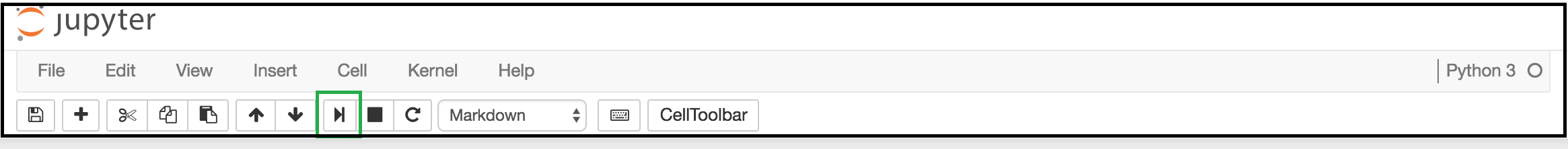

- Click the run cell button as shown below:

Jupyter Notebook will check the Python code for syntax errors and run the code for you. The Jupyter Notebook connection to your Spark instance is in the busy state when the Python 3 indicator in the top right-hand corner of the screen is grey.

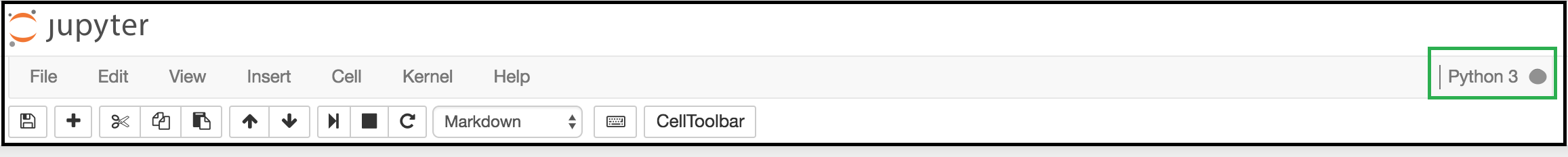

When this indicator turns clear, the cell run has completed and returned to the ready state.

If no error messages appear, the cell run was successful.
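If you prefer not to leave your credentials typed into a notebook cell, one option is to read them from environment variables and fall back to a prompt. This is only a sketch of that idea and is not part of the prepared notebook: the variable names `IZODA_USERNAME` and `IZODA_PASSWORD` and the helper `read_credentials` are hypothetical.

```python
import os
from getpass import getpass


def read_credentials(env=os.environ):
    """Return (username, password) from the environment, prompting if unset.

    IZODA_USERNAME and IZODA_PASSWORD are hypothetical variable names.
    """
    username = env.get("IZODA_USERNAME") or input("Analytics Instance Username: ")
    password = env.get("IZODA_PASSWORD") or getpass("Analytics Instance Password: ")
    return username, password


# Usage in the first cell, instead of editing the literal values:
# USERNAME, PASSWORD = read_credentials()
```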

-

Click and run the second cell ‘In [ ]:’.

If no error messages appear, the cell run was successful.

7.Click and run the third cell ‘In [ ]:’.

If no error messages appear, the cell run was successful.

8.Click and run the fourth cell ‘In [ ]:’.

If no error messages appear, the cell run was successful.

-

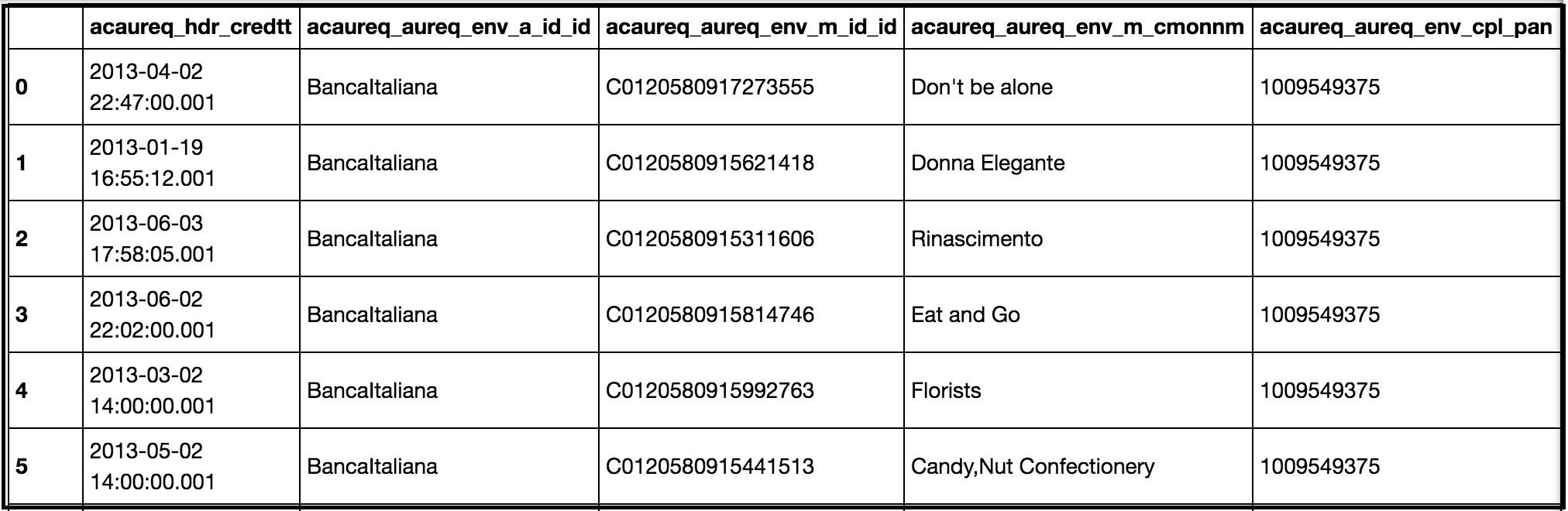

Click and run the fifth cell ‘In [ ]:’.

The output should be similar to the following:

10. Click and run the sixth cell ‘In [ ]:’.

The output should be similar to the following:

11. Click and run the seventh cell ‘In [ ]:’.

If no error messages appear, the cell run was successful.

12. Click and run the eighth cell ‘In [ ]:’.

If no error messages appear, the cell run was successful.

13. Click and run the ninth cell ‘In [ ]:’.

If no error messages appear, the cell run was successful.

14. Click and run the tenth cell ‘In [ ]:’.

If no error messages appear, the cell run was successful.

15. Click and run the eleventh cell ‘In [ ]:’.

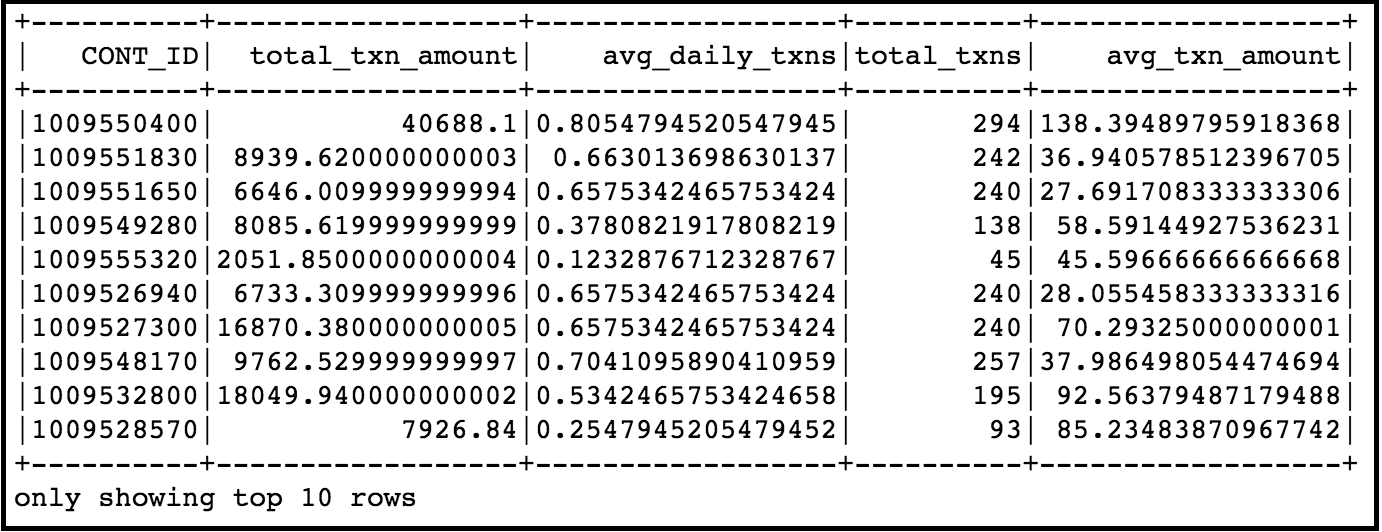

The output should be similar to the following:

16. Click and run the twelfth cell ‘In [ ]:’.

If no error messages appear, the cell run was successful.

17. Click and run the thirteenth cell ‘In [ ]:’.

If no error messages appear, the cell run was successful.

18. Click and run the fourteenth cell ‘In [ ]:’.

If no error messages appear, the cell run was successful.

19. Click and run the fifteenth cell ‘In [ ]:’.

The output should be similar to the following:

20. Click and run the sixteenth cell ‘In [ ]:’.

The output should be similar to the following:

21. Click and run the seventeenth cell ‘In [ ]:’.

The output should be similar to the following:

22. Click and run the eighteenth cell ‘In [ ]:’.

The output should be similar to the following:

23. Click and run the nineteenth cell ‘In [ ]:’.

The output should be similar to the following:

24. Click and run the twentieth cell ‘In [ ]:’.

If no error messages appear, the cell run was successful.

25. Click and run the twenty-first cell ‘In [ ]:’.

If no error messages appear, the cell run was successful.

26. Click and run the twenty-second cell ‘In [ ]:’.

If no error messages appear, the cell run was successful.

27. Click and run the twenty-third cell ‘In [ ]:’.

If no error messages appear, the cell run was successful.

28. Click and run the twenty-fourth cell ‘In [ ]:’.

The output should be similar to the following:

29. Click and run the twenty-fifth cell ‘In [ ]:’.

If no error messages appear, the cell run was successful.

30. Click and run the twenty-sixth cell ‘In [ ]:’.

The output should be similar to the following:

In this section, you will use the Jupyter Notebook tool that is installed in the dashboard. This tool allows you to write and submit Python code, and view the output within a web GUI.

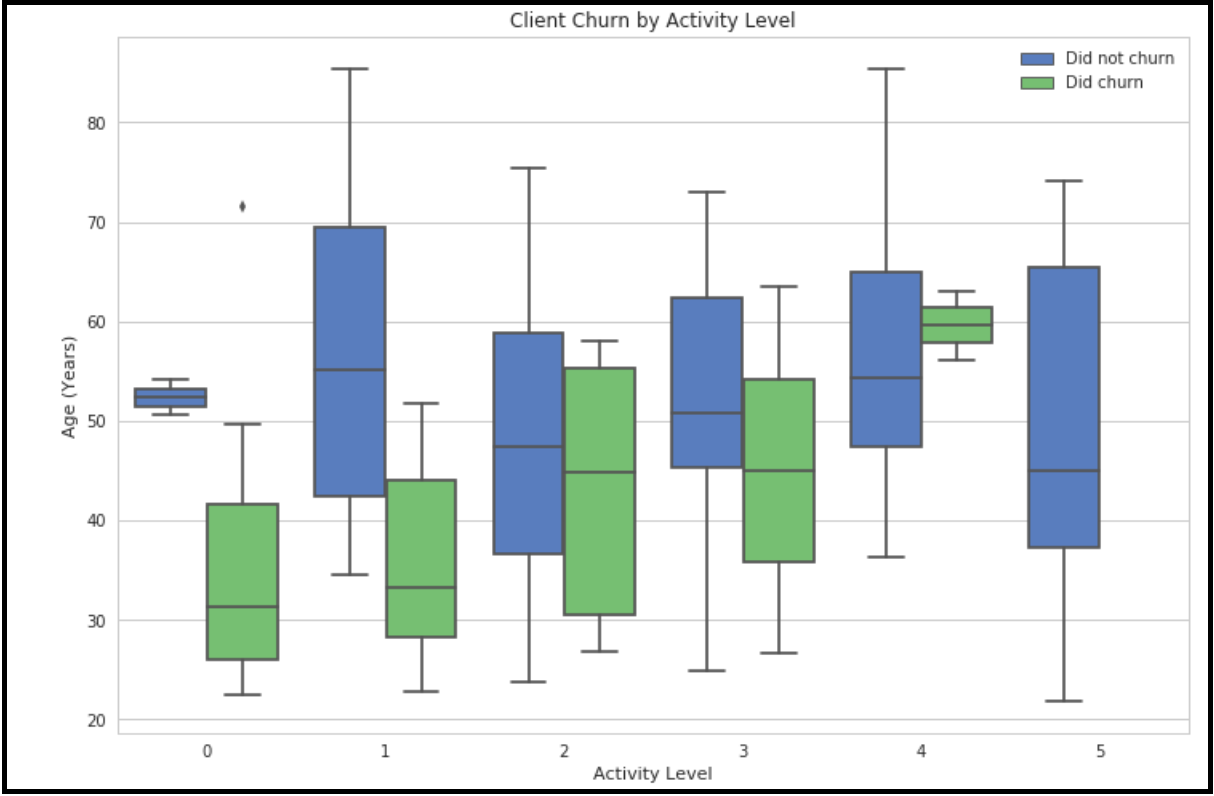

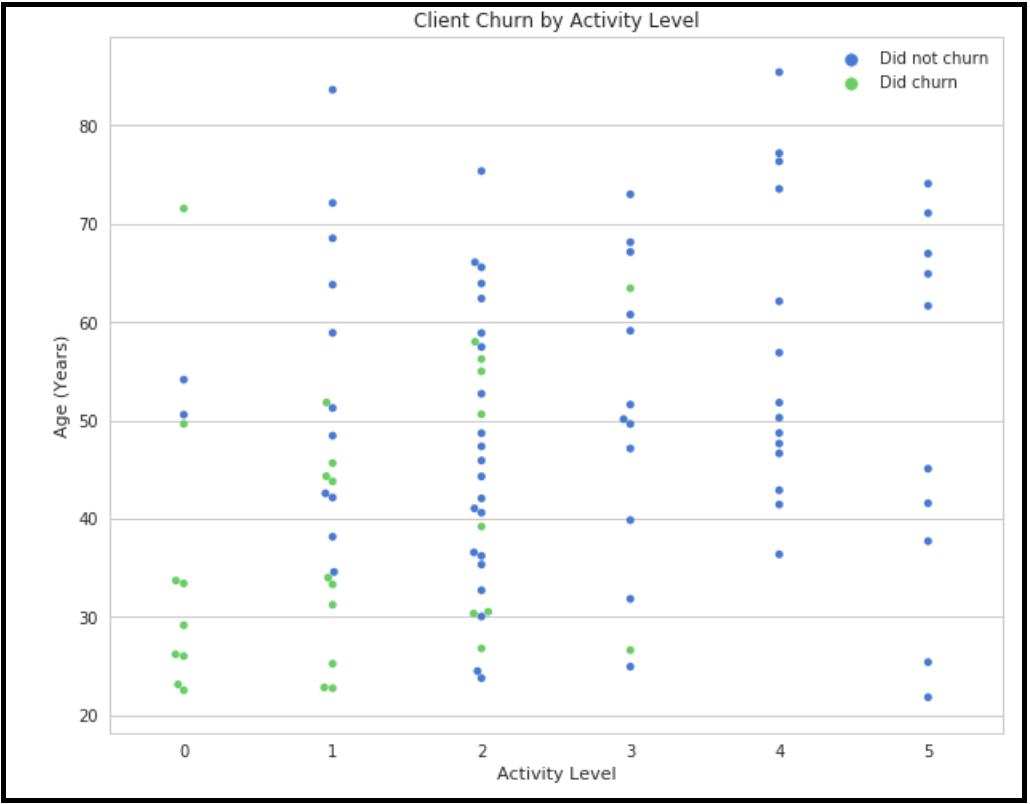

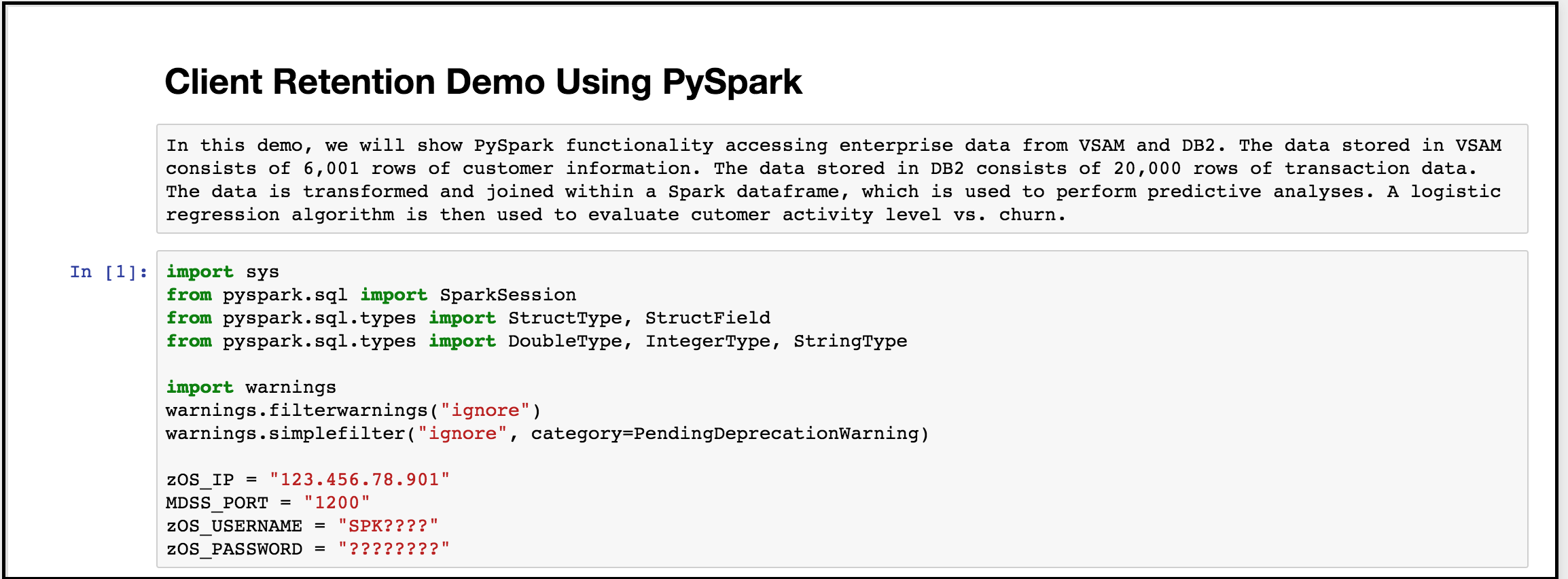

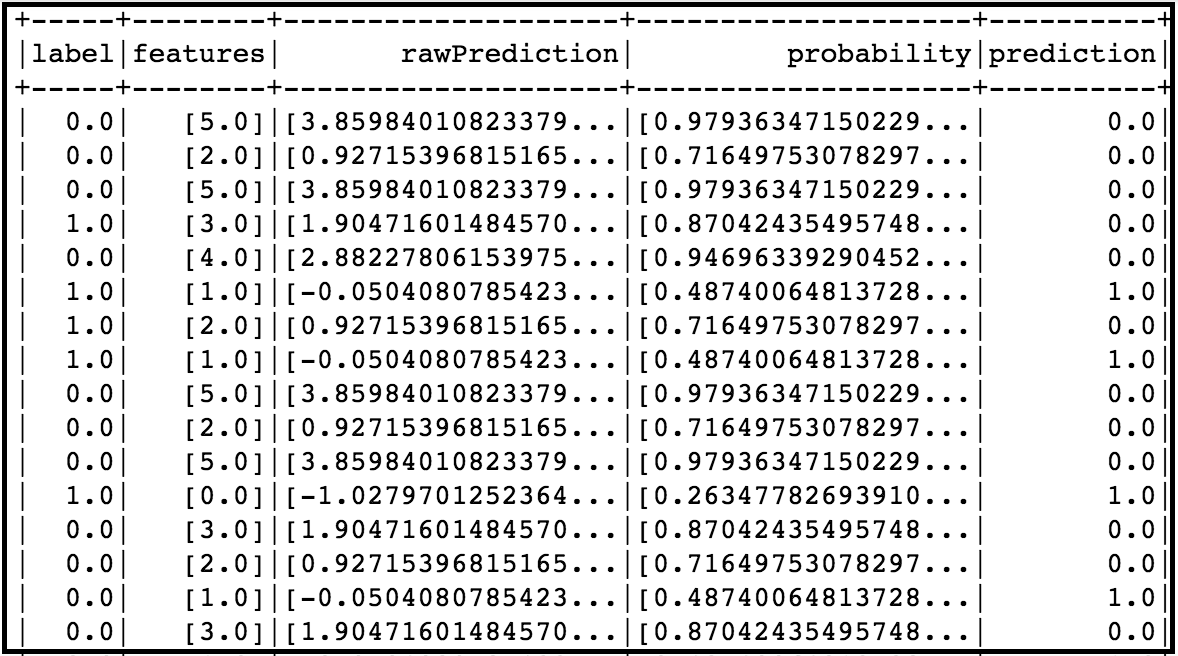

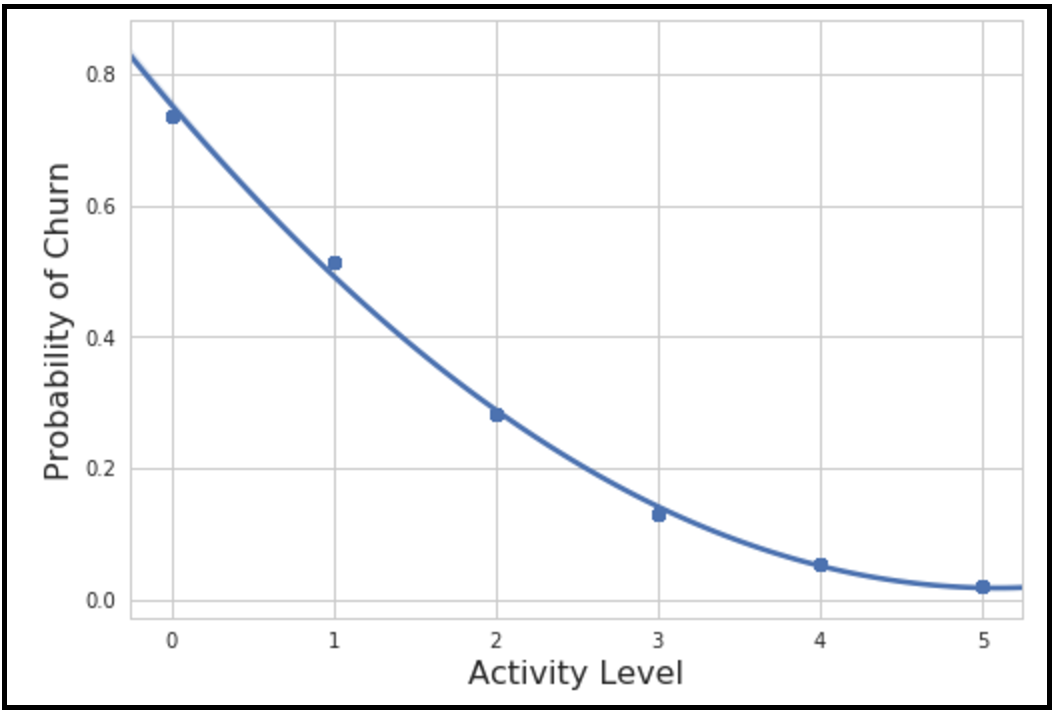

The prepared PySpark program will access DB2 and VSAM data using the jdbc driver, perform transformations on the data, and join the tables in a Spark dataframe using PySpark APIs. It will also perform a logistic regression analysis and create a plot using matplotlib.

The data stored in VSAM consists of 6,001 rows of customer information. The data stored in DB2 consists of 20,000 rows of transaction data. The data is transformed and joined within a Spark dataframe, which is used to perform predictive analyses. A logistic regression algorithm is then used to evaluate customer activity level vs. churn.
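To show what a logistic regression of churn on activity level does, here is a small sketch in plain Python that fits a one-feature model by gradient descent. It deliberately does not use the PySpark APIs that the prepared notebook relies on, and the activity levels and churn labels below are invented for illustration.

```python
import math


def sigmoid(z: float) -> float:
    """Map a real-valued score to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.1, epochs=5000):
    """Fit p(churn) = sigmoid(w * x + b) by batch gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors for the current parameters.
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical toy data: activity level (1 = low, 8 = high) and churn flag.
activity = [1, 2, 3, 4, 5, 6, 7, 8]
churned = [1, 1, 1, 0, 1, 0, 0, 0]

w, b = fit_logistic(activity, churned)


def churn_probability(level: float) -> float:
    """Estimated probability of churn at a given activity level."""
    return sigmoid(w * level + b)


print("weight:", w, "bias:", b)
print("P(churn | activity=1):", churn_probability(1))
print("P(churn | activity=8):", churn_probability(8))
```

In this toy data, less active customers churn more often, so the fitted weight is negative and the estimated churn probability falls as activity rises.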

-

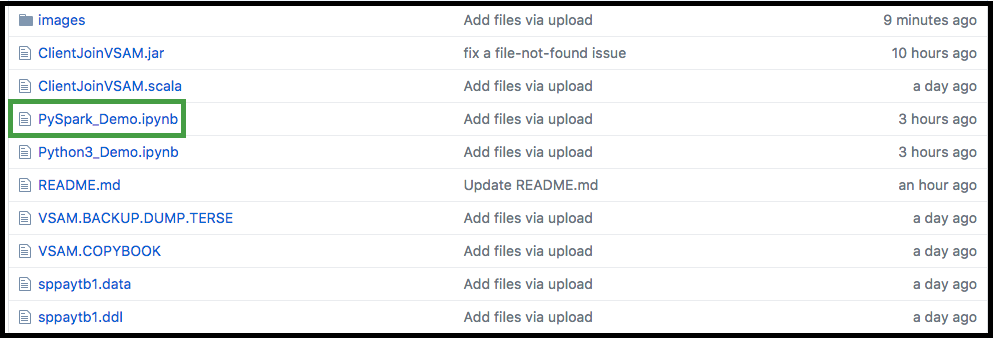

Download the prepared Python example from the zAnalytics Github repository to your local workstation.

- Click the PySpark_Demo.ipynb file

- Click 'Raw'

- From your browser's 'File' menu, select 'Save Page As...', keep the file name 'PySpark_Demo.ipynb', and click 'Save'

- Launch the Jupyter Notebook service from your dashboard in your browser.

- Upload the Jupyter Notebook from your local workstation.

The Jupyter Notebook will connect to your Analytics Instance automatically and will be in the ready state when the Python 3 indicator in the top right-hand corner of the screen is clear.

The environment is divided into input cells labeled with ‘In [#]:’.

5. Execute the Python code in the first cell.

- Click on the first ‘In [ ]:’

The left border will change to blue when a cell is in command mode.

- Click in the cell to edit

The left border will change to green when a cell is in edit mode.

- Change the value of zOS_IP to your ‘Analytics Instance IP Address’

- Change the value of zOS_USERNAME to your ‘Analytics Instance Username’

- Change the value of zOS_PASSWORD to your ‘Analytics Instance Password’

- Click the run cell button as shown below:

Jupyter Notebook will check the Python code for syntax errors and run the code for you. The Jupyter Notebook connection to your Spark instance is in the busy state when the Python 3 indicator in the top right-hand corner of the screen is grey.

When this indicator turns clear, the cell run has completed and returned to the ready state.

If no error messages appear, the cell run was successful.

-

Click and run the second cell ‘In [ ]:’.

If no error messages appear, the cell run was successful.

7.Click and run the third cell ‘In [ ]:’.

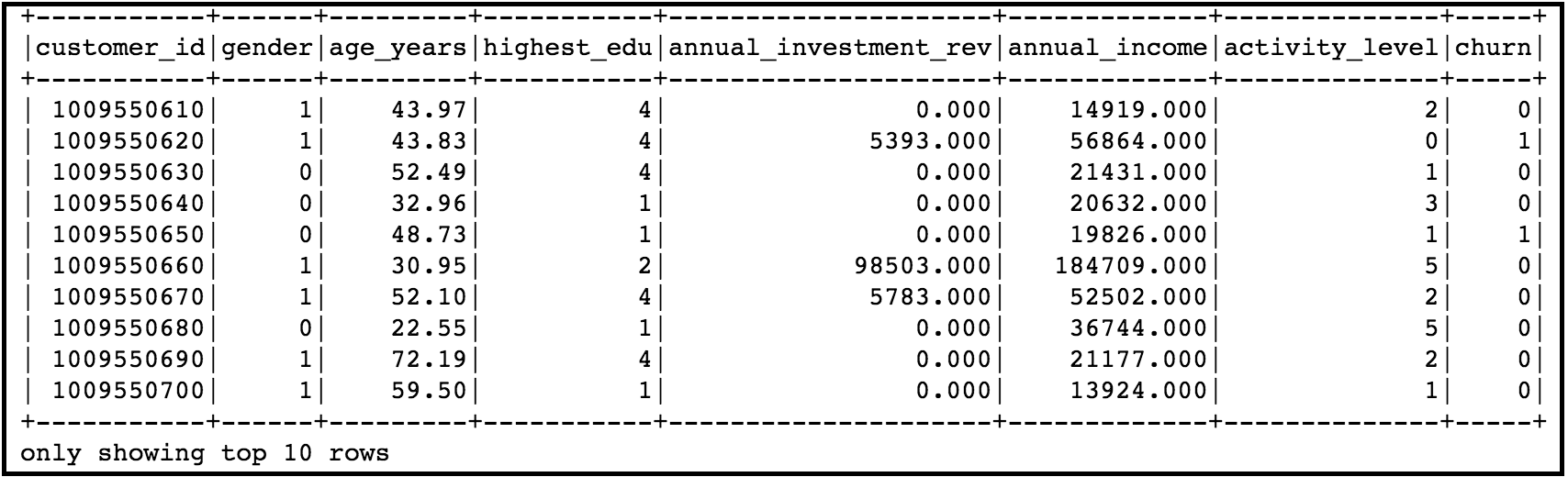

The output should be similar to the following:

8.Click and run the fouth cell ‘In [ ]:’.

The output should be similar to the following:

9.Click and run the fifth cell ‘In [ ]:’.

The output should be similar to the following:

-

Click and run the sixth cell ‘In [ ]:’.

The output should be similar to the following:

-

Click and run the seventh cell ‘In [ ]:’.

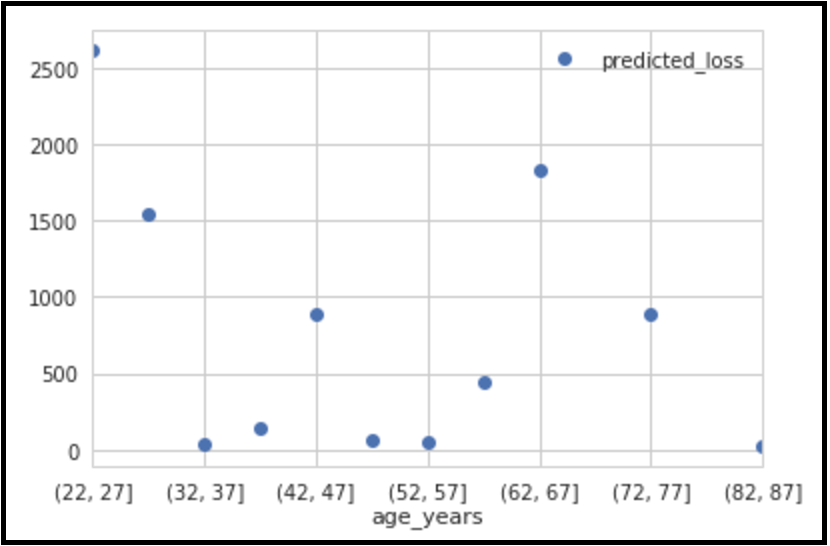

The output should be similar to the following:

-

Click and run the eighth cell ‘In [ ]:’.

The output should be similar to the following:

-

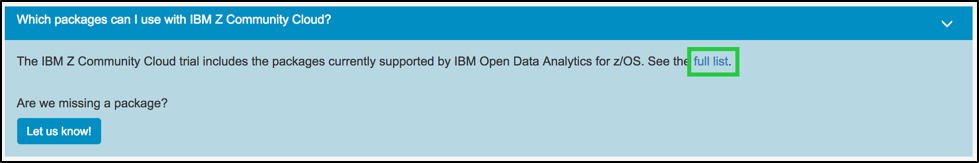

You may view a list of packages that are pre-installed in your instance and available to use in your analytics applications.

From the dashboard,

- Click ‘Which packages can I use with IBM Z Community Cloud?’

- Click ‘full list’

The link will open a GitHub page with a list of installed packages and version numbers.

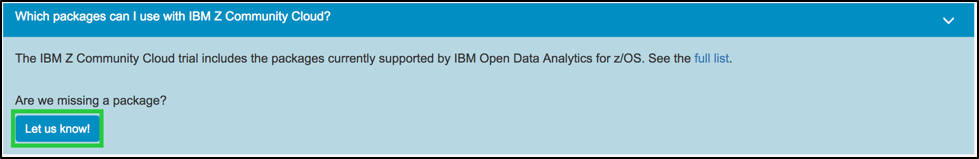

If you don’t see a package listed, you may submit a request for review consideration.

- Click ‘Let us know!’

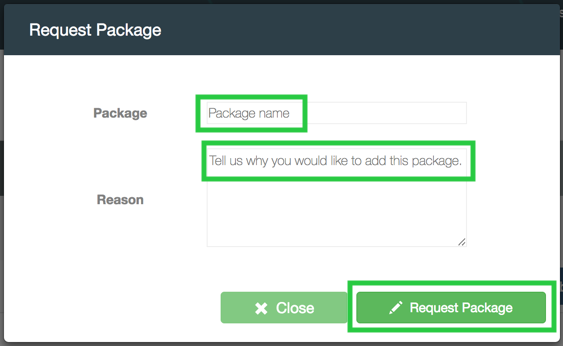

- Enter the package name and version number you would like to request.

- Enter the reason you are requesting the package.

- Click ‘Request Package’.

- Click ‘OK’