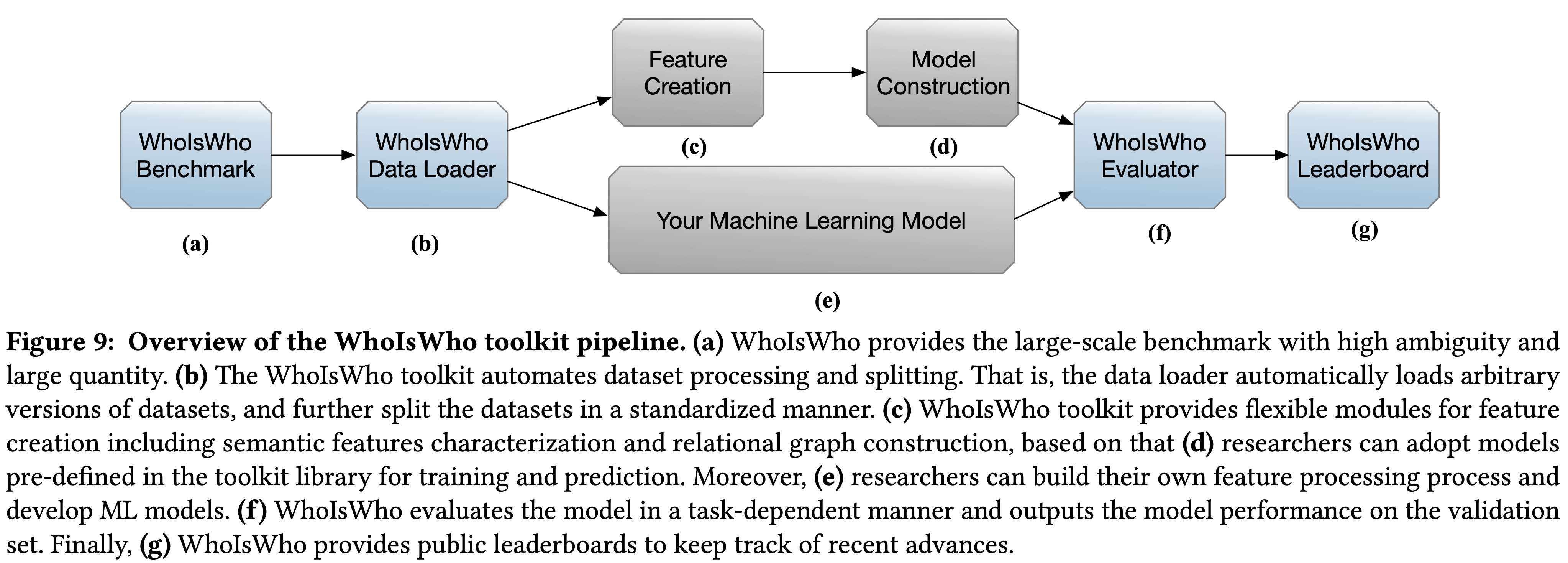

TL;DR: The WhoIsWho toolkit automates data loading, feature creation, model construction, and evaluation, so researchers can use it easily and develop new name disambiguation approaches.

The toolkit is fully compatible with PyTorch and its associated deep-learning libraries, such as Hugging Face. It also offers library-agnostic dataset objects that can be used with any other Python deep-learning framework, such as TensorFlow.

    pip install whoiswho

The WhoIsWho toolkit provides lightweight APIs that let researchers build SOTA name disambiguation algorithms in a few lines of code. The abstraction has 4 components:

- WhoIsWho Data Loader: Automating dataset processing and splitting.
- Feature Creation: Providing flexible modules for extracting and creating features.
- Model Construction: Adopting pre-defined models in the toolkit library for training and prediction.
- WhoIsWho Evaluator: Evaluating models in a task-dependent manner and outputting the model performance on the validation set.

Specify the dataset name, the task type, the dataset categories (train, valid, test), and the features to use for name disambiguation. For example:

    python -u demo.py --task rnd --name v3 --type train valid test --feature adhoc oagbert graph

With a pipeline design based on the four components, you can implement name disambiguation algorithms clearly. The prediction results are stored in './whoiswho/training/{task}_result'.
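As a rough illustration of how the flags in the command above could map onto the arguments of a `pipeline(name, task, type_list, feature_list)` function, here is a minimal sketch. The parser, the helper name `parse_cli`, the upper-casing of the task, and the casing used in the result path are all assumptions made for illustration; the actual `demo.py` may handle these differently.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical parser mirroring the flags shown in the example command.
    parser = argparse.ArgumentParser(description="Illustrative front end for a pipeline")
    parser.add_argument("--task", required=True, choices=["rnd", "snd"])
    parser.add_argument("--name", required=True)
    # nargs="+" collects one or more space-separated values into a list.
    parser.add_argument("--type", dest="type_list", nargs="+",
                        default=["train", "valid", "test"])
    parser.add_argument("--feature", dest="feature_list", nargs="+",
                        default=["adhoc"])
    return parser


def parse_cli(argv):
    args = build_parser().parse_args(argv)
    # Assumption: the lower-case CLI value is normalised to the upper-case
    # form that a check such as `task == 'RND'` would expect.
    task = args.task.upper()
    return {
        "name": args.name,
        "task": task,
        "type_list": args.type_list,
        "feature_list": args.feature_list,
        # Assumption: the {task} placeholder is filled with the upper-case name.
        "result_dir": f"./whoiswho/training/{task}_result",
    }


config = parse_cli(
    "--task rnd --name v3 --type train valid test --feature adhoc oagbert graph".split()
)
print(config)
```

The resulting dictionary holds the four values that a pipeline function with this signature would take, plus the directory where predictions would be expected.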

    def pipeline(name: str, task: str, type_list: list, feature_list: list):
        version = {"name": name, "task": task}

        if task == 'RND':
            # Module-1: Data Loading
            download_data(name, task)
            # Partition the training set into unassigned papers and candidate authors
            processdata_RND(version=version)

            # Module-2: Feature Creation
            generate_feature(version,
                             type_list=type_list,
                             feature_list=feature_list)

            # Module-3: Model Construction
            trainer = RNDTrainer(version, simplified=False, graph_data=True)
            cell_model_list = trainer.fit()
            trainer.predict(whole_cell_model_list=cell_model_list, datatype='valid')

            # Module-4: Evaluation
            # Please upload your result to http://whoiswho.biendata.xyz/#/

        if task == 'SND':
            # Module-1: Data Loading
            download_data(name, task)
            processdata_SND(version=version)

            # Module-2: Feature Creation & Module-3: Model Construction
            trainer = SNDTrainer(version)
            trainer.fit(datatype='valid')

            # Module-4: Evaluation
            # Please upload your result to http://whoiswho.biendata.xyz/#/

To enable users to quickly reproduce name disambiguation algorithms, the project provides a small dataset, NA_Demo, based on WhoIsWho v3. Under each author name in the training set, there are more than 20 authors with the same name.

Under the Real-time Name Disambiguation (RND) task, the time costs of NA_Demo and WhoIsWho v3 are as follows:

| Module | Operation | NA_Demo (train+valid) | whoiswho_v3 (train+valid+test) |
|---|---|---|---|
| Feature Creation | Semantic Feature (soft) | 20min | 5h |
| | Semantic Feature (adhoc) | 14min | 1h23min |
| | Relational Feature (ego) | -- | 2h22min |
| Model Construction | RNDTrainer.fit | 10min | 1h |
| | RNDTrainer.predict | 1min | 37min |
| Total Time | | 45min | 8~10h |
| Weighted-F1(%) | | 98.64% | 93.52% |

Under the SND task, the time costs of NA_Demo and WhoIsWho v3 are as follows:

| Module | Operation | NA_Demo (train+valid) | whoiswho_v3 (train+valid+test) |
|---|---|---|---|
| Feature Creation | Semantic Feature | 1min | 7min |
| | Relational Feature | 3min | 2h40min |
| Model Construction | SNDTrainer.fit | 3s | 10s |
| | SNDTrainer.predict | 1s | 20s |
| Total Time | | 4min | 2~3h |
| Pairwise-F1(%) | | 98.30% | 89.22% |
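For readers unfamiliar with the Pairwise-F1 metric reported in the last row, the sketch below shows the commonly used definition for clustering-style disambiguation: precision and recall are computed over pairs of papers placed in the same cluster. The function names and the toy paper IDs are made up for illustration, and the toolkit's own evaluator may differ in details such as how results are averaged across author names.

```python
from itertools import combinations


def same_cluster_pairs(clusters):
    """Return the set of unordered paper pairs that share a cluster."""
    pairs = set()
    for cluster in clusters:
        # Sorting makes each pair canonical, so (a, b) and (b, a) coincide.
        for a, b in combinations(sorted(cluster), 2):
            pairs.add((a, b))
    return pairs


def pairwise_f1(pred_clusters, true_clusters):
    """Compute pairwise precision, recall, and F1 for one author name."""
    pred = same_cluster_pairs(pred_clusters)
    true = same_cluster_pairs(true_clusters)
    hits = len(pred & true)
    precision = hits / len(pred) if pred else 0.0
    recall = hits / len(true) if true else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Toy example: five papers under one ambiguous name (hypothetical IDs).
true_clusters = [["p1", "p2", "p3"], ["p4", "p5"]]
pred_clusters = [["p1", "p2"], ["p3", "p4", "p5"]]
print(pairwise_f1(pred_clusters, true_clusters))  # (0.5, 0.5, 0.5)
```

In the toy example, each clustering yields four same-cluster pairs, two of which agree, so precision, recall, and F1 are all 0.5.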

If you wish to do more extensive experiments, you can obtain the full WhoIsWho v3 dataset. Our preprocessed NA_Demo already provides a representative subset for initial exploration.

Download: https://pan.baidu.com/s/169-dMQa2vgMFe7DUP-BKyQ Password: 9i84

To quickly try the pipeline on the NA_Demo dataset:

- Download our NA_Demo dataset.
- Unzip it to the data folder './whoiswho/dataset/data/'.
- Run the provided baseline code.
- Check the evaluation results on the valid set.

This will allow you to quickly reproduce state-of-the-art name disambiguation algorithms on a small demo dataset.

Download: https://pan.baidu.com/s/1q6-v0FF9M9LMvXNaynfyJA Password: 2se6

Here is an introduction to the preprocessed data we have prepared for you. You can decide whether to download it based on your needs:

saved.tar.gz

This archive is required for both RND and SND. It includes tf-idf data, the oagbert model, and other data. Unzip it to './whoiswho/'.

processed_data.tar.gz

The processed RND data for whoiswho v3. You can also produce this data yourself with processdata_RND in the pipeline. To use the downloaded data instead, execute download_data in the pipeline and unzip the archive to './whoiswho/dataset/data/v3/RND/'.

graph_data.tar.gz & graph_embedding

Ego-graphs constructed for papers and authors in whoiswho v3. If you need to add graph information to the RND task of whoiswho v3, unzip graph_data.tar.gz to './whoiswho/dataset/data/v3/RND/'.

The graph_embedding.tar archive is large, so it is split into multiple parts. Run the following command to merge graph_embedding.tar.* and extract it:

    cat graph_embedding.tar.* | tar -x

whoiswhograph_extend_processed_data.tar.gz

If you need to add graph information to the RND task of whoiswho v3, unzip whoiswhograph_extend_processed_data.tar.gz to './whoiswho/dataset/data/v3/RND/'.
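If you prefer to script the unpacking rather than run the commands by hand, the following is a minimal Python sketch of the same steps. The destination paths are taken from the descriptions above; the helper names `merge_parts` and `extract_archive`, and the assumption that each archive sits in the current working directory, are illustrative only and not part of the toolkit.

```python
import glob
import shutil
import tarfile
from pathlib import Path

# Destinations as described in the text above.
DESTINATIONS = {
    "saved.tar.gz": "./whoiswho/",
    "processed_data.tar.gz": "./whoiswho/dataset/data/v3/RND/",
    "graph_data.tar.gz": "./whoiswho/dataset/data/v3/RND/",
    "whoiswhograph_extend_processed_data.tar.gz": "./whoiswho/dataset/data/v3/RND/",
}


def merge_parts(pattern, merged_path):
    """Concatenate split parts in sorted order, like `cat pattern > merged`."""
    parts = sorted(glob.glob(pattern))
    if not parts:
        raise FileNotFoundError(f"no files match {pattern!r}")
    merged = Path(merged_path)
    # The merged file name must not itself match the pattern.
    with merged.open("wb") as out:
        for part in parts:
            with open(part, "rb") as src:
                shutil.copyfileobj(src, out)
    return merged


def extract_archive(archive_path, dest_dir):
    """Extract a .tar or .tar.gz archive into dest_dir, creating it if needed."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    # "r:*" lets tarfile detect the compression automatically.
    with tarfile.open(archive_path, "r:*") as tar:
        tar.extractall(dest)
    return dest


def unpack_downloads():
    """Extract every known archive that is present in the working directory."""
    for name, dest in DESTINATIONS.items():
        if Path(name).exists():
            extract_archive(name, dest)
```

Calling `merge_parts("graph_embedding.tar.*", "graph_embedding.tar")` followed by `extract_archive("graph_embedding.tar", ".")` mirrors the shell pipeline shown earlier, at the cost of writing the merged archive to disk first.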

Download: https://pan.baidu.com/s/1phxvyKI5p3r-drx3tAysYA Password: h1ea

We provide hand, oagbert, and graph features for the RND task of whoiswho v3. To save time, you can load these features directly into your NA model. You can also generate these features yourself with generate_feature in the pipeline.

If you want to generate your own graph features, remember to put gat_model_oagbert_sim.pt in './whoiswho/featureGenerator/rndFeature/'. You can train gat_model_oagbert_sim.pt on your own with the following commands:

    cd ./whoiswho/featureGenerator/rndFeature/
    python graph_dataloader.py
    python graph_model.py

If you find our work useful, please consider citing the following paper.

    @inproceedings{chen2023web,
      title={Web-Scale Academic Name Disambiguation: the WhoIsWho Benchmark, Leaderboard, and Toolkit},
      author={Chen, Bo and Zhang, Jing and Zhang, Fanjin and Han, Tianyi and Cheng, Yuqing and Li, Xiaoyan and Dong, Yuxiao and Tang, Jie},
      booktitle={Proceedings of the 29th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining},
      pages={3817--3828},
      year={2023}
    }