This repository contains the information, data, and code for NaturalCodeBench: A Challenging Application-Driven Dataset for Code Synthesis Evaluation.

We propose NaturalCodeBench (NCB), a comprehensive code benchmark designed to mirror the complexity and variety of scenarios in real coding tasks. NCB comprises 402 high-quality problems in Python and Java, meticulously selected from an online coding service, covering 6 different domains.

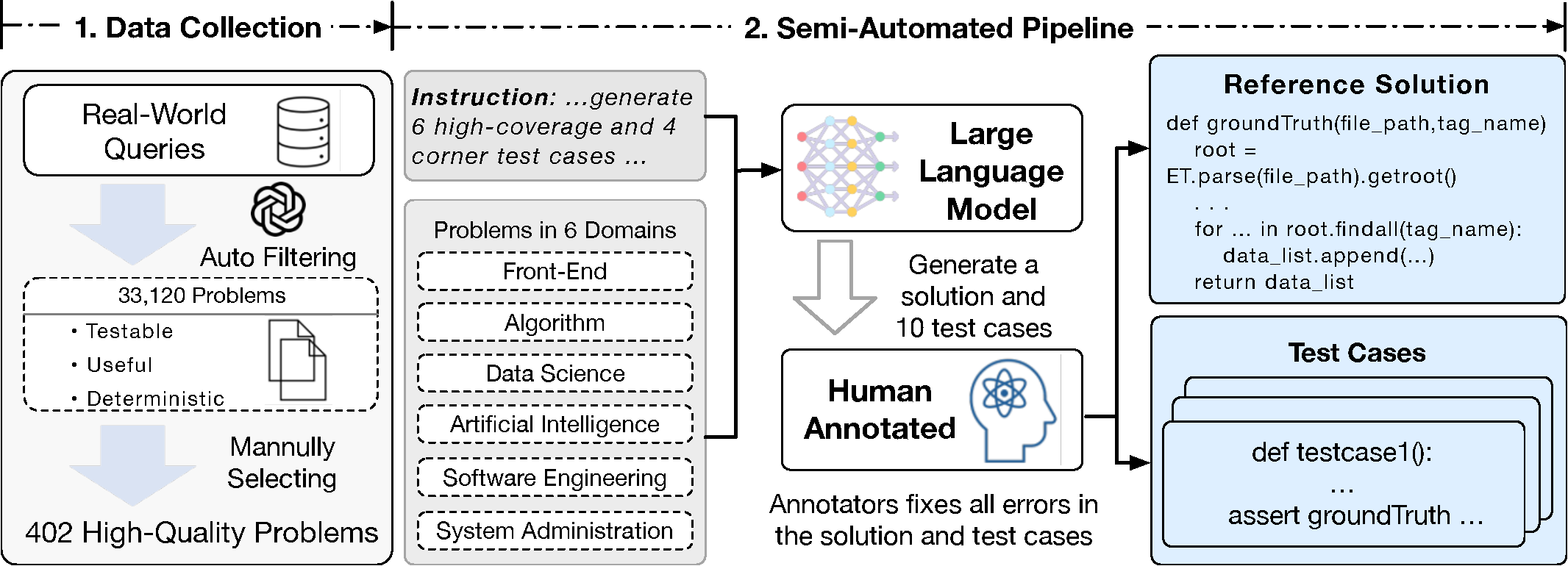

The image above shows the overall framework of NaturalCodeBench, including the data collection pipeline and the semi-automated pipeline.

For a full description of NaturalCodeBench, please refer to the paper: https://arxiv.org/abs/2405.04520

To construct a challenging application-driven dataset for code synthesis evaluation, the seed problems of NCB are cleaned from user queries submitted to online coding services. They span 6 domains: Artificial Intelligence, Data Science, Algorithm and Data Structure, Front-End, Software Engineering, and System Administration.

| Domain | #Problems (Dev) | #Problems (Test) | #Problems (Total) |
| --- | --- | --- | --- |
| Software Engineering | 44 | 88 | 132 |
| Data Science | 32 | 68 | 100 |
| Algorithm and Data Structure | 22 | 73 | 95 |
| System Administration | 16 | 17 | 33 |
| Artificial Intelligence | 15 | 13 | 28 |
| Front-End | 11 | 3 | 14 |

NaturalCodeBench contains 402 high-quality problems in total. We release the development set of NCB, which contains 140 problems (70 in Python and 70 in Java), for research purposes. The data is placed in ...

The data format is as follows.

- `_id` (integer): A unique identifier for each question.
- `prompt` (string): The prompt, comprising the problem description and the instruction.
- `problem` (string): The problem description.
- `testcases` (string): The code of the test cases.
- `setup_code` (string): The code for test setup.
- `reference_solution` (string): A reference answer that solves the problem.
- `classification` (string): The domain of the problem.
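As a rough illustration of the fields above, the following sketch parses one record and checks that every documented field is present with the documented type. It assumes each problem is stored as one JSON object per line; that storage format, the helper name `parse_problem`, and the example values are assumptions made for illustration, not taken from the repository.

```python
import json

# Field names and types as documented in the data format list.
NCB_FIELDS = {
    "_id": int,
    "prompt": str,
    "problem": str,
    "testcases": str,
    "setup_code": str,
    "reference_solution": str,
    "classification": str,
}


def parse_problem(line: str) -> dict:
    """Parse one JSON-encoded problem and verify the documented fields."""
    record = json.loads(line)
    for name, expected_type in NCB_FIELDS.items():
        if name not in record:
            raise KeyError(f"missing field: {name}")
        if not isinstance(record[name], expected_type):
            raise TypeError(f"field {name!r} should be {expected_type.__name__}")
    return record


# Hypothetical record, invented purely to exercise the parser.
example_line = json.dumps({
    "_id": 1,
    "prompt": "Write a function that reverses a string.",
    "problem": "Reverse a string.",
    "testcases": "assert reverse('ab') == 'ba'",
    "setup_code": "",
    "reference_solution": "def reverse(s):\n    return s[::-1]",
    "classification": "Algorithm and Data Structure",
})

problem = parse_problem(example_line)
print(problem["_id"], problem["classification"])
```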

We provide a Docker image to set up the environment. First, pull the image.

```bash
docker pull codegeex/codegeex:0.1.23
```

Then start Docker and mount the code directory.

```bash
docker run --rm -it --shm-size 32g -v /path/to/NaturalCodeBench:/ncb codegeex/codegeex:0.1.23 /bin/bash
```

After starting the Docker shell, transfer data into the repository.

```bash
cd /ncb
cp -r /NaturalCodeBench/data .
```

Generate samples and save them in the following JSON Lines (jsonl) format, where each sample is formatted into a single line like so:

```json
{"_id": "NaturalCodeBench Problem ID", "response": "The response of model without prompt"}
```
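A minimal sketch of producing such a file, assuming the model responses are already available as `(problem_id, response)` pairs. The helper name `write_samples` and the output path in the usage comment are hypothetical; only the `_id` and `response` keys come from the format above.

```python
import json
from pathlib import Path


def write_samples(samples, out_path):
    """Write (problem_id, response) pairs as one JSON object per line."""
    out_path = Path(out_path)
    out_path.parent.mkdir(parents=True, exist_ok=True)
    with out_path.open("w", encoding="utf-8") as f:
        for problem_id, response in samples:
            # The response should hold only the model output, not the prompt.
            record = {"_id": problem_id, "response": response}
            # ensure_ascii=False keeps non-ASCII text (e.g. Chinese) readable.
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


# Hypothetical usage:
# write_samples([(1, "def f():\n    return 1")],
#               "results/my_model/my_model_ncb_python_en.jsonl")
```

Because `json.dumps` escapes newlines inside strings, multi-line responses still occupy a single line in the output file.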

Place your JSONL files into the results directory according to the following directory structure.

```text
results/
└── {model_name}/
    ├── {model_name}_ncb_java_en.jsonl
    ├── {model_name}_ncb_java_zh.jsonl
    ├── {model_name}_ncb_python_en.jsonl
    └── {model_name}_ncb_python_zh.jsonl
```
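The naming scheme above can be sketched as a small helper that lists the four expected files for a model and reports which ones are missing. The function names and the `my_model` example are hypothetical; the file name pattern is the one shown in the directory structure.

```python
from pathlib import Path

# Programming languages and natural languages used in the file names above.
LANGUAGES = ("java", "python")
NATURAL_LANGS = ("en", "zh")


def expected_result_files(model_name, results_dir="results"):
    """Return the four result paths expected for one model."""
    root = Path(results_dir) / model_name
    return [
        root / f"{model_name}_ncb_{lang}_{nl}.jsonl"
        for lang in LANGUAGES
        for nl in NATURAL_LANGS
    ]


def missing_result_files(model_name, results_dir="results"):
    """Return the expected paths that do not exist on disk yet."""
    return [p for p in expected_result_files(model_name, results_dir) if not p.is_file()]


if __name__ == "__main__":
    # "my_model" is a placeholder model name.
    for path in missing_result_files("my_model"):
        print(f"missing: {path}")
```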

We provide reference results under `results` to illustrate the format and help with debugging.

To evaluate the samples, run

```bash
python ncb/evaluate.py --languages python java --natural_lang zh en --ckpt_name {your_model_name} --num_workers 64 --ks 1 10 100
```
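The `--ks 1 10 100` argument suggests that scores are reported as pass@k, although how `ncb/evaluate.py` computes them is not described here. As an assumption, the sketch below implements the commonly used unbiased estimator, pass@k = 1 - C(n-c, k) / C(n, k), where n is the number of samples generated for a problem and c is the number that pass all test cases. The function name `pass_at_k` is hypothetical.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: number of generated samples, c: number of correct samples,
    k: number of samples drawn without replacement.
    """
    if not 0 <= c <= n:
        raise ValueError("c must satisfy 0 <= c <= n")
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    if n - c < k:
        # Fewer than k incorrect samples: every draw contains a correct one.
        return 1.0
    # Probability that all k drawn samples are incorrect, subtracted from 1.
    return 1.0 - comb(n - c, k) / comb(n, k)


# With 10 samples of which 5 are correct, pass@1 is the fraction correct.
print(pass_at_k(n=10, c=5, k=1))
```

Under this estimator, a given k requires at least k samples per problem, so `--ks 1 10 100` would imply generating at least 100 samples for each problem.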

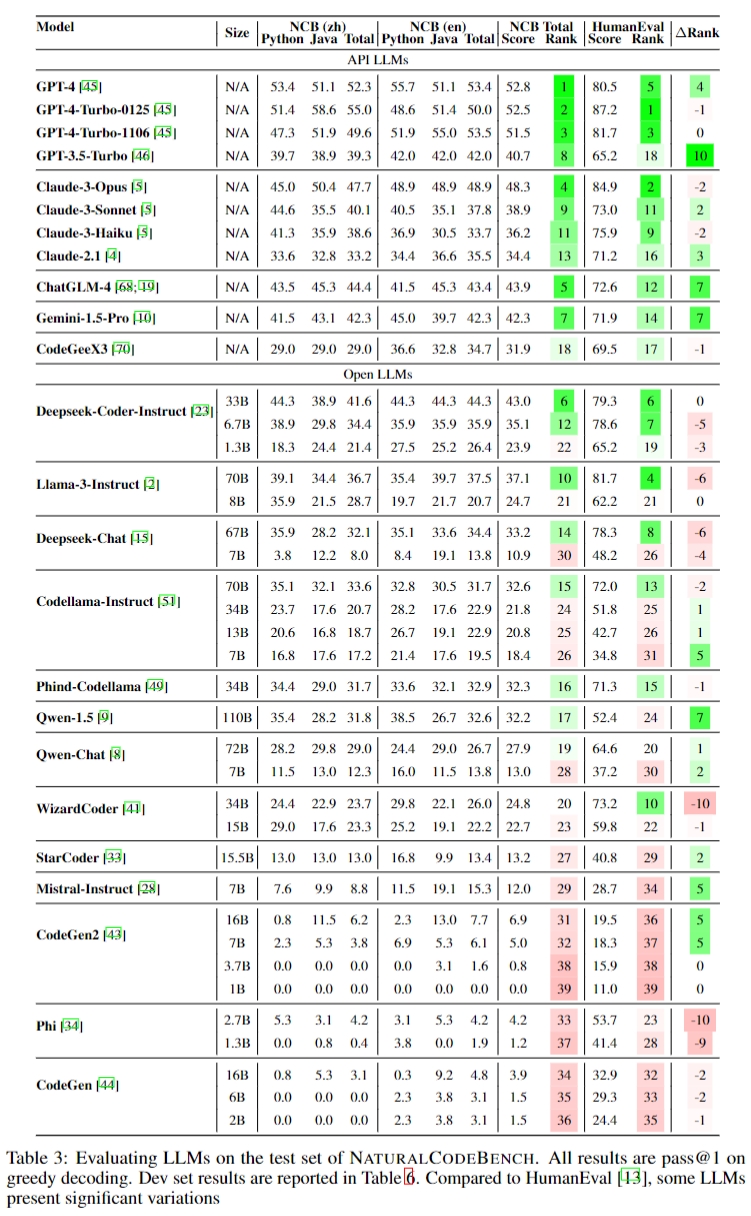

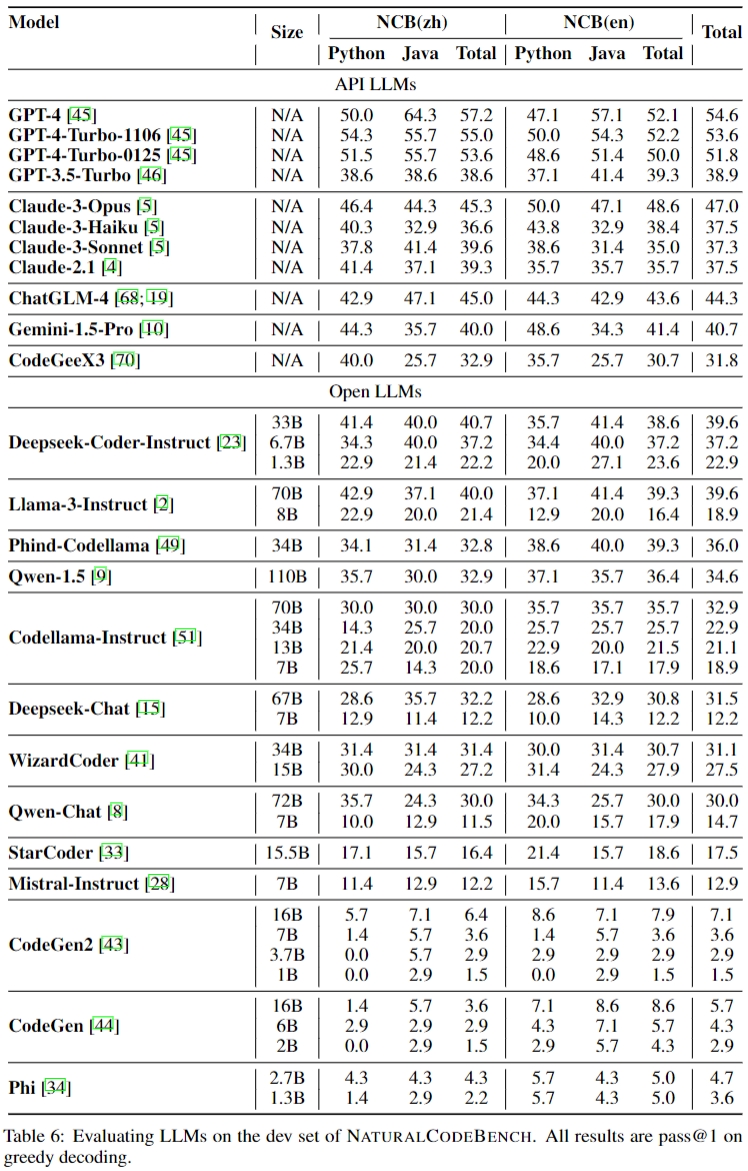

We report our evaluation results of 39 LLMs on the test and dev datasets.

Test set results:

Dev set results:

```bibtex
@misc{zhang2024naturalcodebench,
  title={NaturalCodeBench: Examining Coding Performance Mismatch on HumanEval and Natural User Prompts},
  author={Shudan Zhang and Hanlin Zhao and Xiao Liu and Qinkai Zheng and Zehan Qi and Xiaotao Gu and Xiaohan Zhang and Yuxiao Dong and Jie Tang},
  year={2024},
  eprint={2405.04520},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}
```