DragNUWA: DragNUWA enables users to manipulate backgrounds or objects within images directly, and the model seamlessly translates these actions into camera movements or object motions, generating the corresponding video.

- Clone this repo into the custom_nodes directory of your ComfyUI installation

- Run pip install -r requirements.txt

- Download the DragNUWA weights drag_nuwa_svd.pth and put them at ComfyUI/models/checkpoints/drag_nuwa_svd.pth

For Chinese users: drag_nuwa_svd.pth

A smaller and faster fp16 model, dragnuwa-svd-pruned.fp16.safetensors, is available from https://github.com/painebenjamin/app.enfugue.ai

For Chinese users: wget https://hf-mirror.com/benjamin-paine/dragnuwa-pruned-safetensors/resolve/main/dragnuwa-svd-pruned.fp16.safetensors (the file cannot be downloaded directly in a browser), or follow the official usage instructions at https://hf-mirror.com/
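A quick way to confirm that the weights ended up in the folder named above is a small check like the one below. It is a minimal sketch and not part of this repo: `find_dragnuwa_weights` is a hypothetical helper, and it assumes the fp16 safetensors file goes in the same ComfyUI/models/checkpoints folder as drag_nuwa_svd.pth.

```python
from pathlib import Path

# File names come from this README. That the fp16 file belongs in the same
# folder as the .pth file is an assumption.
KNOWN_WEIGHTS = ("drag_nuwa_svd.pth", "dragnuwa-svd-pruned.fp16.safetensors")


def find_dragnuwa_weights(comfyui_root):
    """Return the known DragNUWA weight files found in models/checkpoints."""
    checkpoints = Path(comfyui_root) / "models" / "checkpoints"
    return [checkpoints / name for name in KNOWN_WEIGHTS
            if (checkpoints / name).is_file()]


if __name__ == "__main__":
    # "ComfyUI" is a placeholder for the local ComfyUI install path.
    found = find_dragnuwa_weights("ComfyUI")
    if found:
        for path in found:
            print(f"found {path} ({path.stat().st_size / 1e9:.2f} GB)")
    else:
        print("no DragNUWA weights found in ComfyUI/models/checkpoints")
```

If the list comes back empty, the file is either missing or saved under a different name than the ones listed here.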

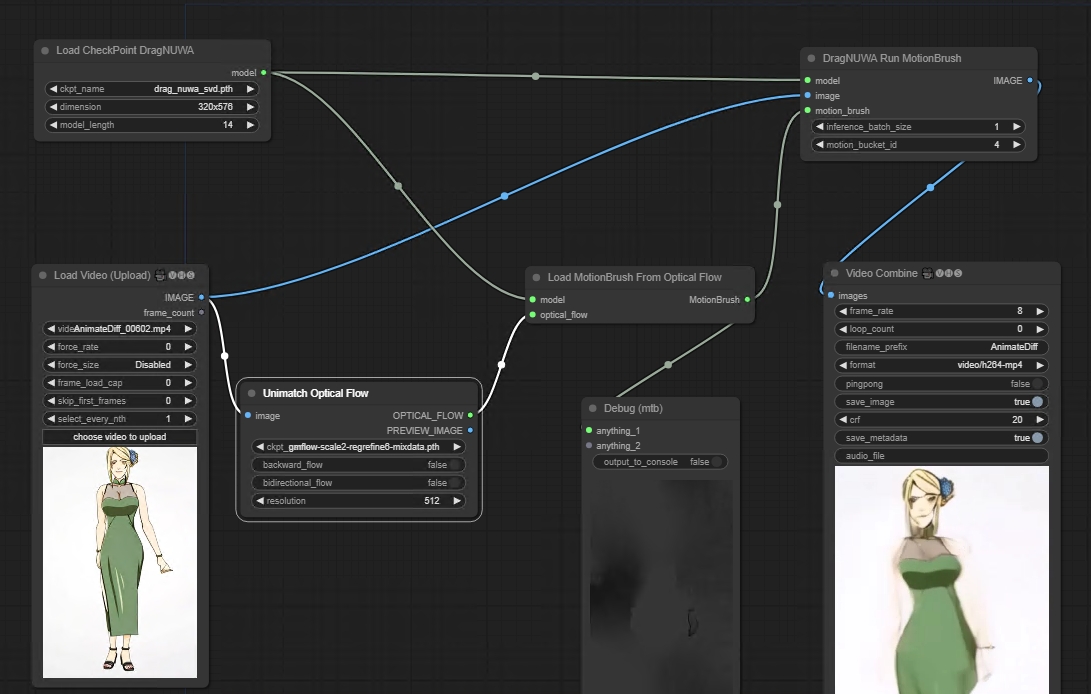

Two nodes: Load CheckPoint DragNUWA and DragNUWA Run

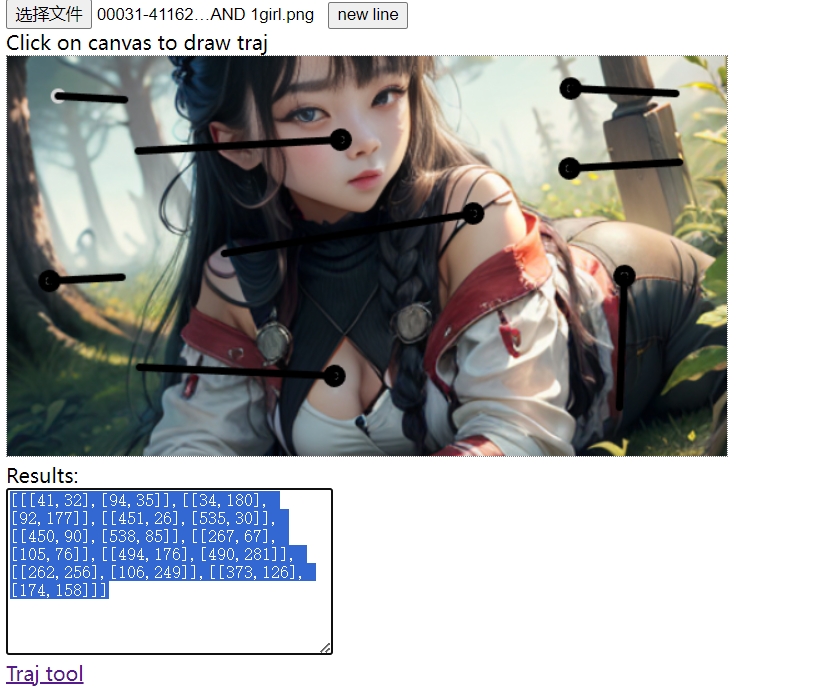

Motion Traj Tool: generates motion trajectories

- base workflow

https://github.com/chaojie/ComfyUI-DragNUWA/blob/main/workflow.json

- automatic trajectory video generation (work in progress)

One flow: video -> DWPose -> keypoints -> trajectory -> DragNUWA (dragposecontrol animateanyone)
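The keypoints -> trajectory step of this flow can be pictured as a transpose: per-frame keypoint lists become one track per keypoint. The sketch below is illustrative only. `keypoints_to_trajectories`, its `min_motion` parameter, and the input and output layout (lists of `[x, y]` points) are assumptions, not necessarily the format that DWPose or the DragNUWA nodes use.

```python
import json
import math


def keypoints_to_trajectories(frames, min_motion=0.0):
    """Turn per-frame keypoints into one trajectory per keypoint.

    frames: list of frames; each frame is a list of (x, y) keypoints in the
            same order and of the same length (assumed layout).
    min_motion: drop tracks whose start-to-end distance is below this value.
    """
    if not frames:
        return []
    count = len(frames[0])
    if any(len(frame) != count for frame in frames):
        raise ValueError("every frame must have the same number of keypoints")

    tracks = []
    for k in range(count):
        # Follow keypoint k through all frames.
        track = [[float(frame[k][0]), float(frame[k][1])] for frame in frames]
        moved = math.hypot(track[-1][0] - track[0][0],
                           track[-1][1] - track[0][1])
        if moved >= min_motion:
            tracks.append(track)
    return tracks


if __name__ == "__main__":
    # Two keypoints over three frames: one moves right, one stays put.
    demo = [[(0, 0), (5, 5)], [(1, 0), (5, 5)], [(2, 0), (5, 5)]]
    print(json.dumps(keypoints_to_trajectories(demo, min_motion=1.0)))
```

Filtering near-static keypoints is a design choice made here so that only joints that actually move contribute a drag trajectory.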

- optical flow workflow

Thanks to Fannovel16 for Unimatch_OptFlowPreprocessor, and to toyxyz for load optical flow from directory.

https://github.com/chaojie/ComfyUI-DragNUWA/blob/main/workflow_optical_flow.json
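Before loading a downloaded workflow file, it can help to see which node types it references, for example to spot custom nodes that still need installing. This is a minimal sketch, assuming the file is a ComfyUI UI export with a top-level `nodes` list whose entries carry a `type` field. `count_node_types` and `load_workflow` are hypothetical helpers, and the node type strings in the demo are placeholders.

```python
import json
from collections import Counter


def load_workflow(path):
    """Read a workflow JSON file from disk."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def count_node_types(workflow):
    """Count how often each node type appears in a workflow dict."""
    nodes = workflow.get("nodes", [])
    return Counter(node.get("type", "<unknown>") for node in nodes)


if __name__ == "__main__":
    # Inline stand-in for load_workflow("workflow.json"); type names are
    # placeholders, not necessarily the identifiers used by this repo.
    sample = {"nodes": [{"type": "LoadImage"},
                        {"type": "ExampleCustomNode"},
                        {"type": "ExampleCustomNode"}]}
    for node_type, uses in count_node_types(sample).most_common():
        print(f"{uses:3d}  {node_type}")
```

Any type in the listing that ComfyUI does not recognize points to a custom node pack that has to be installed first.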