†: joint first author & equal contribution *: corresponding author

🏎️Special thanks to Dr. Wentao Feng for the workplace, computation power, and physical infrastructure support.

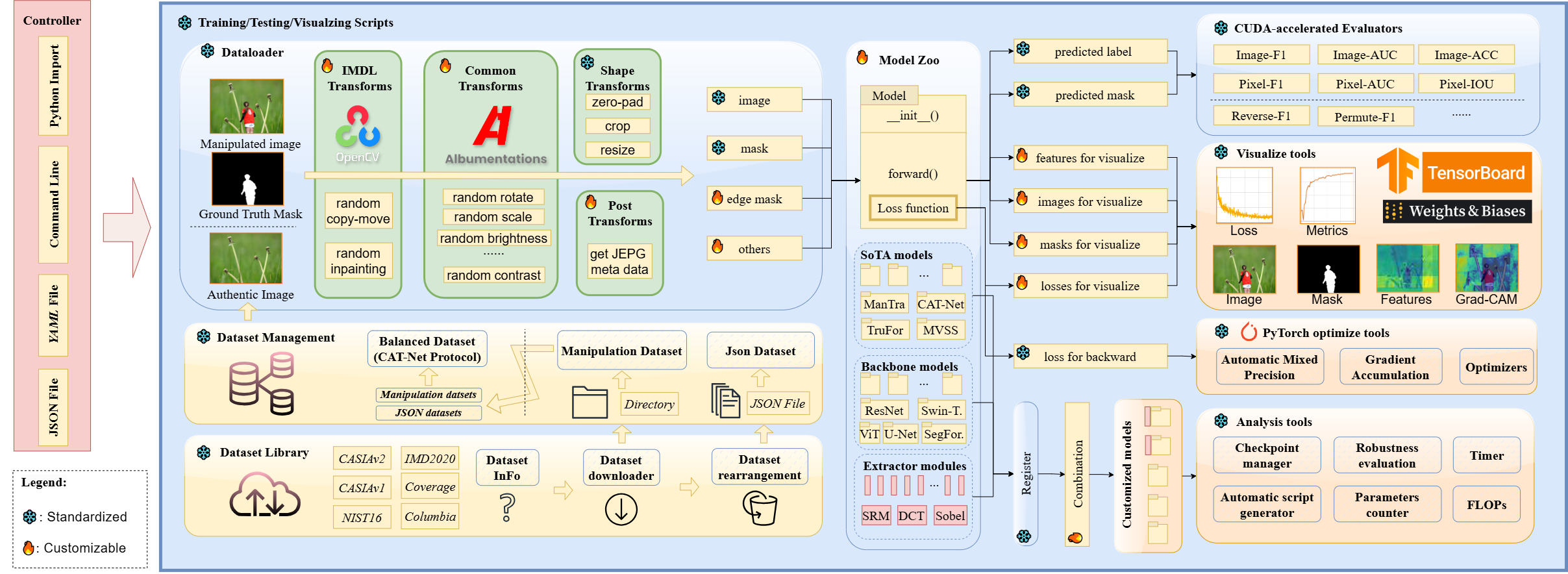

☑️Welcome to IMDL-BenCo, the first comprehensive IMDL benchmark and modular codebase.

- This codebase is under long-term maintenance and will be updated regularly. New features, additional baseline/SOTA models, and bug fixes will be added continuously. You can find the corresponding plan here shortly.

- This repo decomposes the IMDL framework into standardized, reusable components and revises the model construction pipeline, improving coding efficiency and customization flexibility.

- This repo fully implements or incorporates training code for state-of-the-art models to establish a comprehensive IMDL benchmark.

- If you find this project helpful, please cite and star it. This will encourage us a lot 🥰.

☑️About the Developers:

- IMDL-BenCo's project leader/supervisor is Associate Professor 🏀Jizhe Zhou (周吉喆), Sichuan University🇨🇳.

- IMDL-BenCo's codebase designer and coding leader is Research Assistant Xiaochen Ma (马晓晨), Sichuan University🇨🇳.

- IMDL-BenCo is jointly sponsored and advised by Prof. Jiancheng LV (吕建成), Sichuan University 🐼, and Prof. Chi-Man PUN (潘治文), University of Macau 🇲🇴, through the Research Center of Machine Learning and Industry Intelligence, China MOE platform.

Important! The current documentation and tutorials are not complete. This is a project that requires a lot of manpower, and we will do our best to complete it as quickly as possible.

Currently, you can use the demo following the brief tutorial below.

This repository already implements training, testing, robustness testing, Grad-CAM, and other functionality for mainstream models.

However, more features are currently in testing for improved user experience. Updates will be rolled out frequently. Stay tuned!

- Install and download via PyPI.

- Command-line invocation, similar to `conda` in Anaconda.

  - Dynamically create all training scripts to support personalized modifications.

- Information library, downloading, and re-management of IMDL datasets.

- Support for Weights & Biases visualization.

Currently, you can create a PyTorch environment and run the following command to try our repo.

git clone https://github.com/scu-zjz/IMDLBenCo.git

cd IMDLBenCo

pip install -r requirements.txt

- We define three types of Dataset class:

  - `JsonDataset`, which gets each input image and its corresponding ground truth from a JSON file with a protocol like the one below, where "Negative" represents a totally black ground truth that doesn't need a path (the image is entirely authentic):

        [
            [
                "/Dataset/CASIAv2/Tp/Tp_D_NRN_S_N_arc00013_sec00045_11700.jpg",
                "/Dataset/CASIAv2/Gt/Tp_D_NRN_S_N_arc00013_sec00045_11700_gt.png"
            ],
            ......
            [
                "/Dataset/CASIAv2/Au/Au_nat_30198.jpg",
                "Negative"
            ],
            ......
        ]

  - `ManiDataset`, which loads image and ground-truth pairs automatically from a directory containing sub-directories named `Tp` (for input images) and `Gt` (for ground truths). This class generates the pairs using the sorted output of `os.listdir()`. You can take this folder as an example.

  - `BalancedDataset`, a class used to manage large datasets according to the training method of CAT-Net. It reads an input file such as `./runs/balanced_dataset.json`, which contains the types of datasets and their corresponding paths. Then, for each epoch, it randomly samples over 1800 images from each dataset, achieving uniform sampling among datasets of various sizes.
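The three loading strategies described above can be illustrated with a minimal, standard-library-only sketch. This is not the repository's implementation: the function names `load_json_pairs`, `pair_from_directory`, and `sample_balanced`, the `per_dataset` parameter, and the choice to sample without replacement are all assumptions made for illustration.

```python
import json
import os
import random


def load_json_pairs(json_path):
    """Read [image_path, gt_path_or_"Negative"] entries from a JSON protocol file."""
    with open(json_path, "r") as f:
        entries = json.load(f)
    pairs = []
    for image_path, gt in entries:
        # "Negative" marks an authentic image: there is no mask file and the
        # ground truth is treated as all black. Here that is encoded as None.
        pairs.append((image_path, None if gt == "Negative" else gt))
    return pairs


def pair_from_directory(root):
    """Pair files in root/Tp and root/Gt by their sorted os.listdir() order."""
    tp_dir = os.path.join(root, "Tp")
    gt_dir = os.path.join(root, "Gt")
    images = sorted(os.listdir(tp_dir))
    masks = sorted(os.listdir(gt_dir))
    if len(images) != len(masks):
        raise ValueError("Tp and Gt must contain the same number of files")
    return [
        (os.path.join(tp_dir, img), os.path.join(gt_dir, msk))
        for img, msk in zip(images, masks)
    ]


def sample_balanced(datasets, per_dataset, seed=None):
    """Draw up to per_dataset items from every dataset for one epoch.

    Assumption: sampling is without replacement, and a dataset smaller
    than per_dataset contributes all of its items.
    """
    rng = random.Random(seed)
    epoch = []
    for pairs in datasets.values():
        k = min(per_dataset, len(pairs))
        epoch.extend(rng.sample(pairs, k))
    return epoch
```

Because pairing by sorted order relies only on file names, images and masks in `Tp` and `Gt` need names that sort consistently; otherwise an image may be matched with the wrong mask.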

Some models, such as TruFor, need pre-trained weights, which you must download in advance. Each model's folder under ./IMDLBenCo/model_zoo contains guidance for downloading its weights. For example, the guidance for TruFor is in IMDLBenCo/model_zoo/trufor/README.md.

You can customize training by modifying the dataset path and various parameters. For the specific meaning of each parameter, run `python ./IMDLBenCo/training_scripts/train.py -h`.

By default, all provided scripts are called as follows:

sh ./runs/demo_train_iml_vit.sh

Now, you can launch TensorBoard to visualize the training results in a browser.

tensorboard --logdir ./

Our design paradigm aims for the majority of customization for new models (including specific models and their respective losses) to occur within the model_zoo. Therefore, we have adopted a special design paradigm to interface with other modules. It includes the following features:

- Loss functions are defined in `__init__` and computed within `forward()`.

- The parameter list of `forward()` must consist of fixed keys that correspond to the required input information, such as `image`, `mask`, and so forth. Additional types of information can be generated via post_func and their respective fields, accepted through corresponding parameters with the same names in `forward()`.

- The return value of the `forward()` function is a well-organized dictionary containing the following information as an example:

      # -----------------------------------------
      output_dict = {
          # loss used for backpropagation
          "backward_loss": combined_loss,
          # predicted mask; metrics are calculated from it automatically
          "pred_mask": mask_pred,
          # predicted binary label; metrics are calculated from it automatically
          "pred_label": None,

          # ---- the values below are for visualization ----
          # key-value pairs are visualized automatically
          "visual_loss": {
              # customized floats to visualize; each key is shown as the figure name.
              # Any number of keys can be added, and any str can be used as a key.
              "predict_loss": predict_loss,
              "edge_loss": edge_loss,
              "combined_loss": combined_loss
          },
          "visual_image": {
              # customized tensors to visualize; each key is shown as the figure name.
              # Any number of keys can be added, and any str can be used as a key.
              "pred_mask": mask_pred,
              "edge_mask": edge_mask
          }
      }
      # -----------------------------------------

Following this format makes it convenient for the framework to backpropagate the corresponding loss, compute final metrics using masks, and visualize any other scalars and tensors to observe the training process.
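As a minimal sketch of this contract, the toy PyTorch module below defines its loss in `__init__`, computes it in `forward()`, and returns a dictionary with the keys listed above. The class name `TinyIMDLModel`, the single-convolution backbone, the `edge_lambda` weight, and the specific loss functions are all hypothetical and chosen only for illustration; they are not taken from any model in the model_zoo.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TinyIMDLModel(nn.Module):
    """Hypothetical toy model illustrating the forward() contract."""

    def __init__(self, edge_lambda: float = 1.0):
        super().__init__()
        # Stand-in backbone: one conv layer mapping RGB to a 1-channel logit map.
        self.backbone = nn.Conv2d(3, 1, kernel_size=3, padding=1)
        # The loss function is created once here, not in the training loop.
        self.bce = nn.BCEWithLogitsLoss()
        self.edge_lambda = edge_lambda  # assumed weight for the edge term

    def forward(self, image, mask, edge_mask=None, *args, **kwargs):
        # Parameter names act as fixed keys; unused extra fields are
        # absorbed by *args / **kwargs.
        logits = self.backbone(image)
        predict_loss = self.bce(logits, mask)
        if edge_mask is not None:
            # Same BCE, but weighted so that only edge pixels contribute.
            edge_loss = F.binary_cross_entropy_with_logits(
                logits, mask, weight=edge_mask
            )
        else:
            edge_loss = logits.new_zeros(())
        combined_loss = predict_loss + self.edge_lambda * edge_loss
        mask_pred = torch.sigmoid(logits)

        return {
            "backward_loss": combined_loss,
            "pred_mask": mask_pred,
            "pred_label": None,
            "visual_loss": {
                "predict_loss": predict_loss,
                "edge_loss": edge_loss,
                "combined_loss": combined_loss,
            },
            "visual_image": {
                "pred_mask": mask_pred,
                "edge_mask": edge_mask,
            },
        }
```

With this layout, a training loop only needs to call `output_dict["backward_loss"].backward()` and can treat everything under `visual_loss` and `visual_image` as optional logging payloads.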

If you find our work valuable and it has contributed to your research or projects, we kindly request that you cite our paper. Your recognition is a driving force for our continuous improvement and innovation🤗.

    @misc{ma2024imdlbenco,
        title={IMDL-BenCo: A Comprehensive Benchmark and Codebase for Image Manipulation Detection & Localization},
        author={Xiaochen Ma and Xuekang Zhu and Lei Su and Bo Du and Zhuohang Jiang and Bingkui Tong and Zeyu Lei and Xinyu Yang and Chi-Man Pun and Jiancheng Lv and Jizhe Zhou},
        year={2024},
        eprint={2406.10580},
        archivePrefix={arXiv},
        primaryClass={cs.CV}
    }