tl;dr - Make a PR with poster.png < 1.5MB.

Details:

- fork this repo and clone it to your local computer

- update the repo with your poster and its description

- git add your poster image to the `posters/` folder, or just upload it directly to that folder in your web browser

- update your repo's `readme.md` with the right format (you can also edit it directly in your web browser):
  - `### [poster session code] TITLE | LINK_TO_PAPER | LINK_TO_CODE | LINK_TO_WHATEVER`
  - `AUTHOR NAMES`
  - `> "SHORT DESCRIPTION THAT YOU USED ON THE CONFERENCE WEBSITE"`
  - POSTER IMAGE HERE WITH A RELATIVE LINK, i.e.,


- commit, push

- make sure it looks good on the `readme.md` of your forked repo

- make a pull request

- DO NOT CHANGE OTHERS' POSTERS - WE DON'T NEED A MERGE CONFLICT HERE!
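Before opening the PR, a small local check can catch an oversized poster and draft an entry in the template's shape. The Python sketch below is illustrative only: `poster_is_small_enough` and `readme_entry` are hypothetical helpers, not part of this repo, and the byte limit assumes that 1.5MB means 1.5 × 1024 × 1024 bytes.

```python
import os
from typing import Dict, List

# Assumption: "1.5MB" means 1.5 * 1024 * 1024 bytes. Use 1_500_000 instead
# if decimal megabytes are meant.
MAX_POSTER_BYTES = int(1.5 * 1024 * 1024)


def poster_is_small_enough(path: str, limit: int = MAX_POSTER_BYTES) -> bool:
    """Return True if the file at `path` is strictly smaller than `limit` bytes."""
    return os.path.getsize(path) < limit


def readme_entry(session_code: str, title: str, links: Dict[str, str],
                 authors: List[str], description: str, image_path: str) -> str:
    """Hypothetical helper that fills in the readme.md template for one poster."""
    header = f"### [{session_code}] {title}"
    for name, url in links.items():
        header += f" | [{name}]({url})"
    # Blank lines between parts so each renders as its own markdown block.
    return "\n\n".join([
        header,
        "; ".join(authors),
        f'> "{description}"',
        f"![{session_code} poster]({image_path})",  # relative link into posters/
    ])
```

For instance, `readme_entry("A-13", "My Title", {"paper": "https://example.org/paper"}, ["First Author", "Second Author"], "One-line summary.", "posters/A-13.png")` produces the header line, the author line, the quoted description and a relative image link, in that order. The file name `posters/A-13.png` and the URL are made-up examples.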

Session A, Session B, Session C, Session D, Session E, Session F, Session G, Late-Breaking/Demo, MIREX

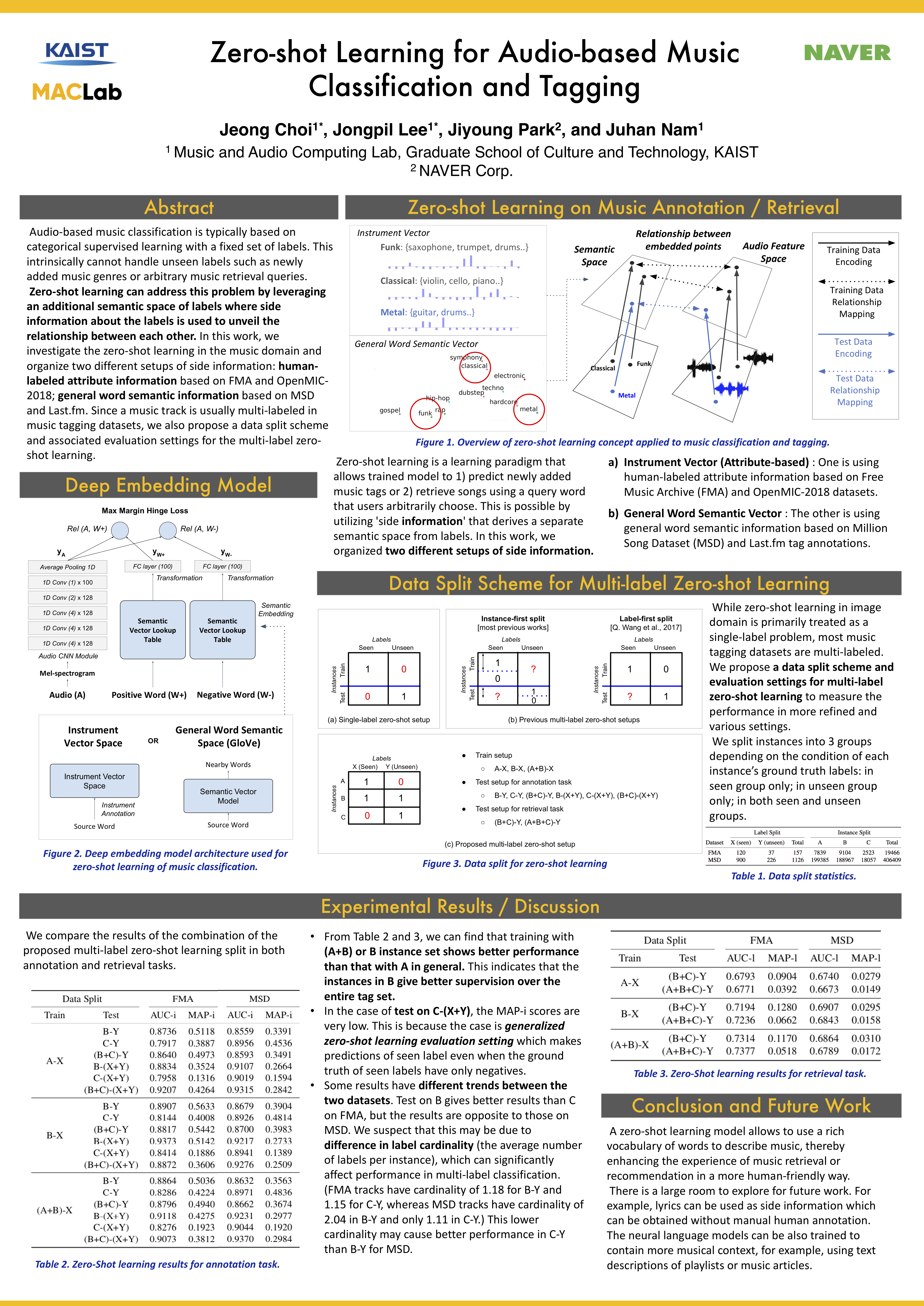

Jeong Choi; Jongpil Lee; Jiyoung Park; Juhan Nam

"Investigated the paradigm of zero-shot learning applied to the music domain. Organized two side-information setups for the music classification task. Proposed a data split scheme and associated evaluation settings for multi-label zero-shot learning."
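Zero-shot tagging is commonly framed as scoring an audio embedding against label embeddings built from side information (for instance, word vectors), so that labels unseen during training can still be ranked. This sketch assumes both kinds of embedding already live in one shared space produced by some trained model; the toy vectors, the cosine score and the 0.5 threshold are illustrative choices, not the paper's setup.

```python
import math
from typing import Dict, List, Tuple


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u) * sum(b * b for b in v))
    return dot / norms if norms else 0.0


def zero_shot_tags(audio_emb: List[float], label_embs: Dict[str, List[float]],
                   threshold: float = 0.5) -> Tuple[List[str], Dict[str, float]]:
    """Score every label, including ones never seen in training, against the audio.

    Multi-label output: all labels whose score reaches `threshold`, best first.
    """
    scores = {label: cosine(audio_emb, emb) for label, emb in label_embs.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return [label for label in ranked if scores[label] >= threshold], scores
```

Because labels enter only through their embeddings, adding a new tag means adding one vector to `label_embs`, with no retraining of the scoring step.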

"We find it extremely valuable to compare playlist datasets generated in different contexts, as doing so helps us understand how changes in the listening experience affect playlist creation strategies."

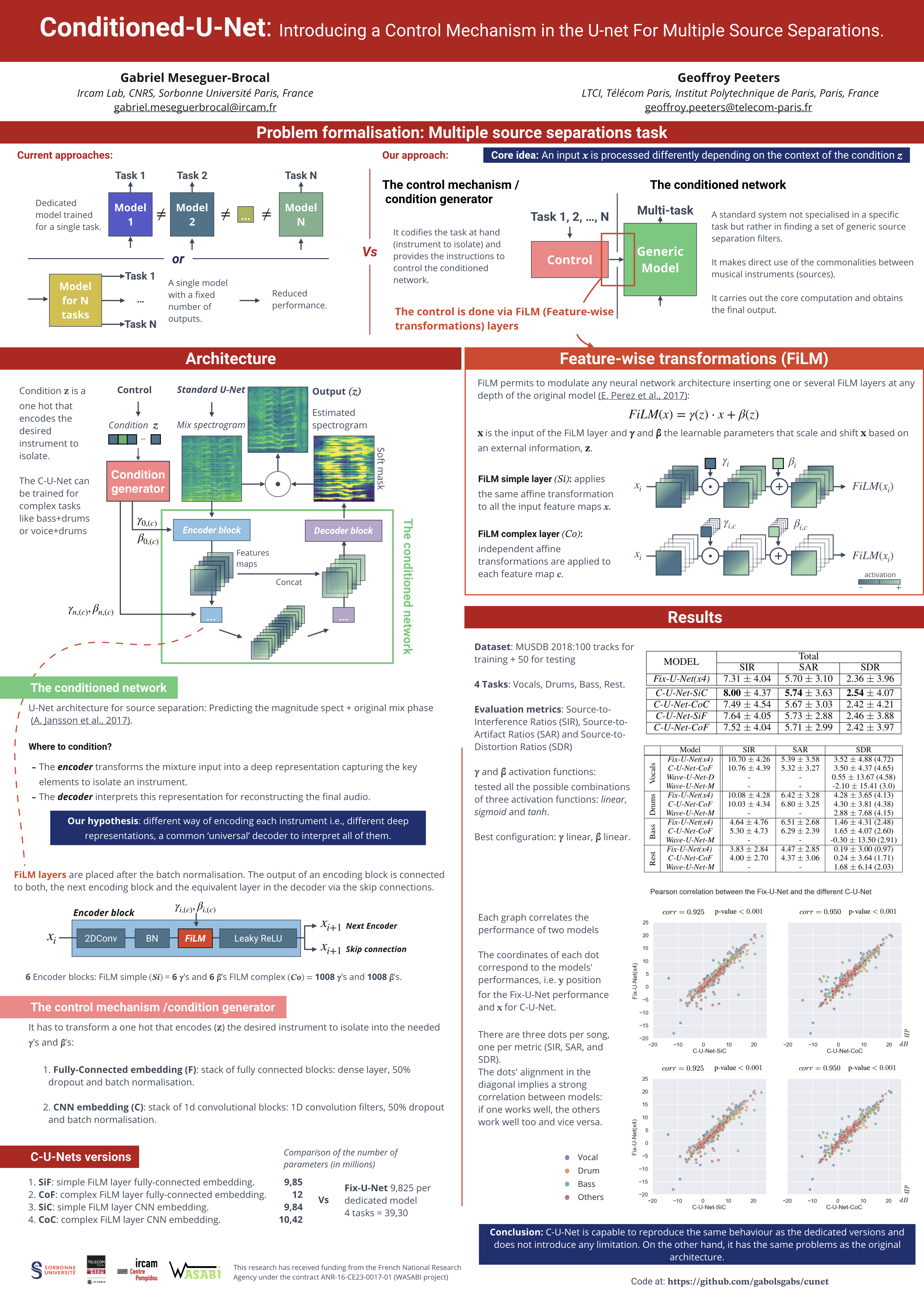

[A-13] Conditioned-U-Net: Introducing a Control Mechanism in the U-Net for Multiple Source Separations | paper | code

Gabriel Meseguer-Brocal; Geoffroy Peeters

"In this paper, we apply conditioning learning to source separation and introduce a control mechanism to the standard U-Net architecture. The control mechanism allows multiple instrument separations with just one model without losing performance."
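A common way to build this kind of control mechanism is feature-wise linear modulation (FiLM): a condition vector, such as a one-hot choice of instrument, is mapped to a scale γ and a shift β per feature channel. The plain-Python sketch below shows only that operation; the weights are hand-picked for illustration rather than learned, and where such layers sit inside the U-Net is not modelled.

```python
from typing import List

Matrix = List[List[float]]


def linear(weights: Matrix, x: List[float]) -> List[float]:
    """Dense layer without bias: one output per row of `weights`."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def film(feature_maps: List[Matrix], condition: List[float],
         w_gamma: Matrix, w_beta: Matrix) -> List[Matrix]:
    """Feature-wise linear modulation: channel c becomes gamma_c * F_c + beta_c."""
    gammas = linear(w_gamma, condition)  # one scale per channel
    betas = linear(w_beta, condition)    # one shift per channel
    return [[[g * v + b for v in row] for row in channel]
            for channel, g, b in zip(feature_maps, gammas, betas)]
```

With identity `w_gamma` and zero `w_beta`, the condition `[1.0, 0.0]` keeps channel 0 and silences channel 1, which gives the intuition for how one shared model can be steered toward a single instrument.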

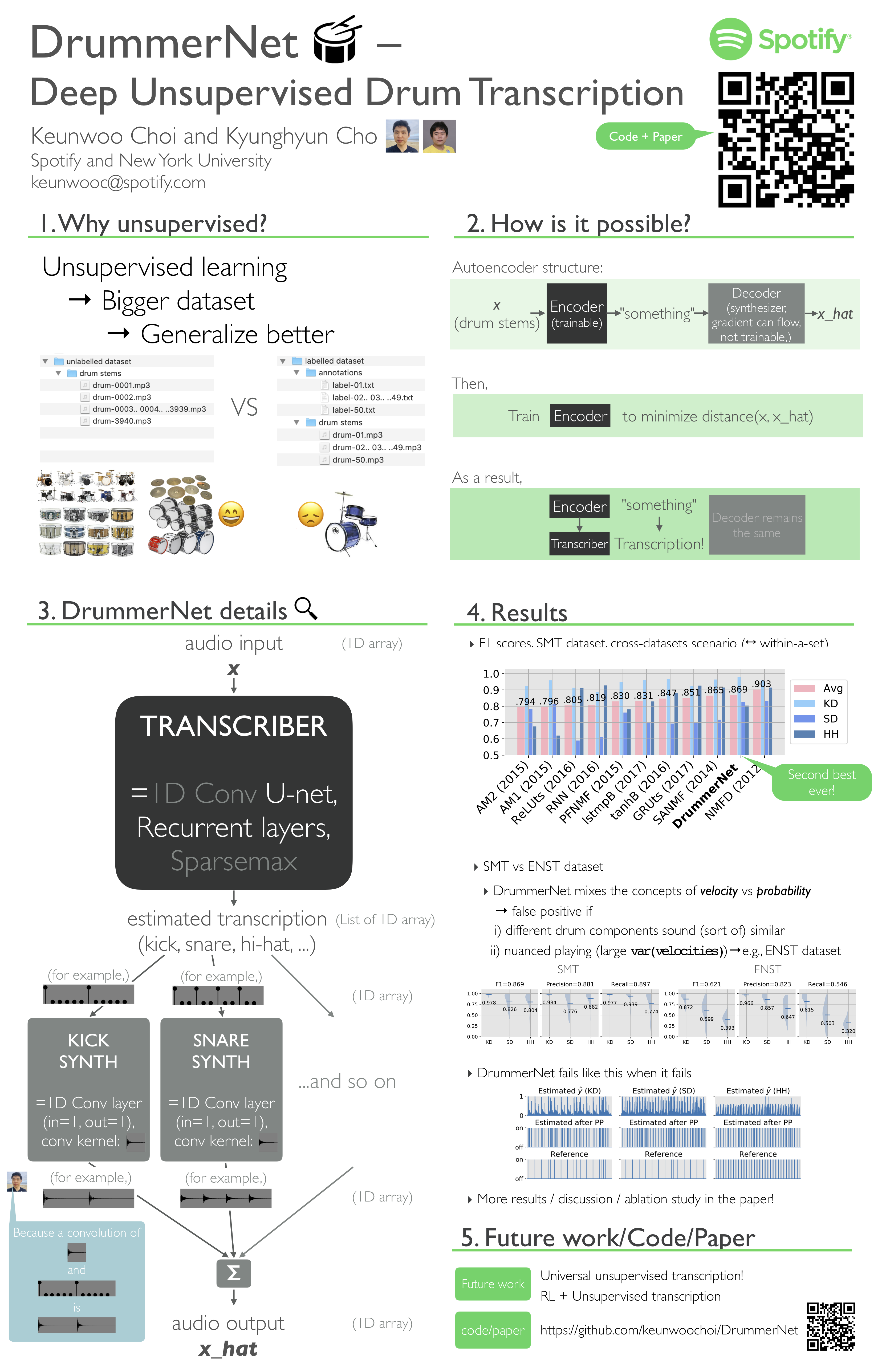

"DrummerNet is a drum transcriber trained in an unsupervised fashion. DrummerNet learns to transcribe by learning to reconstruct the audio with the transcription estimate. Unsupervised learning + a large dataset allow DrummerNet to be less-biased."

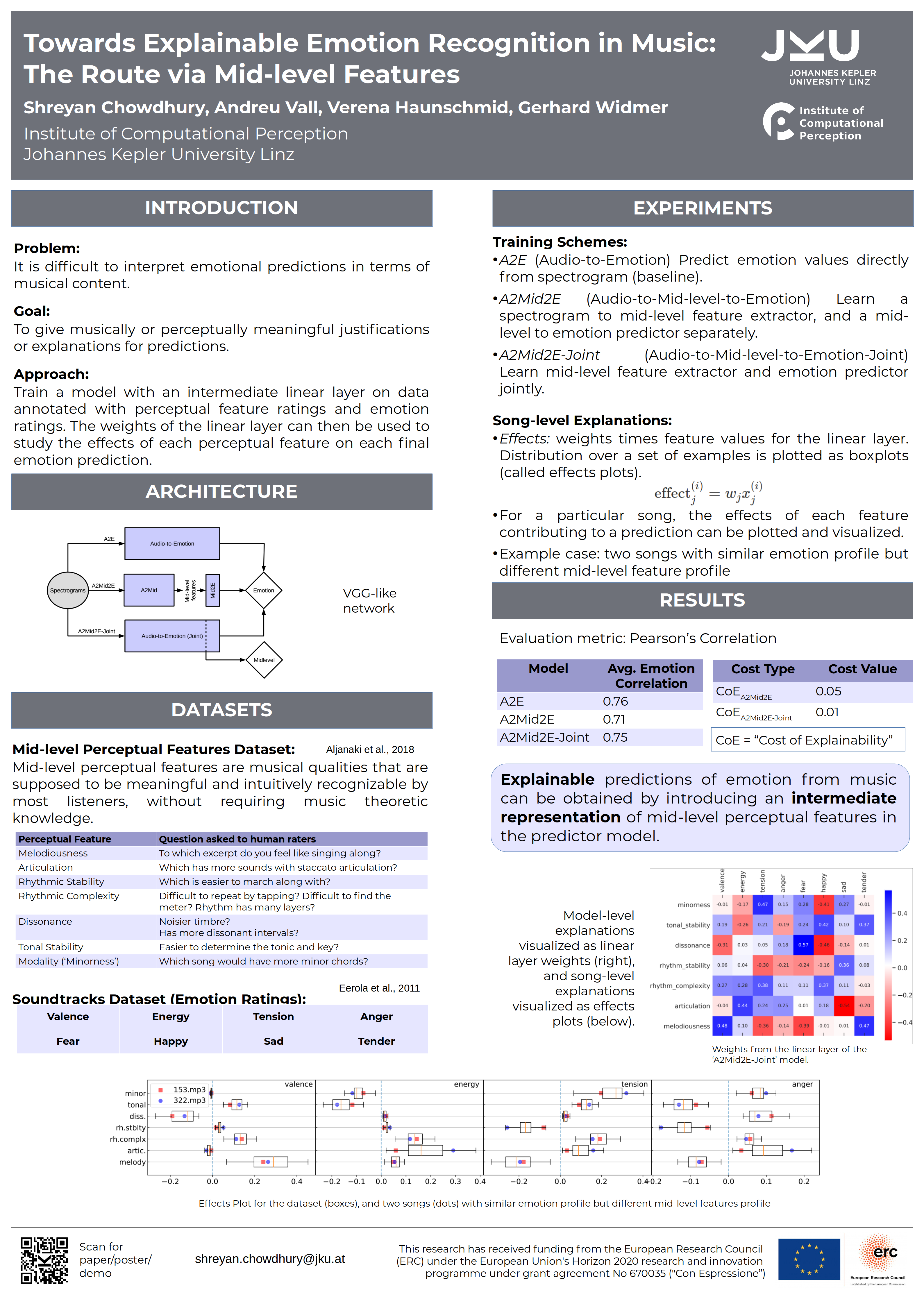

[B-09] Towards Explainable Emotion Recognition in Music: The Route via Mid-level Features | paper | demo

Shreyan Chowdhury, Andreu Vall Portabella, Verena Haunschmid, Gerhard Widmer

"Explainable predictions of emotion from music can be obtained by introducing an intermediate representation of mid-level perceptual features in the predictor deep neural network."

[C-01] Learning a Joint Embedding Space of Monophonic and Mixed Music Signals for Singing Voice | paper | code | mashup example

"The paper introduces a new method of obtaining a consistent singing voice representation from both monophonic and mixed music signals. Also, it presents a simple music mashup pipeline to create a large synthetic singer dataset."

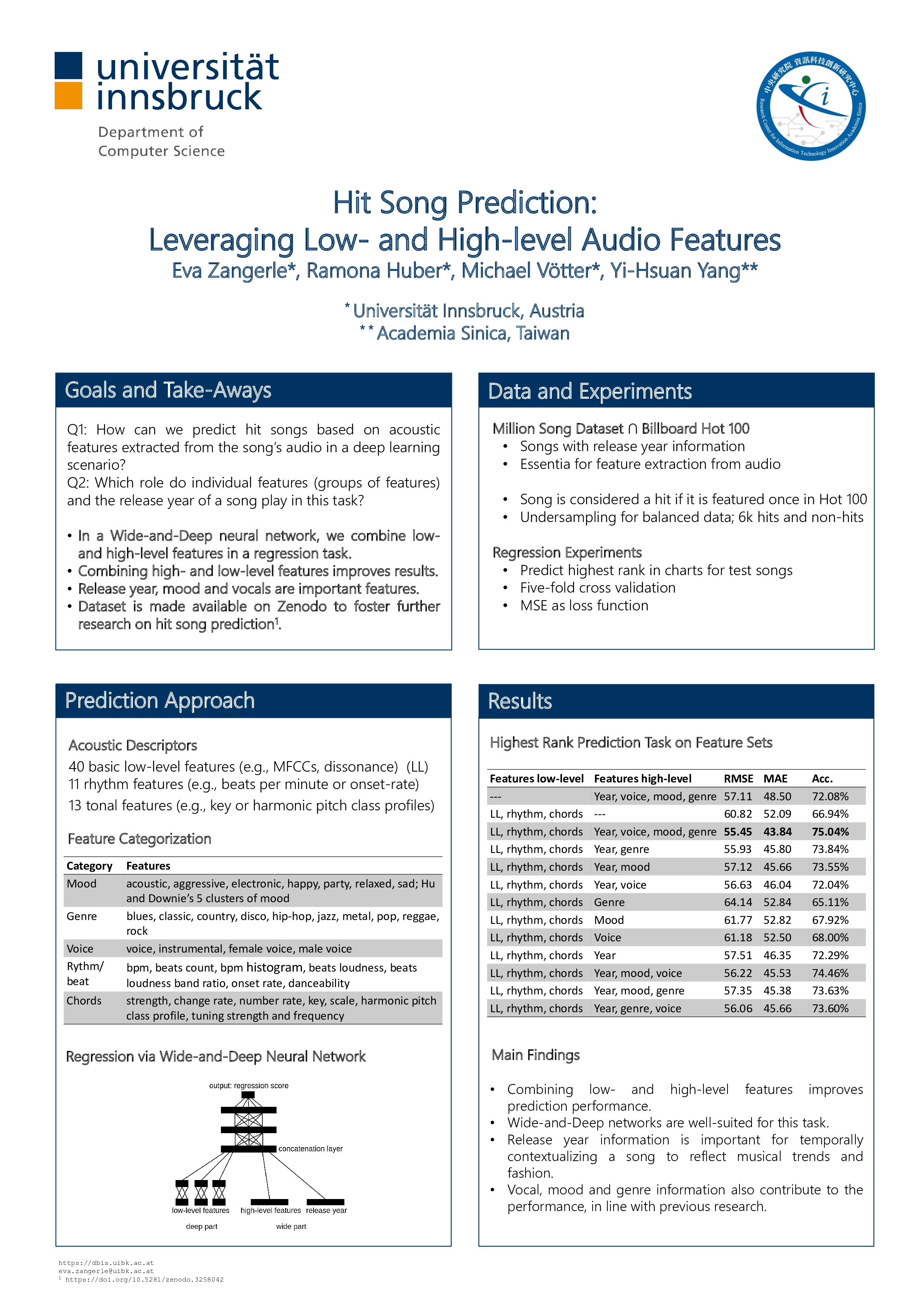

Eva Zangerle; Michael Vötter; Ramona Huber; Yi-Hsuan Yang

"We show that for predicting the potential success of a song, both low- and high-level audio features are important. We use a deep and wide neural network to model these features and perform a regression task on the track’s rank in the charts."

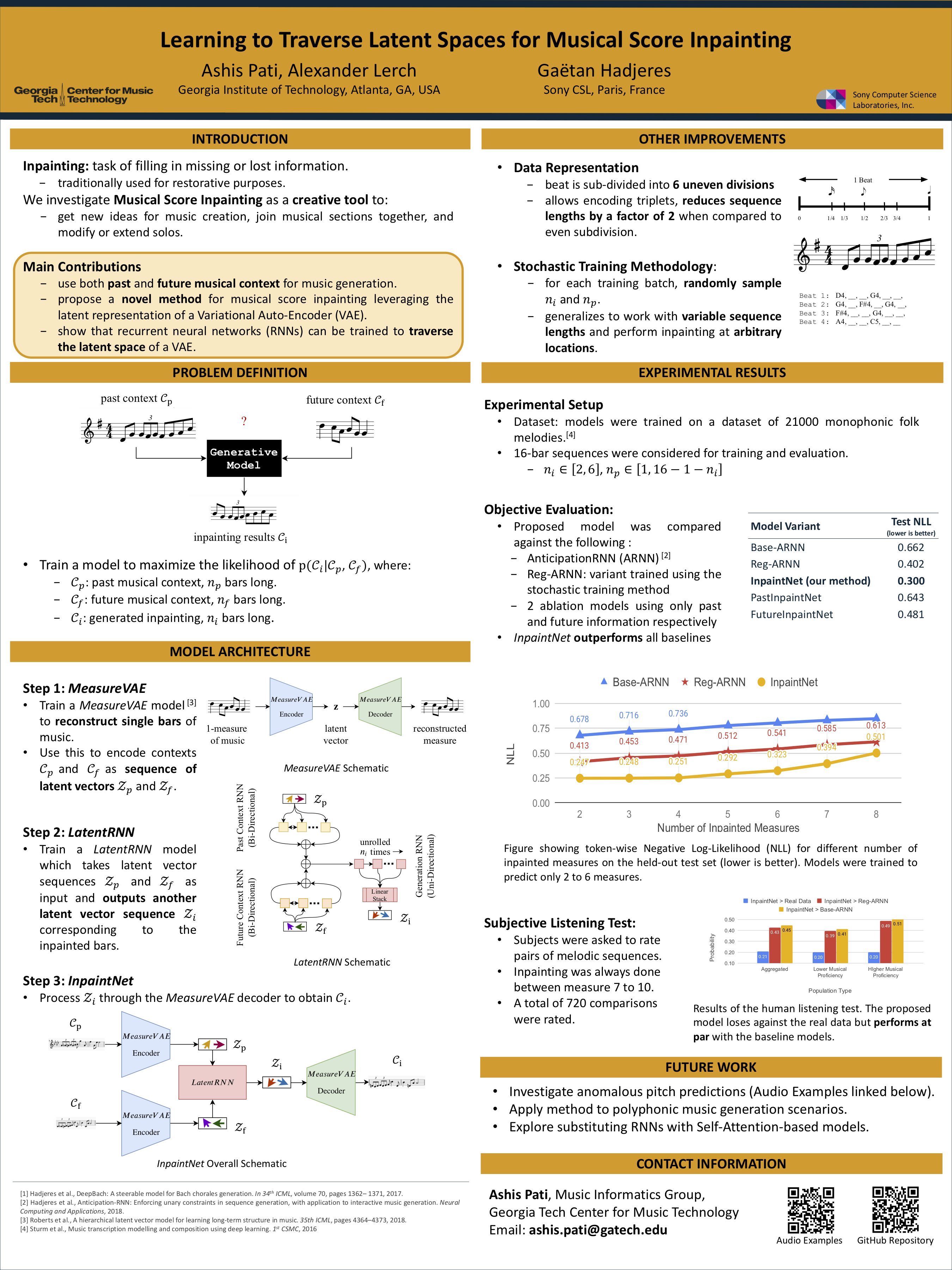

[C-07] Learning to Traverse Latent Spaces for Musical Score Inpainting | paper | code | audio-examples

Ashis Pati, Alexander Lerch, Gaëtan Hadjeres

"Recurrent Neural Networks can be trained using latent embeddings of a Variational Auto-Encoder-based model to perform interactive music generation tasks such as inpainting."

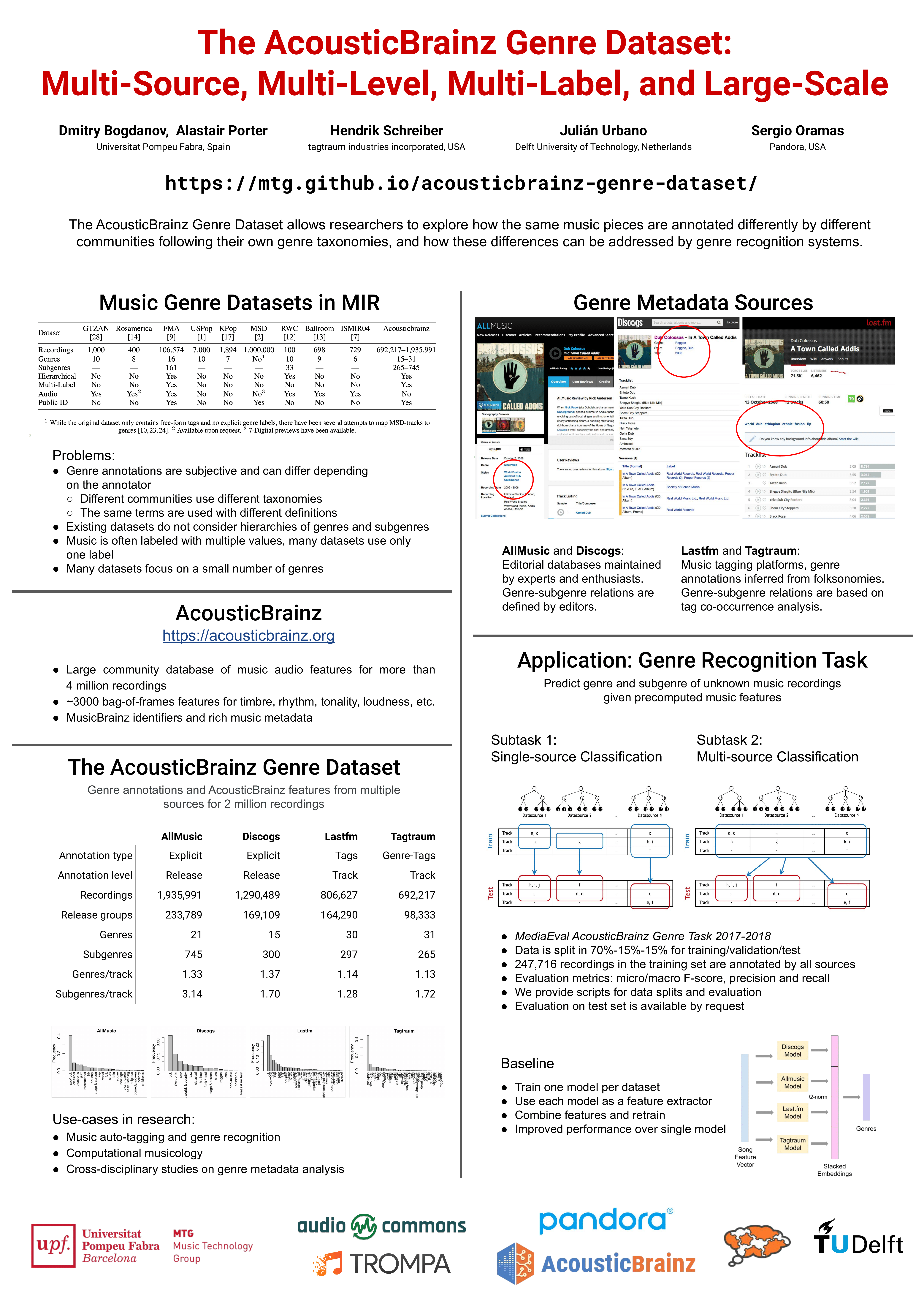

[C-09] The AcousticBrainz Genre Dataset: Multi-Source, Multi-Level, Multi-Label, and Large-Scale | paper | dataset

Dmitry Bogdanov, Alastair Porter, Hendrik Schreiber, Julián Urbano, Sergio Oramas

"The AcousticBrainz Genre Dataset allows researchers to explore how the same music pieces are annotated differently by different communities following their own genre taxonomies, and how these differences can be addressed by genre recognition systems."

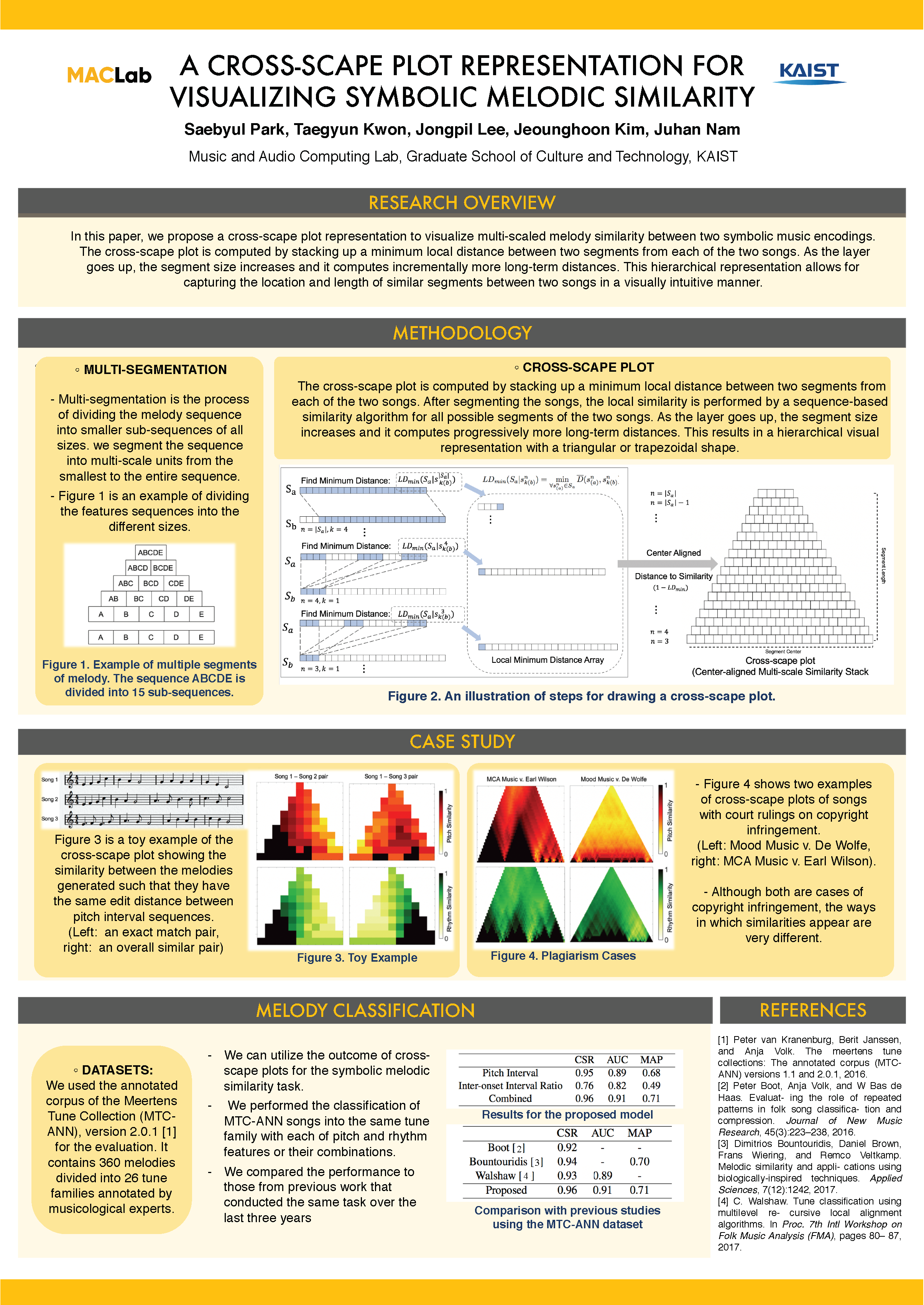

Saebyul Park; Taegyun Kwon; Jongpil Lee; Jeounghoon Kim; Juhan Nam

"We propose a cross-scape plot representation to visualize multi-scaled melody similarity between two pieces of symbolic music. We evaluate its effectiveness on examples from folk music collections with similarity-based categories and plagiarism cases."

[D-04] A Dataset of Rhythmic Pattern Reproductions and Baseline Automatic Assessment System | paper | code | MAST rhythm dataset | re-annotated dataset

Felipe Falcão, Baris Bozkurt, Xavier Serra, Nazareno Andrade, Ozan Baysal

"This work is an effort to address the shortage of music datasets designed for rhythmic assessment. A new dataset and baseline rhythmic assessment system are provided in order to support comparative studies on rhythmic assessment."

[D-06] Blending Acoustic and Language Model Predictions for Automatic Music Transcription | paper | code | supplementary-material

Adrien Ycart, Andrew McLeod, Emmanouil Benetos, Kazuyoshi Yoshii

"Dynamically integrating predictions from an acoustic and a language model with a blending model improves automatic music transcription performance on the MAPS dataset. Results are further improved by operating on 16th-note timesteps rather than 40ms."
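The description above calls the integration dynamic; as a deliberately simplified stand-in, the sketch below mixes acoustic-model and language-model pitch probabilities with one fixed weight, and converts a tempo into a 16th-note timestep for comparison with the 40 ms frame. The function names and the fixed-weight scheme are assumptions, not the paper's blending model.

```python
from typing import List


def blend(acoustic: List[float], language: List[float], weight: float) -> List[float]:
    """Convex combination of per-pitch probabilities from the two models.

    `weight` is a single fixed number here; a learned blending model would
    instead predict it per time step (and possibly per pitch).
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    return [weight * a + (1.0 - weight) * lm for a, lm in zip(acoustic, language)]


def sixteenth_note_seconds(bpm: float) -> float:
    """A quarter-note beat lasts 60 / bpm seconds; a 16th note is a quarter of that."""
    return 60.0 / bpm / 4.0
```

At 120 BPM, for example, a 16th-note step lasts 60 / 120 / 4 = 0.125 s, i.e. 125 ms, a little over three times the 40 ms frame, and its length follows the tempo instead of staying fixed.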

[D-08] A Comparative Study of Neural Models for Polyphonic Music Sequence Transduction | paper

Adrien Ycart, Daniel Stoller, Emmanouil Benetos

"A systematic study using various neural models and automatic music transcription systems shows that a cross-entropy-loss CNN improves transduction performance, while an LSTM does not. Using an adversarial set-up also does not yield improvement."

[E-04] A Diplomatic Edition of Il Lauro Secco: Ground Truth for OMR of White Mensural Notation | paper | dataset

Emilia Parada-Cabaleiro, Anton Batliner, Björn Schuller

"We present a symbolic representation in mensural notation of the anthology Il Lauro Secco. For musicological analysis we encoded the repertoire in **mens and MEI; to support OMR research we present ground truth in agnostic and semantic formats."

[E-05] The Harmonix Set: Beats, Downbeats, and Functional Segment Annotations of Western Popular Music | poster | paper | code

Nieto, O.; McCallum, M.; Davies, M.; Robertson, A.; Stark, A.; Egozy, E.

"A human-annotated dataset containing beats, downbeats, and structural segmentation for over 900 pop tracks."

[E-06] FMP Notebooks: Educational Material for Teaching and Learning Fundamentals of Music Processing | paper | web

"The FMP notebooks include open-source Python code, Jupyter notebooks, detailed explanations, as well as numerous audio and music examples for teaching and learning MIR and audio signal processing."

[F-13] Learning Disentangled Representations of Timbre and Pitch for Musical Instrument Sounds Using Gaussian Mixture Variational Autoencoders | paper | demo

Yin-Jyun Luo; Kat Agres; Dorien Herremans

"We disentangle pitch and timbre of musical instrument sounds by learning separate interpretable latent spaces using Gaussian mixture variational autoencoders. The model is verified by controllable sound synthesis and many-to-many timbre transfer."

[G-03] Adaptive Time–Frequency Scattering for Periodic Modulation Recognition in Music Signals | paper | dataset

Changhong Wang; Emmanouil Benetos; Vincent Lostanlen; Elaine Chew

"Scattering transform provides a versatile and compact representation for analysing playing techniques."

Jordan B. L. Smith; Yuta Kawasaki; Masataka Goto

"Unmixer is a web interface where users can upload music, extract loops, remix them, and mash-up loops from different songs. To extract loops with source separation, we use a nonnegative tensor factorization method improved with a sparsity constraint."

[G-08] Generating Structured Drum Pattern Using Variational Autoencoder and Self-Similarity Matrix | paper | code

"We propose a model that incorporates long-term structure into the music generation process using a VAE and an SSM. Subjective evaluation results suggest its effectiveness in generating transitions at structural boundaries."
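A self-similarity matrix (SSM) compares every bar (or frame) of a pattern with every other one, so repeated sections show up as bright off-diagonal entries. Below is a minimal sketch using cosine similarity over hypothetical per-bar onset vectors; the features and similarity measure used in the paper may differ.

```python
import math
from typing import List


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity; 0.0 if either vector is all zeros (e.g. an empty bar)."""
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u) * sum(b * b for b in v))
    return dot / norms if norms else 0.0


def self_similarity_matrix(frames: List[List[float]]) -> List[List[float]]:
    """S[i][j] = similarity of frame i and frame j; symmetric, 1 on the diagonal for non-empty frames."""
    return [[cosine(a, b) for b in frames] for a in frames]
```

For a toy A-B-A sequence of bars, `S[0][2]` equals 1, marking the return of the first pattern; this is the kind of long-term structure an SSM makes explicit.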

[G-12] Audio-query Based Music Source Separation | paper

Jie Hwan Lee*; Hyeong-Seok Choi*; Kyogu Lee (*: equal contribution)

"We propose a network for audio query-based music source separation that can explicitly encode the source information from a query signal regardless of the number and/or kind of target signals. The proposed method consists of a Query-net and a Separator: given a query and a mixture, the Query-net encodes the query into the latent space, and the Separator estimates masks conditioned on the latent vector, which are then applied to the mixture for separation."
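The final step described above, applying the estimated mask to the mixture, is an element-wise product on a magnitude spectrogram. This minimal sketch assumes a freq × time magnitude spectrogram stored as nested lists and a soft mask produced elsewhere (the Query-net and Separator are not modelled); clipping the mask to [0, 1] is a safety measure assumed here, not a detail from the abstract.

```python
from typing import List

Spec = List[List[float]]  # freq x time magnitudes


def apply_mask(mixture: Spec, mask: Spec) -> Spec:
    """Estimate the target as mask * mixture, element-wise, with the mask clipped to [0, 1]."""
    if len(mixture) != len(mask) or any(len(m) != len(k) for m, k in zip(mixture, mask)):
        raise ValueError("mask and mixture must have the same shape")
    return [[min(max(k, 0.0), 1.0) * m for m, k in zip(m_row, k_row)]
            for m_row, k_row in zip(mixture, mask)]
```

Turning the masked magnitudes back into audio typically reuses the mixture phase, which is outside this sketch.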

[G-13] Mosaic Style Transfer Using Sparse Autocorrelograms | paper | code/examples

Dan MacKinlay; Zdravko Botev

“We apply sparse dictionary decomposition twice to autocorrelograms of signals, to get a novel analysis of and method for mosaicing music style transfer, which has the novel feature of handling time-scaling of the source audio naturally.”

[G-16] VirtuosoNet: A Hierarchical RNN-based System for Modeling Expressive Piano Performance | paper | code

Dasaem Jeong; Taegyun Kwon; Yoojin Kim; Kyogu Lee; Juhan Nam

"We present an RNN-based model that reads MusicXML and generates human-like performance MIDI. The model employs a hierarchical approach by using an attention network and an independent measure-level estimation module. We share our code and dataset."

[L-06] Tools for Semi-Automatic Bounding Box Annotation of Musical Measures in Sheet Music | paper | web

Frank Zalkow; Angel Villar Corrales; TJ Tsai; Vlora Arifi-Müller; Meinard Müller

"In score following, one main goal is to highlight measure positions in sheet music synchronously with audio playback. Such applications require alignments between sheet music and audio representations. These alignments can often be computed automatically when the sheet music is given in a symbolically encoded music format. However, sheet music is often available only in the form of digitized scans. In this case, the automated computation of accurate alignments still poses many challenges [1]. In this contribution, we present various semi-automatic tools for solving the subtask of determining bounding boxes (given in pixels) of measure positions in digital scans of sheet music, a task that is extremely tedious when done manually."

[L-07] Improving Music Tagging from Audio with User-Track Interactions | paper

Andres Ferraro; Jae Ho Jeon; Jisang Yoon; Xavier Serra; Dmitry Bogdanov

"We propose to improve the tagging of music by using audio and collaborative filtering information (user-track interactions). We use Matrix Factorization (MF) to obtain a representation of the tracks from the user-track interactions and map those representations to the tags predicted from audio. The preliminary results show that following this approach we can increase the tagging performance."
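The abstract describes obtaining track representations from user-track interactions with matrix factorization and then mapping them to audio-predicted tags. A minimal SGD factorization in plain Python is sketched below; the rank, learning rate, regularisation, the toy 3 × 3 matrix and the use of `None` for unobserved entries are all assumptions, and the MF variant used in the paper (for example, one tailored to implicit feedback) may differ. The mapping to tags is not shown.

```python
import random
from typing import List, Optional, Tuple

Matrix = List[List[float]]


def factorize(interactions: List[List[Optional[float]]], rank: int = 2, epochs: int = 2000,
              lr: float = 0.05, reg: float = 0.01, seed: int = 0) -> Tuple[Matrix, Matrix]:
    """SGD matrix factorization: learn U (users) and V (tracks) with U[u] . V[t] ~ r.

    Entries set to None are treated as unobserved and skipped.
    """
    rng = random.Random(seed)
    n_users, n_tracks = len(interactions), len(interactions[0])
    U = [[rng.uniform(-0.1, 0.1) for _ in range(rank)] for _ in range(n_users)]
    V = [[rng.uniform(-0.1, 0.1) for _ in range(rank)] for _ in range(n_tracks)]
    observed = [(u, t, r) for u, row in enumerate(interactions)
                for t, r in enumerate(row) if r is not None]
    for _ in range(epochs):
        for u, t, r in observed:
            err = r - sum(a * b for a, b in zip(U[u], V[t]))
            for k in range(rank):
                uk, vk = U[u][k], V[t][k]
                # Gradient step on squared error plus L2 penalty.
                U[u][k] += lr * (err * vk - reg * uk)
                V[t][k] += lr * (err * uk - reg * vk)
    return U, V  # each row of V is a learned track representation
```

Tracks that the same users interact with end up with similar rows in `V`, which is what makes these vectors a useful complement to audio features.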

[L-10] Creating a Tool for Facilitating and Researching Human Annotation of Musical Patterns | paper | code

Stephan Wells; Iris Yuping Ren; Anja Volk

"Musical patterns (repeated segments of music) are widespread in all varieties of music, and annotations of such patterns are valuable in many areas of music information retrieval. Unfortunately, there is a lack of expert annotations of musical patterns, and most annotation is done by hand. In this project, we introduce ANOMIC, a novel software tool designed for users to intuitively annotate repeated musical segments, and we perform a user study which yields a large database of annotations done using the tool. We find that the tool’s reception was strongly positive and show that the annotations done with it reach high levels of inter-annotator agreement compared to traditional approaches."

Kin Wai Cheuk; Kat Agres; Dorien Herremans

"With the advent of Graphics Processing Units (GPUs), many computational libraries, such as TensorFlow and PyTorch, now take advantage of these technologies to dramatically speed up calculations. However, GPU-based libraries for signal processing are limited to tools such as tf.signal, torchaudio (PyTorch-based), and Kapre (TensorFlow-based). We propose a PyTorch-based neural network audio processing tool called nnAudio. Our library offers GPU-leveraged calculation of linear spectrograms, log spectrograms, Mel spectrograms (MelSpec), and the constant-Q transform (CQT). nnAudio is the only GPU-based library that offers CQT calculation. We record a speed increase of over 100 times when using nnAudio versus traditional signal processing tools."
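One well-known way to compute spectrograms with neural-network building blocks is to treat each DFT basis function as a fixed 1D convolution kernel applied with a stride equal to the hop size. The pure-Python sketch below shows that view on the CPU with a rectangular window for brevity (practical implementations typically apply a window such as Hann); it illustrates the idea and is not nnAudio's API.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def fourier_kernels(n_fft: int) -> Tuple[Matrix, Matrix]:
    """Real and imaginary DFT basis functions for bins 0..n_fft//2 (rectangular window)."""
    n_bins = n_fft // 2 + 1
    cos_k = [[math.cos(2 * math.pi * k * n / n_fft) for n in range(n_fft)] for k in range(n_bins)]
    sin_k = [[-math.sin(2 * math.pi * k * n / n_fft) for n in range(n_fft)] for k in range(n_bins)]
    return cos_k, sin_k


def stft_magnitude(signal: List[float], n_fft: int, hop: int) -> Matrix:
    """Slide each kernel over the signal with stride `hop`, like a 1D convolution layer
    (which computes cross-correlation), then take the magnitude per bin."""
    cos_k, sin_k = fourier_kernels(n_fft)
    frames = []
    for start in range(0, len(signal) - n_fft + 1, hop):
        chunk = signal[start:start + n_fft]
        frames.append([
            math.hypot(sum(c * x for c, x in zip(ck, chunk)),
                       sum(s * x for s, x in zip(sk, chunk)))
            for ck, sk in zip(cos_k, sin_k)
        ])
    return frames  # shape: n_frames x (n_fft // 2 + 1)
```

Because the kernels are fixed tensors, the same computation maps directly onto a GPU convolution, and learnable or CQT-style kernels can be swapped in without changing the surrounding code.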

[L-12] Generative Audio Synthesis with a Parametric Model | paper | Audio Examples

Krishna Subramani; Alexandre D'Hooge; Preeti Rao

"We propose a parametric representation for audio that corresponds more directly to its musical attributes, such as pitch, dynamics, and timbre. For more control over generation, we also propose the use of a conditional variational autoencoder that conditions the timbre on pitch."

[L-35] Automated Time-Frequency Domain Audio Crossfades Using Graph Cuts | paper | code | 'DJ' Example, 'Standard' Example

Kyle Robinson; Dan Brown

"We present the first implementation of a new method to automatically transition between songs by finding an optimal seam in the time-frequency spectrum."
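Graph cuts, as named in the title, can find a general seam in the time-frequency plane. As a deliberately simpler substitute, the sketch below uses dynamic programming (as in seam carving) to choose one switch frame per frequency bin, with neighbouring bins allowed to differ by at most one frame, and then stitches the two spectrograms along that seam. The cost function `mismatch_cost` is a hypothetical choice: switching is cheapest where the two songs' magnitudes agree.

```python
from typing import List

Spec = List[List[float]]  # freq x time magnitudes


def mismatch_cost(spec_a: Spec, spec_b: Spec) -> Spec:
    """Hypothetical cost: switching songs is cheap where their magnitudes agree."""
    return [[abs(a - b) for a, b in zip(ra, rb)] for ra, rb in zip(spec_a, spec_b)]


def min_cost_seam(cost: Spec) -> List[int]:
    """Pick a switch frame per frequency bin; adjacent bins differ by at most 1 frame."""
    n_f, n_t = len(cost), len(cost[0])
    acc = [row[:] for row in cost]          # accumulated cost, filled bin by bin
    back = [[0] * n_t for _ in range(n_f)]  # best predecessor frame in the previous bin
    for f in range(1, n_f):
        for t in range(n_t):
            prev = range(max(0, t - 1), min(n_t, t + 2))
            best = min(prev, key=lambda p: acc[f - 1][p])
            acc[f][t] += acc[f - 1][best]
            back[f][t] = best
    t = min(range(n_t), key=lambda p: acc[n_f - 1][p])
    seam = [t]
    for f in range(n_f - 1, 0, -1):  # walk back from the highest bin to bin 0
        t = back[f][t]
        seam.append(t)
    return seam[::-1]


def stitch(spec_a: Spec, spec_b: Spec, seam: List[int]) -> Spec:
    """Take song A before the seam and song B from the seam onward, per bin."""
    return [[a if t < s else b for t, (a, b) in enumerate(zip(ra, rb))]
            for ra, rb, s in zip(spec_a, spec_b, seam)]
```

Letting each frequency bin switch at a slightly different time is what distinguishes this from a plain crossfade, where every bin would switch at the same frame.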

Matevž Pesek; Darian Tomašević; Iris Yuping Ren; Matija Marolt

"The Pattern Annotation Framework (PAF) tool collects data about the annotator and the annotation process to enable an analysis of the relations between the user's experience/background and the annotations. The tool tracks the user's actions, such as the start and end time of an individual annotation and its changes, MIDI player actions, and others. By open-sourcing the tool, we hope to aid other researchers in the MIR field dealing with pattern-related data gathering."

[L-49] Linking and Visualising Performance Data and Semantic Music Encodings in Real-Time | paper | code | web

David M. Weigl; Carlos Cancino-Chacón; Martin Bonev; Werner Goebl

"We present CLARA (Companion for Long-term Analyses of Rehearsal Attempts), a visualisation interface for real-time performance-to-score alignment based on the MELD (Music Encoding and Linked Data) framework for semantic digital notation, employing MAPS (Matcher for Alignment of Performance and Score), an HMM-based polyphonic score-following system for symbolic (MIDI) piano performances."