- October 16' 2023: We released version 0.2.0 of `vod`: simpler & better code ✨

- September 21' 2023: We integrated the BeIR datasets 🍻

- July 19' 2023: We integrated Qdrant as a search backend 📦

- June 15' 2023: We adopted Lightning's Fabric ⚡️

- April 24' 2023: We will be presenting Variational Open-Domain Question Answering at ICML 2023 in Hawaii 🌊

VOD aims at building, training and evaluating next-generation retrieval-augmented language models (REALMs). The project started with our research paper Variational Open-Domain Question Answering, in which we introduce the VOD objective: a new variational objective for end-to-end training of REALMs.
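For reference, the paper's abstract (in the citation at the end of this README) describes the VOD objective as a self-normalized estimate of the Rényi variational bound. The block below writes the standard Rényi bound of order $\alpha$ in generic notation of our own choosing (not necessarily the paper's): $a$ is the answer, $q$ the question, $d$ a retrieved document, $p_\theta$ the reader-retriever model and $r$ an auxiliary sampling distribution. It is a reading aid only; the self-normalized VOD estimator itself is defined in the paper.

```latex
\mathcal{L}_{\alpha}(a \mid q)
  = \frac{1}{1-\alpha}
    \log \mathbb{E}_{d \sim r(d \mid a, q)}
    \left[ \left( \frac{p_\theta(a, d \mid q)}{r(d \mid a, q)} \right)^{1-\alpha} \right],
  \qquad \alpha \geq 0,\; \alpha \neq 1
```

At $\alpha = 0$ the expectation equals $p_\theta(a \mid q)$, so the bound is the exact log-likelihood; as $\alpha \to 1$ it recovers the usual ELBO; for $\alpha > 0$ it lower-bounds $\log p_\theta(a \mid q)$.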

The original paper only explored applying the VOD objective to multiple-choice ODQA; this repo aims to explore generative tasks (generative QA, language modelling and chat). We are building tools that make training large generative search models possible and developer-friendly. The main modules are:

- `vod_dataloaders`: efficient `torch.utils.data.DataLoader` with dynamic retrieval from multiple search engines

- `vod_search`: a common interface to handle `sparse` and `dense` search engines (elasticsearch, faiss, Qdrant)

- `vod_models`: a collection of REALMs using large retrievers (T5s, e5, etc.) and open-source generative models (RWKV, LLAMA 2, etc.). We also include `vod_gradients`, a module to compute gradients of RAGs end-to-end.
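The `vod_search` item above describes one interface over sparse and dense engines. The following is a minimal, dependency-free sketch of that idea, not the `vod_search` API: `SearchEngine`, `SearchResult`, `ToySparseEngine` and `ToyDenseEngine` are hypothetical names, the sparse scorer is plain term overlap (not BM25), and the dense scorer is a raw inner product with a caller-supplied encoder.

```python
import abc
from collections import Counter
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class SearchResult:
    """Top-k section ids and their scores for a single query."""

    ids: List[int]
    scores: List[float]


class SearchEngine(abc.ABC):
    """Common interface: callers query sparse and dense backends the same way."""

    @abc.abstractmethod
    def search(self, query: str, top_k: int) -> SearchResult:
        ...


def _top_k(scores: List[float], k: int) -> SearchResult:
    """Keep the k highest-scoring section ids (ties keep corpus order)."""
    order = sorted(range(len(scores)), key=lambda i: -scores[i])[:k]
    return SearchResult(ids=order, scores=[scores[i] for i in order])


class ToySparseEngine(SearchEngine):
    """Stand-in for a sparse backend such as Elasticsearch: scores by term overlap."""

    def __init__(self, sections: List[str]) -> None:
        self.bags = [Counter(s.lower().split()) for s in sections]

    def search(self, query: str, top_k: int) -> SearchResult:
        terms = Counter(query.lower().split())
        scores = [float(sum(min(n, bag[t]) for t, n in terms.items())) for bag in self.bags]
        return _top_k(scores, top_k)


class ToyDenseEngine(SearchEngine):
    """Stand-in for a dense backend such as faiss or Qdrant: inner product of vectors."""

    def __init__(self, vectors: List[List[float]], encode: Callable[[str], List[float]]) -> None:
        self.vectors = vectors
        self.encode = encode  # hypothetical query encoder: text -> vector

    def search(self, query: str, top_k: int) -> SearchResult:
        q = self.encode(query)
        scores = [sum(a * b for a, b in zip(q, v)) for v in self.vectors]
        return _top_k(scores, top_k)
```

Because both classes implement the same `search(query, top_k)` method, downstream code (for example a dataloader) can hold a list of `SearchEngine` objects without caring which kind of index sits behind each one.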

Progress is tracked in VodLM#1.

The repo is currently in research preview. This means we already have a few components in place, but we still have work to do before VOD can be adopted more widely and before we can train next-gen REALMs. Our objectives for this summer are:

- Search API: add Filtering Capabilities

- Datasets: support more datasets for common IR & Gen AI

- UX: plug-and-play, extendable

- Modelling: implement REALM for Generative Tasks

- Gradients: VOD for Generative Tasks

If you also see great potential in combining LLMs with search components, join the team! We welcome developers interested in building scalable and easy-to-use tools as well as NLP researchers.

| Module | Usage | Status |
|---|---|---|
| `vod_configs` | Structured pydantic configurations | ✅ |
| `vod_dataloaders` | Dataloaders for retrieval-augmented tasks | ✅ |
| `vod_datasets` | Universal dataset interface (rosetta) and custom dataloaders (e.g., BeIR) | ✅ |
| `vod_exps` | Research experiments, configurable with hydra | ✅ |
| `vod_models` | A collection of REALMs + gradients (retrieval, VOD, etc.) | |
| `vod_ops` | ML operations using lightning.Fabric (training, benchmarking, indexing, etc.) | ✅ |
| `vod_search` | Hybrid and sharded search clients (elasticsearch, faiss and qdrant) | ✅ |
| `vod_tools` | A collection of utilities (pretty printing, argument parser, etc.) | ✅ |
| `vod_types` | A collection of data structures and python typing modules | ✅ |

Note: The code for the VOD gradient and sampling methods currently lives at VodLM/vod-gradients. That project is still under development and will be integrated into this repo in the next month.

We only support development mode for now. You need to install and run elasticsearch on your system. Then install poetry and the project using:

```shell
curl -sSL https://install.python-poetry.org | python3 -
poetry install
```

Note: See the tips and tricks section to build the latest faiss with CUDA support.

```shell
# How to load datasets with the universal `rosetta` interface
poetry run python -m examples.datasets.rosetta

# How to start and use a `faiss` search engine
poetry run python -m examples.search.faiss

# How to start and use a `qdrant` search engine
poetry run python -m examples.search.qdrant

# How to compute embeddings for a large dataset using `lightning.Fabric`
poetry run python -m examples.features.predict

# How to build a REALM dataloader backed by a hybrid search engine
poetry run python -m examples.features.dataloader
```
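The last example above builds a REALM dataloader. To illustrate what "dynamic retrieval" inside a dataloader means, here is a minimal sketch of a collate function that retrieves the top-k sections for each question while the batch is being built. It is not the `vod_dataloaders` implementation: `make_realm_collate`, the `retrieve` callable and the batch field names (`question`, `sections`, `scores`) are all hypothetical.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical retriever signature: (question, top_k) -> [(section_text, score), ...]
Retriever = Callable[[str, int], List[Tuple[str, float]]]
Batch = Dict[str, list]


def make_realm_collate(retrieve: Retriever, top_k: int) -> Callable[[Sequence[dict]], Batch]:
    """Build a collate_fn that attaches the top-k retrieved sections to each question."""

    def collate(examples: Sequence[dict]) -> Batch:
        batch: Batch = {"question": [], "sections": [], "scores": []}
        for example in examples:
            # Retrieval runs when the batch is built, so results reflect the
            # current state of the index rather than a precomputed lookup.
            hits = retrieve(example["question"], top_k)
            batch["question"].append(example["question"])
            batch["sections"].append([text for text, _ in hits])
            batch["scores"].append([score for _, score in hits])
        return batch

    return collate
```

With PyTorch installed, such a function would be passed as `torch.utils.data.DataLoader(dataset, batch_size=8, collate_fn=make_realm_collate(my_retriever, top_k=4))`, where `dataset` and `my_retriever` are placeholders.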

VOD allows training large retrieval models while dynamically retrieving sections from a large knowledge base. The CLI is accessible with:

```shell
poetry run train

# Debugging -- Run the training script with a small model and small dataset
poetry run train model/encoder=debug datasets=scifact
```

🔧 Arguments & config files

The train endpoint uses hydra to parse arguments and configure the run.

See vod_exps/hydra/main.yaml for the default configuration. You can override any of the default values by passing them as arguments to the train endpoint. For example, to train a T5-base encoder on MSMarco using FSDP:

```shell
poetry run train model/encoder=t5-base batch_size.per_device=4 datasets=msmarco fabric/strategy=fsdp
```

To work on an individual library:

```shell
# Navigate to the library you wish to work on (`cd libs/<library name>`)
python -m venv .venv
source .venv/bin/activate

# Install globally pinned pip
pip install -r $(git rev-parse --show-toplevel)/pip-requirements.txt

# Install shared development dependencies and project/library-specific dependencies
pip install -r $(git rev-parse --show-toplevel)/dev-requirements.txt -r requirements.txt

# Build and install the library (according to the pyproject.toml specification)
pip install -e .

# Use the following utilities to validate the state of the library
python -m black --check .
python -m flake8 .
python -m isort --check-only .
python -m pyright .
```

🐙 Multiple datasets & Sharded search

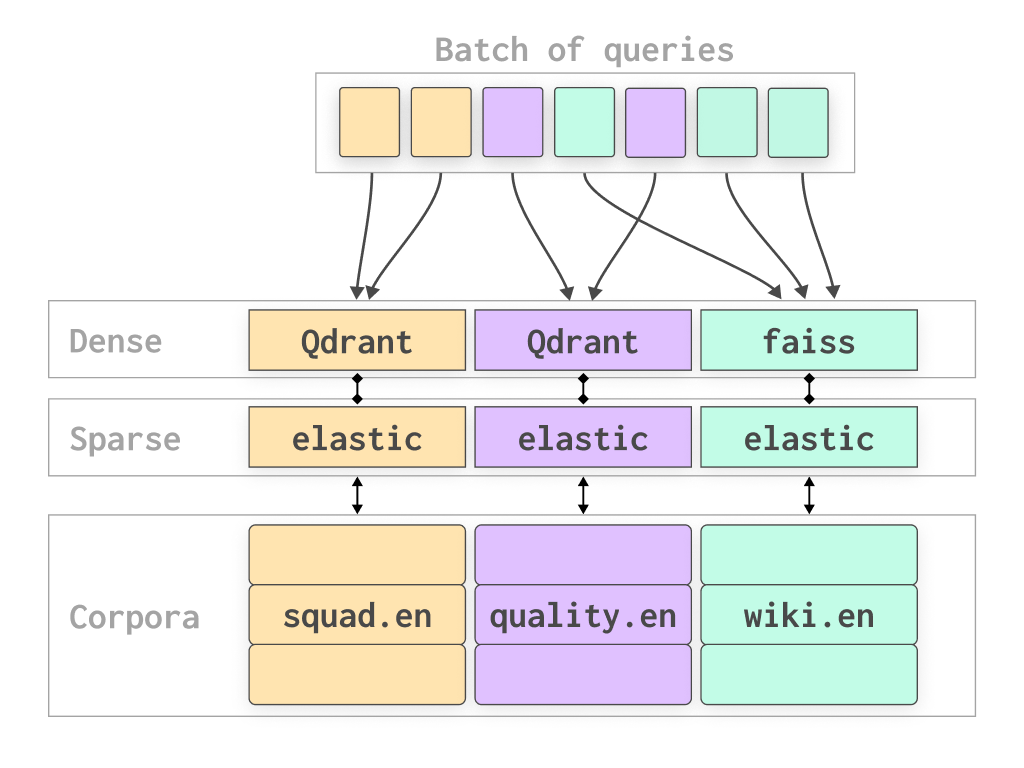

VOD is built for multi-dataset training. You can define multiple training/validation/test datasets, each pointing to a different corpus. Each corpus can be augmented with a specific search backend. For instance, a configuration can use Qdrant as the backend for the SQuAD sections and QuALITY contexts while using faiss to index Wikipedia.
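The Qdrant/faiss example above can be expressed as plain data. The sketch below is not the `vod_configs` schema (which uses pydantic); it uses dataclasses to stay dependency-free. `CorpusConfig`, `DatasetConfig`, `validate_links` and the corpus names are hypothetical; only the `link` field mirrors the `dataset.link` attribute mentioned below. The validation dataset pointing at Wikipedia is an invented example.

```python
from dataclasses import dataclass
from typing import Dict, List, Literal

Backend = Literal["elasticsearch", "faiss", "qdrant"]


@dataclass(frozen=True)
class CorpusConfig:
    name: str
    backend: Backend  # search backend used to index this corpus


@dataclass(frozen=True)
class DatasetConfig:
    name: str
    split: str
    link: str  # name of the corpus this dataset retrieves from


def validate_links(datasets: List[DatasetConfig], corpora: List[CorpusConfig]) -> Dict[str, Backend]:
    """Check that every dataset links to a known corpus; map 'name:split' to its backend."""
    backends = {c.name: c.backend for c in corpora}
    resolved: Dict[str, Backend] = {}
    for ds in datasets:
        if ds.link not in backends:
            raise ValueError(f"dataset {ds.name!r} links to unknown corpus {ds.link!r}")
        resolved[f"{ds.name}:{ds.split}"] = backends[ds.link]
    return resolved


corpora = [
    CorpusConfig("squad.sections", "qdrant"),
    CorpusConfig("quality.contexts", "qdrant"),
    CorpusConfig("wikipedia", "faiss"),
]
datasets = [
    DatasetConfig("squad", "train", link="squad.sections"),
    DatasetConfig("quality", "train", link="quality.contexts"),
    DatasetConfig("squad", "validation", link="wikipedia"),  # hypothetical pairing
]
```

Keeping the dataset-to-corpus link explicit means a typo in a corpus name fails at configuration time rather than at query time.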

VOD implements a hybrid sharded search engine: for each indexed corpus, VOD fits multiple search engines (e.g., Elasticsearch + Qdrant). At query time, data points are dispatched to each shard (corpus) based on the `dataset.link` attribute.
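The dispatch described in the previous paragraph can be sketched as follows: one shard per corpus, several engines per shard, and each data point routed by its `link` field. Everything here is hypothetical (`hybrid_search`, `sharded_search`, the engine callables and the `query`/`link` keys), and the weighted-sum score fusion is an assumption chosen for simplicity; VOD's actual way of combining engine scores may differ.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Tuple

# A (hypothetical) engine maps (query, top_k) to {section_id: score}.
Engine = Callable[[str, int], Dict[int, float]]
Hits = List[Tuple[int, float]]


def hybrid_search(engines: Dict[str, Engine], weights: Dict[str, float], query: str, top_k: int) -> Hits:
    """Query every engine of one shard and merge their scores with a weighted sum."""
    merged: Dict[int, float] = defaultdict(float)
    for name, engine in engines.items():
        for section_id, score in engine(query, top_k).items():
            merged[section_id] += weights.get(name, 1.0) * score
    return sorted(merged.items(), key=lambda kv: -kv[1])[:top_k]


def sharded_search(
    shards: Dict[str, Dict[str, Engine]],
    weights: Dict[str, float],
    batch: List[Dict[str, str]],
    top_k: int,
) -> List[Hits]:
    """Route each data point to the shard named by its `link`, keeping batch order."""
    by_shard: Dict[str, List[int]] = defaultdict(list)
    for i, point in enumerate(batch):
        by_shard[point["link"]].append(i)
    results: List[Hits] = [[] for _ in batch]
    for link, indices in by_shard.items():
        if link not in shards:
            raise KeyError(f"no search shard for corpus {link!r}")
        # Grouping lets a real backend receive one batched request per shard;
        # here queries are sent one at a time for simplicity.
        for i in indices:
            results[i] = hybrid_search(shards[link], weights, batch[i]["query"], top_k)
    return results
```

Results are written back by original index, so the caller gets one hit list per data point in the same order as the input batch, regardless of which shard served it.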

🐍 Set up a Mamba environment and build faiss-gpu from source

```shell
# Install mamba
curl -L -O "https://github.com/conda-forge/miniforge/releases/latest/download/Mambaforge-$(uname)-$(uname -m).sh"
bash Mambaforge-$(uname)-$(uname -m).sh

# Set up the base env: try to run it, or follow the script step by step
bash setup-scripts/setup-mamba-env.sh

# Build faiss: try to run it, or follow the script step by step
bash setup-scripts/build-faiss-gpu.sh

# Optional: install faiss-gpu in your poetry env
export PYPATH=$(poetry run which python)
(cd libs/faiss/build/faiss/python && $PYPATH setup.py install)
```

This repo is a clean rewrite of the original codebase, FindZebra/fz-openqa, aimed at handling larger datasets, larger models and generative tasks.

```bibtex
@InProceedings{pmlr-v202-lievin23a,
  title = {Variational Open-Domain Question Answering},
  author = {Li\'{e}vin, Valentin and Motzfeldt, Andreas Geert and Jensen, Ida Riis and Winther, Ole},
  booktitle = {Proceedings of the 40th International Conference on Machine Learning},
  pages = {20950--20977},
  year = {2023},
  editor = {Krause, Andreas and Brunskill, Emma and Cho, Kyunghyun and Engelhardt, Barbara and Sabato, Sivan and Scarlett, Jonathan},
  volume = {202},
  series = {Proceedings of Machine Learning Research},
  month = {23--29 Jul},
  publisher = {PMLR},
  pdf = {https://proceedings.mlr.press/v202/lievin23a/lievin23a.pdf},
  url = {https://proceedings.mlr.press/v202/lievin23a.html},
  abstract = {Retrieval-augmented models have proven to be effective in natural language processing tasks, yet there remains a lack of research on their optimization using variational inference. We introduce the Variational Open-Domain (VOD) framework for end-to-end training and evaluation of retrieval-augmented models, focusing on open-domain question answering and language modelling. The VOD objective, a self-normalized estimate of the Rényi variational bound, approximates the task marginal likelihood and is evaluated under samples drawn from an auxiliary sampling distribution (cached retriever and/or approximate posterior). It remains tractable, even for retriever distributions defined on large corpora. We demonstrate VOD’s versatility by training reader-retriever BERT-sized models on multiple-choice medical exam questions. On the MedMCQA dataset, we outperform the domain-tuned Med-PaLM by +5.3% despite using 2.500$\times$ fewer parameters. Our retrieval-augmented BioLinkBERT model scored 62.9% on the MedMCQA and 55.0% on the MedQA-USMLE. Last, we show the effectiveness of our learned retriever component in the context of medical semantic search.}
}
```

The project is currently supported by the Technical University of Denmark (DTU) and Raffle.ai.