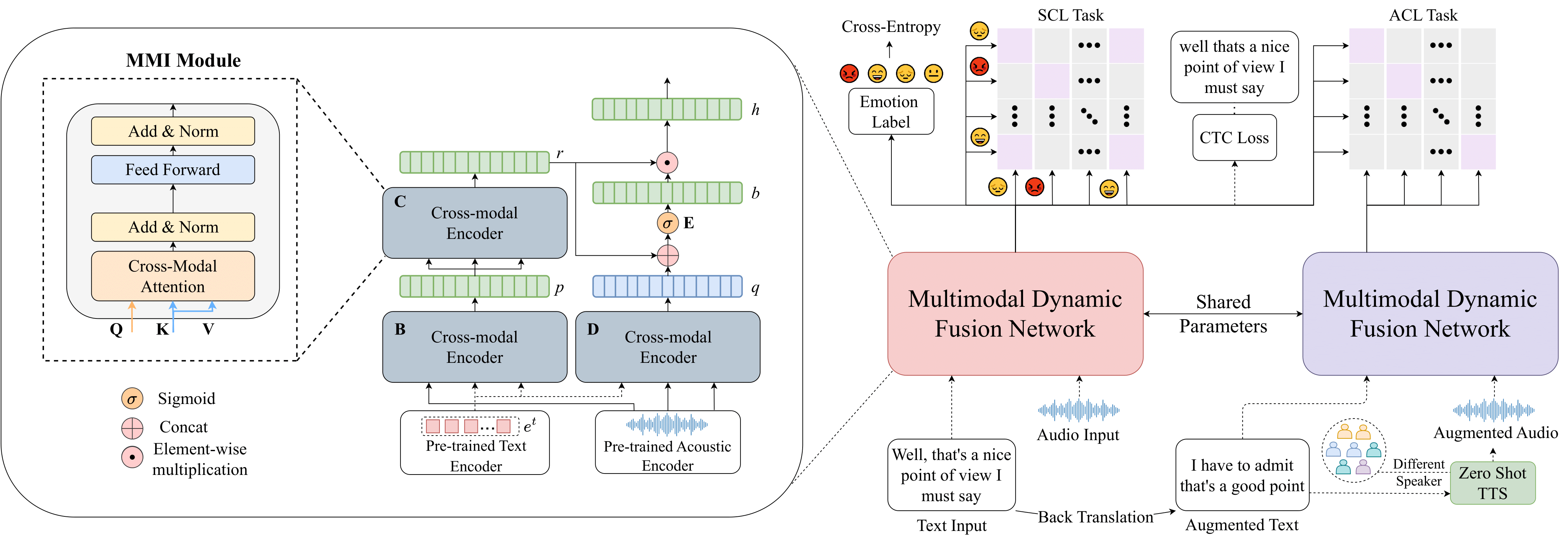

This repository contains the code for our Interspeech 2023 paper, MMER: Multimodal Multi-task Learning for Speech Emotion Recognition.

To run our model, please download and prepare the data according to the instructions below:

- Download the RoBERTa embeddings and unzip them in the `data/roberta` folder.
- Download the RoBERTa embeddings for augmentations and unzip them in the `data/roberta_aug` folder.
- Download the IEMOCAP dataset and put the tar file in the `data` folder. Then prepare and extract the IEMOCAP audio files in `data/iemocap` using the instructions in the `data_prep` folder.
- Download the IEMOCAP augmented files and put them in the `data/iemocap_aug` folder.
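Before training, it can help to confirm that the folders listed above exist and are not empty. The following is a minimal sketch, not part of this repository: the helper name `missing_data_dirs` is hypothetical, and the check only looks at the four directory names mentioned above, not at the files inside them.

```python
from pathlib import Path

# Directories named in the data preparation steps, relative to the repository root.
EXPECTED_DIRS = [
    "data/roberta",
    "data/roberta_aug",
    "data/iemocap",
    "data/iemocap_aug",
]


def missing_data_dirs(root="."):
    """Return the expected data directories that are absent or empty under root."""
    missing = []
    for rel in EXPECTED_DIRS:
        path = Path(root) / rel
        # A directory counts as prepared only if it exists and holds at least one entry.
        if not path.is_dir() or not any(path.iterdir()):
            missing.append(rel)
    return missing


if __name__ == "__main__":
    for rel in missing_data_dirs():
        print(f"missing or empty: {rel}")
```

Running the script from the repository root prints one line per folder that still needs to be prepared, and prints nothing when all four are populated.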

To train MMER, please execute:

sh run.sh

You can optionally change the hyper-parameters in run.sh. Some useful ones are listed below:

- `--lambda`: weight for auxiliary losses
- `--epochs`: number of epochs you want your model to train for (defaults to 100)
- `--save_path`: path to your saved checkpoints and logs (defaults to `output/`)
- `--batch_size`: batch size for training (defaults to 2)
- `--accum_iter`: number of gradient accumulation steps (scale accordingly with `--batch_size`)
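One way to read "scale accordingly" is to keep the effective batch size (`batch_size` multiplied by `accum_iter`) constant when changing `--batch_size`. The sketch below works under that assumption; the helper name `accum_steps_for` and the target of 16 in the usage example are hypothetical and are not taken from `run.sh`.

```python
def accum_steps_for(target_effective_batch, batch_size):
    """Return the accumulation steps that give batch_size * steps == target_effective_batch."""
    if batch_size <= 0 or target_effective_batch <= 0:
        raise ValueError("batch sizes must be positive")
    steps, remainder = divmod(target_effective_batch, batch_size)
    if remainder:
        # Refuse silently rounding, since that would change the effective batch size.
        raise ValueError("target_effective_batch must be a multiple of batch_size")
    return steps


if __name__ == "__main__":
    # Hypothetical target: an effective batch of 16 samples per optimizer step.
    for batch_size in (2, 4, 8):
        print(batch_size, accum_steps_for(16, batch_size))
```

For example, doubling `--batch_size` from 2 to 4 would halve `--accum_iter` from 8 to 4 under this hypothetical target, leaving the number of samples per optimizer step unchanged.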

We provide two checkpoints. Checkpoint 1 is trained on Sessions 2-5, while Checkpoint 2 is trained on Sessions 1, 3, 4, and 5. You can download these checkpoints for inference or use checkpoints from your own training run.

For inference, please execute:

bash infer.sh session_index /path/to/config /path/to/iemocap.csv /path/to/audio /path/to/roberta /path/to/checkpoint
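When running inference from a Python script (for instance, once per checkpoint), the command above can be assembled programmatically. This is a minimal sketch that assumes only the argument order shown above; the function names are hypothetical, and every path and the session index in the usage example are placeholders.

```python
import subprocess


def build_infer_command(session_index, config, csv_path, audio_dir, roberta_dir, checkpoint):
    """Assemble the infer.sh call with arguments in the order given in the README."""
    return [
        "bash",
        "infer.sh",
        str(session_index),
        str(config),
        str(csv_path),
        str(audio_dir),
        str(roberta_dir),
        str(checkpoint),
    ]


def run_inference(*args):
    """Launch infer.sh and raise CalledProcessError if it exits with a non-zero status."""
    return subprocess.run(build_infer_command(*args), check=True)


if __name__ == "__main__":
    # Placeholder values; substitute your own session index and paths.
    cmd = build_infer_command(
        1,
        "/path/to/config",
        "/path/to/iemocap.csv",
        "/path/to/audio",
        "/path/to/roberta",
        "/path/to/checkpoint",
    )
    print(" ".join(cmd))
```

Passing the arguments as a list rather than a single shell string avoids quoting problems when a path contains spaces.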

If you find this work useful, please cite our paper:

@inproceedings{ghosh23b_interspeech,

author={Sreyan Ghosh and Utkarsh Tyagi and S Ramaneswaran and Harshvardhan Srivastava and Dinesh Manocha},

title={{MMER: Multimodal Multi-task Learning for Speech Emotion Recognition}},

year=2023,

booktitle={Proc. Interspeech 2023},

}