This is the official PyTorch implementation of the paper: Conversational Image Search. Liqiang Nie, Fangkai Jiao, Wenjie Wang, Yinglong Wang, and Qi Tian. TIP 2021.

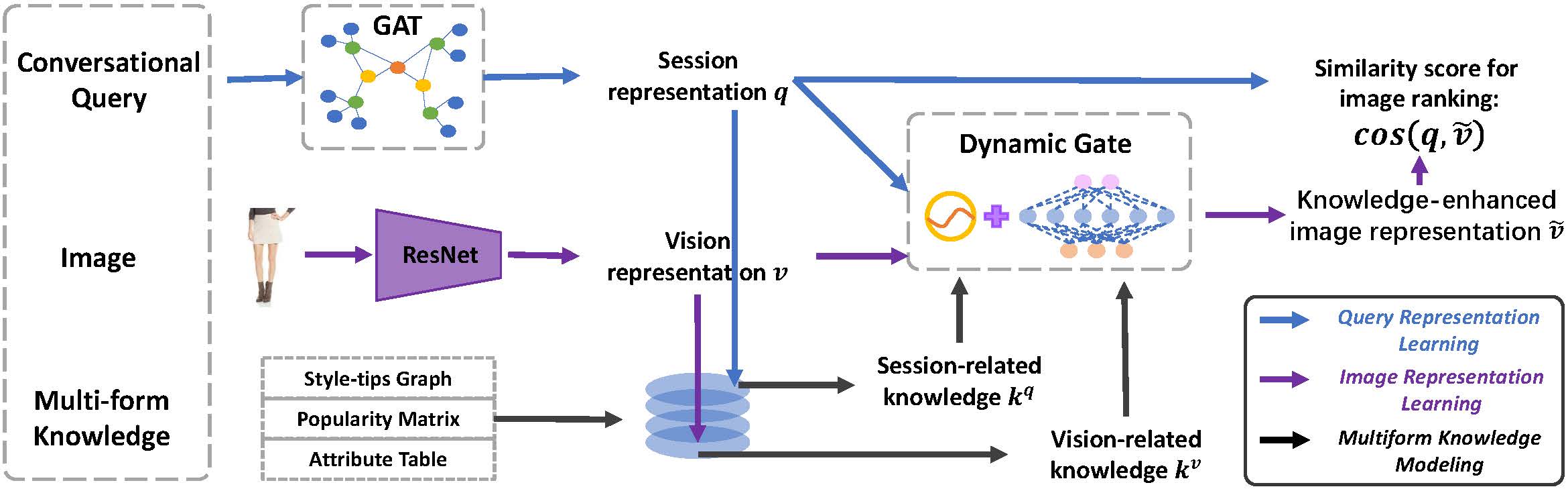

Conversational image search, a revolutionary search mode, interactively induces user responses to clarify their intents step by step. Several efforts have been dedicated to the conversation part, namely automatically asking the right question at the right time for user preference elicitation, while few studies focus on the image search part given a well-prepared conversational query. In this paper, we work towards conversational image search, which is much more difficult than the traditional image search task, due to the following challenges: 1) understanding complex user intents from a multimodal conversational query; 2) utilizing multiform knowledge associated with images from a memory network; and 3) enhancing the image representation with distilled knowledge. To address these problems, we present a novel contextuaL imAge seaRch sCHeme (LARCH for short), consisting of three components. In the first component, we design a multimodal hierarchical graph-based neural network, which learns the conversational query embedding for better user intent understanding. In the second, we devise a multi-form knowledge embedding memory network to unify heterogeneous knowledge structures into a homogeneous base that greatly facilitates relevant knowledge retrieval. In the third component, we learn the knowledge-enhanced image representation via a novel gated neural network, which selects the useful knowledge from the retrieved relevant knowledge. Extensive experiments have shown that LARCH yields significant performance improvements on an extended benchmark dataset. As a side contribution, we have released the data, code, and parameter settings to facilitate other researchers in the conversational image search community.

train_1.tar.gz (Extraction Code: wl8s)

train_2.tar.gz (Extraction Code: 324x)

valid.tar.gz (Extraction Code: kw5j)

test.tar.gz (Extraction Code: h5kr)

image_id.json (Extraction Code: 7zsp)

url2img.txt (Extraction Code: azdk)
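Once downloaded, the archives need to be unpacked before training. The following is a minimal sketch, assuming the four archives sit in one directory and are extracted side by side. The `extract_archives` helper and the `downloads`/`data` directory names are hypothetical and not part of this repository; adjust them to the paths the code expects.

```python
import tarfile
from pathlib import Path

# Archive names as listed above.
ARCHIVES = ["train_1.tar.gz", "train_2.tar.gz", "valid.tar.gz", "test.tar.gz"]


def extract_archives(src_dir, dst_dir, names=ARCHIVES):
    """Extract every listed archive found in src_dir into dst_dir.

    Returns the names of the archives that were actually extracted;
    archives that are missing from src_dir are skipped.
    """
    src, dst = Path(src_dir), Path(dst_dir)
    extracted = []
    for name in names:
        archive = src / name
        if not archive.is_file():
            continue
        dst.mkdir(parents=True, exist_ok=True)
        with tarfile.open(archive, "r:gz") as tar:
            tar.extractall(dst)
        extracted.append(name)
    return extracted


# Usage (hypothetical layout, not executed here):
#   extract_archives("downloads", "data")
```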

pip install -r requirements.txt

CUDA_VISIBLE_DEVICES=0 python main.py train_dgl

The hyper-parameters can be found in constants.py. Here are some details:

DISABLE_STYLETIPS = False # If `True`, the `style tips` knowledge is removed.

DISABLE_ATTRIBUTE = False # If `True`, the `attribute` knowledge is removed.

DISABLE_CELEBRITY = False # If `True`, the `celebrity` knowledge is removed.

IMAGE_ONLY = False # If `True`, all forms of knowledge will be removed.
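How these four switches combine can be sketched as follows. This assumes that `IMAGE_ONLY` overrides the three per-form flags, as its comment suggests; the `active_knowledge_forms` helper is hypothetical, and the actual logic in this repository may differ.

```python
def active_knowledge_forms(disable_styletips=False,
                           disable_attribute=False,
                           disable_celebrity=False,
                           image_only=False):
    """Return the knowledge forms left enabled for a given flag setting."""
    if image_only:
        # Assumption: IMAGE_ONLY removes all forms of knowledge at once.
        return []
    forms = []
    if not disable_styletips:
        forms.append("style tips")
    if not disable_attribute:
        forms.append("attribute")
    if not disable_celebrity:
        forms.append("celebrity")
    return forms


# Example: an ablation run without celebrity knowledge.
print(active_knowledge_forms(disable_celebrity=True))
# → ['style tips', 'attribute']
```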

# Ablation study

KNOWLEDGE_TYPE = 'bi_g_wo_img' # LARCH w/o vision-aware knowledge.

KNOWLEDGE_TYPE = 'bi_g_wo_que' # LARCH w/o query-aware knowledge.

To train the model employing the multimodal hierarchical encoder (MHRED) as query encoder, use the following command:

CUDA_VISIBLE_DEVICES=0 python main.py train_text

CUDA_VISIBLE_DEVICES=0 python main.py eval_graph

To evaluate the performance of LARCH w/o GRAPH, use the following command:

CUDA_VISIBLE_DEVICES=0 python main.py eval_text

Before evaluating, change the saved checkpoint path in the evaluator to the path of the model to be evaluated.
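The training and evaluation commands above differ only in the mode passed to `main.py`. Below is a minimal sketch of a launcher that builds the same commands, assuming it is run from the repository root. `build_command` is a hypothetical helper and not part of this repository.

```python
import os
import sys

# Modes taken from the commands in this README.
MODES = ("train_dgl", "train_text", "eval_graph", "eval_text")


def build_command(mode, gpu=0):
    """Return (argv, env) for running main.py in the given mode on one GPU."""
    if mode not in MODES:
        raise ValueError(f"unknown mode: {mode!r}")
    # Copy the current environment and pin the visible GPU.
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=str(gpu))
    argv = [sys.executable, "main.py", mode]
    return argv, env


# Usage (not executed here):
#   import subprocess
#   argv, env = build_command("eval_graph", gpu=0)
#   subprocess.run(argv, env=env, check=True)
```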

For any questions, please contact: jiaofangkai [AT] hotmail [DOT] com

If this work is helpful, please cite it:

@ARTICLE{conv-img-search-nie-2021,

author={Nie, Liqiang and Jiao, Fangkai and Wang, Wenjie and Wang, Yinglong and Tian, Qi},

journal={IEEE Transactions on Image Processing},

title={Conversational Image Search},

year={2021},

volume={},

number={},

pages={1-1},

doi={10.1109/TIP.2021.3108724}}