The idea here is to use QGIS to style the images and then use the QGIS Python API to create the tiles.

- Docker is installed
- AWS CLI is installed and configured
- Linux is the operating system (or a macOS terminal)
- Using Amazon Web Services
- Automate copying GeoTIFF SouthFACT change images from Drive to AWS
- Automate GeoTIFF SouthFACT change images from AWS
- Automate upload to AWS
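
Before running anything, a quick check that the command-line prerequisites are on the `PATH` can save a failed run. This is a minimal sketch: `require_commands` is a hypothetical helper name, and it only checks that each program exists, not that the AWS CLI is configured.

```shell
# Hypothetical helper: report every required program that is not on PATH.
# Returns non-zero if at least one is missing.
require_commands() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" > /dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `require_commands docker aws || exit 1` at the top of a script. To confirm the AWS CLI is also configured, `aws sts get-caller-identity` should print the account and identity it will act as.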

Run the following scripts from the terminal or schedule them as a cron job. For now, run each one separately unless you are running on an extra-large AWS server.

```shell
./process_latest_change_swirall.sh
./process_latest_change_ndvi.sh
./process_latest_change_swirthreshold.sh
./process_latest_change_ndmi.sh
```
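
Since the scripts are best run one at a time, one option for cron is a wrapper that runs them in sequence and stops at the first failure. This is a sketch under assumptions: the function name `run_change_jobs` is hypothetical, and it assumes all four scripts sit in one directory and may rely on being run from it.

```shell
# Hypothetical wrapper: run the four change scripts one after another, never in
# parallel, and stop at the first one that fails.
run_change_jobs() {
  local dir="${1:-.}" script
  for script in \
      process_latest_change_swirall.sh \
      process_latest_change_ndvi.sh \
      process_latest_change_swirthreshold.sh \
      process_latest_change_ndmi.sh; do
    echo "running ${script}"
    # Run from the scripts' own directory in a subshell, in case they use relative paths.
    if ! (cd "$dir" && "./${script}"); then
      echo "failed: ${script}" >&2
      return 1
    fi
  done
}
```

A single cron entry that sources this function and calls `run_change_jobs /path/to/scripts` (a placeholder path) keeps the four jobs from running at the same time.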

- Create the S3 bucket (only do this once). It's a good idea to name the bucket after the domain and subdomain it will be served from, e.g. tiles.southfact.com.
- Upload the entire folder created by the processing scripts.
- Use the AWS CLI to do this.
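
With the AWS CLI, creating the bucket and uploading the tile folder come down to `aws s3 mb` and `aws s3 sync`. The sketch below wraps them in two functions; the function names, the `AWS_CLI` override (so the commands can be printed instead of run), and the local folder name are assumptions, not part of the original scripts.

```shell
# Hypothetical wrappers around the AWS CLI. Set AWS_CLI=echo to print the
# commands instead of running them.
create_tile_bucket() {
  # Only needed once per bucket; uses the CLI's configured default region.
  local bucket="$1"
  "${AWS_CLI:-aws}" s3 mb "s3://${bucket}"
}

upload_tiles() {
  # Copy new and changed files from the local tile folder to the bucket root.
  local tile_dir="$1" bucket="$2"
  "${AWS_CLI:-aws}" s3 sync "$tile_dir" "s3://${bucket}/"
}
```

For example, `upload_tiles ./tiles tiles.southfact.com`, where `./tiles` stands in for whatever folder the scripts produced. Because `sync` skips files that are already up to date, re-running it after a new batch uploads only the changed tiles.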

The process removes all blank images from the tile cache to save space. It is up to you to redirect requests for the missing tiles to a single blank.png.
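
The processing scripts already handle this step. As an illustration of one way blank tiles can be pruned, the sketch below deletes every PNG tile that is byte-for-byte identical to a reference blank tile. It assumes all empty tiles are written identically (same size and render settings), which may not hold for every renderer; `remove_blank_tiles` is a hypothetical name. Files named `blank.png` are skipped so the reference tile survives if it sits inside the tile folder.

```shell
# Hypothetical helper: delete every .png tile under TILE_DIR whose bytes match
# the reference blank tile, then print how many were removed.
# Files named blank.png are never deleted.
remove_blank_tiles() {
  local tile_dir="$1" blank="$2"
  find "$tile_dir" -type f -name '*.png' ! -name 'blank.png' \
    -exec cmp -s "$blank" {} \; -print -delete | wc -l | tr -d ' '
}
```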

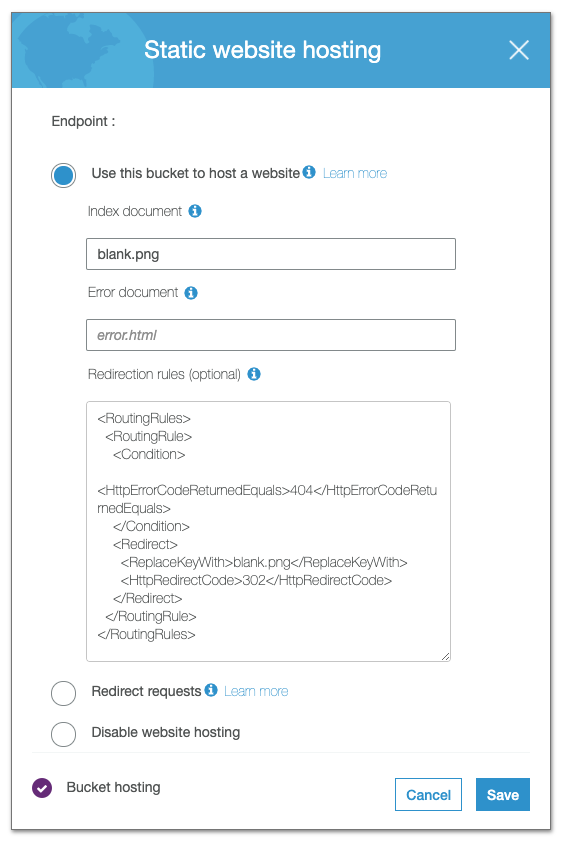

In Amazon S3, we do this in the bucket properties, under static website hosting, by adding this redirection rule:

```xml
<RoutingRules>
  <RoutingRule>
    <Condition>
      <HttpErrorCodeReturnedEquals>404</HttpErrorCodeReturnedEquals>
    </Condition>
    <Redirect>
      <ReplaceKeyWith>blank.png</ReplaceKeyWith>
      <HttpRedirectCode>302</HttpRedirectCode>
    </Redirect>
  </RoutingRule>
</RoutingRules>
```

Set blank.png as the index document. (blank.png must be in the root of the bucket hosting the tile directories.)
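
The same settings can be applied from the AWS CLI with `aws s3api put-bucket-website`, which takes the website configuration as JSON. A minimal sketch, assuming the tiles.southfact.com bucket; the function names and the `AWS_CLI` override are hypothetical. This call replaces the bucket's entire website configuration, so any other rules already on the bucket would need to be included in the JSON.

```shell
# Hypothetical helper: write the S3 website configuration (index document plus
# the 404 -> blank.png redirect rule) as JSON to the given file.
write_website_config() {
  cat > "$1" <<'EOF'
{
  "IndexDocument": { "Suffix": "blank.png" },
  "RoutingRules": [
    {
      "Condition": { "HttpErrorCodeReturnedEquals": "404" },
      "Redirect": { "ReplaceKeyWith": "blank.png", "HttpRedirectCode": "302" }
    }
  ]
}
EOF
}

# Apply the configuration file to a bucket. Set AWS_CLI=echo to print the
# command instead of running it.
apply_website_config() {
  local bucket="$1" config="$2"
  "${AWS_CLI:-aws}" s3api put-bucket-website \
    --bucket "$bucket" --website-configuration "file://${config}"
}
```

For example: `write_website_config website.json && apply_website_config tiles.southfact.com website.json`.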

Set environment variables with the access key ID and secret access key of the ECR user, using the correct values. Do not commit these keys to GitHub.

```shell
# Placeholders: substitute the ECR user's real values.
AWS_ACCESS_KEY_ID="[the correct AWS aws_access_key_id]"
AWS_SECRET_ACCESS_KEY="[the correct AWS aws_secret_access_key]"

# Log Docker in to the ECR registry.
aws ecr get-login-password --region us-east-1 --profile southfactecr \
  | docker login --username AWS --password-stdin 937787351555.dkr.ecr.us-east-1.amazonaws.com

# Build the image, passing the credentials in as build arguments.
docker build -t test-tiles-img . \
  --build-arg AWS_ACCESS_KEY_ID="$AWS_ACCESS_KEY_ID" \
  --build-arg AWS_SECRET_ACCESS_KEY="$AWS_SECRET_ACCESS_KEY"

# Tag the image for the ECR repository and push it.
docker tag test-tiles-img 937787351555.dkr.ecr.us-east-1.amazonaws.com/tiles-test
docker push 937787351555.dkr.ecr.us-east-1.amazonaws.com/tiles-test
```
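
Docker does not complain when a `--build-arg` value is empty, so a missing credential variable would otherwise go unnoticed until the container runs. A small guard before the build catches that; `check_aws_env` is a hypothetical name, and it only checks that the variables are non-empty, not that the keys are valid.

```shell
# Hypothetical guard: fail before the docker build if either AWS credential
# variable is unset or empty, naming each one that is missing.
check_aws_env() {
  local status=0
  if [ -z "${AWS_ACCESS_KEY_ID:-}" ]; then
    echo "AWS_ACCESS_KEY_ID is not set" >&2
    status=1
  fi
  if [ -z "${AWS_SECRET_ACCESS_KEY:-}" ]; then
    echo "AWS_SECRET_ACCESS_KEY is not set" >&2
    status=1
  fi
  return "$status"
}
```

For example, run `check_aws_env && docker build ...` in the same shell where the variables were set.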