This project tests whether static word embeddings, trained with word2vec on parallel Bible translations, are enough to cluster languages according to traditional language families. Models are trained on the same sections of the Bible (approximately 30k segmented lines), with the same hyperparameters, for a relatively short period of time.

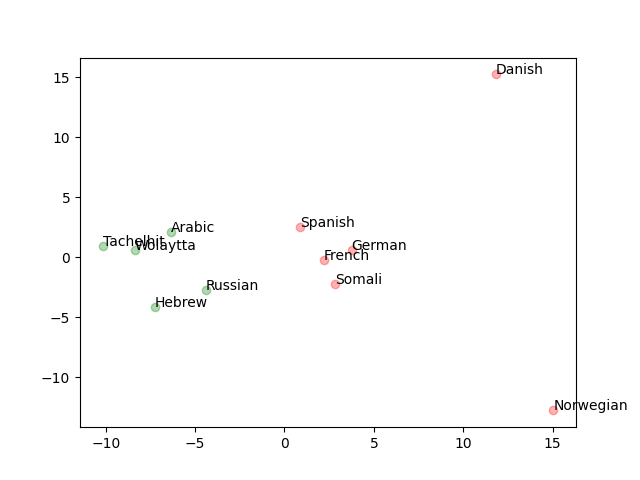

A first attempt at separating some Indo-European and Afro-Asiatic languages (with some errors).

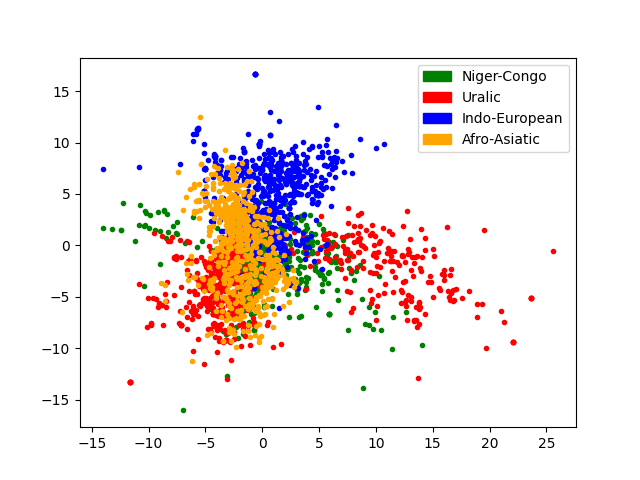

PCA plot of 100 observations for three languages in each of the families: Niger-Congo, Uralic, Indo-European, Afro-Asiatic.

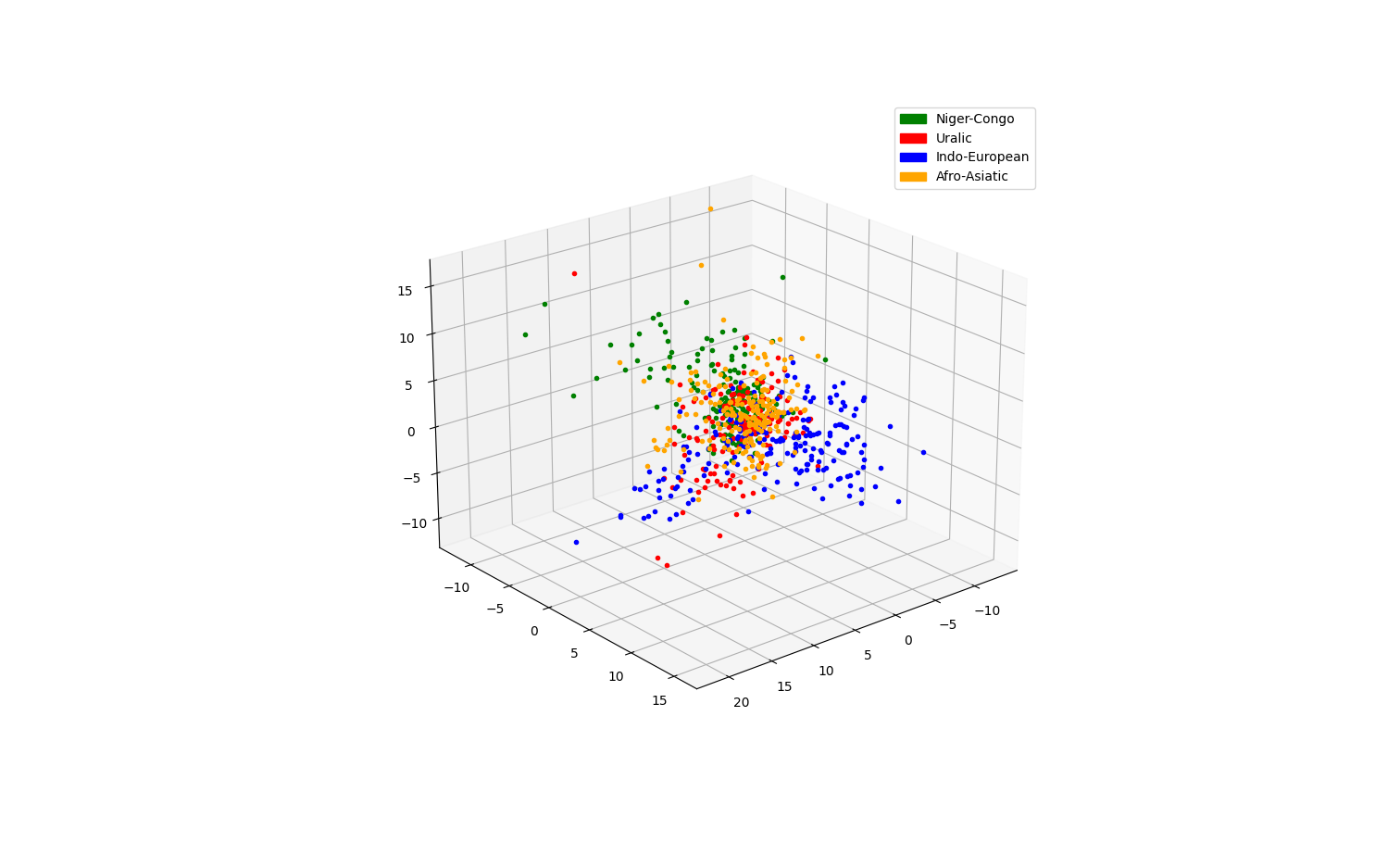

A 3D PCA plot of 50 observations for 3-5 languages in each of the families: Niger-Congo, Uralic, Indo-European, Afro-Asiatic.

- How does the embedding size influence the clustering?

- Differences between t-SNE and PCA for dimensionality reduction on this type of data.

- The impact of the number of components during dimensionality reduction.

- Differences between clustering algorithms.

- Latin/Non-Latin scripted languages.

- Other types of genus and sub-genus.

- Why is the Danish translation so off?

- What type of conclusions can be drawn from these groupings?

- Other types of pooling.

- Cluster on something other than the averaged Bible vector per language.

I'm using the translations made available through the paper *A massively parallel corpus: the Bible in 100 languages*, by Christos Christodoulopoulos and Mark Steedman. The paper can be read here, and the data is available through the NLPL. To run the scripts, download the languages you would like to work with through that URL and place the extracted folder in the project root. For the languages that have been translated using MT, remove the `-MT` suffix from the XML file.
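
If there are many machine-translated files, the renaming can be scripted. The following is a minimal sketch, assuming the files are named like `<Language>-MT.xml` and sit directly in the extracted folder; both the naming pattern and the helper `strip_mt_suffix` are illustrative assumptions, not part of the project's scripts.

```python
from pathlib import Path


def strip_mt_suffix(corpus_dir):
    """Rename every '<name>-MT.xml' in corpus_dir to '<name>.xml'.

    Assumes the '-MT' marker sits right before the '.xml' extension
    (a guess at the naming scheme). Returns the new file names.
    """
    renamed = []
    for path in sorted(Path(corpus_dir).glob("*-MT.xml")):
        target = path.with_name(path.name[: -len("-MT.xml")] + ".xml")
        if target.exists():
            # Never overwrite an existing translation with the same name.
            continue
        path.rename(target)
        renamed.append(target.name)
    return renamed
```

Calling `strip_mt_suffix("bibles")` (with `bibles` standing in for whatever the extracted folder is called) renames the matching files in place and leaves all other files untouched.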

As of now:

- Parse source XML to JSON and serialize into a line-by-line corpus.

- Train a word2vec model either with skipgram or CBOW.

- Create averaged vectors of a sample of lines from the Bible per language (averaged over words).

- Do PCA on the embeddings.

- (Cluster using KMeans or Spectral Clustering)

- Plot the PCA results, coloured by the original gold label (the actual language family)

- Metric of success: Do the colours cluster together? If so, something interesting might be happening despite random initialization and a relatively small corpus per language. If the modeled languages align with the traditional affiliations, it would suggest that these traditional language families can be reconstructed from distributional semantics alone; that is, the way words in each language's own vocabulary relate to each other would differ between families.
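
The pooling step and the success check in the list above can be sketched in plain Python. This is a toy illustration under stated assumptions, not the project's code: `word_vectors` is a hypothetical dict standing in for a trained word2vec lookup, and `purity` is one possible way to put a number on "do the colours cluster together", which the project itself judges from the plots. The PCA and clustering steps are left out.

```python
from collections import Counter, defaultdict


def mean_vector(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def line_vector(line, word_vectors):
    """Average the vectors of the in-vocabulary words on one line.

    Returns None when no word on the line has a vector.
    """
    found = [word_vectors[w] for w in line.split() if w in word_vectors]
    return mean_vector(found) if found else None


def language_vector(lines, word_vectors):
    """Average the per-line vectors into a single vector for a language."""
    pooled = [line_vector(line, word_vectors) for line in lines]
    return mean_vector([v for v in pooled if v is not None])


def purity(cluster_ids, gold_labels):
    """Share of items that carry the majority gold label of their cluster.

    1.0 means every cluster contains a single language family.
    """
    members = defaultdict(list)
    for cluster, gold in zip(cluster_ids, gold_labels):
        members[cluster].append(gold)
    majority = sum(Counter(m).most_common(1)[0][1] for m in members.values())
    return majority / len(gold_labels)


# Hypothetical 2-dimensional "embeddings" for three words.
toy_vectors = {"in": [1.0, 0.0], "the": [0.0, 1.0], "beginning": [1.0, 1.0]}
toy_language = language_vector(["in the", "beginning"], toy_vectors)

# Two clusters over four languages; one Uralic language lands in the wrong one.
toy_purity = purity(
    [0, 0, 1, 1],
    ["Uralic", "Uralic", "Niger-Congo", "Uralic"],
)
```

With real data, the per-language (or per-line) vectors would go through PCA before plotting, and `cluster_ids` would come from KMeans or spectral clustering rather than being written by hand.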