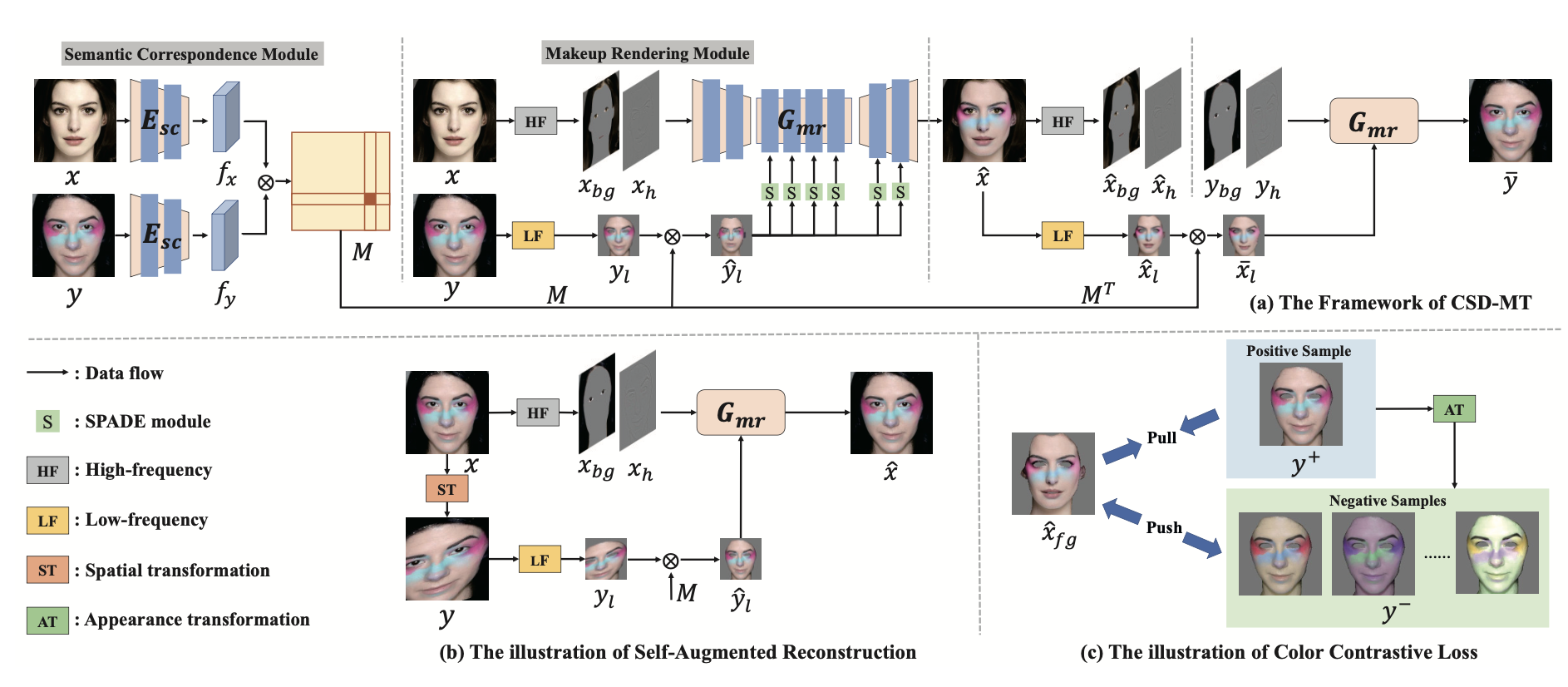

[CVPR2024] Content-Style Decoupling for Unsupervised Makeup Transfer without Generating Pseudo Ground Truth

Zhaoyang Sun, Shengwu Xiong, Yaxiong Chen, Yi Rong

https://arxiv.org/abs/2405.17240

We recommend simply using your own PyTorch environment, since the model has very few dependencies. If you do so, you can skip the environment setup below.

A suitable conda environment named CSDMT can be created and activated with:

conda env create -f environment.yaml

conda activate CSDMT
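If you use your own PyTorch environment instead, the sketch below is one quick way to confirm that the main dependencies are importable before launching the demo. The module list is an assumption inferred from this README (PyTorch for the model, `dlib` for the `lms.dat` landmark file, `gradio` for the demo script); `environment.yaml` remains the authoritative dependency list.

```python
import importlib.util

# Assumed dependency list, inferred from this README; environment.yaml is authoritative.
REQUIRED_MODULES = ["torch", "dlib", "gradio"]


def missing_modules(names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules(REQUIRED_MODULES)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All assumed dependencies are importable.")
```

`find_spec` only locates each module without importing it, so the check stays fast even for heavy packages such as PyTorch.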

The pre-trained models can be downloaded from Baidu Drive (password: 1d3e) or Google Drive.

Please put CSD_MT.pth in CSD_MT/weights/CSD_MT.pth

Put lms.dat in faceutils/dlibutils/lms.dat

Put 79999_iter.pth in faceutils/face_parsing/res/cp/79999_iter.pth
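A small, hypothetical helper like the one below can verify that the three downloaded files are in place before you start the demo. It assumes the paths are relative to the directory from which the demo is launched, and that the face-parsing checkpoint lives under `faceutils/`, like `lms.dat`; the function name `find_missing_weights` is illustrative only.

```python
from pathlib import Path

# Expected locations from this README, relative to the directory
# where gradio_makeup_transfer.py is run (assumption).
EXPECTED_WEIGHTS = [
    "CSD_MT/weights/CSD_MT.pth",
    "faceutils/dlibutils/lms.dat",
    "faceutils/face_parsing/res/cp/79999_iter.pth",
]


def find_missing_weights(root="."):
    """Return the expected weight files that do not exist under root."""
    base = Path(root)
    return [rel for rel in EXPECTED_WEIGHTS if not (base / rel).is_file()]


if __name__ == "__main__":
    missing = find_missing_weights()
    for rel in missing:
        print("Missing:", rel)
    if not missing:
        print("All weight files are in place.")
```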

Our model has low computational complexity and modest resource requirements; we have only tested the code on CPU. In the current directory, run the following command:

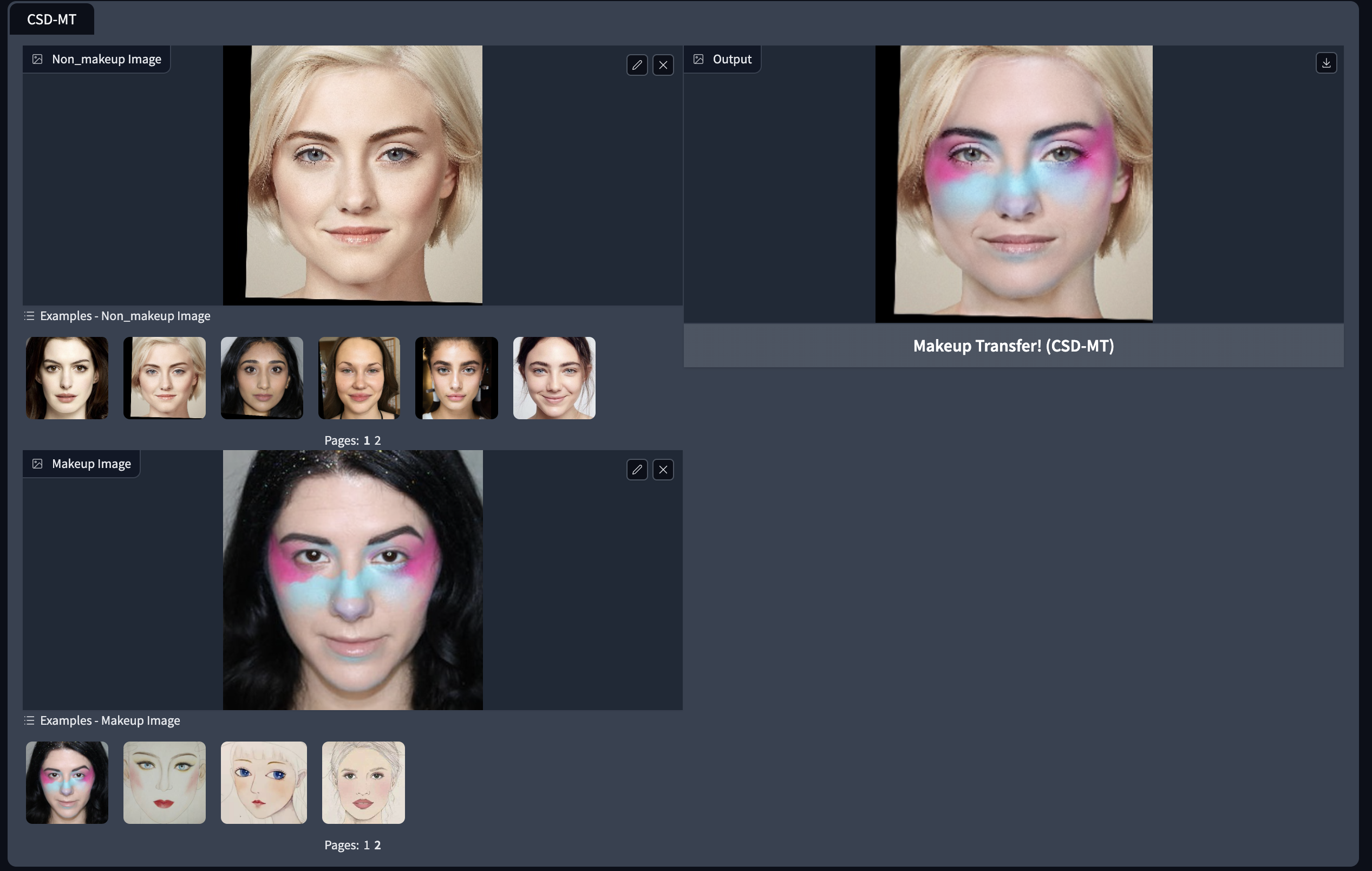

python gradio_makeup_transfer.py

Then upload your own images in the Gradio interface shown above.

If this work is helpful for your research, please consider citing the following BibTeX entry.

@article{sun2024content,

title={Content-Style Decoupling for Unsupervised Makeup Transfer without Generating Pseudo Ground Truth},

author={Sun, Zhaoyang and Xiong, Shengwu and Chen, Yaxiong and Rong, Yi},

journal={Proceedings of the IEEE/CVF conference on computer vision and pattern recognition},

year={2024}

}

Parts of the code are built upon PSGAN, Face Parsing, and aster.Pytorch.

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.