This project contains code to run SimPLU3D on very large zones, with the computation distributed by the OpenMOLE project. Several scripts have been prepared to carry out this workflow, and the following documentation explains how to run them. Some of the steps were prepared specifically for an experiment with specific data. The others can be run on any data that meets a few simple specifications.

Some results of this work can be found in this video or in this publication:

Brasebin, M., P. Chapron, G. Chérel, M. Leclaire, I. Lokhat, J. Perret and R. Reuillon (2017). Apports des méthodes d’exploration et de distribution appliquées à la simulation des droits à bâtir. Actes du Colloque International de Géomatique et d'Analyse Spatiale (SAGEO 2017). Article, Presentation

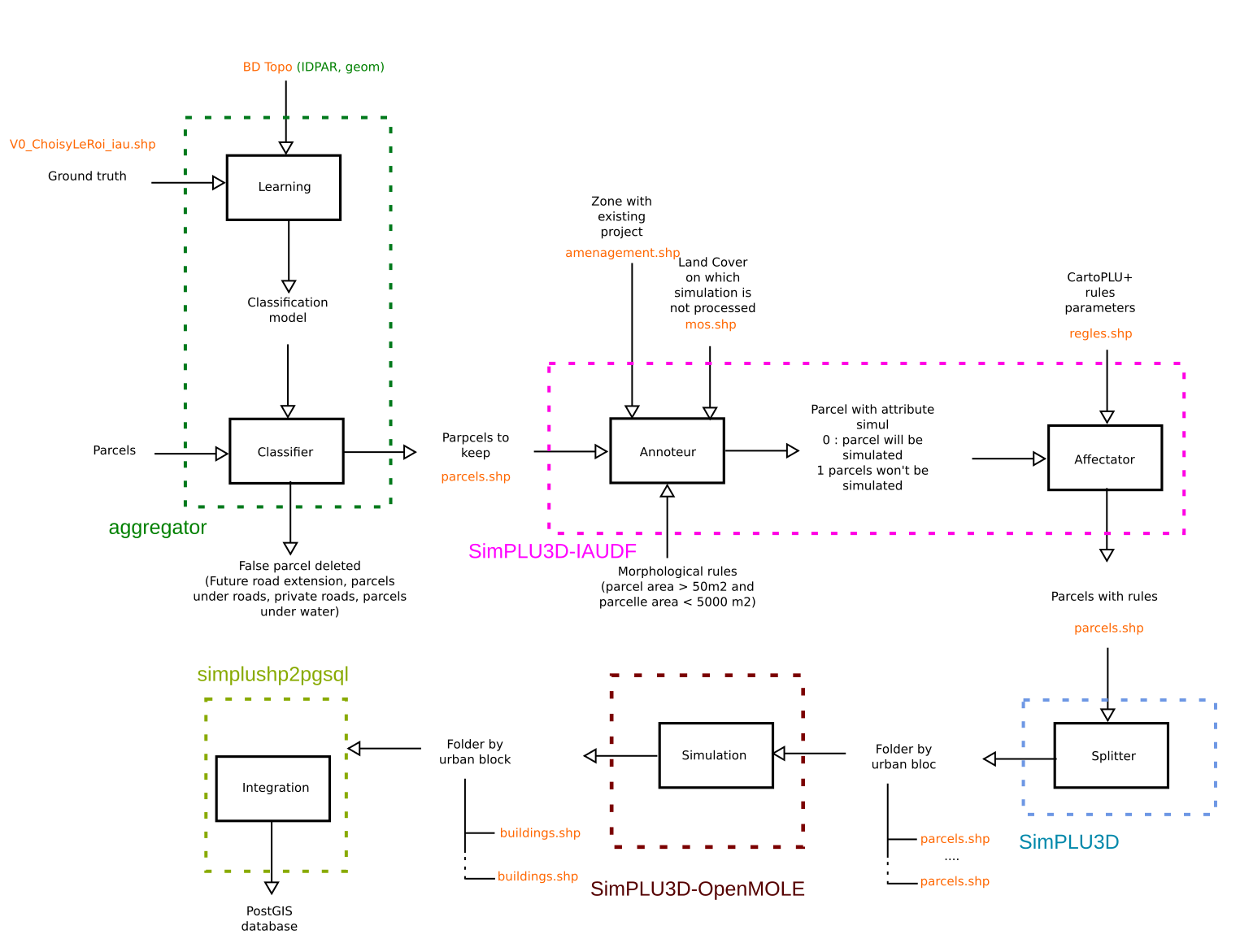

The following image presents the global workflow (you can click on it to get a full resolution image)

The workflow is composed of five major steps:

- Learning/Classifier (aggregator box): this algorithm detects and deletes false parcels, using geographic data and a ground truth;

- Annotator/affectator (SimPLU3D-IAIUDF box): this step 1/ determines which parcels have to be simulated from land cover and 2/ assigns rule parameters to parcels;

- Splitter (SimPLU3D box): this step splits the parcels into packages, each holding at most a given number of simulable parcels;

- Simulation (SimPLU3D-OpenMOLE box): this step distributes the computation with the OpenMOLE project;

- Integration (simplushp2pgsql): integration of the simulation results into a PostGIS database.

Several scripts are necessary to run this workflow. To get all of them, you have to:

- 1 - Download or clone the content of this repository;

- 2 - Run get_scripts.sh, which downloads the scripts that are not hosted in this repository.
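As a minimal sketch of the second point, the snippet below runs get_scripts.sh from the root of the cloned repository and then checks that the folders mentioned later in this documentation are present. It assumes that the downloaded folders land in the repository root; the `missing_dirs` helper is hypothetical and not part of the project.

```shell
#!/usr/bin/env bash
# Folders that this documentation expects get_scripts.sh to download.
# Assumption: they are created in the repository root.
expected_dirs=("aggregator-1.0" "SimPLU3D-openmole-release")

# Print the name of every expected folder that is missing under a given root.
missing_dirs() {
  local root="$1" d
  for d in "${expected_dirs[@]}"; do
    [ -d "$root/$d" ] || echo "$d"
  done
}

# Only run the download when the script is actually there.
if [ -f ./get_scripts.sh ]; then
  bash ./get_scripts.sh
  missing="$(missing_dirs .)"
  [ -z "$missing" ] || echo "Not found after download: $missing" >&2
fi
```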

The Learning/Classifier task learns to detect false parcels in a parcel shapefile. The script is available in the aggregator folder downloaded by get_scripts.sh.

To run it:

./aggregator-1.0/bin/aggregator /path/to/BD_Topo/ /path/to/Cadastre/to_simulate/ /path/to/ground_truth/ ./temp/ ./output_workflow/parcels.shp

where:

- /path/to/Cadastre/to_simulate/ : the path to a cadastral parcel shapefile. It can be downloaded from the ETALAB repositories. This dataset determines the area on which the simulation will be processed;

- /path/to/ground_truth/ : the ground truth folder used to detect whether a parcel is buildable or not (cf. the image). It has a buildable attribute whose value is 0 or 1 depending on whether the parcel is real or not. The area of the ground truth must be included in the simulation zone and the file has to be named parcelles.shp;

- /path/to/BD_Topo/ : a set of topographic data produced by the French IGN. More information is available on the dedicated website;

- ./temp/ : a temporary folder that will contain intermediary results ;

- ./output_workflow/parcels.shp : the result of the process that can be used in the following task.
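The call above can be wrapped in a small script that checks the ground truth naming constraint before starting. This is only a sketch: the variable names and the `has_ground_truth` and `run_aggregator` helpers are hypothetical, the paths are the placeholders used above, and creating the temporary and output folders beforehand is an assumption about what the aggregator needs.

```shell
#!/usr/bin/env bash
# Placeholder paths, to be replaced with real locations.
BD_TOPO="/path/to/BD_Topo/"
CADASTRE="/path/to/Cadastre/to_simulate/"
GROUND_TRUTH="/path/to/ground_truth/"
TEMP_DIR="./temp/"
OUTPUT="./output_workflow/parcels.shp"

# Succeeds only if the ground truth folder contains parcelles.shp,
# the file name required by this step.
has_ground_truth() {
  [ -f "$1/parcelles.shp" ]
}

# Check the inputs, prepare the folders, then call the aggregator
# with the arguments in the order documented above.
run_aggregator() {
  has_ground_truth "$GROUND_TRUTH" || {
    echo "parcelles.shp not found in $GROUND_TRUTH" >&2
    return 1
  }
  mkdir -p "$TEMP_DIR" "$(dirname "$OUTPUT")"
  ./aggregator-1.0/bin/aggregator "$BD_TOPO" "$CADASTRE" "$GROUND_TRUTH" "$TEMP_DIR" "$OUTPUT"
}

# Only run when the aggregator has actually been downloaded.
if [ -x ./aggregator-1.0/bin/aggregator ]; then
  run_aggregator
fi
```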

The Annotator/affectator step determines which parcels have to be simulated according to a land cover dataset and morphological indicators. It is carried out with a PostGIS database. The scripts are in the iauidf_prepare_parcels_for_zone_packer/ directory.

Step 1 : Creation of a PostGIS database.

Step 2 : Import of the data in PostGIS

You have to run the create_parcelles_rules.sh script, after updating the following settings in it:

- the PostGIS connection information;

- parcelle_shape="parcels_94.shp" : the parcel file produced during the previous step ;

- amenagement_shape="amenagement.shp" : a dataset of planning projects, on which the simulation will not be processed;

- mos_shape="mos.shp" : a land cover dataset produced by the IAUIDF on which the simulation will not be processed;

- regles_shape="regles.shp" : the rules parameters from CartoPLU+ database.

The script parcelles_rulez.sql will be applied once the data is imported.

The table parcelles_rulez has to be exported for the following step.
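For orientation, the sequence described above (database creation, shapefile import, SQL script, export) roughly corresponds to the standard PostgreSQL/PostGIS command-line tools shown below. This is a dry-run sketch that only prints the commands; it does not reproduce create_parcelles_rules.sh. The database name, the SRID, the table names other than parcelles_rulez and the `import_cmd` helper are all assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical settings; the real ones are set in create_parcelles_rules.sh.
DB_NAME="simplu"   # assumed database name
SRID=2154          # assumed projection (Lambert-93)

# Print the pipeline that would load one shapefile into one table.
import_cmd() {
  local shp="$1" table="$2"
  echo "shp2pgsql -s $SRID -I $shp $table | psql -d $DB_NAME"
}

# Step 1: create the database and enable PostGIS (printed, not executed).
echo "createdb $DB_NAME"
echo "psql -d $DB_NAME -c 'CREATE EXTENSION postgis;'"

# Step 2: one import per input layer (table names are hypothetical).
import_cmd "parcels_94.shp"  "parcelles"
import_cmd "amenagement.shp" "amenagement"
import_cmd "mos.shp"         "mos"
import_cmd "regles.shp"      "regles"

# Apply the rules script, then export the resulting table as a shapefile.
echo "psql -d $DB_NAME -f parcelles_rulez.sql"
echo "pgsql2shp -f parcels_rulez.shp $DB_NAME parcelles_rulez"
```

Printing the commands first makes it easy to review the connection settings before anything touches the database.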

The splitter step creates sets of data with a bounded number of parcels to simulate. The idea is to avoid distributing zones that contain too many parcels.

The script is SimPLU3D-openmole-release/zonepacker.sh, downloaded by the get_scripts.sh script. It can be run as follows:

./zonepacker.sh "/path/to/parcels_rulez.shp" "/tmp/tmp/" "/tmp/out/" 20

where:

- /path/to/parcels_rulez.shp : the shapefile produced in the previous step ;

- /tmp/tmp/ : an empty temporary folder ;

- /tmp/out/ : a folder that will contain a set of folders, each with a homogeneous set of simulable parcels ;

- 20 : the maximal number of parcels in a set.
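To get a feel for the last parameter, the sketch below computes a lower bound on the number of packages (a ceiling division) and checks that the temporary folder is empty before calling the script. The `min_packages` and `is_empty_dir` helpers are hypothetical, and the real number of packages may be higher than the bound, since how zonepacker.sh groups parcels is not described here.

```shell
#!/usr/bin/env bash
# Lower bound on the number of packages for a given number of simulable
# parcels and a given maximum per package (ceiling division).
min_packages() {
  local parcels="$1" max_per_package="$2"
  echo $(( (parcels + max_per_package - 1) / max_per_package ))
}

# True when the directory exists and contains no entries.
is_empty_dir() {
  [ -d "$1" ] && [ -z "$(ls -A "$1")" ]
}

# 1050 simulable parcels with at most 20 per package: at least 53 packages.
min_packages 1050 20

# Only run when zonepacker.sh is in the current directory.
if [ -x ./zonepacker.sh ]; then
  is_empty_dir "/tmp/tmp/" || { echo "/tmp/tmp/ must exist and be empty" >&2; exit 1; }
  ./zonepacker.sh "/path/to/parcels_rulez.shp" "/tmp/tmp/" "/tmp/out/" 20
  # Count the package folders that were produced.
  find /tmp/out/ -mindepth 1 -maxdepth 1 -type d | wc -l
fi
```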

The Simulation step distributes the computation with OpenMOLE. The openmole folder of the downloaded SimPLU3D-openmole-release folder contains all the necessary material:

- simplu3d-openmole-plugin_2.12-1.0.jar : a plugin that can be imported into OpenMOLE ;

- EPFIFTaskRunner.oms : a script to distribute the calculation according to the folder tree contained in dataBasicSimu ;

- scenario : a folder that contains two sample scenarios (a fast one to test that the script works, and a real one) ;

- 94 : a folder into which the data produced in the previous step has to be pasted.
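Pasting the splitter output into the 94 folder can be done by hand or with a short helper such as the one below. It is a sketch: the `stage_packages` function is hypothetical, and the source and destination paths assume the /tmp/out/ folder of the previous step and a SimPLU3D-openmole-release folder in the current directory.

```shell
#!/usr/bin/env bash
# Copy the content of the splitter output folder into the target folder,
# keeping one sub-folder per package.
stage_packages() {
  local src="$1" dst="$2"
  mkdir -p "$dst"
  cp -r "$src"/. "$dst"/
}

# Assumed locations; adjust them to the actual layout.
if [ -d /tmp/out ] && [ -d ./SimPLU3D-openmole-release/openmole ]; then
  stage_packages /tmp/out ./SimPLU3D-openmole-release/openmole/94
fi
```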

The Integration step loads the simulation results into the database created in the Annotator/affectator step.

It is done by running import_results_to_pg.sh, available in iauidf_results_to_db/.

It mainly consists of two steps:

- first, importing the geometries with the simplushp2pgsql.jar program. It needs a configuration file that has to be set according to the database credentials and the path to the simulation results;

- then, splitting the output.csv file in two, one file for errors and the other for the floor area calculation, and importing both into the database.

The variables to be set are in import_results_to_pg.sh and are self-explanatory.
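The splitting of output.csv described above can be illustrated with awk. The layout of output.csv is not documented here, so the sketch rests on a purely hypothetical format: semicolon-separated lines with no header, whose last field is a number (the floor area) when the simulation succeeded and a text message otherwise. The `split_output` helper and the output file names are also hypothetical; import_results_to_pg.sh remains the reference.

```shell
#!/usr/bin/env bash
# Split a result file in two: lines ending with a numeric field go to the
# floor area file, all other lines go to the errors file.
# Assumption: semicolon-separated input without a header row.
split_output() {
  local csv="$1" ok="$2" errors="$3"
  # Start from empty output files so both always exist.
  : > "$ok"
  : > "$errors"
  awk -F';' -v ok="$ok" -v err="$errors" '
    $NF ~ /^-?[0-9]+(\.[0-9]+)?$/ { print > ok; next }
    { print > err }
  ' "$csv"
}

# Only run when a result file is present in the current directory.
if [ -f ./output.csv ]; then
  split_output ./output.csv ./floor_area.csv ./errors.csv
fi
```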

This software is free to use under the CeCILL license. However, if you use this library in a research paper, you are kindly requested to acknowledge the use of this software.

Furthermore, we welcome any feedback about this library, whether you find it useful, want to contribute or have suggestions to improve it.

Mickaël Brasebin & Julien Perret COGIT Laboratory ({surname.name} (AT) {gmail} (POINT) {com})

- IAUIDF and DRIEA for supporting the project by providing advice to improve the quality of simulations and CartoPLU+ database.