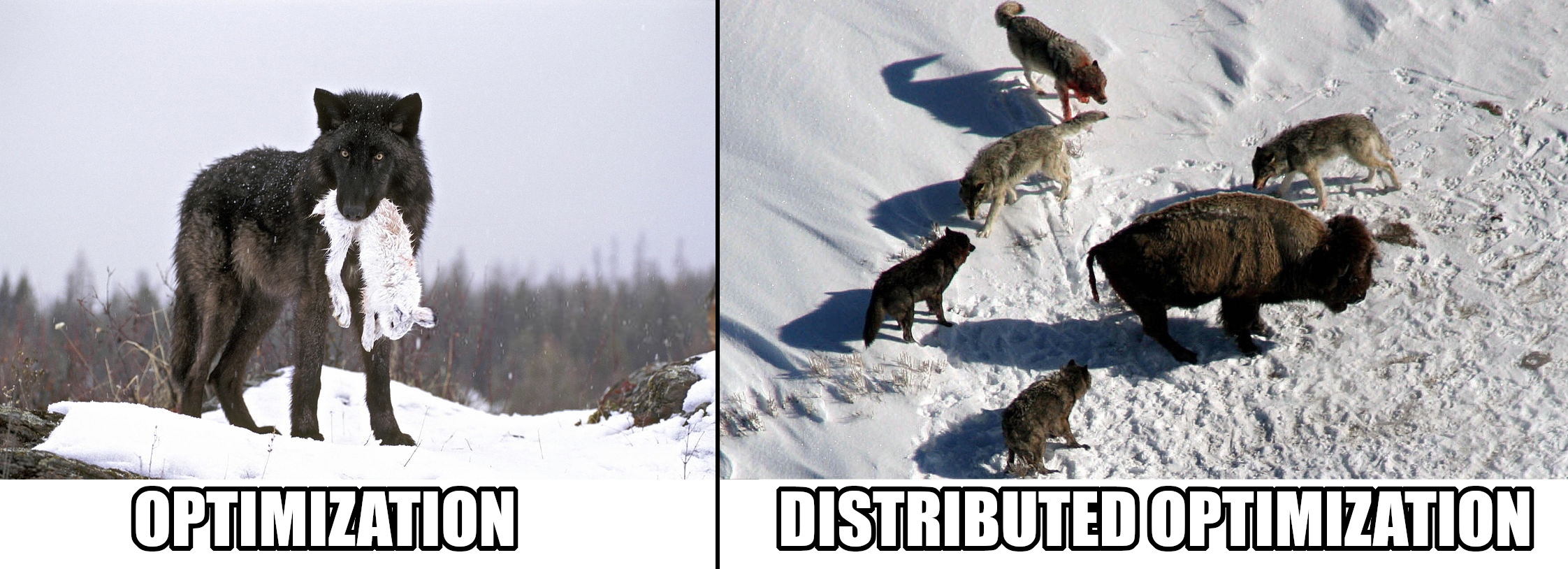

With the growing number of distributed computations, the need for optimal distributed algorithms has increased. The benefit from distributed computations is clear, as we multiply the computing powers, thus reducing the computation time, and allow processing of data containing an extreme number of features. But there is a significant overhead caused by the network communication. New optimization methods and algorithms are to solve the problem introduced by the communication expenses.

This work aims to implement and compare some of the most popular distributed convex optimization algorithms:

- ADMM (centralized),

- DANE (centralized),

- Network-DANE (decentralized),

- Network-SARAH (decentralized),

- etc

in solving the problem of multi-label classification on fashion MNIST.

You can read the detailed report in docs/report.pdf

To run the benchmark:

- Create a virtual environment:

virtualenv .venv

source .venv/bin/activate- Install requirements using pip

pip3 install -r requirements.txt- Run the main script

python3 main.py- Samy Wu Fung et. al. ADMM-SOFTMAX : An ADMM Approach for Multinomial Logistic Regression

- Boyd et al. Distributed Optimization and Statistical Learning Via the Alternating Direction Method of Multipliers

- Shamir et al. Communication-Efficient Distributed Optimization using an Approximate Newton-type Method

- Boyue et al. Communication-Efficient Distributed Optimization in Networks with Gradient Tracking and Variance Reduction