Using Python, BeautifulSoup, Pandas, and Splinter to scrape NASA Mars News and save the data in MongoDB

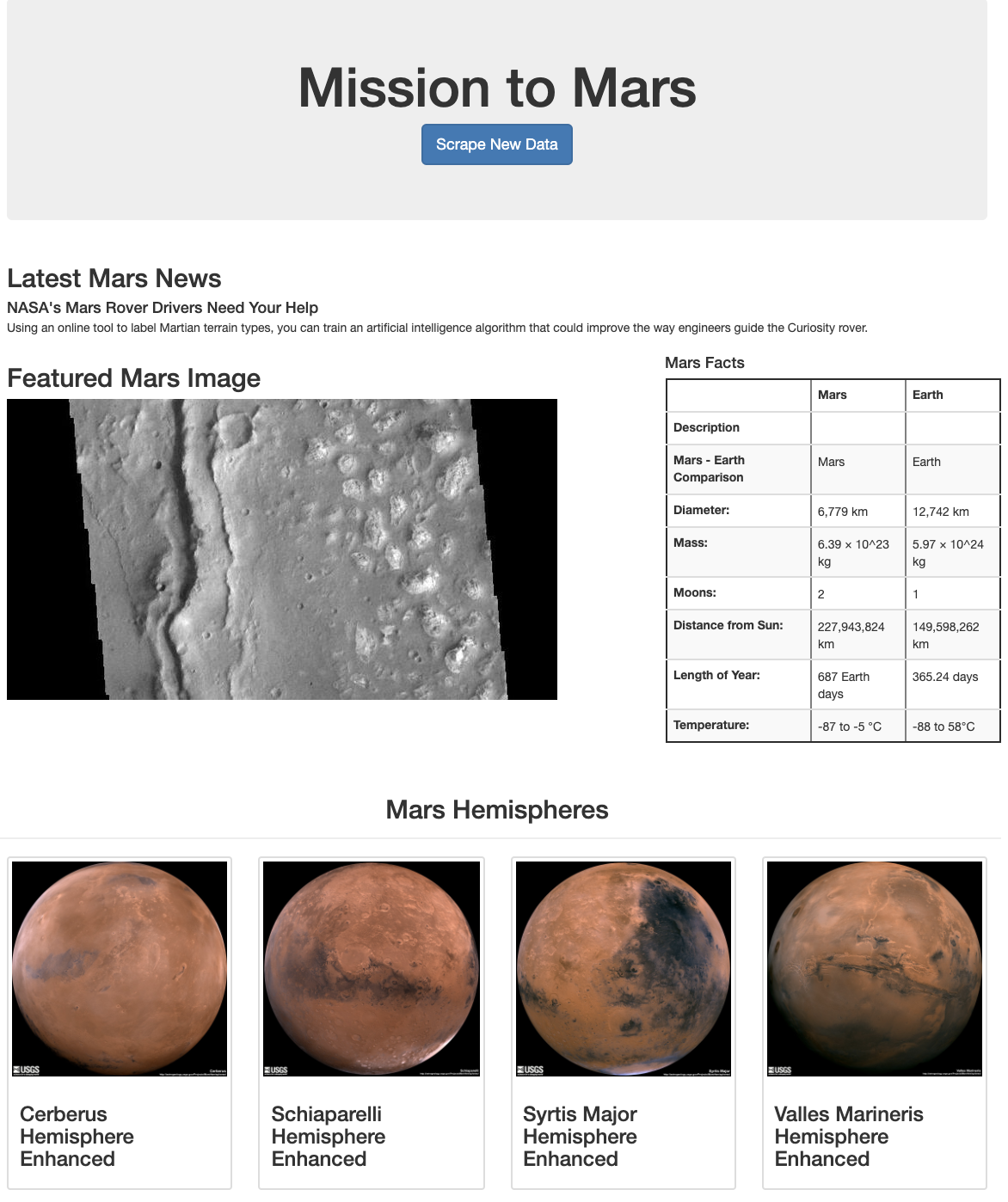

Built a web application that scrapes various websites for data related to the Mission to Mars and displays the information in a single HTML page.

- Created a new repository for this project called `web-scraping`.
- Cloned the new repository to the computer.
- Inside the local git repository, created a directory for the web scraping activity, using a folder name that corresponds to the challenge: `Missions_to_Mars`.
- Added the Jupyter notebook files and the Flask app to this folder.
- Pushed the above changes to GitHub or GitLab.

Completed the initial scraping using Jupyter Notebook, BeautifulSoup, Pandas, and Splinter.

- Created a Jupyter Notebook file called `mission_to_mars.ipynb` and used it to complete all of the scraping and analysis tasks. The following outlines what was scraped.

- Scraped the Mars News Site and collected the latest News Title and Paragraph Text. Assigned the text to variables that were referenced later.

```python
# Example:
news_title = "NASA's Next Mars Mission to Investigate Interior of Red Planet"

news_p = "Preparation of NASA's next spacecraft to Mars, InSight, has ramped up this summer, on course for launch next May from Vandenberg Air Force Base in central California -- the first interplanetary launch in history from America's West Coast."
```
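A minimal sketch of the news step with BeautifulSoup, assuming the newest article is the first one marked up with `div.content_title` and `div.article_teaser_body`; those class names are an assumption about the site's markup and should be confirmed in the browser's inspector. It parses an inline, hypothetical HTML snippet so it runs without Splinter; in the notebook the HTML would come from `browser.html` after `browser.visit(url)`.

```python
from bs4 import BeautifulSoup


def parse_latest_news(html):
    """Return (news_title, news_p) for the first article on the page.

    ASSUMPTION: titles use class "content_title" and teasers use class
    "article_teaser_body"; adjust these to the live site's markup.
    """
    soup = BeautifulSoup(html, "html.parser")
    # find() returns the first match, i.e. the most recent article in the list
    title_tag = soup.find("div", class_="content_title")
    teaser_tag = soup.find("div", class_="article_teaser_body")
    if title_tag is None or teaser_tag is None:
        raise ValueError("news markup not found; check the CSS classes")
    # strip=True trims the surrounding whitespace from the text
    return title_tag.get_text(strip=True), teaser_tag.get_text(strip=True)


# HYPOTHETICAL sample markup standing in for browser.html
sample_html = """
<div class="list_text">
  <div class="content_title">NASA's Next Mars Mission to Investigate Interior of Red Planet</div>
  <div class="article_teaser_body"> Preparation of NASA's next spacecraft to Mars, InSight, has ramped up this summer. </div>
</div>
"""

news_title, news_p = parse_latest_news(sample_html)
print(news_title)
```

Raising an error when the markup is missing makes a site redesign fail loudly instead of silently storing `None` in the results.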

- Visited the URL for the Featured Space Image site here.
- Used Splinter to navigate the site, find the image URL for the current Featured Mars Image, and assign the URL string to a variable called `featured_image_url`.
- Made sure to find the image URL for the full-size `.jpg` image.
- Made sure to save a complete URL string for this image.

```python
# Example:
featured_image_url = 'https://spaceimages-mars.com/image/featured/mars2.jpg'
```
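The image's `src` attribute is often a relative path, so one way to guarantee a complete URL string is to resolve it against the page URL with the standard library's `urllib.parse.urljoin`. The helper name `full_image_url` and the relative path below are illustrative assumptions modeled on the example above.

```python
from urllib.parse import urljoin


def full_image_url(page_url, img_src):
    """Resolve an <img> src (relative or absolute) against the page URL."""
    # urljoin leaves absolute URLs untouched and resolves relative ones
    return urljoin(page_url, img_src)


# ASSUMPTION: the page lives at the site root and the src is relative
base_url = "https://spaceimages-mars.com/"
featured_image_url = full_image_url(base_url, "image/featured/mars2.jpg")
print(featured_image_url)
```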

- Visited the Mars Facts webpage here and used Pandas to scrape the table containing facts about the planet, including Diameter, Mass, etc.
- Used Pandas to convert the data to an HTML table string.
- Saved a `mars_table.html` file to view the retrieved results, then saved the output in the notebook to view any updates.
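`pd.read_html(url)` returns a list of DataFrames, one per `<table>` on the page. The sketch below picks the first one, labels it, and converts it back to an HTML string with `DataFrame.to_html`. It assumes the facts are the first table and have two columns; the column names and the Bootstrap classes are illustrative choices, and a hand-built stand-in replaces the network call so the sketch runs offline.

```python
import pandas as pd


def facts_table_html(tables):
    """Turn the list returned by pd.read_html into an HTML table string.

    ASSUMPTION: the first table holds the facts as two columns
    (description, value); check the index against the live page.
    """
    facts = tables[0].copy()
    facts.columns = ["Description", "Mars"]
    facts = facts.set_index("Description")
    # classes= appends CSS classes (here Bootstrap's) to the <table> tag
    return facts.to_html(classes="table table-striped")


# In the notebook: tables = pd.read_html(facts_url)
# Offline stand-in with a couple of sample rows:
tables = [pd.DataFrame([["Diameter:", "6,779 km"], ["Moons:", "2"]])]
mars_table = facts_table_html(tables)

# Save the string so the rendered table can be opened in a browser
with open("mars_table.html", "w") as f:
    f.write(mars_table)
```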

- Visited the astrogeology site here to obtain high-resolution images for each of Mars's hemispheres.
- Clicked each of the hemisphere links in order to find the image URL for the full-resolution image.
- Saved both the image URL string for the full-resolution hemisphere image and the hemisphere title containing the hemisphere name, using a Python dictionary with the keys `img_url` and `title`.
- Appended each dictionary to a list, so the list contains one dictionary per hemisphere.
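The per-hemisphere step can be sketched as a parser for one detail page, called once for each page visited. It assumes each detail page shows its title in `<h2 class="title">` and links the full-resolution image from an `<a>` whose text is exactly `Sample`; both are assumptions about the site's markup. The function name, base URL, and pages below are hypothetical stand-ins for what Splinter would collect via `browser.html` after clicking each link and before `browser.back()`.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def parse_hemisphere_page(html, base_url):
    """Extract {"title", "img_url"} from one hemisphere detail page.

    ASSUMPTION: the title is in <h2 class="title"> and the full-resolution
    image is linked from an <a> whose text is exactly "Sample".
    """
    soup = BeautifulSoup(html, "html.parser")
    title = soup.find("h2", class_="title").get_text(strip=True)
    sample_link = soup.find("a", string="Sample")
    # Resolve a relative href into a complete URL
    return {"title": title, "img_url": urljoin(base_url, sample_link["href"])}


# HYPOTHETICAL detail pages and base URL
pages = [
    '<h2 class="title">Cerberus Hemisphere</h2><a href="images/cerberus_full.jpg">Sample</a>',
    '<h2 class="title">Schiaparelli Hemisphere</h2><a href="images/schiaparelli_full.jpg">Sample</a>',
]
base_url = "https://example.com/"

# One dictionary per hemisphere, appended in the order the pages were visited
hemisphere_image_urls = []
for page_html in pages:
    hemisphere_image_urls.append(parse_hemisphere_page(page_html, base_url))
```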

```python
# Example:
hemisphere_image_urls = [
    {"title": "Valles Marineris Hemisphere", "img_url": "..."},
    {"title": "Cerberus Hemisphere", "img_url": "..."},
    {"title": "Schiaparelli Hemisphere", "img_url": "..."},
    {"title": "Syrtis Major Hemisphere", "img_url": "..."},
]
```

Used MongoDB with Flask templating to create a new HTML page that displays all of the information scraped from the URLs above.

- Started by converting the Jupyter notebook into a Python script called `scrape_mars.py` with a function called `scrape` that executes all of the scraping code from above and returns one Python dictionary containing all of the scraped data.
- Next, created a route called `/scrape` that imports the `scrape_mars.py` script and calls the `scrape` function.
- Stored the return value in Mongo as a Python dictionary.
- Created a root route `/` that queries the Mongo database and passes the Mars data into an HTML template to display the data.
- Created a template HTML file called `index.html` that takes the Mars data dictionary and displays all of the data in the appropriate HTML elements. Used the provided example as a guide for what the final product should look like, while leaving room for a custom design.
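A minimal sketch of the Flask app for the two routes described above. To keep it testable, the hypothetical factory `create_app` receives the Mongo collection and the scrape function instead of importing `scrape_mars` and creating a client itself. Calling `replace_one({}, ..., upsert=True)` is one way to keep exactly one document that is overwritten on every scrape; the template variable name `mars` is also an assumption.

```python
from flask import Flask, redirect, render_template


def create_app(collection, scrape_fn):
    """Build the Flask app around a Mongo collection and a scrape function.

    ASSUMPTION: `collection` is a pymongo Collection (anything with
    find_one/replace_one works) and `scrape_fn` is scrape_mars.scrape.
    """
    app = Flask(__name__)

    @app.route("/")
    def index():
        # Query the single stored document and hand it to the template
        mars = collection.find_one()
        return render_template("index.html", mars=mars)

    @app.route("/scrape")
    def scrape():
        # Run the scraper, overwrite the previous document, then go home
        mars_data = scrape_fn()
        collection.replace_one({}, mars_data, upsert=True)
        return redirect("/")

    return app
```

In the real app this could be wired up as `create_app(MongoClient("mongodb://localhost:27017").mars_app.mars, scrape_mars.scrape)`, where the database and collection names are hypothetical. Injecting the collection also lets the routes be exercised with a stand-in object and Flask's `test_client()`.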

- Use Splinter to navigate the sites when needed and BeautifulSoup to find and parse out the necessary data.
- Use PyMongo for CRUD operations on the database. The existing document can simply be overwritten each time the `/scrape` URL is visited and new data is obtained.
- Use Bootstrap to structure the HTML template.
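The `index.html` and Bootstrap points above could look roughly like the template below. It is a sketch only: the keys on `mars` (`news_title`, `news_p`, `featured_image_url`, `facts_table`, `hemispheres`) are hypothetical and must match whatever `scrape()` returns, and the page also needs Bootstrap's CSS linked in its `<head>`. It is rendered with `jinja2.Template` so it runs without Flask; in the app the same markup would live in `templates/index.html` and be rendered with `render_template`.

```python
from jinja2 import Template

# Body of a hypothetical templates/index.html using Bootstrap's grid classes
INDEX_TEMPLATE = """
<div class="container">
  <div class="row">
    <div class="col-md-12">
      <h2>{{ mars.news_title }}</h2>
      <p>{{ mars.news_p }}</p>
    </div>
  </div>
  <div class="row">
    <div class="col-md-8">
      <img src="{{ mars.featured_image_url }}" class="img-fluid" alt="Featured Mars image">
    </div>
    <div class="col-md-4">
      {# facts_table is already HTML, so mark it safe to skip escaping #}
      {{ mars.facts_table | safe }}
    </div>
  </div>
  <div class="row">
    {% for hemisphere in mars.hemispheres %}
    <div class="col-md-6">
      <img src="{{ hemisphere.img_url }}" class="img-fluid" alt="{{ hemisphere.title }}">
      <h4>{{ hemisphere.title }}</h4>
    </div>
    {% endfor %}
  </div>
</div>
"""

# HYPOTHETICAL document shaped like the dictionary scrape() might return
sample_mars = {
    "news_title": "Sample headline",
    "news_p": "Sample teaser.",
    "featured_image_url": "https://spaceimages-mars.com/image/featured/mars2.jpg",
    "facts_table": "<table><tr><td>Diameter:</td><td>6,779 km</td></tr></table>",
    "hemispheres": [
        {"title": "Cerberus Hemisphere", "img_url": "https://example.com/cerberus.jpg"},
        {"title": "Syrtis Major Hemisphere", "img_url": "https://example.com/syrtis.jpg"},
    ],
}

html = Template(INDEX_TEMPLATE).render(mars=sample_mars)
```

The `| safe` filter matters inside Flask, which autoescapes `.html` templates and would otherwise show the facts table's markup as literal text.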

Responsive (chrome) - some aspects

© 2021 Trilogy Education Services, LLC, a 2U, Inc. brand. Confidential and Proprietary. All Rights Reserved.