
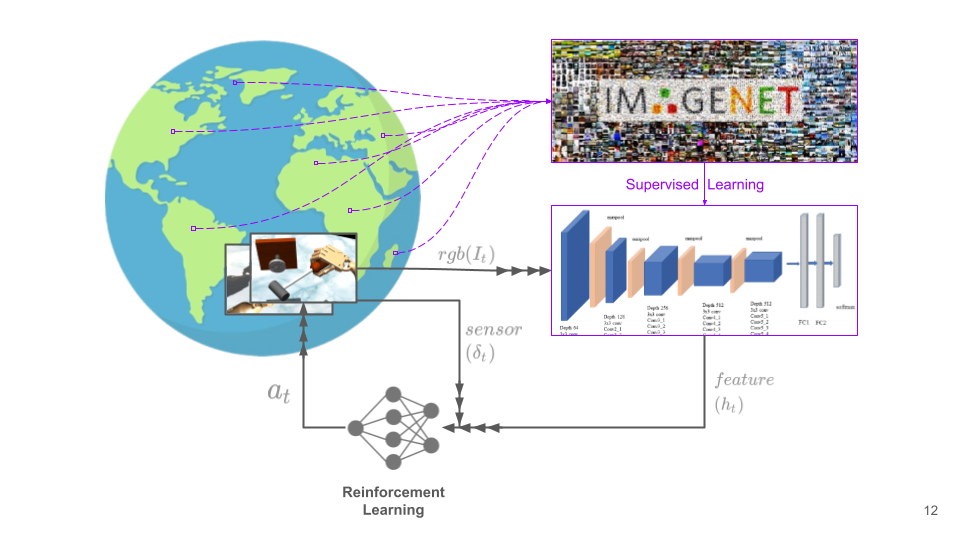

Representations do not necessarily have to be trained on the exact task distribution; a representation trained on a sufficiently wide distribution of real-world scenarios will be robust to scene variations, and will remain effective on any distribution a policy optimizing a 'task in the real world' might induce.

Leveraging ResNet features, RRL delivers natural, human-like behaviors trained directly from proprioceptive inputs. Presented below are behaviors acquired on the ADROIT manipulation benchmark task suite, rendered from the camera viewpoint. We also overlay the visual features (Grad-CAM on layer 4 of the ResNet model, for the top-1 class) to highlight what RRL attends to during task execution. Even though standard image classification models are not trained on robot images, we emphasize that the features they acquire by training on a large corpus of real-world scenes remain relevant for robotics tasks that are representative of the real world and rich in depth perspective (even in simulated scenes).
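The core Grad-CAM computation behind such overlays can be sketched in NumPy. This assumes the layer-4 activations and the gradients of the top-1 class score have already been extracted from the ResNet (for example with framework hooks); `grad_cam` is a hypothetical helper illustrating the standard Grad-CAM weighting, not the code used to produce these videos.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Compute a Grad-CAM heatmap for one image.

    activations: (K, H, W) feature maps A^k from the chosen layer (here, layer 4).
    gradients:   (K, H, W) gradients of the top-1 class score w.r.t. those maps.
    Returns an (H, W) map scaled to [0, 1].
    """
    if activations.shape != gradients.shape:
        raise ValueError("activations and gradients must have the same shape")
    # Channel importance alpha_k: global-average-pool the gradients over space.
    alphas = gradients.mean(axis=(1, 2))  # (K,)
    # Weighted sum over channels; ReLU keeps only positive evidence for the class.
    cam = np.maximum(np.tensordot(alphas, activations, axes=1), 0.0)  # (H, W)
    # Scale to [0, 1] for overlaying; an all-zero map is returned unchanged.
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Example with random tensors shaped like ResNet-34 layer-4 output for a 224x224 input.
rng = np.random.default_rng(0)
heatmap = grad_cam(rng.random((512, 7, 7)), rng.standard_normal((512, 7, 7)))
print(heatmap.shape)  # (7, 7)
```

Because layer 4 is spatially coarse (7×7 for a 224×224 input to ResNet-34), the map is upsampled to the frame size before it is blended over the rendered image.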


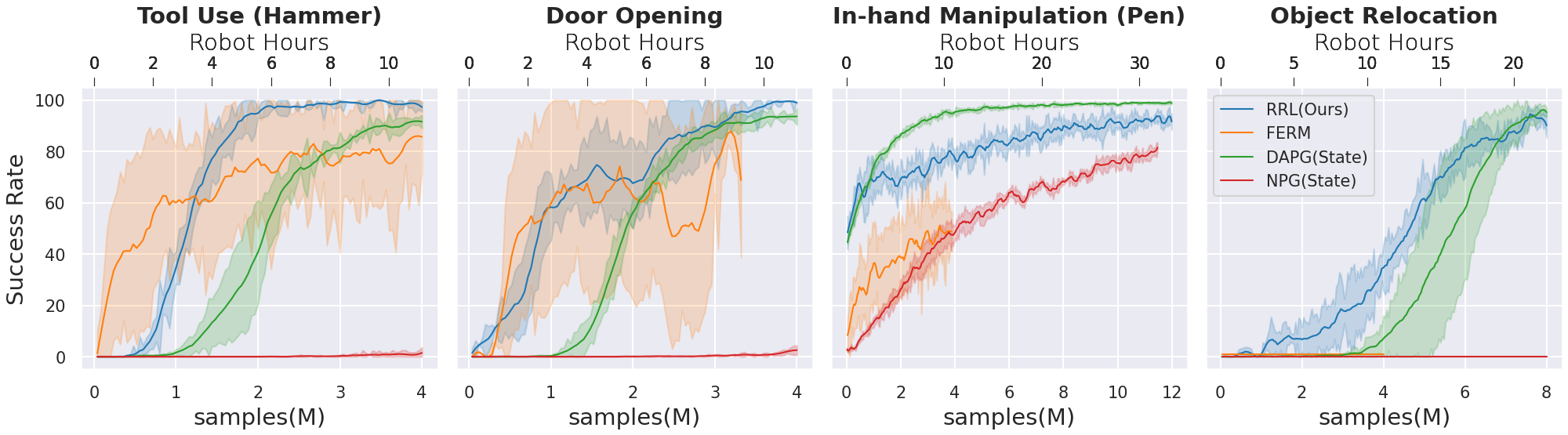

Compared to baselines, which often fail and are sensitive to hyperparameters, RRL demonstrates relatively stable and monotonic performance, often matching the efficiency of state-based methods. We present comparisons with methods that learn directly from state (oracles) as well as with methods that learn from visual and proprioceptive inputs.

- NPG(State): A state-of-the-art policy gradient method. It struggles to solve the suite even with privileged low-level state information, which establishes the difficulty of the suite.
- DAPG(State): A demonstration-accelerated method that uses privileged state information; it can be considered an oracle for our method.
- RRL(Ours): Demonstrates stable performance and approaches the performance of DAPG(State).
- FERM: A competing baseline. It shows good initial, but unstable, progress on a few tasks and often saturates in performance before exhausting our computational budget (40 hours/task/seed).
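The "demonstration acceleration" in DAPG can be summarized as follows, assuming the formulation of the original DAPG paper: the policy gradient is augmented with a behavior-cloning-style term over demonstration pairs, each weighted by w = λ0 · λ1^k · max A^π(s, a), where the maximum runs over the current on-policy batch and k is the iteration count, so the demonstrations' influence decays geometrically. The augmented gradient is then used in the natural policy gradient update. The function names and the λ0/λ1 defaults below are illustrative placeholders, not RRL's configured values.

```python
from __future__ import annotations


def dapg_demo_weight(iteration: int, policy_advantages: list[float],
                     lam0: float = 0.1, lam1: float = 0.95) -> float:
    """Weight on demonstration (s, a) pairs at DAPG iteration `iteration`.

    w = lam0 * lam1**iteration * max(A) over the current on-policy batch.
    The lam0/lam1 defaults are illustrative placeholders.
    """
    if not policy_advantages:
        raise ValueError("need at least one on-policy advantage estimate")
    return lam0 * (lam1 ** iteration) * max(policy_advantages)


def augmented_sample_weights(policy_advantages: list[float], num_demo_pairs: int,
                             iteration: int, lam0: float = 0.1,
                             lam1: float = 0.95) -> list[float]:
    """Per-sample weights multiplying grad log pi(a|s) in the augmented gradient.

    On-policy samples are weighted by their own advantage estimates; every
    demonstration pair shares the same decaying weight w.
    """
    w_demo = dapg_demo_weight(iteration, policy_advantages, lam0, lam1)
    return list(policy_advantages) + [w_demo] * num_demo_pairs


# The demonstration weight shrinks geometrically as training progresses.
for k in (0, 10, 50):
    print(k, round(dapg_demo_weight(k, [0.5, 2.0, -1.0]), 4))
```

Scaling the demonstration term by the largest on-policy advantage keeps it on the same scale as the RL term, while the λ1^k decay lets the policy gradually move beyond the demonstrations.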

The RRL codebase can be installed by cloning this repository. Note that it uses git submodules to resolve dependencies. Follow the steps below to install it correctly.

- Clone this repository along with its submodules, then enter it:

  ```bash
  git clone --recursive https://github.com/facebookresearch/RRL.git
  cd RRL
  ```

- Install the package using `conda`. The dependencies (apart from `mujoco_py`) are listed in `env.yml`:

  ```bash
  conda env create -f env.yml
  conda activate rrl
  ```

- The environments require MuJoCo as a dependency. You may need to obtain a license and follow the setup instructions for `mujoco_py`. Setting up `mujoco_py` with GPU support is highly recommended.

- Install the `mj_envs` and `mjrl` repositories, then RRL itself (from inside the `RRL` directory):

  ```bash
  pip install -e mjrl/.
  pip install -e mj_envs/.
  pip install -e .
  ```

- Additionally, RRL requires the demonstrations published by hand_dapg.
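After completing the installation steps, a quick way to confirm that the dependencies resolve on the import path is a small Python check. This is a hypothetical helper, not part of the RRL repository, and it assumes the import names `mujoco_py`, `mjrl`, and `mj_envs`.

```python
import importlib.util

# Import names assumed to be provided by the installation steps above.
REQUIRED_MODULES = ("mujoco_py", "mjrl", "mj_envs")


def missing_modules(modules=REQUIRED_MODULES):
    """Return the subset of `modules` that cannot be located on the import path."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All listed dependencies were found.")
```

`find_spec` only locates a module without importing it, so this will not catch `mujoco_py` build problems. Running `python -c "import mujoco_py"` is the more thorough test, since `mujoco_py` compiles its extension on first import.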

- The first step is to convert the observations in the demonstrations provided by hand_dapg to the encoder feature space. An example script, `convertDemos.py`, is provided for this. Note that the script saves the converted demonstrations in `.pickle` format inside the `rrl/demonstrations` directory. For the `mj_envs` tasks:

  ```bash
  python convertDemos.py --env_name hammer-v0 --encoder_type resnet34 -c top -d <path-to-the-demo-file>
  python convertDemos.py --env_name door-v0 --encoder_type resnet34 -c top -d <path-to-the-demo-file>
  python convertDemos.py --env_name pen-v0 --encoder_type resnet34 -c vil_camera -d <path-to-the-demo-file>
  python convertDemos.py --env_name relocate-v0 --encoder_type resnet34 -c cam1 -c cam2 -c cam3 -d <path-to-the-demo-file>
  ```

- Launch RRL experiments using DAPG. An example launch script, `job_script.py`, is provided in the `examples/` directory, and the configs used are stored in the `examples/config/` directory. Note: Hydra configs are used.

  ```bash
  python job_script.py demo_file=<path-to-new-demo-file> --config-name hammer_dapg
  python job_script.py demo_file=<path-to-new-demo-file> --config-name door_dapg
  python job_script.py demo_file=<path-to-new-demo-file> --config-name pen_dapg
  python job_script.py demo_file=<path-to-new-demo-file> --config-name relocate_dapg
  ```
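Conceptually, the conversion step replaces raw camera frames with frozen ResNet features: each selected camera view (one for `top` or `vil_camera`, three for `cam1`, `cam2`, `cam3` on `relocate-v0`) is encoded separately, and the per-camera features are concatenated with the robot's proprioceptive state. The NumPy sketch below assumes this layout. `encode_image` is a hypothetical stand-in for a frozen ResNet-34 (whose pooled output is 512-dimensional), and `build_observation` and the proprioceptive size used in the example are likewise illustrative, not taken from `convertDemos.py`.

```python
from __future__ import annotations

import numpy as np

FEATURE_DIM = 512  # size of ResNet-34's global-average-pooled output


def encode_image(image: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a frozen ResNet-34 encoder.

    A real encoder would run the pretrained network up to its average-pool
    layer; here we tile the per-channel mean into a vector of the right size.
    """
    pooled = image.mean(axis=(0, 1))  # (C,) per-channel mean of an (H, W, C) image
    return np.resize(pooled, FEATURE_DIM).astype(np.float32)


def build_observation(frames: dict[str, np.ndarray], proprio: np.ndarray,
                      cameras: list[str]) -> np.ndarray:
    """Concatenate per-camera features (in a fixed camera order) with proprioception."""
    features = [encode_image(frames[name]) for name in cameras]
    return np.concatenate(features + [proprio.astype(np.float32)])


# Three-camera setup as in relocate-v0; the 30-dim proprioceptive vector is hypothetical.
cams = ["cam1", "cam2", "cam3"]
frames = {c: np.zeros((224, 224, 3), dtype=np.uint8) for c in cams}
obs = build_observation(frames, np.zeros(30), cams)
print(obs.shape)  # (1566,)
```

Keeping the camera order fixed matters: the policy sees one flat vector, so the features from a given camera must always occupy the same slice of it, both in the converted demonstrations and during training.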