An educational implementation of a Variational Autoencoder (VAE) for the MNIST dataset, supporting both fully connected (FNN) and convolutional (CNN) architectures. It includes training, evaluation, and empirical analysis.

- Train VAE models (FNN/CNN) on MNIST

- Configurable loss functions (BCE, MSE)

- Checkpointing and logging

- Empirical evaluation notebook

- Utilities for loading checkpoints and configuration
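To make the BCE/MSE option concrete, here is a minimal sketch of a per-image VAE loss (reconstruction term plus KL divergence to a standard normal prior). It uses only the Python standard library and is not the code from trainer.py. The function name `vae_loss`, the `recon` argument, and the summed (rather than averaged) reduction are assumptions made for illustration.

```python
import math


def vae_loss(x, x_hat, mu, logvar, recon="bce", eps=1e-7):
    """Negative ELBO for one flattened image.

    x, x_hat   : lists of pixel values and their reconstructions
    mu, logvar : lists describing the approximate posterior N(mu, exp(logvar))
    recon      : "bce" or "mse" (hypothetical option names)
    """
    if recon == "bce":
        # Bernoulli likelihood: pixels and reconstructions are expected in [0, 1].
        rec = 0.0
        for xi, pi in zip(x, x_hat):
            pi = min(max(pi, eps), 1.0 - eps)  # clamp so log() stays finite
            rec -= xi * math.log(pi) + (1.0 - xi) * math.log(1.0 - pi)
    elif recon == "mse":
        # Squared error summed over pixels (Gaussian likelihood up to a constant).
        rec = sum((xi - pi) ** 2 for xi, pi in zip(x, x_hat))
    else:
        raise ValueError(f"unknown reconstruction loss: {recon!r}")

    # Closed-form KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions.
    kl = -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))
    return rec + kl


# A perfect reconstruction with a posterior equal to the prior costs nothing under MSE.
perfect = vae_loss([0.0, 1.0], [0.0, 1.0], [0.0], [0.0], recon="mse")
```

BCE treats each pixel as a Bernoulli probability, so it assumes inputs scaled to [0, 1]; MSE has no such requirement but penalizes errors on a different scale, which is one reason the two losses can behave differently against the same KL term.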

- main.py: Entry point for training

- model_fnn.py, model_cnn.py: Model definitions

- trainer.py: Training loop and logic

- utils/: Utility scripts (checkpoint loading, YAML config)

- empirical_eval.ipynb: Notebook for empirical evaluation

- data/: MNIST data

- outputs/: Model checkpoints, logs, generated samples

- Clone the repository

- Install dependencies:

pip install -r requirements.txt

- Training:

python main.py --config config.yaml

- Empirical Evaluation:

Open empirical_eval.ipynb in Jupyter and run the cells.

Edit config.yaml to change the model and training parameters.

See requirements.txt for Python dependencies.

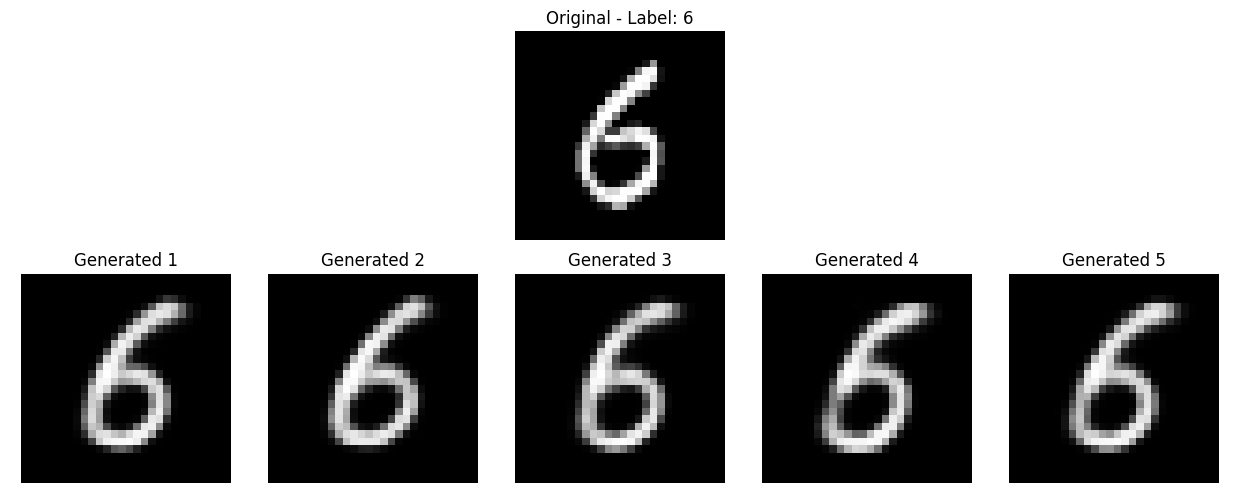

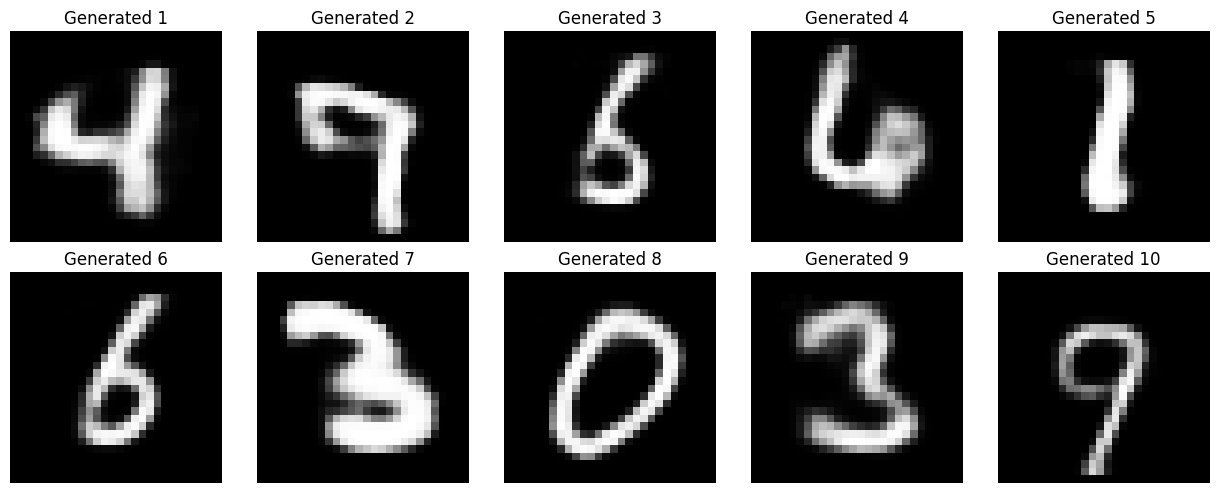

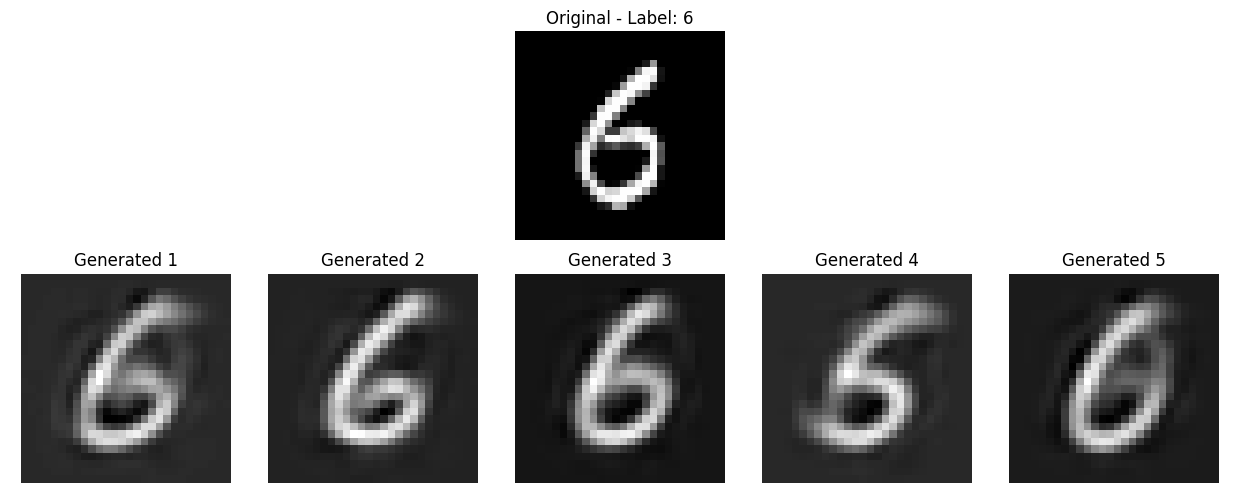

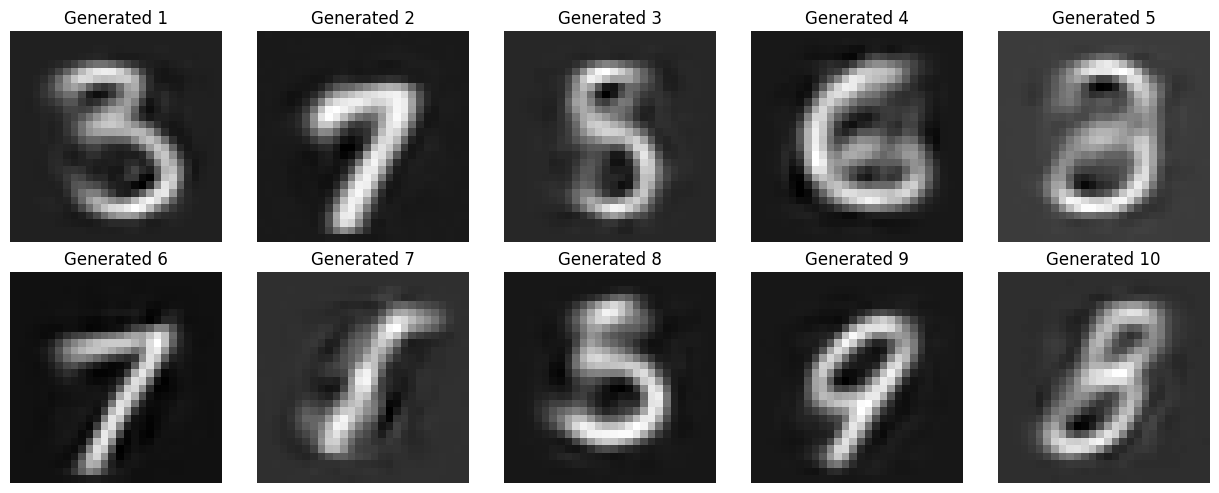

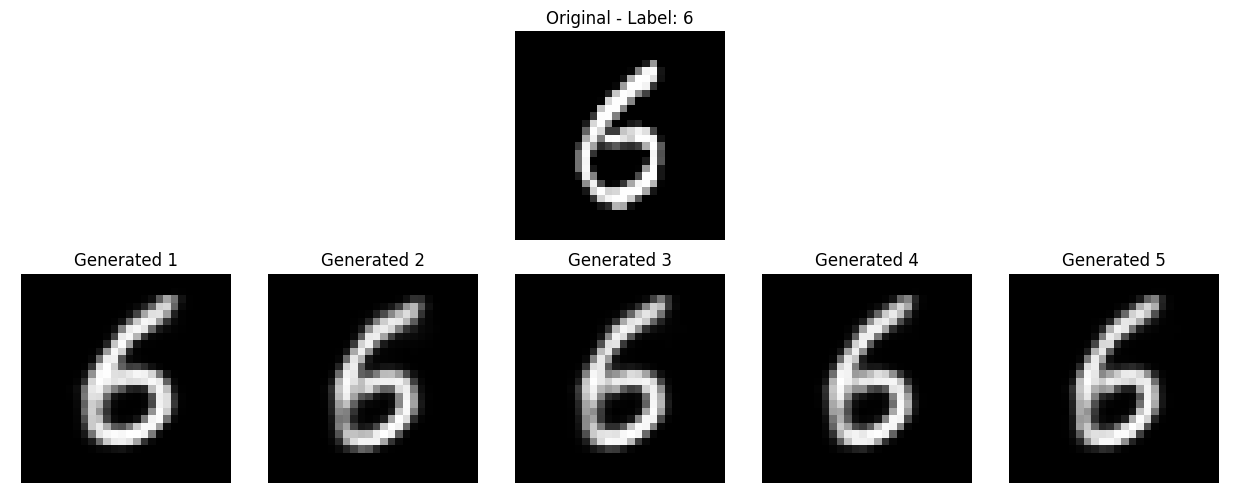

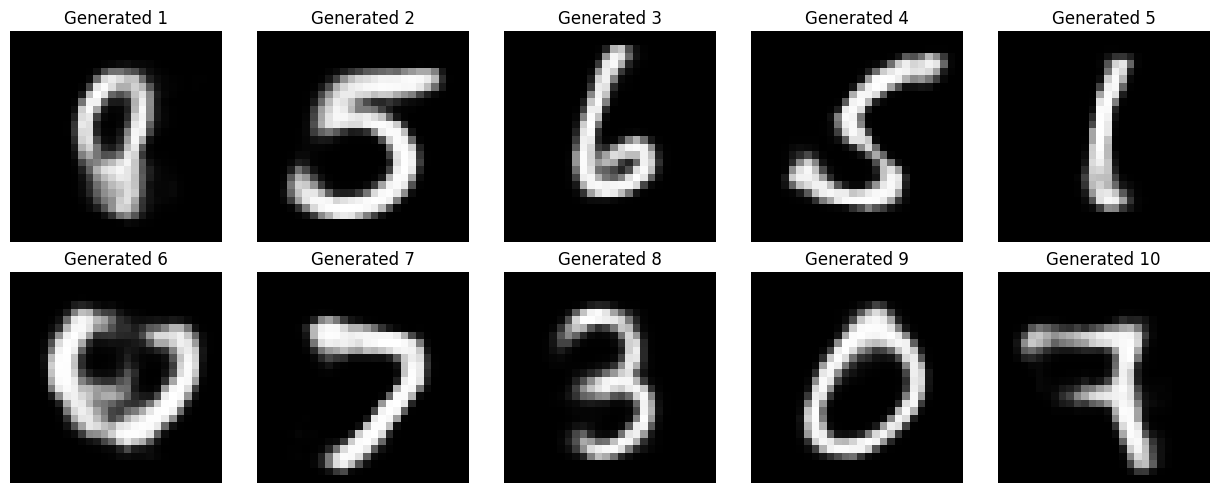

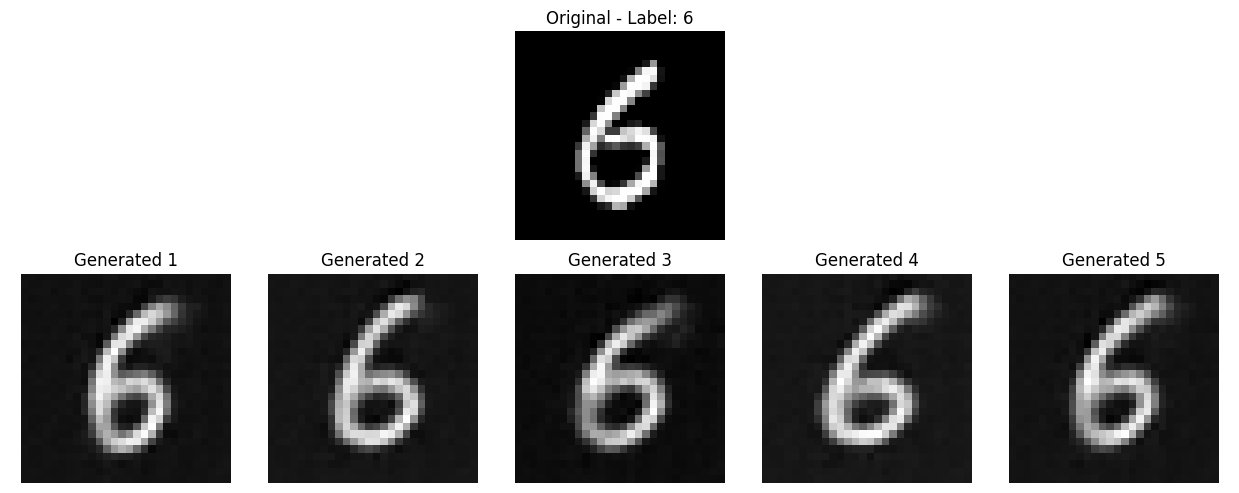

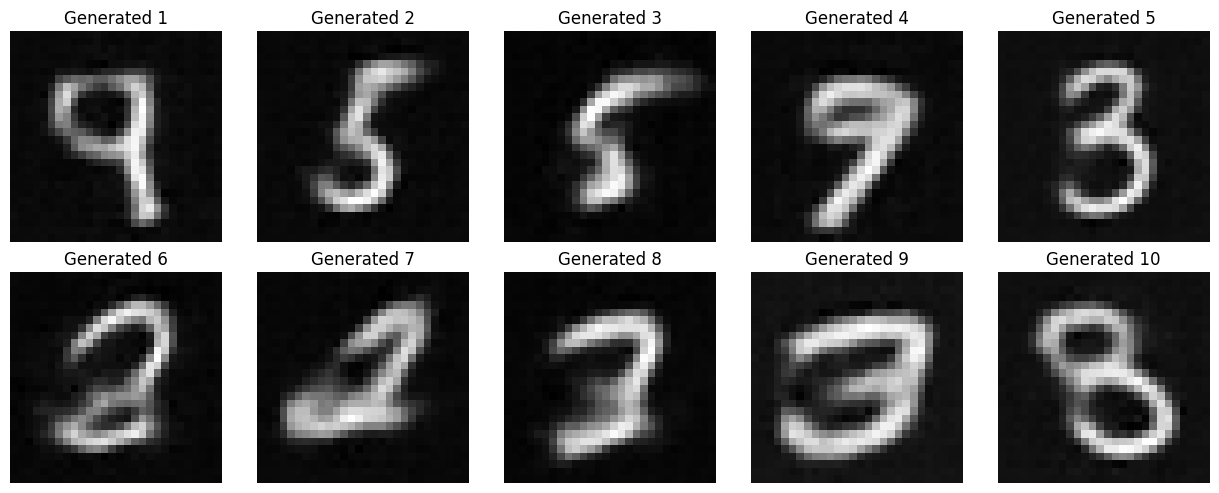

- FNN-VAEs and CNN-VAEs were trained with both MSE and BCE losses.

- For each model, we visualize:

  - Reconstruction of real images (original vs. reconstructed)

  - Generation of new images by sampling from the latent space

| Model | Loss | Reconstruction Example | Generation Example |
|---|---|---|---|
| FNN | BCE | | |
| FNN | MSE | | |
| CNN | BCE | | |
| CNN | MSE | | |
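The two visualizations differ only in where the latent vector comes from: reconstruction samples it from the encoder's posterior for a real image, while generation samples it from the prior. The sketch below illustrates both sampling steps with the standard library only. The helper names `reparameterize` and `sample_prior`, the latent size of 2, and the example `mu`/`logvar` values are assumptions; in the actual models the resulting vectors would be passed to the trained decoder.

```python
import math
import random


def reparameterize(mu, logvar, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, 1) per dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def sample_prior(n, latent_dim, rng):
    """Draw n latent vectors from the standard normal prior N(0, I)."""
    return [[rng.gauss(0.0, 1.0) for _ in range(latent_dim)] for _ in range(n)]


rng = random.Random(0)  # seeded so the draws are repeatable

# Reconstruction path: mu and logvar would come from the encoder for one input image.
z_recon = reparameterize([0.5, -1.0], [0.0, 0.0], rng)

# Generation path: no input image is involved, only samples from the prior.
z_new = sample_prior(4, 2, rng)
```

Writing the posterior sample as `mu + sigma * eps` keeps the randomness in `eps`, which is what lets gradients flow through `mu` and `logvar` during training.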

- CNN-VAEs outperform FNN-VAEs, likely due to better preservation of image structure.

- BCE loss yields better reconstructions than MSE loss in this experiment.

For detailed code and more visualizations, see the empirical_eval.ipynb notebook.

MIT License