Torch2Chip is an end-to-end deep neural network (DNN) compression toolkit for prototype accelerator designers. It supports algorithm-hardware co-design with a high degree of algorithm customization.

[Website] (coming soon) | [Documentation] (coming soon)

- [04/15/2024]: The initial version of Torch2Chip is published together with the camera-ready version of our MLSys paper! 🔥

- [04/26/2024]: SmoothQuant and QDrop are added to Torch2Chip!

- [04/26/2024]: INT8 vision models and checkpoints are available for download!
  - Integer-only models with fully observable input, weight, and output tensors.

- [04/26/2024]: The INT8 BERT model is ready, with an SST-2 dataset demo (beta version).

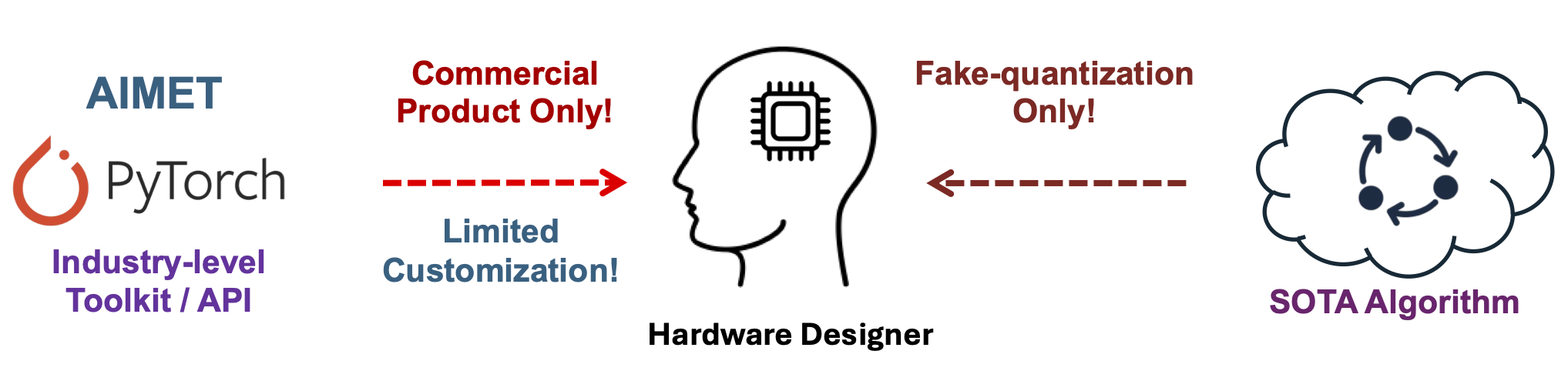

The current "design-and-deploy" workflow of the hardware-algorithm co-design community faces under-explored challenges caused by several unavoidable flaws:

- Deep Learning framework: Although state-of-the-art (SoTA) quantization algorithms can achieve ultra-low precision with negligible accuracy degradation, the latest deep learning frameworks (e.g., PyTorch) only support a non-customizable 8-bit precision and data format (`torch.qint8`).
- Algorithms: Most current SoTA algorithms treat the quantized integer as an intermediate result, while the final output of the quantizer is a "discretized" floating-point value. This ignores practical needs and adds work for hardware designers, who must extract the integer parameters and fuse layers themselves.
- Industry-standard toolkits: Compression toolkits designed by industry (e.g., OpenVINO) are constrained to in-house products or a handful of algorithms. The limited degree of freedom and under-explored customization of current toolkits hinder the prototyping of ASIC-based accelerators.
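As a minimal illustration of the extra work described in the Algorithms item, the stdlib-only Python sketch below assumes symmetric per-tensor quantization with fixed, hand-picked scales (`s_x`, `s_w`) and a hypothetical helper `quantize`; it is not Torch2Chip's implementation. It shows that the dot product of fake-quantized floats equals an integer dot product rescaled once by `s_x * s_w`, which is the integer/scale split a hardware designer otherwise has to recover by hand. The weights use a 4-bit grid to show a precision other than 8 bits.

```python
def quantize(values, scale, n_bits):
    """Map floats to signed n-bit integer codes (round-to-nearest, then clamp)."""
    qmin, qmax = -(2 ** (n_bits - 1)), 2 ** (n_bits - 1) - 1
    return [max(qmin, min(qmax, round(v / scale))) for v in values]


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


# Hypothetical activations/weights with hand-picked per-tensor scales.
x, s_x = [0.9, -0.4, 0.3], 0.1
w, s_w = [0.5, 0.25, -0.75], 0.25

x_int = quantize(x, s_x, n_bits=8)  # 8-bit activation codes
w_int = quantize(w, s_w, n_bits=4)  # 4-bit weight codes

# What a typical quantizer hands back: "discretized" floating-point values.
x_fake = [q * s_x for q in x_int]
w_fake = [q * s_w for q in w_int]
y_fake = dot(x_fake, w_fake)

# What the hardware computes: an integer multiply-accumulate plus one rescale.
acc = dot(x_int, w_int)
y_int = acc * (s_x * s_w)

print(x_int, w_int, acc)
print(y_fake, y_int)
```

Keeping `acc` as an integer and folding both scales into a single multiplier is what allows the rescale to be fused into the following layer.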

From the hardware designer's perspective, the conflicts among DL frameworks, SoTA algorithms, and current toolkits result in a cumbersome, iterative design workflow for chip prototyping. This is the problem Torch2Chip aims to resolve.

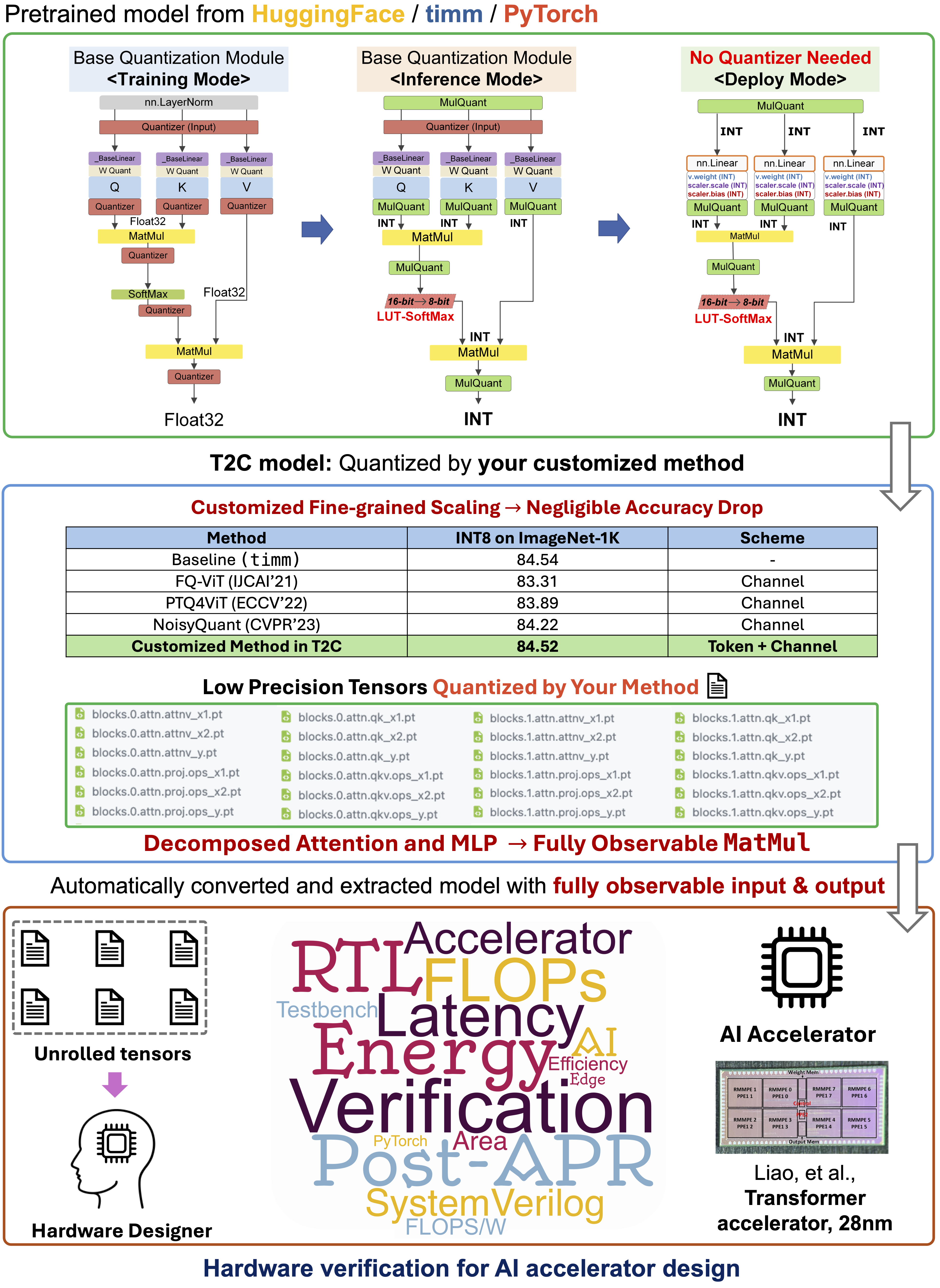

Torch2Chip is a toolkit that enables customized model compression (e.g., quantization) with full-stack observability for custom hardware designers. Starting from user-customized compression algorithms, Torch2Chip addresses the low-level needs of customized AI hardware designers:

- [Model and Modules]: Unlike open-sourced quantization algorithms, Torch2Chip does not require users to write their own customized modules or even model files (e.g., `resnet.py`).
- [Customize]: Users only need to implement their own algorithm by following the proposed "dual-path" design. Torch2Chip takes care of the remaining steps.
- [Decompose & Extract]: Torch2Chip decomposes the entire model down to basic operations (e.g., `matmul`), whose inputs are compressed by the user-customized algorithm.
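One way to picture the "dual-path" idea is the stdlib-only Python sketch below. All names in it (`DualPathQuantizer`, `MinMaxQuantizer`, `calibrate`, the `training` flag) are hypothetical and do not reproduce Torch2Chip's actual classes. The user supplies only how the scale is chosen; the shared base class provides a training path that returns discretized floats and a deployment path that returns the integer codes to be extracted.

```python
class DualPathQuantizer:
    """Hypothetical base class: one quantization rule, two execution paths."""

    def __init__(self, n_bits):
        self.qmin = -(2 ** (n_bits - 1))
        self.qmax = 2 ** (n_bits - 1) - 1
        self.scale = 1.0
        self.training = True  # True: training path, False: deployment path

    def calibrate(self, values):
        """User-defined part: choose self.scale from sample data."""
        raise NotImplementedError

    def to_int(self, values):
        """Shared part: round-to-nearest, then clamp to the signed integer range."""
        return [max(self.qmin, min(self.qmax, round(v / self.scale))) for v in values]

    def __call__(self, values):
        codes = self.to_int(values)
        if self.training:
            # Training path: dequantized ("discretized") floats for the FP model.
            return [q * self.scale for q in codes]
        # Deployment path: integer codes for the integer-only model.
        return codes


class MinMaxQuantizer(DualPathQuantizer):
    """Example user algorithm: symmetric min-max scaling."""

    def calibrate(self, values):
        max_abs = max(abs(v) for v in values)
        self.scale = max_abs / self.qmax if max_abs > 0 else 1.0


quantizer = MinMaxQuantizer(n_bits=4)
x = [0.7, -0.3, 0.1]
quantizer.calibrate(x)
print(quantizer(x))  # training path: floats on the 4-bit grid
quantizer.training = False
print(quantizer(x))  # deployment path: integer codes
```

Because both paths share `to_int`, the integers seen at deployment are exactly the codes behind the floats seen during training.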

Each model checkpoint is packed with the tensors extracted from the basic operations (e.g., MatMul, Conv).

```
# Downloaded file
vit_small_lsq_adaround.tar.gz
|
--ptq
  |
  --[quantization method]
    |
    --checkpoint.pth.tar
    |
    --/t2c/
      |
      ----/tensors/
      ----t2c_model.pth.tar
```

The pre-trained checkpoint contains both the model file and all the extracted tensors.

- The `[quantization method]` folder is named after the activation and weight quantization methods selected by the user (e.g., `lsq_minmax_channel`).
- `checkpoint.pth.tar` contains the model quantized directly via the PTQ method with fake quantization.
- The `/t2c/` folder contains 1) the integer-only model after conversion and 2) the tensors extracted from the basic operations.
- The `t2c` folder contains the intermediate input and output tensors of the basic operations with batch size = 1. E.g., `blocks.0.attn.qk_x1.pt` is the low-precision input (query) of the `MatMul` operation between query and key.
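Since every extracted tensor is stored as its own file, a practical first step is to index the files per operation. The stdlib-only sketch below assumes, hypothetically, that every file under `/t2c/tensors/` follows the `<operation path>_<tensor tag>.pt` pattern suggested by `blocks.0.attn.qk_x1.pt`; the function `index_tensors` is illustrative, and the tags used for other tensors may differ in practice.

```python
from collections import defaultdict
from pathlib import Path


def index_tensors(tensor_dir):
    """Group extracted tensor files by the operation they belong to.

    Assumes (hypothetically) file names of the form
    '<operation path>_<tensor tag>.pt', e.g. 'blocks.0.attn.qk_x1.pt'.
    Returns {operation path: {tensor tag: Path}}.
    """
    groups = defaultdict(dict)
    for path in sorted(Path(tensor_dir).glob("*.pt")):
        # 'blocks.0.attn.qk_x1' -> ('blocks.0.attn.qk', '_', 'x1')
        op_name, _, tag = path.stem.rpartition("_")
        if not op_name:  # no '_' in the name: treat the whole stem as the operation
            op_name, tag = tag, ""
        groups[op_name][tag] = path
    return dict(groups)
```

Under that naming assumption, `torch.load(index_tensors(tensor_dir)["blocks.0.attn.qk"]["x1"])` would load the query input of the query-key `MatMul`.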

| Model | Pre-trained By | MinMaxChannel + LSQ | AdaRound + LSQ | MinMaxChannel + QDrop | MinMaxChannel + LSQToken | MinMaxChannel + MinMaxToken | MinMaxChannel + QDropToken |
|---|---|---|---|---|---|---|---|
| ResNet-50 | torchvision | 76.16 (link) | 76.12 (link) | 76.18 (link) | N/A | N/A | N/A |
| ResNet-34 | torchvision | 73.39 (link) | 73.38 (link) | 73.43 (link) | N/A | N/A | N/A |
| ResNet-18 | torchvision | 69.84 (link) | 69.80 (link) | 69.76 (link) | N/A | N/A | N/A |
| VGG16-BN | torchvision | 73.38 (link) | 73.40 (link) | 73.39 (link) | N/A | N/A | N/A |
| MobileNet-V1 | t2c (link) | 71.21 (link) | 69.87 (link) | 71.13 (link) | N/A | N/A | N/A |
| vit_tiny_patch16_224 | timm | 72.79 (link) | 72.65 (link) | 72.41 (link) | 72.49 (link) | 73.27 (link) | 73.00 (link) |
| vit_small_patch16_224 | timm | 81.05 (link) | 81.02 (link) | 80.89 (link) | 80.21 (link) | 80.04 (link) | 80.22 (link) |
| vit_base_patch16_224 | timm | 84.87 (link) | 84.62 (link) | 84.50 (link) | 84.68 (link) | 83.86 (link) | 84.53 (link) |
| swin_tiny_patch4_window7_224 | timm | 80.83 (link) | 80.76 (link) | 80.71 (link) | 80.30 (link) | 80.74 (link) | 80.10 (link) |
| swin_base_patch4_window7_224 | timm | 84.73 (link) | 84.62 (link) | 84.65 (link) | 84.27 (link) | 84.58 (link) | 84.32 (link) |
| BERT-Base-SST2 | HuggingFace | 0.922 (link) |

[Coming soon!]

Members of Seo Lab @ Cornell University led by Professor Jae-sun Seo.

Jian Meng, Yuan Liao, Anupreetham, Ahmed Hasssan, Shixing Yu, Han-sok Suh, Xiaofeng Hu, and Jae-sun Seo.

Publication: Torch2Chip: An End-to-end Customizable Deep Neural Network Compression and Deployment Toolkit for Prototype Hardware Accelerator Design (Meng et al., MLSys, 2024).

This work was supported in part by Samsung Electronics and the Center for the Co-Design of Cognitive Systems (CoCoSys) in JUMP 2.0, a Semiconductor Research Corporation (SRC) Program sponsored by the Defense Advanced Research Projects Agency (DARPA).