Welcome to the preview of the Media Insights Engine (MIE) project!

MIE is a serverless framework to accelerate the development of applications that discover next-generation insights in your video, audio, text, and image resources by utilizing AWS Machine Learning services. MIE lets builders:

- Create media analysis workflows from a library of base operations built on AWS Machine Learning and Media Services such as Amazon Rekognition, Amazon Transcribe, Amazon Translate, Amazon Comprehend, Amazon Polly, and AWS Elemental MediaConvert.

- Execute workflows and store the resulting media and analysis for later use.

- Query analysis extracted from media.

- Interactively explore some of the capabilities of MIE using the included content analysis and search web application.

- Extend MIE for new applications by adding custom operators and custom data stores.

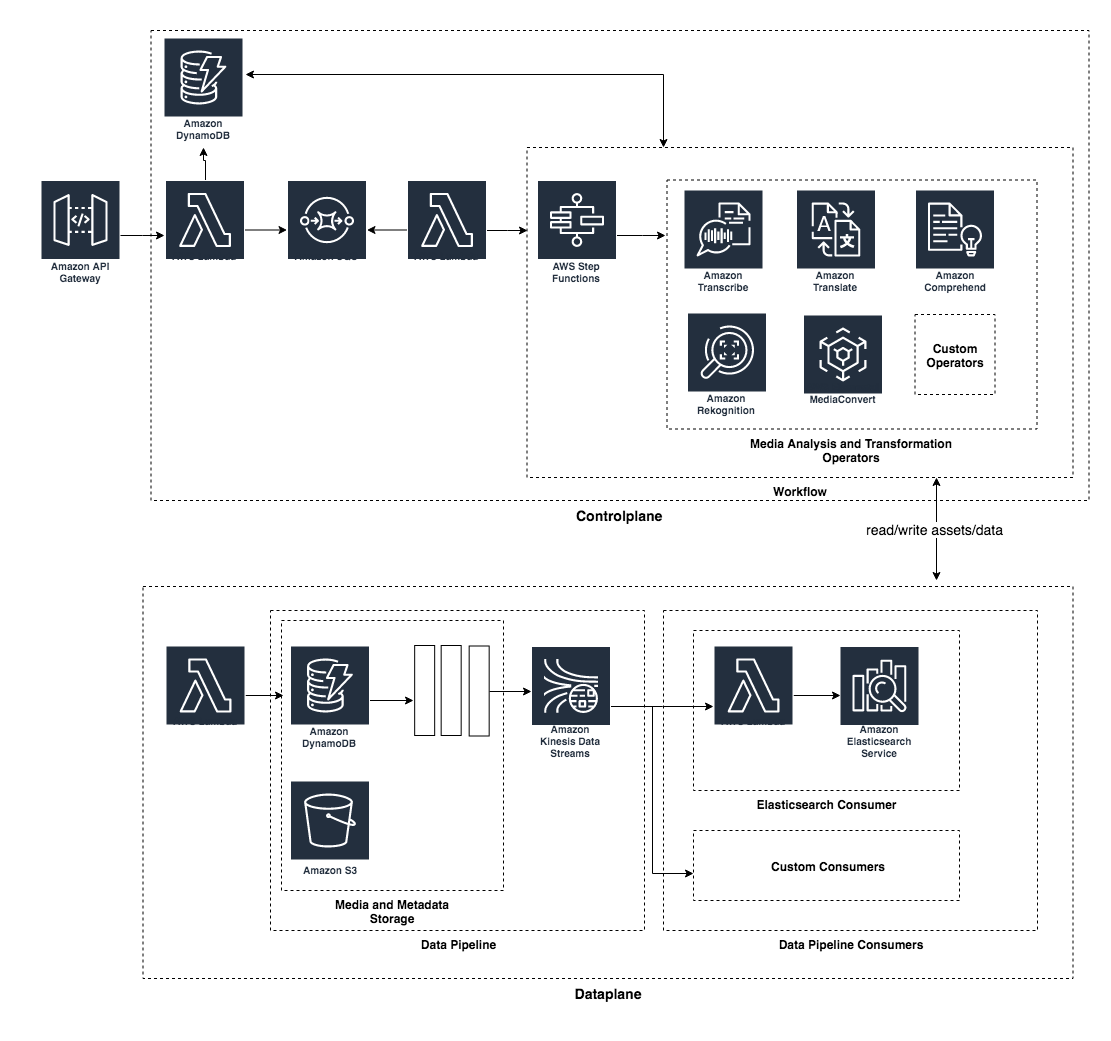

Media Insights Engine is a serverless architecture on AWS. The following diagram is an overview of the major components of MIE and how they interact when an MIE workflow is executed.

Triggers the execution of a workflow. Also triggers the creation, update, and deletion of workflows and operators, and monitors the status of workflows.

Executes the AWS Step Functions state machine for the workflow against the provided input. Workflow state machines are generated from MIE operators. As operators within the state machine are executed, they interact with the MIE data plane to store and retrieve derived assets and metadata generated by the workflow.

Generated state machines that perform media analysis or transformation operations.

Generated state machines that execute a number of operators in sequence.
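As a rough illustration of how operators can be chained in sequence, the sketch below builds an Amazon States Language definition from an ordered list of operators. The function name `build_sequential_workflow`, the operator names, and the placeholder Lambda ARNs are assumptions made for this example; it is not MIE's own generator, which may produce a different structure.

```python
import json


def build_sequential_workflow(operators):
    """Chain (name, resource_arn) pairs into one Amazon States Language definition."""
    if not operators:
        raise ValueError("a workflow needs at least one operator")

    states = {}
    for index, (name, resource_arn) in enumerate(operators):
        state = {"Type": "Task", "Resource": resource_arn}
        if index + 1 < len(operators):
            # Hand off to the next operator in the list.
            state["Next"] = operators[index + 1][0]
        else:
            # The last operator terminates the state machine.
            state["End"] = True
        states[name] = state

    return {"StartAt": operators[0][0], "States": states}


# Hypothetical operator names and placeholder ARNs, for illustration only.
definition = build_sequential_workflow([
    ("ProxyEncode", "arn:aws:lambda:us-east-1:123456789012:function:proxy-encode"),
    ("Transcribe", "arn:aws:lambda:us-east-1:123456789012:function:transcribe"),
    ("Translate", "arn:aws:lambda:us-east-1:123456789012:function:translate"),
])
print(json.dumps(definition, indent=2))
```

Each operator becomes a `Task` state, and only the final state carries `"End": true`, which is what makes the chain run strictly in order.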

Stores media assets and their associated metadata that are generated by workflows.

Triggers the creation, update, deletion, and retrieval of media assets and their associated metadata.

Stores metadata for an asset that can be retrieved as a single block or as pages of data using the object's AssetId and metadata type. Writing data to the pipeline triggers a copy of the data to be stored in a Kinesis stream.
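To make the "single block or pages" retrieval model concrete, here is a minimal in-memory sketch keyed by asset ID and metadata type. The class `MetadataStore`, its method names, and the integer cursor are all hypothetical and chosen for illustration; they are not the MIE data plane API.

```python
class MetadataStore:
    """Toy store that keeps metadata pages per (asset_id, metadata_type) key."""

    def __init__(self):
        self._pages = {}

    def put_page(self, asset_id, metadata_type, page):
        # Append one page of results for this asset and metadata type.
        self._pages.setdefault((asset_id, metadata_type), []).append(page)

    def get_all(self, asset_id, metadata_type):
        # Retrieve everything as a single block.
        return list(self._pages.get((asset_id, metadata_type), []))

    def get_page(self, asset_id, metadata_type, cursor=0):
        # Retrieve one page plus the cursor for the next page (None when done).
        pages = self._pages.get((asset_id, metadata_type), [])
        if cursor >= len(pages):
            return None, None
        next_cursor = cursor + 1 if cursor + 1 < len(pages) else None
        return pages[cursor], next_cursor


# Hypothetical asset ID and metadata type.
store = MetadataStore()
store.put_page("asset-123", "labels", {"Labels": ["Dog", "Park"]})
store.put_page("asset-123", "labels", {"Labels": ["Ball"]})

first_page, cursor = store.get_page("asset-123", "labels")
second_page, end_cursor = store.get_page("asset-123", "labels", cursor)
print(first_page, second_page, end_cursor)
```

A caller that wants everything at once uses `get_all`, while a caller handling large results follows the cursor until it is `None`.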

A Lambda function that consumes data from the data plane pipeline and stores it (or acts on it) in another downstream data store. Data can be stored in different kinds of data stores to fit the data management and query needs of the application. There can be zero or more pipeline consumers in an MIE application.
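A pipeline consumer can be sketched as a Lambda handler that decodes the base64-encoded records Lambda delivers from a Kinesis stream and passes them to a downstream store. In the sketch below, the payload fields (`AssetId`, `Operator`) and the in-memory `DOWNSTREAM_STORE` list are assumptions standing in for whatever record format and data store a real consumer would use.

```python
import base64
import json

# Stand-in for a real downstream data store (assumption for this sketch).
DOWNSTREAM_STORE = []


def decode_kinesis_records(event):
    """Decode the JSON payloads carried by a Lambda Kinesis event."""
    items = []
    for record in event.get("Records", []):
        # Lambda delivers Kinesis record data as a base64-encoded string.
        payload = base64.b64decode(record["kinesis"]["data"])
        items.append(json.loads(payload))
    return items


def lambda_handler(event, context):
    """Store every decoded record and report how many were handled."""
    items = decode_kinesis_records(event)
    DOWNSTREAM_STORE.extend(items)
    return {"stored": len(items)}


# Local usage example with a hand-built event; the payload fields are hypothetical.
sample_payload = {"AssetId": "asset-123", "Operator": "labels"}
sample_event = {
    "Records": [
        {"kinesis": {"data": base64.b64encode(json.dumps(sample_payload).encode()).decode()}}
    ]
}
result = lambda_handler(sample_event, None)
print(result)
```

Keeping the decoding in its own function makes it easy to test the consumer locally before attaching it to a stream.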

Deploy the demo architecture and application in your AWS account and start exploring your media.

| Region | Launch |
|---|---|
| US East (N. Virginia) |  |

The Media Insights sample application lets you upload videos, images, audio, and text files for content analysis and add the results to a collection that can be searched to find media with the attributes you are looking for. It runs an MIE workflow that extracts insights using many of the ML content analysis services available on AWS and stores them in a search engine for easy exploration. A web-based GUI is used to search and visualize the resulting data alongside the input media. The analysis and transformations included in the MIE workflow for this application include:

- Proxy encode of videos and separation of video and audio tracks using AWS Elemental MediaConvert.

- Object, scene, and activity detection in images and video using Amazon Rekognition.

- Celebrity detection in images and video using Amazon Rekognition.

- Face search from a collection of known faces in images and video using Amazon Rekognition.

- Facial analysis in images and video using Amazon Rekognition to detect faces and facial attributes such as happiness, age range, eyes open, glasses, and facial hair. In video, you can also measure how these attributes change over time, for example to construct a timeline of the emotions expressed by an actor.

- Unsafe content detection using Amazon Rekognition. Identify potentially unsafe or inappropriate content across both image and video assets.

- Convert speech to text from audio and video assets using Amazon Transcribe.

- Convert text from one language to another using Amazon Translate.

- Identify entities in text using Amazon Comprehend.

- Identify key phrases in text using Amazon Comprehend.
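The emotion timeline mentioned in the facial analysis item can be sketched as a small post-processing step. The function below assumes input shaped like the `Faces` list returned by Amazon Rekognition video face detection (a `Timestamp` in milliseconds and a `Face.Emotions` list of `Type` and `Confidence` pairs); the function name, the confidence threshold, and the sample data are made up for illustration.

```python
def emotion_timeline(faces, min_confidence=50.0):
    """Return (timestamp_ms, emotion) pairs using the top emotion per detection."""
    timeline = []
    for detection in faces:
        emotions = detection["Face"].get("Emotions", [])
        if not emotions:
            continue
        # Keep only the most confident emotion for this detection.
        top = max(emotions, key=lambda emotion: emotion["Confidence"])
        if top["Confidence"] >= min_confidence:
            timeline.append((detection["Timestamp"], top["Type"]))
    return sorted(timeline)


# Made-up sample detections, not real analysis output.
sample_faces = [
    {"Timestamp": 2000, "Face": {"Emotions": [
        {"Type": "SAD", "Confidence": 71.0}, {"Type": "CALM", "Confidence": 20.0}]}},
    {"Timestamp": 0, "Face": {"Emotions": [
        {"Type": "HAPPY", "Confidence": 93.5}, {"Type": "CALM", "Confidence": 4.1}]}},
    {"Timestamp": 1000, "Face": {"Emotions": [
        {"Type": "CONFUSED", "Confidence": 30.0}]}},
]
timeline = emotion_timeline(sample_faces)
print(timeline)
```

Detections below the threshold are dropped rather than guessed at, so gaps in the timeline indicate frames where no emotion was detected with enough confidence.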

Data are stored in Amazon Elasticsearch Service and can be retrieved using Lucene queries in the Collection view search page.
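For readers who want to run the same kind of Lucene query outside the web application, the sketch below wraps a Lucene query string in an Elasticsearch `query_string` search body. The field names in the example query (`Operator`, `Name`, `Confidence`) are assumptions about how analysis results might be indexed, not a documented MIE schema.

```python
import json


def lucene_search_body(lucene_query, size=10):
    """Wrap a Lucene query string in an Elasticsearch search request body."""
    return {
        "size": size,
        "query": {"query_string": {"query": lucene_query}},
    }


# Hypothetical field names; adjust them to match the fields in your index.
body = lucene_search_body('Operator:"labels" AND Name:"Dog" AND Confidence:>=80')
print(json.dumps(body, indent=2))
```

The resulting dictionary can be sent as the JSON body of a `_search` request to the Elasticsearch domain.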

MIE is a reusable architecture that can support many different applications. Examples:

- Content analysis and search - Detect objects, people, celebrities, and sensitive content; transcribe audio; and detect entities, relationships, and sentiment. Explore and analyze media using full-featured search and advanced data visualization. This use case is implemented in the included sample application.

- Automatic Transcribe and Translate - Generate captions for Video On Demand content using speech recognition.

- Content Moderation - Detect and edit moderated content from videos.

The Media Insights Engine is built to be extended for new use cases. You can:

- Run existing workflows using custom runtime configurations.

- Create new operators for new types of analysis or transformations of your media.

- Create new workflows using the existing operators and/or your own operators.

- Add new data consumers to provide data management that suits the needs of your application.

See the Developer Guide for more information on extending the application for a custom use case.

API Reference - Coming soon!

Builder's guide - Coming soon!

Visit the Issues page in this repository for known issues and feature requests.

See the CONTRIBUTING file for how to contribute.

See the LICENSE file for our project's licensing.

Copyright 2019 Amazon.com, Inc. or its affiliates. All Rights Reserved.

Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.