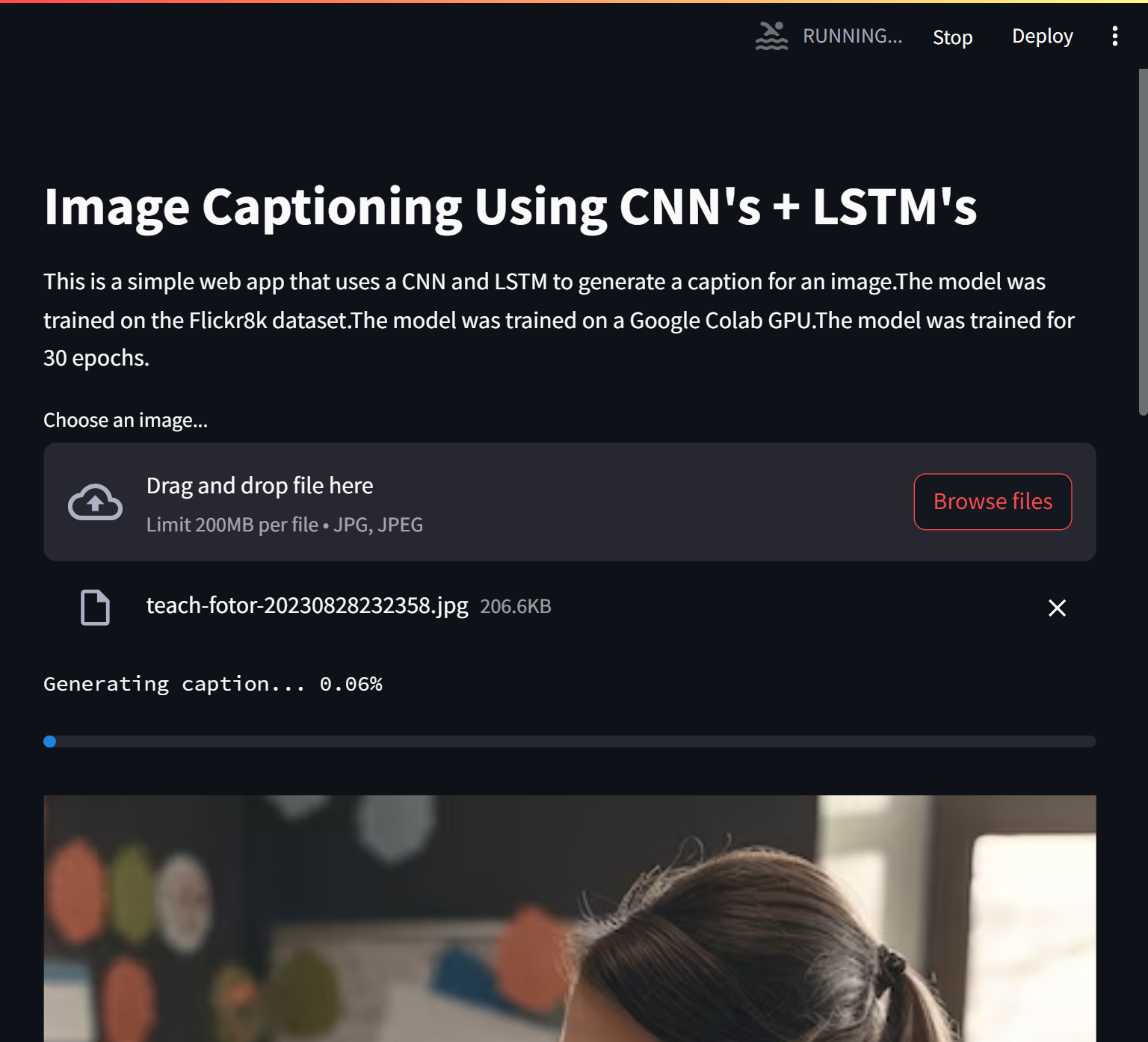

This project uses a DenseNet201 convolutional neural network (CNN) and an LSTM to generate captions for images. The model is trained on the Flickr8k dataset for 20 epochs on a GPU and then tested on a few images from the test set. It is able to generalize well and generate captions for images it has never seen before.

The project uses the Flickr8k dataset, which contains 8,000 images with 5 captions each. The images are split into 6,000 for training, 1,000 for validation and 1,000 for testing. The captions are cleaned and preprocessed before the model is trained.
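
The exact cleaning steps are not listed here. A common approach for Flickr8k captions, assumed in the sketch below, is to lowercase the text, strip non-alphabetic characters, drop single-character words, and wrap each caption in start and end markers (`startseq` and `endseq` are assumed marker names). The helpers `clean_caption` and `build_vocabulary` are hypothetical illustrations, not the project's own code.

```python
import re


def clean_caption(caption: str) -> str:
    """Normalize one caption and wrap it in start/end markers (assumed preprocessing)."""
    text = caption.lower()
    # Replace anything that is not a lowercase letter or whitespace with a space.
    text = re.sub(r"[^a-z\s]", " ", text)
    # Keep words longer than one character; split() also collapses extra whitespace.
    words = [w for w in text.split() if len(w) > 1]
    return "startseq " + " ".join(words) + " endseq"


def build_vocabulary(captions: list[str]) -> dict[str, int]:
    """Map each word to an integer index, reserving 0 for padding."""
    vocab: dict[str, int] = {}
    for caption in captions:
        for word in caption.split():
            if word not in vocab:
                vocab[word] = len(vocab) + 1
    return vocab


print(clean_caption("A child in a pink dress is climbing up a set of stairs."))
# → startseq child in pink dress is climbing up set of stairs endseq
```

Dropping one-letter words such as "a" shrinks the vocabulary slightly at the cost of less fluent captions; whether the project does this is an assumption.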

The model has three main parts:

- DenseNet201: The DenseNet201 model, pretrained on the ImageNet dataset, is used as a feature extractor with its last layer removed, producing a feature vector for each image.
- Data Generation: This part generates the data for training. The DenseNet201 model extracts features from the images, and these features are then used to train the LSTM model.
- LSTM: The LSTM model generates captions for the images and is trained on the features extracted from them.

The DenseNet201 model, pretrained on the ImageNet dataset, is used as a feature extractor: its last layer is removed and the remaining network extracts features from the images. Any other pretrained architecture could also be used to extract these features. Since the Global Average Pooling layer is selected as the final layer of DenseNet201 for feature extraction, each image embedding is a vector of size 1920.
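
Global average pooling collapses the final convolutional feature map into one vector by averaging each channel over all spatial positions, so the embedding length equals the number of channels (1920 for DenseNet201). The pure-Python sketch below illustrates only the pooling arithmetic, assuming a feature map stored as nested lists of shape height × width × channels; in the project this step is done by the network's pooling layer, and `global_average_pool` is a hypothetical helper.

```python
def global_average_pool(feature_map: list[list[list[float]]]) -> list[float]:
    """Average each channel over all spatial positions of an H x W x C feature map."""
    height = len(feature_map)
    width = len(feature_map[0])
    channels = len(feature_map[0][0])
    sums = [0.0] * channels
    for row in feature_map:
        for pixel in row:
            for c, value in enumerate(pixel):
                sums[c] += value
    # Divide each channel's total by the number of spatial positions.
    return [s / (height * width) for s in sums]


# Dummy 7 x 7 x 1920 map, the shape DenseNet201's last convolutional block
# produces for a 224 x 224 input; every value in channel c is set to c.
dummy = [[[float(c) for c in range(1920)] for _ in range(7)] for _ in range(7)]
embedding = global_average_pool(dummy)
print(len(embedding))  # → 1920
```

Because pooling discards the 7 × 7 spatial layout, the embedding size depends only on the channel count, not on the input resolution.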

Training an image captioning model, like training any other neural network, is resource-intensive, so the data cannot be loaded into main memory all at once; instead, it is generated batch by batch in the required format. During training, the inputs are the image embeddings and their corresponding caption text embeddings. During inference, the text embeddings are passed word by word to generate the caption.
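
The generator itself is not shown here. One common way to build such batches, sketched below as an assumption, is to expand each encoded caption into (image embedding, partial caption, next word) triples, left-pad each partial caption with 0 to a fixed length, and yield fixed-size batches. The function `data_generator`, its parameters and the toy data are hypothetical; a real pipeline would typically use NumPy arrays and loop over the data once per epoch.

```python
from typing import Dict, Iterator, List, Tuple

# (image embeddings, padded partial captions, next-word indices)
Batch = Tuple[List[List[float]], List[List[int]], List[int]]


def data_generator(
    captions: Dict[str, List[List[int]]],
    features: Dict[str, List[float]],
    max_length: int,
    batch_size: int,
) -> Iterator[Batch]:
    """Yield training batches in a single pass over the data (hypothetical sketch)."""
    images, sequences, targets = [], [], []
    for image_id, encoded_captions in captions.items():
        for seq in encoded_captions:
            # Each prefix of the caption is used to predict the word that follows it.
            for i in range(1, len(seq)):
                prefix = seq[:i][-max_length:]
                # Left-pad with 0 (the reserved padding index) to a fixed length.
                padded = [0] * (max_length - len(prefix)) + prefix
                images.append(features[image_id])
                sequences.append(padded)
                targets.append(seq[i])
                if len(targets) == batch_size:
                    yield images, sequences, targets
                    images, sequences, targets = [], [], []
    if targets:
        yield images, sequences, targets  # final, possibly smaller batch


# Toy data: a 2-value embedding stands in for the 1920-value one;
# 1 and 2 stand in for the start and end markers.
features = {"img1": [0.1, 0.2]}
captions = {"img1": [[1, 5, 7, 2]]}
batches = list(data_generator(captions, features, max_length=3, batch_size=2))
```

Only one batch of triples is held in memory at a time, which is the point of generating the data batch-wise.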

The LSTM model generates the captions and is trained on the features extracted from the images. The 1920-dimensional image embedding is concatenated with the first word of the sentence, i.e. `startseq`, and passed to the LSTM network. The LSTM then generates a word after each input, forming a complete sentence at the end.
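
The word-by-word generation described above can be sketched as a greedy decoding loop: starting from the start marker, repeatedly ask the model for next-word probabilities, append the most likely word, and stop at an end marker or a length limit. In this sketch, `predict_next` is a hypothetical stand-in for the trained LSTM model, and the `endseq` marker and `max_length=20` default are assumptions; the project may decode differently.

```python
from typing import Callable, Dict, List, Sequence


def greedy_caption(
    image_embedding: Sequence[float],
    predict_next: Callable[[Sequence[float], List[str]], Dict[str, float]],
    max_length: int = 20,  # assumed limit on generated words
    start: str = "startseq",
    end: str = "endseq",  # assumed end marker
) -> str:
    """Build a caption word by word, always taking the most probable next word."""
    words = [start]
    for _ in range(max_length):
        probs = predict_next(image_embedding, words)
        next_word = max(probs, key=lambda w: probs[w])
        if next_word == end:
            break
        words.append(next_word)
    return " ".join(words[1:])  # drop the start marker


# Toy stand-in for the trained model: follows a fixed script and ignores the image.
SCRIPT = {"startseq": "dog", "dog": "runs", "runs": "endseq"}


def toy_predict(_embedding: Sequence[float], words: List[str]) -> Dict[str, float]:
    probs = {"dog": 0.0, "runs": 0.0, "endseq": 0.0}
    probs[SCRIPT[words[-1]]] = 1.0
    return probs


print(greedy_caption([0.0] * 1920, toy_predict))  # → dog runs
```

The `max_length` cap guarantees termination even if the model never predicts the end marker.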

- Clone the repository:

  `git clone https://github.com/Sarath191181208/image_captioning.git`

- Install the requirements:

  `pip install -r requirements.in`

- Run the project:

  `python -m streamlit run main.py`

This project is licensed under the MIT License. Please see the LICENSE file for more details.