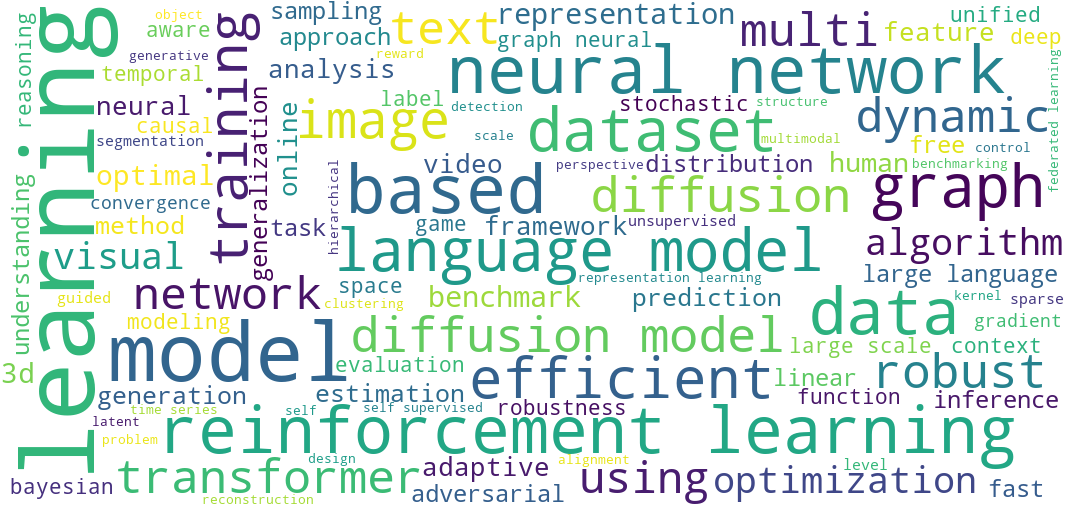

Caption: Wordcloud of all NeurIPS 2023 titles


Welcome to the hub for all things NeurIPS 2023! We scraped the data for all 3500+ NeurIPS projects and dove into the depths of Hugging Face, GitHub, LinkedIn, and arXiv to pick out the most interesting content.

In this repo, you will find:

- Data Analysis: detailed analysis of the titles and abstracts from NeurIPS 2023 accepted papers

- Awesome Projects: synthesized collection of 40 NeurIPS 2023 papers you won't want to miss

- Conference Schedule: comprehensive listing of all NeurIPS 2023 projects (title, authors, abstract) organized by poster session and sorted alphabetically

The raw data is included in this repo. If you have ideas for other interesting analyses, feel free to create an issue or submit a PR!

For now, insights are organized into the following categories:

- Authors

- Titles

- Abstracts

For the data analysis itself, check out the Jupyter Notebook!

The 10 authors with the most papers at NeurIPS 2023 are:

- Bo Li: 15 papers

- Ludwig Schmidt: 14 papers

- Bo Han: 13 papers

- Mihaela van der Schaar: 13 papers

- Hao Wang: 12 papers

- Dacheng Tao: 11 papers

- Bernhard Schölkopf: 11 papers

- Masashi Sugiyama: 11 papers

- Andreas Krause: 11 papers

- Tongliang Liu: 11 papers
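A ranking like the one above can be sketched with a simple counter. This is a minimal illustration, not the notebook's actual code: the record layout (a list of dicts with `title` and `authors` keys) and the function name `top_authors` are assumptions, and the names in the toy data are made up.

```python
from collections import Counter

def top_authors(papers, n=10):
    """Return the n most frequent author names as (name, paper_count) pairs."""
    counts = Counter()
    for paper in papers:
        # set() guards against a name listed twice on the same paper
        counts.update(set(paper["authors"]))
    return counts.most_common(n)

# Toy records with made-up names, only to show the assumed input shape
papers = [
    {"title": "Paper A", "authors": ["Ada", "Grace"]},
    {"title": "Paper B", "authors": ["Ada"]},
    {"title": "Paper C", "authors": ["Ada", "Grace", "Alan"]},
]
print(top_authors(papers, n=2))
```

Counting by name string cannot tell apart different people who share a name, so totals for common names may merge several researchers.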

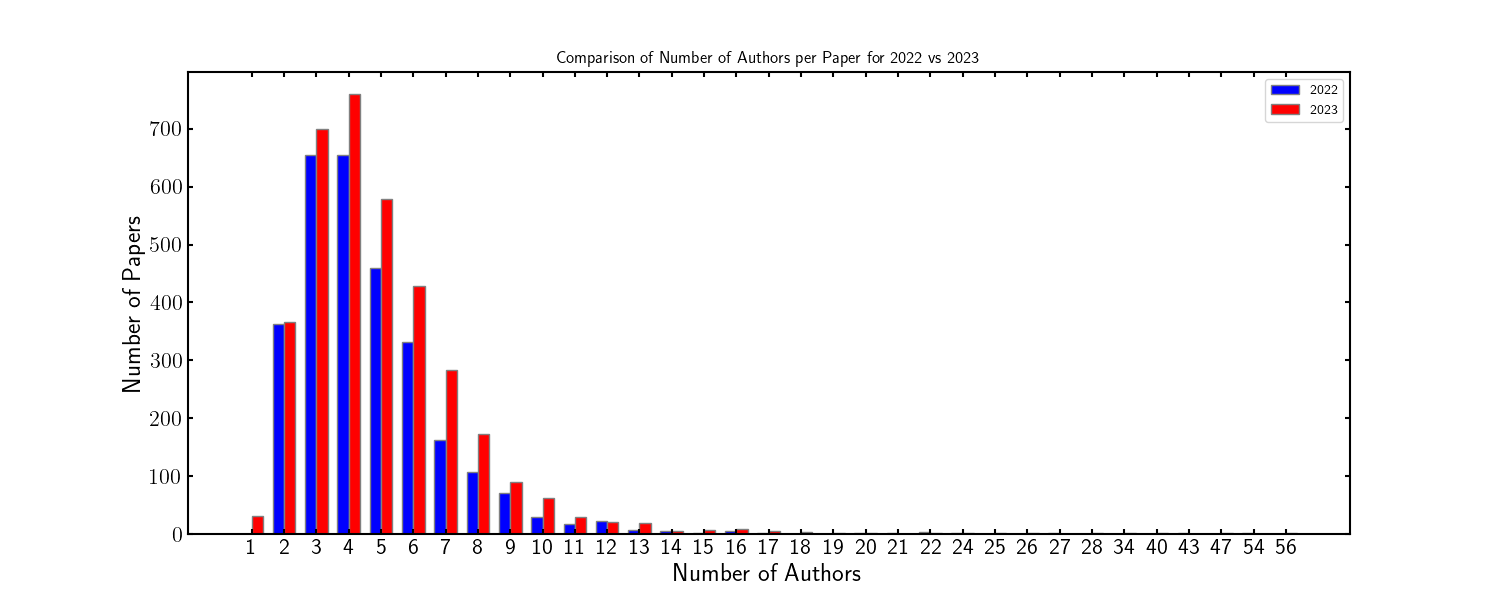

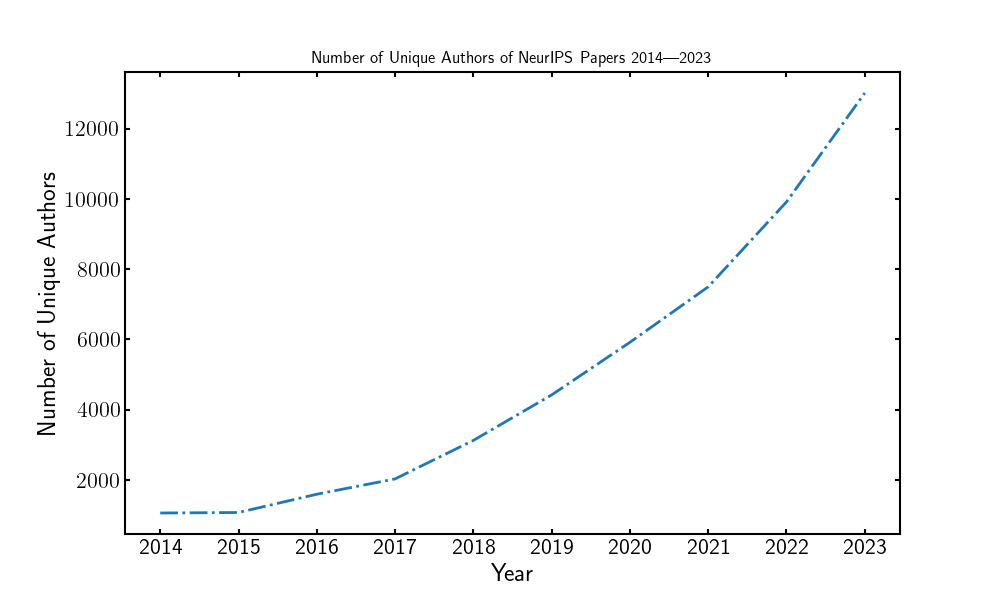

There were 13,012 unique authors at NeurIPS 2023, up from 9,913 at NeurIPS 2022, an increase of about 31%.

This continues the steep growth in unique authors over the past decade.

- The average number of authors per paper was 4.98, up from 4.66 at NeurIPS 2022.

- Additionally, there were a handful of single-author papers, in contrast to NeurIPS 2022, where the minimum number of authors was 2.

- The paper with the most authors was ClimSim: A large multi-scale dataset for hybrid physics-ML climate emulation.
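The author statistics above boil down to a few aggregations over the author lists. The sketch below assumes each paper is a dict with `title` and `authors` keys; that layout and the function name `author_stats` are hypothetical, not taken from the notebook.

```python
from statistics import mean

def author_stats(papers):
    """Summarize author counts for a list of paper records."""
    unique = {name for p in papers for name in p["authors"]}
    sizes = [len(p["authors"]) for p in papers]
    largest = max(papers, key=lambda p: len(p["authors"]))
    return {
        "unique_authors": len(unique),
        "mean_authors_per_paper": round(mean(sizes), 2),
        "single_author_papers": sum(1 for s in sizes if s == 1),
        "largest_team_title": largest["title"],
    }

# Toy records with made-up names, only to show the assumed input shape
papers = [
    {"title": "Paper A", "authors": ["Ada", "Grace"]},
    {"title": "Paper B", "authors": ["Ada"]},
    {"title": "Paper C", "authors": ["Ada", "Grace", "Alan"]},
]
print(author_stats(papers))
```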

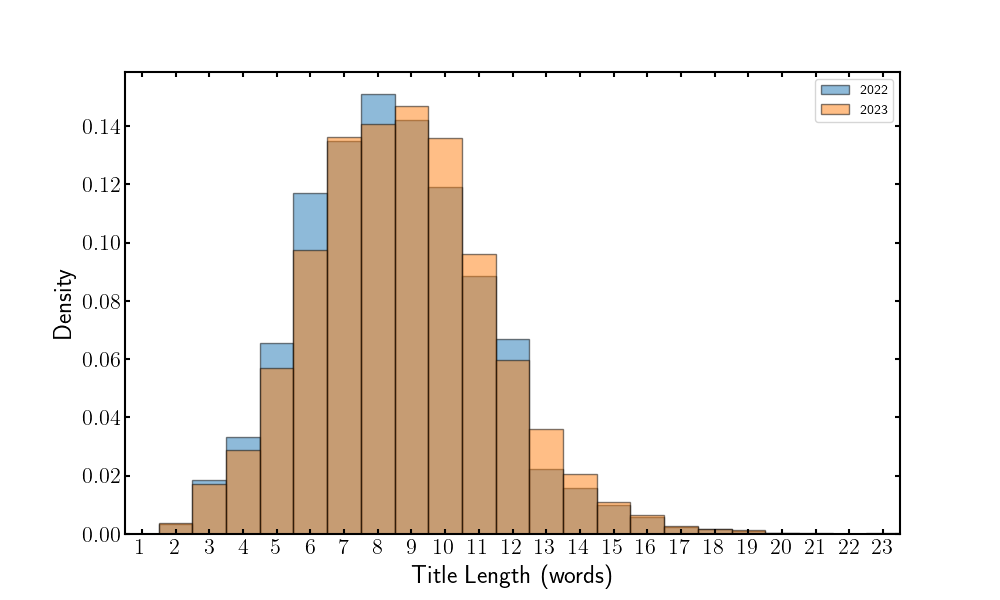

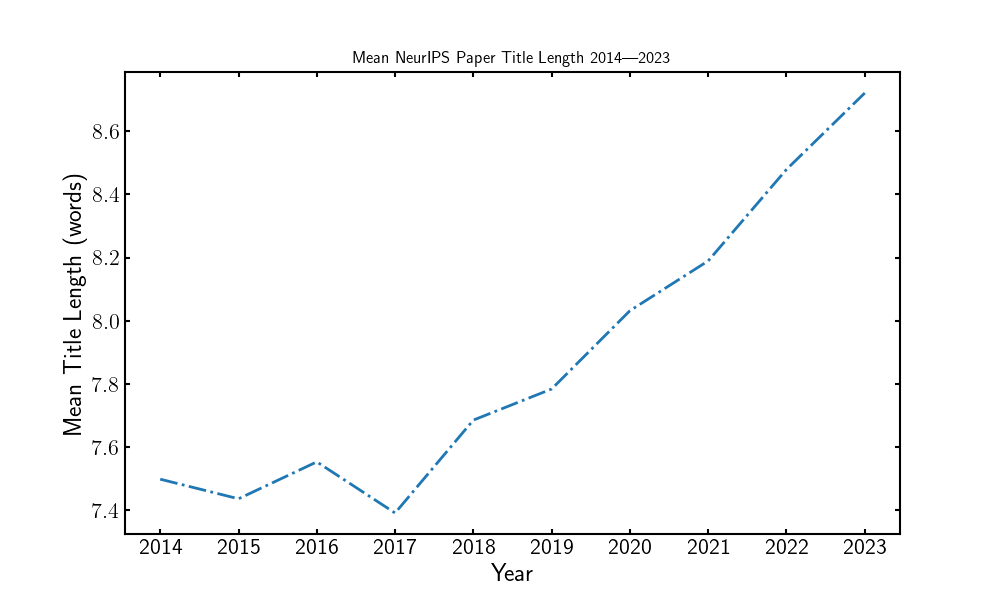

- The average title length was 8.72 words, up from 8.48 at NeurIPS 2022. This continues an ongoing trend of title lengthening.

- 22% of titles introduced an acronym, up from 18% at NeurIPS 2022.

- 1.3% of titles contained LaTeX, whereas none of the titles at NeurIPS 2022 contained LaTeX.
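Title statistics like these can be approximated with a couple of regular expressions. The heuristics here are assumptions for illustration, not necessarily the rules used in the notebook: a title "introduces an acronym" if its first token, before a colon, has at least two uppercase letters, and "contains LaTeX" if it has a `$...$` span or a backslash command.

```python
import re

FIRST_TOKEN_BEFORE_COLON = re.compile(r"^\s*(\S+)\s*:")
LATEX_MARKUP = re.compile(r"\$[^$]+\$|\\[A-Za-z]+")

def introduces_acronym(title):
    """Heuristic: 'QLoRA: ...' counts, 'Toolformer: ...' does not."""
    match = FIRST_TOKEN_BEFORE_COLON.match(title)
    if not match:
        return False
    return sum(ch.isupper() for ch in match.group(1)) >= 2

def title_stats(titles):
    """Mean word count plus the share of titles with an acronym or LaTeX."""
    n = len(titles)
    return {
        "mean_words": sum(len(t.split()) for t in titles) / n,
        "acronym_share": sum(introduces_acronym(t) for t in titles) / n,
        "latex_share": sum(bool(LATEX_MARKUP.search(t)) for t in titles) / n,
    }

titles = [
    "QLoRA: Efficient Finetuning of Quantized LLMs",
    "Visual Instruction Tuning",
    "Toolformer: Language Models Can Teach Themselves to Use Tools",
    "A made-up title with $k$ in it",  # hypothetical, to exercise the LaTeX check
]
print(title_stats(titles))
```

The uppercase-count rule is deliberately crude: it will miss lowercase acronyms and flag some capitalized product names, so treat the resulting share as a rough estimate.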

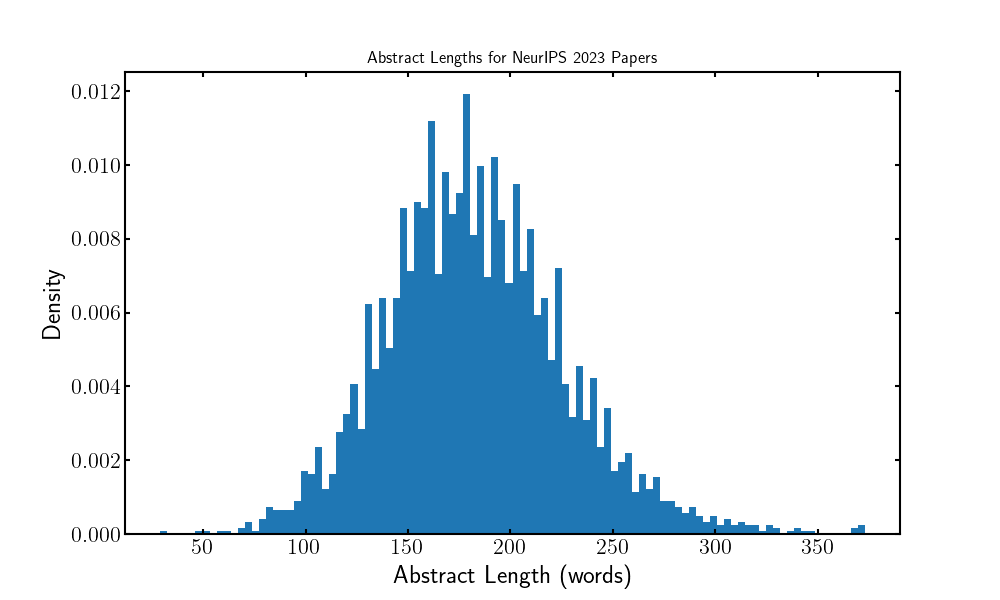

- The longest abstract was from [Re] On the Reproducibility of FairCal: Fairness Calibration for Face Verification, which has 373 words.

- The shortest abstract was from Improved Convergence in High Probability of Clipped Gradient Methods with Heavy Tailed Noise, which has 29 words.

- Out of the 3,581 abstracts, 675 explicitly mention GitHub, including a link to their code, models, or data.

- Only 79 abstracts include a URL that is not GitHub.
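The abstract statistics above can be reproduced along these lines. This is a sketch under assumptions: papers are dicts with `title` and `abstract` keys, length is measured in whitespace-separated words, and a "GitHub mention" is any case-insensitive occurrence of the string `github`. The helper names are hypothetical.

```python
import re

URL = re.compile(r"https?://\S+", re.IGNORECASE)

def word_count(text):
    return len(text.split())

def abstract_stats(papers):
    """Longest/shortest abstracts and how many abstracts link out."""
    longest = max(papers, key=lambda p: word_count(p["abstract"]))
    shortest = min(papers, key=lambda p: word_count(p["abstract"]))
    mentions_github = 0
    has_other_url = 0
    for p in papers:
        text = p["abstract"]
        mentions_github += "github" in text.lower()
        # Count the abstract once if any of its URLs points somewhere other than GitHub
        has_other_url += any("github" not in u.lower() for u in URL.findall(text))
    return {
        "longest": longest["title"],
        "shortest": shortest["title"],
        "mentions_github": mentions_github,
        "has_non_github_url": has_other_url,
    }

# Toy records with placeholder URLs, only to show the assumed input shape
papers = [
    {"title": "Paper A", "abstract": "Code is at https://github.com/example/repo."},
    {"title": "Paper B", "abstract": "See https://example.org/project for a demo."},
    {"title": "Paper C", "abstract": "We study clipping."},
]
print(abstract_stats(papers))
```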

Using a CLIP model, we zero-shot classified the modality of focus for each paper based on its abstract. The categories were ["vision", "text", "audio", "tabular", "time series", "multimodal"].

By far the biggest category was multimodal, with a count of 1,296. However, this number likely overstates the true share: CLIP may fall back on "multimodal" as a catch-all when an abstract only partially fits the other categories. The words multi-modal and multimodal only show up in 156 abstracts, and phrases like vision-language and text-to-image only appear a handful of times across the dataset.
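The scoring step behind zero-shot classification is small: embed the abstract and each candidate label in the same space, then pick the label with the highest cosine similarity. The sketch below shows only that step. It does not call CLIP; `toy_embed` is a hypothetical bag-of-words stand-in for a real text encoder, and `zero_shot_label` is an illustrative name.

```python
import math
from collections import Counter

LABELS = ["vision", "text", "audio", "tabular", "time series", "multimodal"]

def toy_embed(text):
    """Stand-in for a real text encoder: a sparse word-count vector."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def zero_shot_label(abstract, labels=LABELS, embed=toy_embed):
    """Return the best-scoring label and the full score table."""
    text_vec = embed(abstract)
    scores = {label: cosine(text_vec, embed(label)) for label in labels}
    return max(scores, key=scores.get), scores

label, scores = zero_shot_label("we forecast time series with transformers")
print(label)
```

With the toy encoder, a label can only win if its words literally appear in the abstract. A learned encoder scores semantic similarity instead, which is also why a broad label such as "multimodal" can attract abstracts that never use the word.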

Themes occurring frequently include:

- "benchmark": 730

- ("generation", "generate"): 681

- ("efficient", "efficiency"): 963

- "agent": 280

- ("llm", "large language model"): 238
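Theme counts like these can be computed by grouping keyword variants and checking each abstract for any of them. The sketch assumes the numbers are abstracts that contain a variant, rather than total occurrences, and that matching is a case-insensitive substring test. Both are assumptions about the method, and `theme_counts` is a hypothetical helper.

```python
THEMES = {
    "benchmark": ["benchmark"],
    "generation": ["generation", "generate"],
    "efficient": ["efficient", "efficiency"],
    "agent": ["agent"],
    "llm": ["llm", "large language model"],
}

def theme_counts(abstracts, themes=THEMES):
    """Count abstracts that contain at least one variant of each theme."""
    lowered = [a.lower() for a in abstracts]
    return {
        name: sum(any(v in text for v in variants) for text in lowered)
        for name, variants in themes.items()
    }

# Made-up abstracts, only to exercise the matching
abstracts = [
    "We introduce a benchmark for LLM agents.",
    "An efficient method to generate images.",
    "Large language models improve sample efficiency.",
]
print(theme_counts(abstracts))
```

Substring matching also picks up plurals such as "agents", but it can overcount when a keyword is embedded in an unrelated word.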

| Title | Paper | Code | Project Page | Hugging Face |
|---|---|---|---|---|
| An Inverse Scaling Law for CLIP Training | | | | |
| Augmenting Language Models with Long-Term Memory | | | | |
| Chameleon: Plug-and-Play Compositional Reasoning with Large Language Models | | | Project | |
| Cheap and Quick: Efficient Vision-Language Instruction Tuning for Large Language Models | | | Project | |
| DataComp: In search of the next generation of multimodal datasets | | | Project | |
| Direct Preference Optimization: Your Language Model is Secretly a Reward Model | | | | |
| DreamSim: Learning New Dimensions of Human Visual Similarity using Synthetic Data | | | Project | |
| Fine-Tuning Language Models with Just Forward Passes | | | | |
| Generating Images with Multimodal Language Models | | | Project | |
| Holistic Evaluation of Text-To-Image Models | | | Project | |
| HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face | | | | |
| ImageReward: Learning and Evaluating Human Preferences for Text-to-Image Generation | | | | |
| InstructBLIP: Towards General-purpose Vision-Language Models with Instruction Tuning | | | | |
| Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena | | | | |
| LAMM: Multi-Modal Large Language Models and Applications as AI Agents | | | Project | |
| LIMA: Less Is More for Alignment | | | | |
| LLM-Pruner: On the Structural Pruning of Large Language Models | | | | |
| LightZero: A Unified Benchmark for Monte Carlo Tree Search in General Sequential Decision Scenario | | | | |
| MVDiffusion: Enabling Holistic Multi-view Image Generation with Correspondence-Aware Diffusion | | | Project | |
| MagicBrush: A Manually Annotated Dataset for Instruction-Guided Image Editing | | | Project | |
| Mathematical Capabilities of ChatGPT | | | Project | |
| Michelangelo: Conditional 3D Shape Generation based on Shape-Image-Text Aligned Latent Representation | | | Project | |
| Motion-X: A Large-scale 3D Expressive Whole-body Human Motion Dataset | | | Project | |
| MotionGPT: Human Motion as Foreign Language | | | Project | |
| OBELICS: An Open Web-Scale Filtered Dataset of Interleaved Image-Text Documents | | | | |
| Photoswap: Personalized Subject Swapping in Images | | | Project | |
| Pick-a-Pic: An Open Dataset of User Preferences for Text-to-Image Generation | | | | |
| QLoRA: Efficient Finetuning of Quantized LLMs | | | | |
| Reflexion: Language Agents with Verbal Reinforcement Learning | | | | |
| ResShift: Efficient Diffusion Model for Image Super-resolution by Residual Shifting | | | Project | |
| Segment Anything in 3D with NeRFs | | | Project | |
| Segment Anything in High Quality | | | | |
| Segment Everything Everywhere All at Once | | | | |
| Self-Refine: Iterative Refinement with Self-Feedback | | | Project | |
| Simple and Controllable Music Generation | | | | |
| Squeeze, Recover and Relabel: Dataset Condensation at ImageNet Scale From A New Perspective | | | | |
| The RefinedWeb Dataset for Falcon LLM: Outperforming Curated Corpora with Web Data, and Web Data Only | | | | |
| Toolformer: Language Models Can Teach Themselves to Use Tools | | | | |
| Unlimiformer: Long-Range Transformers with Unlimited Length Input | | | | |
| Visual Instruction Tuning | | | Project | |

Note: GitHub automatically truncates files larger than 512 KB. To have all papers display on GitHub, we've split the file up by session.