This repository contains the source code for our work:

Stereo-LiDAR Depth Estimation with Deformable Propagation and Learned Disparity-Depth Conversion (ICRA 2024)

by Ang Li, Anning Hu, Wei Xi, Wenxian Yu and Danping Zou. Shanghai Jiao Tong University and Midea Group

If you find this code useful in your research, please cite:

    @article{li2024stereo,
      title={Stereo-LiDAR Depth Estimation with Deformable Propagation and Learned Disparity-Depth Conversion},
      author={Li, Ang and Hu, Anning and Xi, Wei and Yu, Wenxian and Zou, Danping},
      journal={arXiv preprint arXiv:2404.07545},
      year={2024}
    }

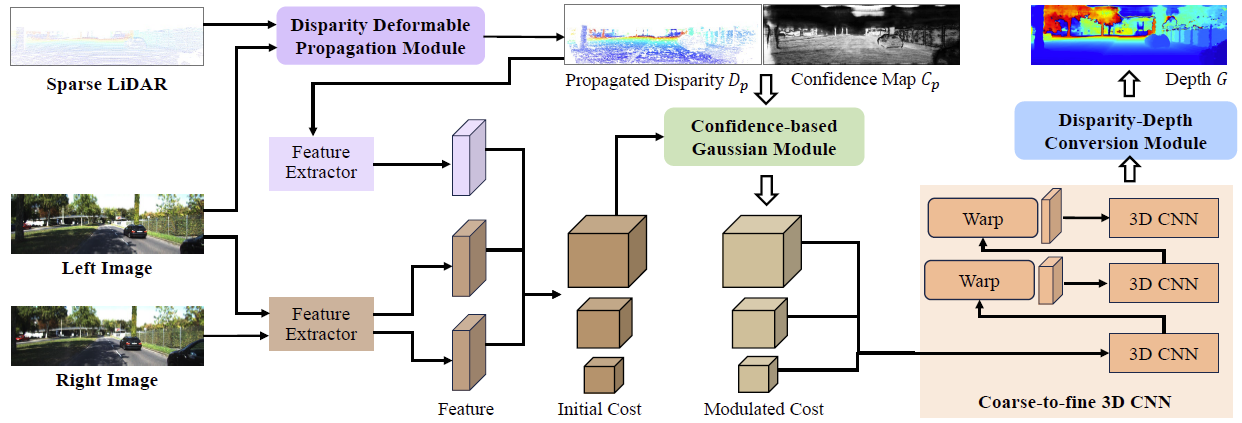

- The architecture of our network

The code has been tested with PyTorch 1.8 and CUDA 11.1.
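Once the conda environment described below is active, a quick check along these lines can show whether the installed versions match the tested ones. This is only a sketch: the helper names `same_major_minor` and `report` are hypothetical and not part of this repository, and a mismatch does not necessarily mean the code will fail.

```python
import importlib

# Versions the README states the code was tested with.
TESTED_TORCH = "1.8"
TESTED_CUDA = "11.1"


def same_major_minor(found, tested):
    """Compare only the major.minor components of two version strings."""
    if found is None:
        return False
    # Drop a local build suffix such as "+cu111" before comparing.
    return found.split("+")[0].split(".")[:2] == tested.split(".")[:2]


def report():
    """Return a one-line summary of the installed PyTorch/CUDA versions."""
    try:
        torch = importlib.import_module("torch")
    except ImportError:
        return "PyTorch is not installed in this environment"
    torch_ok = same_major_minor(torch.__version__, TESTED_TORCH)
    cuda_ok = same_major_minor(torch.version.cuda, TESTED_CUDA)
    return (
        f"torch {torch.__version__} (matches tested: {torch_ok}), "
        f"CUDA build {torch.version.cuda} (matches tested: {cuda_ok}), "
        f"GPU available: {torch.cuda.is_available()}"
    )


if __name__ == "__main__":
    print(report())
```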

    conda env create -f environment.yaml
    conda activate sdg

To evaluate/train SDG-Depth, you will need to download the required datasets.

By default, `datasets.py` searches for the datasets in the locations below. You can create symbolic links in the `datasets` folder that point to wherever the datasets were downloaded.

    ├── datasets
        ├── kitti_mono
            ├── 2011_09_26
                ├── 2011_09_26_drive_0001_sync
                    ├── image_02
                    ├── image_03
            ├── train
                ├── 2011_09_26_drive_0001_sync
                    ├── proj_depth
                        ├── groundtruth
                        ├── velodyne_raw
            ├── val
                ├── 2011_09_26_drive_0002_sync
                    ├── proj_depth
                        ├── groundtruth
                        ├── velodyne_raw
        ├── vkitti2
            ├── Scene01
                ├── 15-deg-left
                    ├── frames
                        ├── depth
                        ├── rgb
        ├── MS2
            ├── proj_depth
                ├── _2021-08-06-11-23-45
                    ├── rgb
                        ├── depth_filtered
            ├── sync_data
                ├── _2021-08-06-11-23-45
                    ├── rgb
                        ├── img_left
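The symbolic links mentioned above could be created, and the layout checked, with a short script like the one below. It is a minimal sketch under stated assumptions: `link_dataset` and `missing_dirs` are hypothetical helpers that do not exist in this repository, the download paths are placeholders, and only the three top-level dataset folders (`kitti_mono`, `vkitti2`, `MS2`) are checked, assuming they sit directly inside `datasets`.

```python
from pathlib import Path

# Top-level dataset folders named in the layout above (assumed to sit
# directly inside the datasets folder).
EXPECTED_DIRS = ["kitti_mono", "vkitti2", "MS2"]


def link_dataset(download_dir, datasets_root, name):
    """Create datasets_root/name as a symlink to download_dir (hypothetical helper)."""
    root = Path(datasets_root)
    root.mkdir(parents=True, exist_ok=True)
    link = root / name
    # Leave any existing file, directory, or link untouched.
    if not link.exists() and not link.is_symlink():
        link.symlink_to(Path(download_dir).resolve(), target_is_directory=True)
    return link


def missing_dirs(datasets_root, expected=EXPECTED_DIRS):
    """Return the expected folders that are not present under datasets_root."""
    root = Path(datasets_root)
    return [d for d in expected if not (root / d).is_dir()]


if __name__ == "__main__":
    # Placeholder path; replace with the actual download location, e.g.
    # link_dataset("/data/downloads/kitti", "datasets", "kitti_mono")
    print("Missing dataset folders:", missing_dirs("datasets"))
```

Running the script from the repository root lists any dataset folder that still needs to be downloaded or linked.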

Pretrained models can be downloaded from Google Drive.

You can run a trained model with

    bash ./scripts/eval.sh

You can train a model from scratch on KITTI Depth Completion with

    bash ./scripts/train.sh

Thanks to the excellent works RAFT-Stereo, CFNet, and EG-Depth. Our work is inspired by them, and parts of the code are adapted from RAFT-Stereo, CFNet, and EG-Depth.