Learning Efficient GANs for Image Translation via Differentiable Masks and co-Attention Distillation (Link).

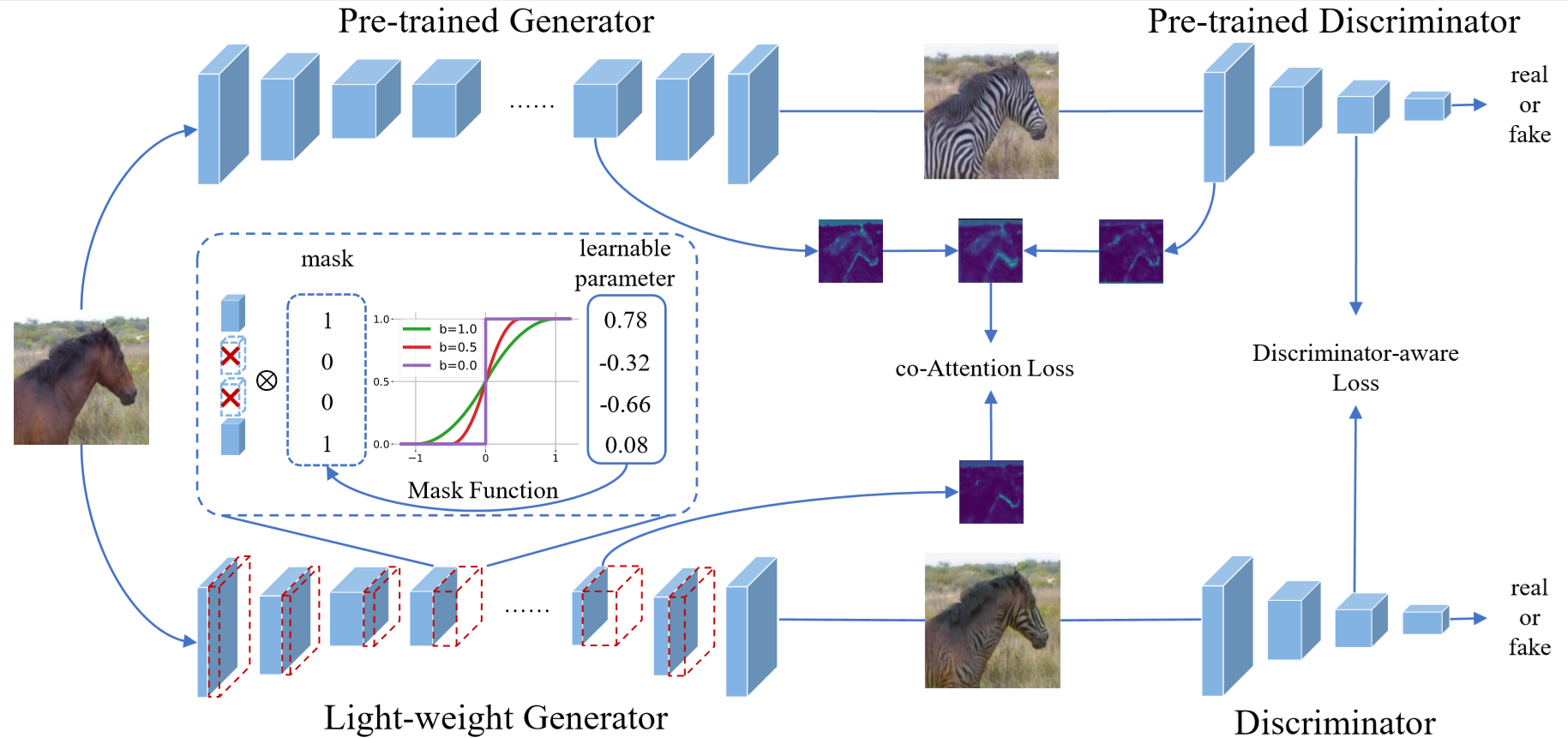

Framework of our method. We first build a pre-trained GAN-like model, on which a differentiable mask is imposed to scale the convolutional outputs of the generator and derive a light-weight generator. Then the co-Attention of the pre-trained GAN and the outputs of the last-layer convolutions of the discriminator are distilled to stabilize the training of the light-weight model.
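To make the masking idea concrete, here is a minimal sketch in plain Python (no PyTorch, so it runs anywhere). It only illustrates how a per-channel mask could scale convolutional outputs and how near-zero mask entries could mark channels to drop. The function names, the flat-list feature layout, and the `threshold` value are assumptions for illustration and are not taken from this repository.

```python
# Hypothetical illustration: names and threshold are assumptions, not this repo's API.

def apply_channel_mask(feature_maps, mask):
    """Scale each channel (a flat list of activations) by its mask weight."""
    if len(feature_maps) != len(mask):
        raise ValueError("one mask weight is required per channel")
    return [[m * v for v in channel] for channel, m in zip(feature_maps, mask)]


def kept_channels(mask, threshold=1e-2):
    """Indices of channels whose mask magnitude exceeds the (assumed) threshold."""
    return [i for i, m in enumerate(mask) if abs(m) > threshold]


# Three channels with two activations each, and one mask weight per channel.
features = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
mask = [1.0, 0.0, 0.5]

scaled = apply_channel_mask(features, mask)
print(scaled)               # [[1.0, 2.0], [0.0, 0.0], [2.5, 3.0]]
print(kept_channels(mask))  # [0, 2]
```

In a real training loop the mask would be a learnable tensor updated by gradient descent; channels whose mask ends up near zero contribute nothing to the output and can be removed to obtain the light-weight generator.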

If you have any problems, feel free to contact the first authors (shaojieli@stu.xmu.edu.cn).

The code has been tested using PyTorch 1.5.1 and CUDA 10.2 on Ubuntu 18.04.

Install the dependencies with the following command:

pip install -r requirements.txt

- Download the CycleGAN dataset (e.g., horse2zebra):

bash datasets/download_cyclegan_dataset.sh horse2zebra

- Download our pre-prepared real statistics for computing FID, then copy them to the root directory of the dataset.

| Task | Download |
|---|---|
| horse2zebra | Link |
| summer2winter | Link |

- Train the model using our differentiable masks (e.g., horse2zebra):

bash scripts/cyclegan/horse2zebra/train.sh

- Fine-tune the searched light-weight models with co-Attention distillation:

bash scripts/cyclegan/horse2zebra/finetune.sh

- Download the Pix2Pix dataset (e.g., edges2shoes):

bash datasets/download_pix2pix_dataset.sh edges2shoes-r

- Download our pre-prepared real statistics for computing FID, or the pre-trained DRN-D-105 model for computing mIOU, then copy them to the root directory of the dataset.

| Task | Download |
|---|---|
| edges2shoes | Link |
| cityscapes | Link |

- Train the model using our differentiable masks (e.g., edges2shoes):

bash scripts/pix2pix/edges2shoes/train.sh

- Fine-tune the searched light-weight models with co-Attention distillation:

bash scripts/pix2pix/edges2shoes/finetune.sh
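For readers unfamiliar with attention-based distillation, the sketch below shows one common formulation in plain Python: each feature tensor is collapsed into a spatial attention map (sum of squared activations over channels, then L2-normalized), and the student is penalized for deviating from the teacher's map. This is an assumption made for illustration; the co-Attention loss used by `finetune.sh` may be defined differently, and the function names here are hypothetical.

```python
import math

# Hypothetical illustration of attention-map distillation; not this repo's loss.

def attention_map(feature_maps):
    """Collapse C channels into one spatial map: sum of squares, L2-normalized."""
    positions = len(feature_maps[0])
    raw = [sum(ch[i] ** 2 for ch in feature_maps) for i in range(positions)]
    norm = math.sqrt(sum(v * v for v in raw)) or 1.0  # guard against all-zero maps
    return [v / norm for v in raw]


def attention_distill_loss(teacher_feats, student_feats):
    """Mean squared difference between teacher and student attention maps."""
    t = attention_map(teacher_feats)
    s = attention_map(student_feats)
    if len(t) != len(s):
        raise ValueError("teacher and student must share the spatial size")
    return sum((a - b) ** 2 for a, b in zip(t, s)) / len(t)


# Teacher has 3 channels, the pruned student only 2; both cover 2 spatial positions.
teacher = [[1.0, 2.0], [2.0, 0.0], [0.0, 1.0]]
student = [[3.0, 0.5], [1.0, 0.5]]

print(attention_distill_loss(teacher, teacher))  # 0.0
print(attention_distill_loss(teacher, student) > 0.0)  # True
```

Because the channel dimension is summed out, the teacher and the light-weight student can have different channel counts, which is what makes this kind of loss convenient after pruning.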

We provide the compressed models from our experiments.

| Model | Task | MACs (Compress Rate) | Parameters (Compress Rate) | FID/mIOU | Download |
|---|---|---|---|---|---|
| CycleGAN | horse2zebra | 3.97G (14.3×) | 0.42M (26.9×) | FID:62.41 | Link |
| CycleGAN* | horse2zebra | 2.41G (23.6×) | 0.28M (40.4×) | FID:62.96 | Link |
| CycleGAN | zebra2horse | 3.50G (16.2×) | 0.30M (37.7×) | FID:139.3 | Link |
| CycleGAN | summer2winter | 3.18G (17.9×) | 0.24M (47.1×) | FID:78.24 | Link |
| CycleGAN | winter2summer | 4.29G (13.2×) | 0.45M (25.1×) | FID:70.97 | Link |
| Pix2Pix | edges2shoes | 2.99G (6.22×) | 2.13M (25.5×) | FID:46.95 | Link |
| Pix2Pix* | edges2shoes | 4.30G (4.32×) | 0.54M (100.7×) | FID:24.08 | Link |
| Pix2Pix | cityscapes | 3.96G (4.70×) | 1.73M (31.4×) | mIOU:40.53 | Link |
| Pix2Pix* | cityscapes | 4.39G (4.24×) | 0.55M (98.9×) | mIOU:41.47 | Link |

\* indicates that a generator with separable convolutions is adopted.
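As a quick sanity check on how to read the table, the snippet below recovers the implied cost of the uncompressed model from a compressed value and its rate. It assumes that "Compress Rate" means original cost divided by compressed cost, which the table does not state explicitly; the helper name is hypothetical.

```python
# Assumption: compress rate = original cost / compressed cost.

def original_cost(compressed, rate):
    """Implied cost of the uncompressed model, in the same unit as `compressed`."""
    return compressed * rate


rows = [
    ("CycleGAN horse2zebra MACs (G)", 3.97, 14.3),
    ("CycleGAN horse2zebra params (M)", 0.42, 26.9),
    ("Pix2Pix edges2shoes MACs (G)", 2.99, 6.22),
]

for name, compressed, rate in rows:
    print(f"{name}: about {original_cost(compressed, rate):.1f}")
# CycleGAN horse2zebra MACs (G): about 56.8
# CycleGAN horse2zebra params (M): about 11.3
# Pix2Pix edges2shoes MACs (G): about 18.6
```

The recovered values are approximate because the rates in the table are rounded.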

You can use the following command to test our compressed models:

python test.py --dataroot ./database/horse2zebra --model cyclegan --load_path ./result/horse2zebra.pth

Our code is developed based on pytorch-CycleGAN-and-pix2pix and GAN Compression.