Jiachen Li, Vidit Goel, Marianna Ohanyan, Shant Navasardyan, Yunchao Wei, Humphrey Shi

Aug 25, 2022: Code is released along with the project page and arXiv version.

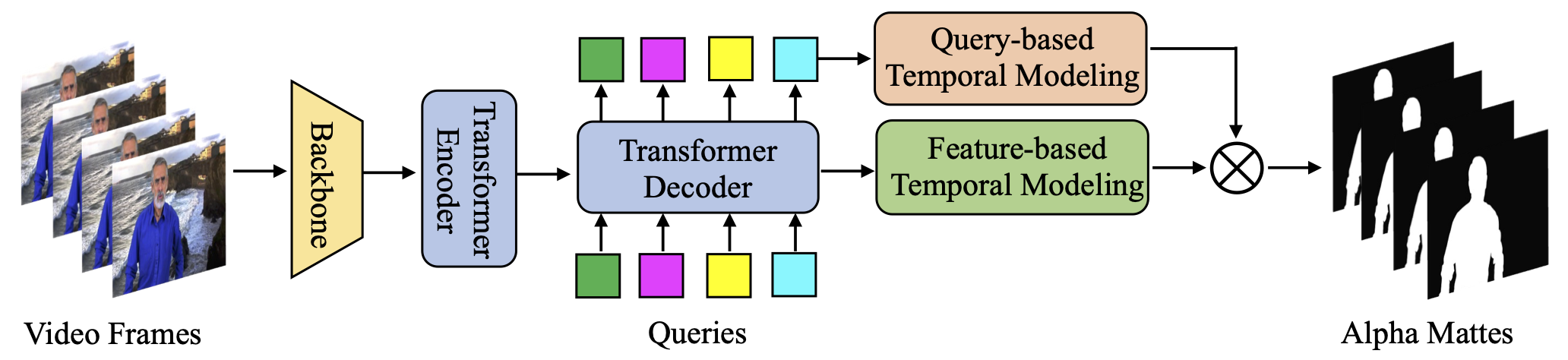

Video matting aims to predict the alpha matte of each frame in a given input video sequence. In the past few years, solutions to video matting have been dominated by deep convolutional neural networks (CNNs), which have become the de facto standard in academia and industry. However, CNN-based architectures have an inbuilt inductive bias toward locality and do not capture the global characteristics of an image. They also lack long-range temporal modeling, given the computational cost of handling feature maps from multiple frames. In this paper, we propose VMFormer: a transformer-based end-to-end method for video matting. Given a video input sequence, it predicts the alpha matte of each frame from learnable queries. Specifically, it leverages self-attention layers to build global integration of feature sequences with short-range temporal modeling on successive frames. We further apply queries to learn global representations through cross-attention in the transformer decoder, with long-range temporal modeling upon all queries. In the prediction stage, both the queries and the corresponding feature maps are used to make the final alpha matte prediction. Experiments show that VMFormer outperforms previous CNN-based video matting methods on the composited benchmarks. To the best of our knowledge, it is the first end-to-end video matting solution built upon a full vision transformer with predictions on the learnable queries.
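For readers new to matting, the alpha matte is the per-pixel blending weight in the standard compositing equation `I = alpha * F + (1 - alpha) * B`, which is also how composited test frames are typically built from a foreground and a background. The following is a minimal NumPy sketch of that equation only; the function name `composite`, the array shapes, and the toy values are illustrative assumptions and are not taken from this repository.

```python
import numpy as np

def composite(fg, bg, alpha):
    """Blend foreground over background: I = alpha * F + (1 - alpha) * B.

    fg, bg: (T, H, W, 3) float arrays in [0, 1] (assumed layout).
    alpha:  (T, H, W) float array in [0, 1].
    """
    a = alpha[..., None]  # add a channel axis so alpha broadcasts over RGB
    return a * fg + (1.0 - a) * bg

# Hypothetical 2-frame clip of 4x4 pixels: white foreground, black background.
fg = np.ones((2, 4, 4, 3))
bg = np.zeros((2, 4, 4, 3))
alpha = np.full((2, 4, 4), 0.25)
frames = composite(fg, bg, alpha)
```

A matting model solves the inverse problem: given only the composited frames, recover `alpha`.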

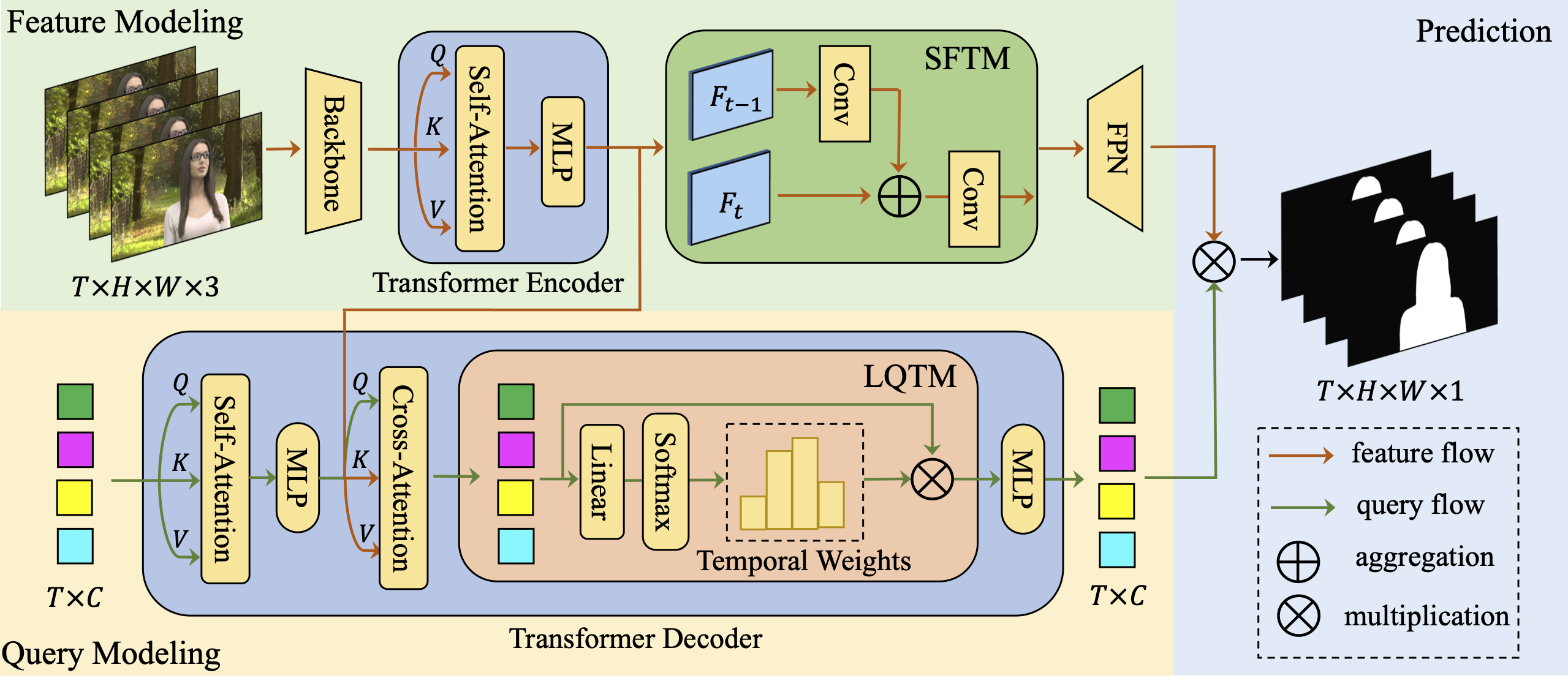

VMFormer contains two separate paths for modeling features and queries: a) The feature modeling path contains a CNN-based backbone network that extracts feature pyramids and a transformer encoder that integrates feature sequences globally with short-range feature-based temporal modeling (SFTM). b) The query modeling path has a transformer decoder in which queries learn global representations of feature sequences, and long-range query-based temporal modeling (LQTM) is built upon all queries. The final alpha matte predictions are based on matrix multiplication between queries and feature maps. LayerNorm, residual connections and repeated blocks are omitted for simplicity.
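The final prediction step described above, a matrix multiplication between queries and feature maps, can be sketched as follows. This is a hedged NumPy illustration rather than the repository's PyTorch code: it assumes one query vector per frame, a shared channel dimension `C`, and a sigmoid to map the result into `[0, 1]`. The name `predict_alpha` and all shapes are hypothetical.

```python
import numpy as np

def predict_alpha(queries, features):
    """Dot each frame's query with every pixel's feature vector.

    queries:  (T, C)        one query per frame (assumption)
    features: (T, C, H, W)  per-frame feature maps (assumption)
    returns:  (T, H, W)     alpha mattes in (0, 1)
    """
    # For each frame t: (C,) @ (C, H*W) -> (H*W,), written as one einsum.
    logits = np.einsum("tc,tchw->thw", queries, features)
    # Sigmoid squashing is an assumption made for this sketch.
    return 1.0 / (1.0 + np.exp(-logits))

rng = np.random.default_rng(0)
T, C, H, W = 3, 8, 4, 6
queries = rng.standard_normal((T, C))
features = rng.standard_normal((T, C, H, W))
alpha = predict_alpha(queries, features)
```

The point of the design is that a query acts as a dynamic, per-frame linear classifier over pixel features, so the decoder can condition the matte on global context without a separate convolutional prediction head.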

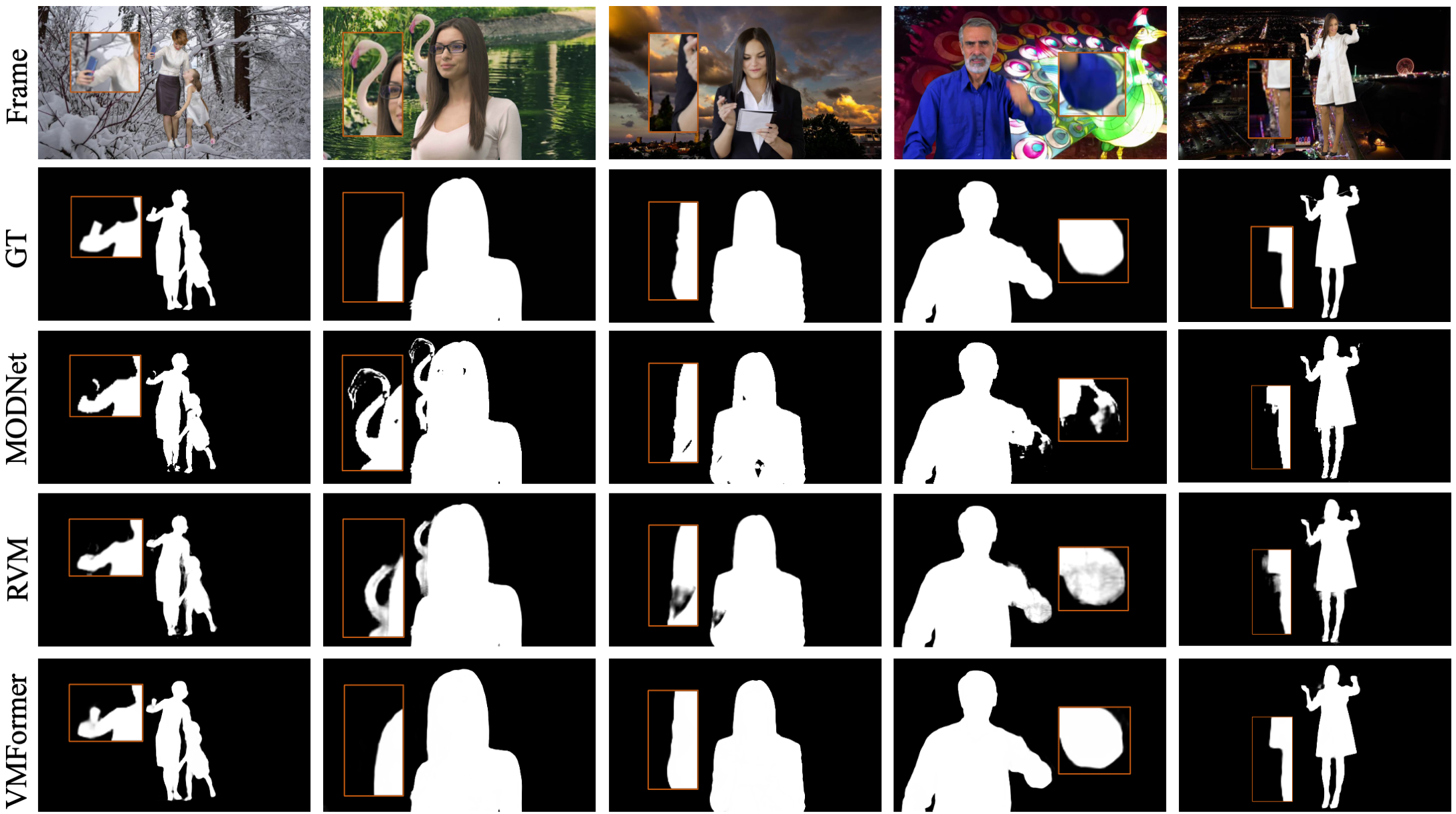

Visualization of alpha matte predictions from MODNet, RVM and VMFormer on challenging frames from the composited test set. VMFormer is better able to distinguish ambiguous foreground from background regions, as shown in the magnified image patches.

See Installation Instructions.

See Getting Started.

| Model | MAD | MSE | Grad | Conn |
|---|---|---|---|---|
| 512x288: VMFormer [google drive] | 4.91 | 0.55 | 0.40 | 0.25 |
| 1920x1080: VMFormer [google drive] | 4.81 | 0.78 | 4.90 | 3.34 |
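For reference, MAD (mean absolute difference) and MSE (mean squared error) compare a predicted alpha matte against the ground truth, pixel by pixel. The sketch below is an illustration and not the evaluation script used for the table. It assumes alpha values in `[0, 1]` and a scale factor of `1e3`, which matting benchmarks commonly apply; the table above does not state its scaling. Grad and Conn are more involved and are not shown.

```python
import numpy as np

def mad(pred, true, scale=1e3):
    """Mean absolute difference between two alpha mattes, times `scale`."""
    return float(np.mean(np.abs(pred - true)) * scale)

def mse(pred, true, scale=1e3):
    """Mean squared error between two alpha mattes, times `scale`."""
    return float(np.mean((pred - true) ** 2) * scale)

# Toy example: every predicted pixel is off by 0.1 from the ground truth.
true = np.zeros((2, 4, 4))
pred = np.full((2, 4, 4), 0.1)
toy_mad = mad(pred, true)
toy_mse = mse(pred, true)
```

Lower is better for both metrics.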

- PyTorch code release

- Video inference support

- Huggingface demo

- Colab demo

    @article{li2022vmformer,
      title={VMFormer: End-to-End Video Matting with Transformer},
      author={Jiachen Li and Vidit Goel and Marianna Ohanyan and Shant Navasardyan and Yunchao Wei and Humphrey Shi},
      journal={arXiv preprint},
      year={2022},
    }

This repo is based on Deformable DETR, SeqFormer and RVM. Thanks to their authors for open-sourcing their work.