Implementation of SCGN from the paper "Deep View Synthesis via Self-Consistent Generative Network" [arxiv].

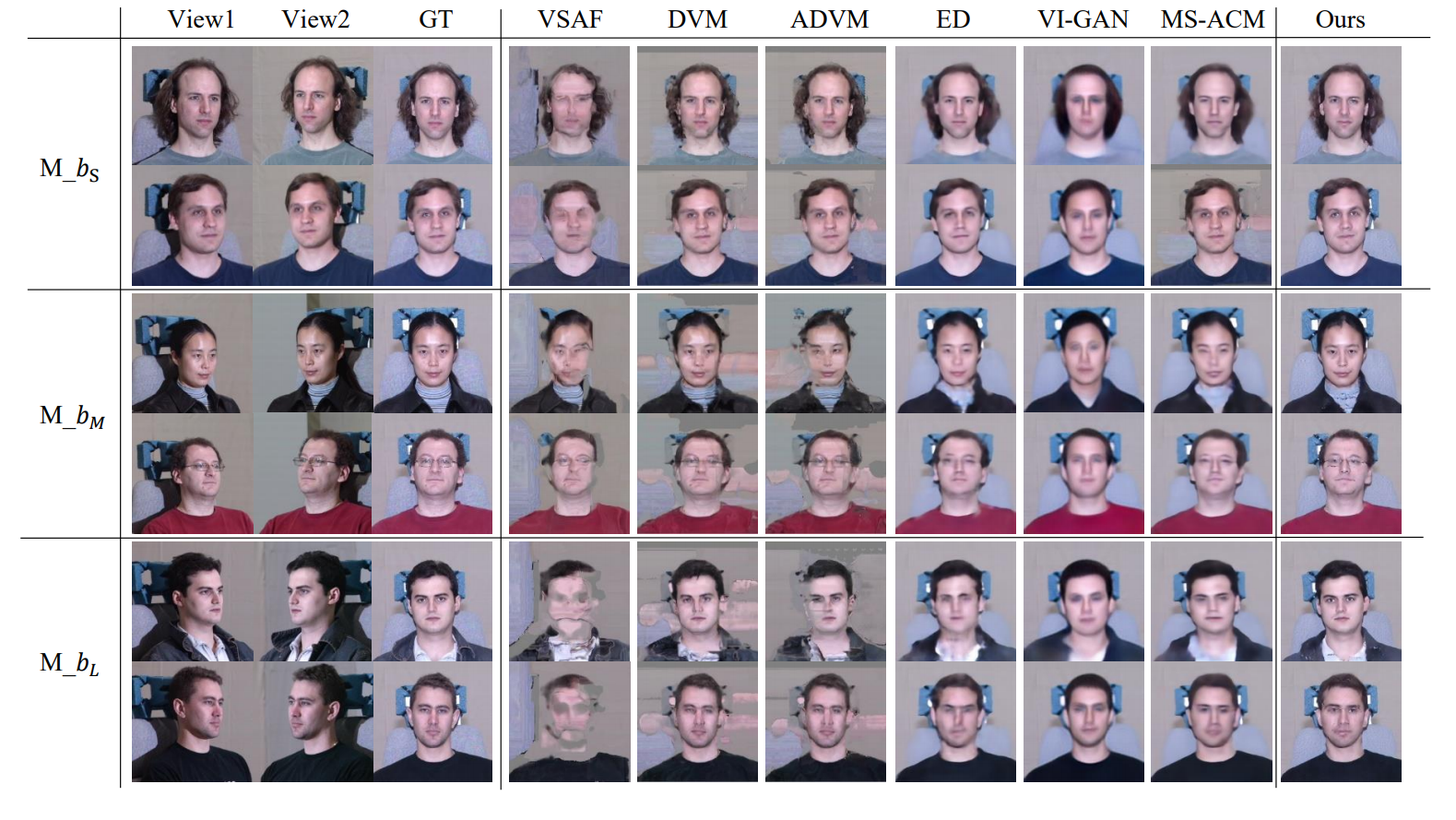

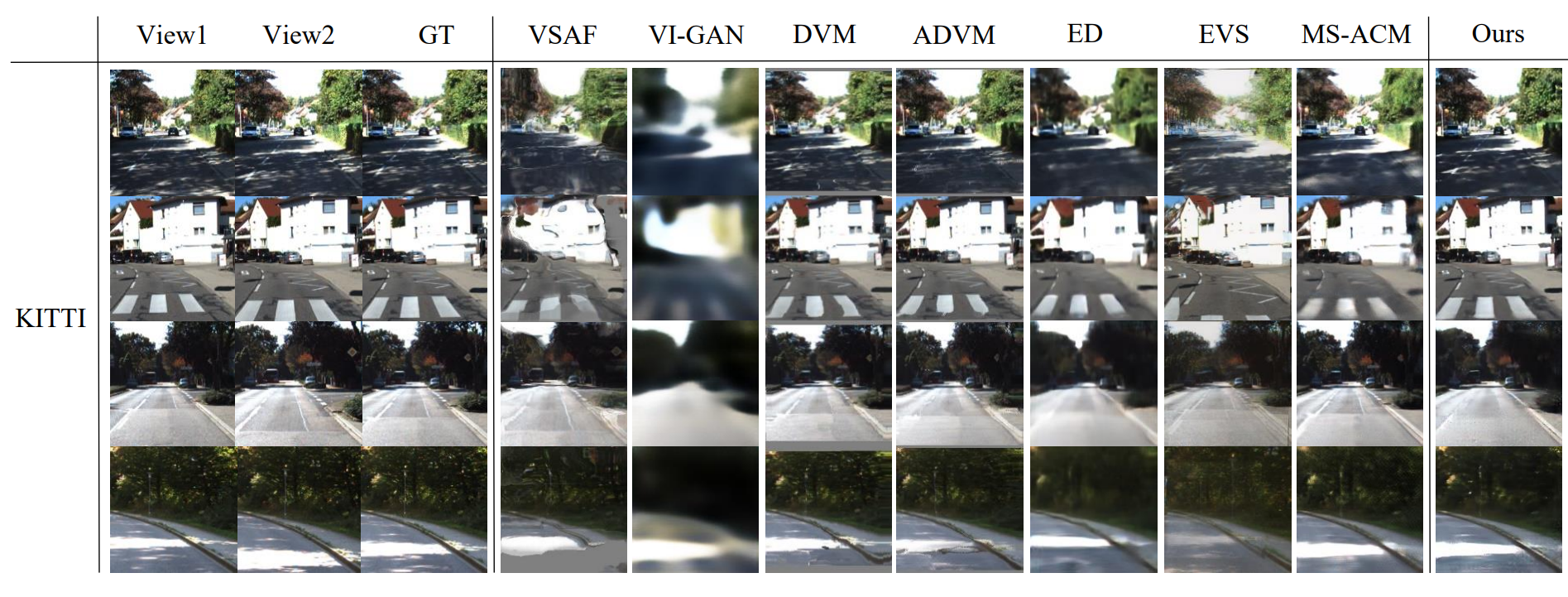

We propose a novel end-to-end deep generative model, the self-consistent generative network (SCGN), which synthesizes novel views from the given input views using image content alone.

- Python == 3.x

- OpenCV-Python

- TensorFlow >= 1.12

- Platform: Linux

git clone https://github.com/zhuomanliu/SCGN.git

cd SCGN/

- Download the Multi-PIE dataset from here.

- Following the Content page, we collect images across varied poses (with 05_1 as the target pose) under the highest illumination (i.e., the 7th illumination).

- Each original image is center-cropped and resized to 224x224.
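The center-crop and resize step can be sketched as follows. This is a minimal illustration, not the repository's preprocessing code: it assumes the crop is the largest centered square, and the helper names `center_crop_box` and `center_crop_and_resize` are hypothetical. The sample image sizes in the last two lines are illustrative only.

```python
def center_crop_box(height, width):
    """Return (top, left, side) of the largest centered square in an image.

    Assumption: the crop is a centered square; the repository may crop differently.
    """
    side = min(height, width)
    top = (height - side) // 2
    left = (width - side) // 2
    return top, left, side


def center_crop_and_resize(image, size=224):
    """Center-crop an H x W x C array to a square, then resize to size x size."""
    import cv2  # OpenCV-Python, listed in the requirements

    top, left, side = center_crop_box(image.shape[0], image.shape[1])
    cropped = image[top:top + side, left:left + side]
    # INTER_AREA is a common choice when shrinking an image.
    return cv2.resize(cropped, (size, size), interpolation=cv2.INTER_AREA)


# Crop geometry for two illustrative image sizes (height, width).
landscape_box = center_crop_box(480, 640)
wide_box = center_crop_box(370, 1226)
```

With an image loaded through `cv2.imread`, calling `center_crop_and_resize(image)` would return a 224x224 array that can be saved with `cv2.imwrite`.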

- Download the KITTI odometry data (color) from here.

- Collect the first 11 sequences from /dataset/sequences/${seq_id}/image_2/, where seq_id ranges from 00 to 10, and place them in the folder ./datasets/kitti/data. As with Multi-PIE, each raw image is center-cropped and resized to 224x224.

- Run ./datasets/kitti/gen_list.py to perform the train-test split and random sampling; it generates train.csv and test.csv in ./datasets/kitti/split.

- Alternatively, we also provide the prepared KITTI dataset. [Google Drive] [Baidu Cloud, pwd: 57gq]
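For readers preparing KITTI manually, the idea behind a sequence-level train-test split with random sampling can be sketched as below. This is not the logic of `gen_list.py`, whose details are not described here: the held-out sequence IDs, the sample count, the seed, and all function names are hypothetical placeholders chosen for illustration.

```python
import csv
import random


def split_sequences(seq_ids, test_ids):
    """Partition sequence IDs into train and test lists by membership."""
    train = [s for s in seq_ids if s not in test_ids]
    test = [s for s in seq_ids if s in test_ids]
    return train, test


def sample_frames(frame_names, count, seed=0):
    """Draw a reproducible random subset of frame names, returned in sorted order."""
    rng = random.Random(seed)
    count = min(count, len(frame_names))
    return sorted(rng.sample(frame_names, count))


def write_list(path, rows):
    """Write rows (lists of strings) to a CSV file such as train.csv or test.csv."""
    with open(path, "w", newline="") as f:
        csv.writer(f).writerows(rows)


# Sequence IDs "00" through "10", matching the 11 sequences collected above.
seq_ids = [f"{i:02d}" for i in range(11)]

# Hypothetical choice of held-out sequences; the real split may differ.
train_ids, test_ids = split_sequences(seq_ids, test_ids={"09", "10"})

# Hypothetical frame names for one sequence, sampled reproducibly.
frames = [f"{i:06d}.png" for i in range(100)]
picked = sample_frames(frames, count=5, seed=0)
```

Fixing the seed keeps the sampled lists identical across runs, which makes results easier to compare.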

- Download the pre-trained models [Multi-PIE, KITTI] and unzip them into the folder ./ckpts for testing.

- Start training or testing as follows:

# train

./train.sh 0 multipie run-multipie # for Multi-PIE

./train.sh 0 kitti run-kitti # for KITTI

# test

./test.sh 0 multipie run-multipie res-multipie # for Multi-PIE

./test.sh 0 kitti run-kitti res-kitti # for KITTI

- We have provided some samples in ./demo/multipie and ./demo/kitti for inference.

- Start inference as follows:

# for Multi-PIE

./demo.sh 0 multipie run-multipie ./demo/multipie 15_input_l.png 15_input_r.png 15_result

./demo.sh 0 multipie run-multipie ./demo/multipie 30_input_l.png 30_input_r.png 30_result

./demo.sh 0 multipie run-multipie ./demo/multipie 45_input_l.png 45_input_r.png 45_result

# for KITTI

./demo.sh 0 kitti run-kitti ./demo/kitti 09_input_l.png 09_input_r.png 09_result

./demo.sh 0 kitti run-kitti ./demo/kitti 10_input_l.png 10_input_r.png 10_result

Please cite the following paper if this repository helps your research:

@article{liu2021deep,

title={Deep View Synthesis via Self-Consistent Generative Network},

author={Liu, Zhuoman and Jia, Wei and Yang, Ming and Luo, Peiyao and Guo, Yong and Tan, Mingkui},

journal={IEEE Transactions on Multimedia},

year={2021},

publisher={IEEE}

}