SAP HANA Interactive Education or SHINE is a demo application that includes the following features:

HDI Features:

- Table

- CDS Views

- Sequence

- Calculation Views

- Associations

- Table Functions

- Synonyms

- Procedures

- Cross Container Access

- Multiple Containers

- Spatial Features

- Local Time Data Generation

- Comments for Tables

- Index

- Structured Privilege

- Usage of Table Functions in CDS views

XSA Features:

- User Authentication and Authorization (UAA)

- App Router

- OData V2 Services (Node.js)

- Node.js

- Authorization (Roles)

- OData Exits

- Job Scheduler Token-Based Authentication

- OData Batch

SHINE follows the XS Advanced Programming Model (XSA) and uses SAP HANA Service for the database. This article describes the steps to migrate this demo application to the Cloud Application Programming Model (CAP) with SAP HANA Cloud as the database.

| Source XSA Artifact | Target CAP Artifact | Notes | Available Tools (for automation) | More Information |
|---|---|---|---|---|
| HDBCDS entity | CAP CDS | Some code changes are required. Entities have to be renamed in the HDI container; for that we use the toRename function of the CDS compiler. | | |
| Native HDB artifacts | Native HDB artifacts | | No | |
| OData service | OData service | | No | |
| XSJS (compatibility) | Nothing | XSJS is not compatible with CAP, so it is recommended to rewrite the code in CAP. | No | |
| JavaScript/Node.js | Node.js | 1:1 XSA-CAP | No | CAP NodeJS |
| Java | Java | 1:1 XSA-CAP | No | CAP Java |
| Fiori | Fiori | Supported in CAP CDS | Fiori elements are not used in SHINE, so further tests on annotation-based applications are required | CAP with Fiori |
| SAPUI5 | SAPUI5 | Supported in CAP CDS | We can reuse the same code | SAPUI5 |

- SAP HANA 2.0 (on-premise or SAP HANA Service on BTP) along with the HDI container. In the SHINE example, an SAP HANA Service instance was used.

- An SAP HANA Cloud service instance should be created.

- SAP BAS or VS Code can be used for the migration. Tools like the cf CLI and mbt have to be installed.

- The deployments can be done either by using the `@sap/hdi-deploy` module or by using the MTA.

- The CDS development kit should be installed with the command `npm i -g @sap/cds-dk` (Note: Make sure the latest cds version is installed).

We have successfully migrated the SHINE sample application and content in order to identify the challenges and issues that customers have encountered when migrating from SAP HANA 2.0 to SAP HANA Cloud. The path followed for this migration involves the steps below:

- Preparing XS Advanced Source and CAP Target Applications

- Rename the HANA Artifacts in the Source SAP HANA 2.0/XSA HDI Container.

- CAP Application Deployment with hdbcds and hdbtable format to the Source SAP HANA 2.0/XSA HDI Container.

- Migration of SAP HANA 2.0 HDI Container using Self-Service Migration for SAP HANA Cloud and Bind the CAP application to the migrated container.

- Migration of SRV and UI layers.

In this Step, we will prepare the XS Advanced Source and CAP Target Applications.

The first step of the migration is to clone and deploy the Source Application.

- Open a command line terminal and clone the SHINE application.

  ```sh
  git clone https://github.com/SAP-samples/hana-shine-xsa.git -b shine-cf
  ```

- Build the application.

  ```sh
  mbt build -p=cf
  ```

- Once the build is complete, an mta_archives folder will be created in the root folder. Navigate inside the mta_archives folder.

- Deploy the source application to the SAP Business Technology Platform (SAP BTP) using the command below.

  ```sh
  cf deploy <Generated_MTAR_Name>.mtar
  ```

The next step of the migration is to create a Target CAP application.

In this step, we will create a Target CAP application and copy the database of the XSA application and modify it to support the CAP format.

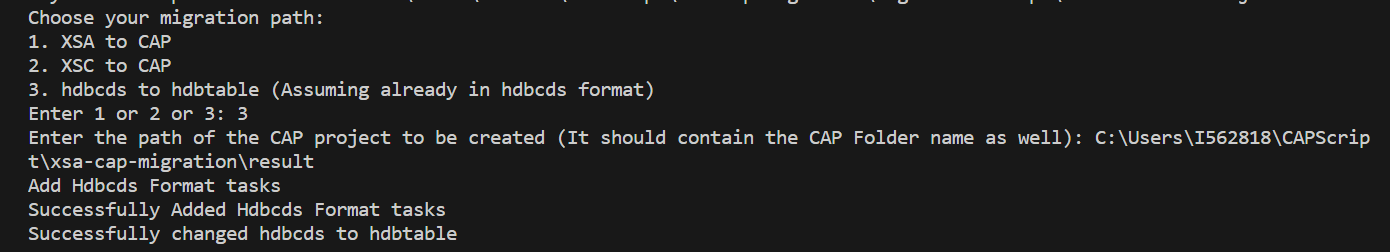

Note: All the steps below can be done automatically by running our migration-script. This will also rename the HANA database artifacts to the CAP CDS supported format. As this is an example script, adjust the "calcview.xsl" file with the attributes that are required by the application and build before running the script.

- Open a command line window and navigate to any folder with the command `cd <folder>`.

- Create a folder for the CAP project and navigate inside it.

  ```sh
  mkdir <CAP Project folder>
  cd <CAP Project folder>
  ```

- Create an initial CAP project by executing the command `cds init` inside the root directory.

- Copy all the files from the database folder of the XSA/SAP HANA 2.0 application (e.g. core-db) to the db folder of the newly created CAP project.

  Note: If the source project contains multiple containers with data in multiple folders, the CAP application can be modified to include multiple folders like db, db1 and so on, and the data from the source folders can be copied to these folders.

- Change the extension of the hdbcds files to cds.

- Modify the notation in view definitions from `""` to `![]`. This delimiter makes the processing in CAP CDS more reliable.

- Change the HANA CDS data types to CAP CDS data types.

  | HANA CDS | CAP CDS |
  |---|---|
  | LocalDate | Date |
  | LocalTime | Time |
  | UTCDateTime | DateTime |
  | UTCTimestamp | Timestamp |
  | BinaryFloat | Double |

- Replace `@OData.publish:true` with `@cds.autoexpose`.

- `@Comment` should be changed to doc comments.

- Change the artifact table type to type or remove them, as CAP CDS doesn't generate table types anymore. We will create a .hdbtabletype file for each table type definition in the later steps.

- Temporary entities are not supported in CAP. One way to reuse the existing table is to use the `@cds.persistence.exists` annotation for the entity in conjunction with `.hdbdropcreatetable`. In the migration-script, we just convert these entities to regular entities.

- Move all the CDS files from their respective folders (e.g. src/) to the db folder of the CAP project. In the XSA application all the artifacts reside inside the src folder, but as per CAP the cds files shouldn't be in the src folder, because only the gen folder will push the data. If the cds files stay inside the src folder, the deployment will fail because of where the "cds" plugin is, so they have to be moved to the db folder for the deployment to work correctly.

- Remove full-text indexes, as they are no longer supported.

- Convert Series entities to regular entities.

- Add the technical configurations in the `@sql.append` annotation above the entity, as in the example below.

  ````cds
  @sql.append: ```
  technical configuration {
    partition by HASH (PARTNERID) Partitions GET_NUM_SERVERS();
  }
  ```
  Entity BusinessPartner {}
  ````

- Add the privileges in the `@sql.append` annotation above the view.

  ```cds
  @sql.append:'with structured privilege check'
  define view ItemView as SELECT from Item {};
  ```

- Add the inline `@sql.append` annotation.

  ```cds
  entity MyEntity1 {
    key ID : Integer;
    a : Integer;
    b : String;
    c : Integer @sql.append: `generated always as a+b`;
  };
  ```

- Enhance the project configuration for SAP HANA Cloud by running the command `cds add hana`.

- Install the npm node modules in the CAP project by running the command `npm install`.
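The simpler text-level conversions from the list above (file extension, data types, `@OData.publish`) could be scripted roughly as follows. This is a minimal sketch, not the logic of the migration-script: the helper names `toCapFileName` and `convertHdbcdsSource` and the sample entity are hypothetical, and the regular expressions only cover straightforward declarations.

```javascript
// Sketch only: regex-based rewrites for the simple cases described above.
// A real project may need a proper parser for edge cases.

// HANA CDS -> CAP CDS type names, taken from the table in this guide.
const TYPE_MAP = {
  LocalDate: "Date",
  LocalTime: "Time",
  UTCDateTime: "DateTime",
  UTCTimestamp: "Timestamp",
  BinaryFloat: "Double",
};

// Hypothetical helper: "foo.hdbcds" -> "foo.cds".
function toCapFileName(fileName) {
  return fileName.replace(/\.hdbcds$/, ".cds");
}

// Hypothetical helper: apply the type and annotation replacements to one file's text.
function convertHdbcdsSource(source) {
  let result = source;
  for (const [hanaType, capType] of Object.entries(TYPE_MAP)) {
    // Only touch a type name that follows a ":" so element names are left alone.
    const pattern = new RegExp(`(:\\s*)${hanaType}\\b`, "g");
    result = result.replace(pattern, `$1${capType}`);
  }
  // @OData.publish: true -> @cds.autoexpose
  return result.replace(/@OData\.publish\s*:\s*true/g, "@cds.autoexpose");
}

// Hypothetical sample input, including an element that is merely *named* LocalDate.
const sample = `@OData.publish: true
entity Orders {
  key ID    : Integer;
  CreatedOn : LocalDate;
  ChangedAt : UTCTimestamp;
  LocalDate : String(10);
};`;

console.log(toCapFileName("PurchaseOrder.hdbcds"));
console.log(convertHdbcdsSource(sample));
```

The remaining items in the list (table types, temporary entities, technical configurations) depend on the structure of each entity and are probably better handled by hand or by the migration-script.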

In this step, we will manually remove or modify the unsupported database features in the CAP CDS files. Some of them are listed below.

- Convert calculated fields to regular fields and use the `@sql.append` annotation above the field to add the calculation, as in the example below. Note: During hdbtable deployment we will convert this to a stored calculated element.

  ```cds
  Entity Employees {
    key ID: Integer;
    NAME {
      FIRST: String;
      LAST: String;
    };
    @sql.append: `generated always as NAME_FIRST || '' || NAME_LAST`
    FULLNAME: String(100);
  };
  ```

- Based on the advanced OData annotations, convert them to the CAP structure.

- We can use `@cds.persistence.udf` for user-defined functions in the calculation view.

- We can use `@cds.persistence.exists` and `@cds.persistence.calcview` to expose the calculation views. E.g.: datamodel.cds

- Modify the `using` statements in the cds files to point to the correct definitions.

Note: For the SHINE example, you can find the modified CDS files in the hdbcds examples folder.

As CAP expects unquoted identifiers with `.` replaced by `_`, we have to perform a rename operation that renames all the existing source artifacts to the format supported by SAP HANA Cloud and CAP.
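To make the naming rule concrete, here is a small sketch of the mapping. It assumes that a plain name is obtained by replacing `.` (and the `::` namespace separator) with `_` and upper-casing the result; the helper `toPlainName` and the namespaced sample name are hypothetical, and the cds-compiler remains the authority on the actual names.

```javascript
// Assumption: plain names replace "." and "::" with "_" and are upper-cased.
// Hypothetical helper for illustration; not part of any SAP package.
function toPlainName(hdbcdsName) {
  return hdbcdsName.replace(/::|\./g, "_").toUpperCase();
}

console.log(toPlainName("Employees"));           // EMPLOYEES
console.log(toPlainName("NAME.FIRST"));          // NAME_FIRST
console.log(toPlainName("sap.demo::PO.Header")); // SAP_DEMO_PO_HEADER
```

A helper like this is only useful for checking what to expect; the rename statements themselves should still come from the compiler.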

Note: This step can be executed only after the changes recommended in Step-2 are made and the command `cds build --production`, executed from the root folder of the CAP project, doesn't throw any errors.

The Rename procedure can be created using the toRename command of the cds compiler.

- Install the SAP cds-compiler globally with the command `npm i -g @sap/cds-compiler`.

- Create a folder outside the CAP application and copy all the cds files there (Note: Views can be ignored as they will be renamed during deployment).

- Call the toRename function to generate the hdbprocedure with the command below. This will create a RENAME_HDBCDS_TO_PLAIN.hdbprocedure file.

  ```sh
  cdsc toRename (folder name)/*.cds > (folder name)/RENAME_HDBCDS_TO_PLAIN.hdbprocedure
  ```

- Update the calculated element field in the procedure like this:

  ```sql
  PROCEDURE RENAME_HDBCDS_TO_PLAIN LANGUAGE SQLSCRIPT AS
  BEGIN
    --
    -- Employees
    EXEC 'RENAME TABLE "Employees" TO "EMPLOYEES"';
    EXEC 'RENAME COLUMN "EMPLOYEES"."FULLNAME" TO "__FULLNAME"';
    EXEC 'ALTER TABLE "EMPLOYEES" ADD ("FULLNAME" NVARCHAR(100))';
    EXEC 'UPDATE "EMPLOYEES" SET "FULLNAME" = "__FULLNAME"';
    EXEC 'ALTER TABLE "EMPLOYEES" DROP ("__FULLNAME")';
    EXEC 'RENAME COLUMN "EMPLOYEES"."NAME.FIRST" TO "NAME_FIRST"';
    EXEC 'RENAME COLUMN "EMPLOYEES"."NAME.LAST" TO "NAME_LAST"';
  END;
  ```

Note: The RENAME_HDBCDS_TO_PLAIN.hdbprocedure file contains the code of the final Rename procedure for the SHINE example.
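The four statements added for `FULLNAME` in the procedure above follow a repeatable pattern: move the calculated column aside, add a regular column with the same name, copy the values, and drop the old column. If several calculated elements need this treatment, the statements could be generated with a sketch like the one below. The helper `calculatedColumnSwap` is hypothetical, and the SQL type has to be supplied by hand.

```javascript
// Hypothetical helper: emits the EXEC lines that swap a calculated column
// for a regular column holding the same values (pattern from the procedure above).
function calculatedColumnSwap(table, column, sqlType) {
  const tmp = `__${column}`;
  return [
    `RENAME COLUMN "${table}"."${column}" TO "${tmp}"`,
    `ALTER TABLE "${table}" ADD ("${column}" ${sqlType})`,
    `UPDATE "${table}" SET "${column}" = "${tmp}"`,
    `ALTER TABLE "${table}" DROP ("${tmp}")`,
  ].map((sql) => `EXEC '${sql}';`);
}

// Reproduces the FULLNAME lines of the SHINE example.
console.log(calculatedColumnSwap("EMPLOYEES", "FULLNAME", "NVARCHAR(100)").join("\n"));
```

The output is meant to be pasted into the generated procedure after the `RENAME TABLE` statement of the corresponding table, since the statements reference the already renamed table.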

The default_access_role is required to alter the tables. Without this role, the rename procedure will throw an "insufficient privilege" error when called, because the basic application HDI container user does not have the ALTER permission.

Note: The first three steps will be done automatically if the migration-script is used to convert the application from XSA to CAP.

- In the XSA/SAP HANA 2.0 application, create a "defaults" folder inside the "src" folder.

- Create the file "default_access_role.hdbrole" in the defaults folder with the `ALTER` privilege added, as below.

  ```json
  {
    "role": {
      "name": "default_access_role",
      "schema_privileges": [
        {
          "privileges": [
            "SELECT",
            "INSERT",
            "UPDATE",
            "DELETE",
            "EXECUTE",
            "CREATE TEMPORARY TABLE",
            "SELECT CDS METADATA",
            "ALTER",
            "CREATE ANY"
          ]
        }
      ]
    }
  }
  ```

- Create a .hdinamespace file in the "defaults" folder with an empty namespace, as below, to ensure that the role can be named default_access_role.

  ```json
  {
    "name": "",
    "subfolder": "ignore"
  }
  ```

- Also move the RENAME_HDBCDS_TO_PLAIN.hdbprocedure file created above to the "procedures" folder.

- Deploy the rename procedure and default access role into the SAP HANA 2.0 HDI container. The deployment can be done either by using the SAP HDI Deployer or by using the MTA.

On calling the deployed RENAME_HDBCDS_TO_PLAIN procedure from the Database Explorer, the Database tables will be renamed.

- Open the Database explorer.

- Load the SAP HANA 2.0 HDI Container in the Database explorer.

- Select the deployed RENAME_HDBCDS_TO_PLAIN procedure under the Procedures folder of the container.

- Right click on the procedure and select "Generate Call Statement". This will open the Call statement in the SQL Console.

- Run the SQL query and this will rename the Database Tables and their respective columns.

Just like the database tables, the other HANA artifacts should also be renamed: the entities referenced in those artifacts no longer exist after the rename procedure has been called, so accessing them would throw an error. In the CAP application, make the changes below.

Note: All the below steps can be done automatically if the migration-script is used to convert the application from XSA to CAP.

- Delete the .hdbtabledata and .csv files.

  Note: When we migrate the HDI container, the data will be retained, so the sample data is not required. Retaining these files might lead to duplicate entry errors during deployment in case your table expects unique records.

- Create .hdbtabletype files for the table types. Just converting the table type to type will not work: during cds build, the type definition is replaced wherever it is used in the hdbtable and views, but if the table type is used in a procedure, the definition is not updated, and during deployment we get the error that the table type mentioned in the procedure is not provided by any file. When we generate the hdbtabletype file with the table type definition, the deployment works as expected. The example code is as below.

  ```sql
  TYPE "PROCEDURES_TT_ERRORS" AS TABLE (
    "HTTP_STATUS_CODE" Integer,
    "ERROR_MESSAGE" VARCHAR(5000),
    "DETAIL" NVARCHAR(100)
  );
  ```

  Note: The SAP CAP model does not support .hdbtabletype files natively, as CAP is designed to be a database-agnostic model and platform. Instead, CAP encourages developers to use CDS for defining and working with data models. So .hdbtabletype usage should be carefully considered and properly justified, as it might not integrate well with the CAP environment.

- Update the other HANA artifacts to point to the new database tables.

  Note: The migration-script will take care of renaming all of the HANA artifacts mentioned in the list. It will also handle the renaming of the .hdbrole, .hdbsynonymconfig, .hdbsynonym, .hdbsynonymtemplate, .hdbroleconfig and .hdbgrants files. For the remaining artifacts, a manual rename is required at this point to make them point to the new DB artifacts.

- If there is a .hdinamespace file in your project, update it to an empty namespace as below.

  ```json
  {
    "name": "",
    "subfolder": "ignore"
  }
  ```

  Note: If the script is used, make sure the above change is made. The script changes the id, so the namespace must be ignored; otherwise the deployment will fail.

- Make sure the casing is correct in the calculation views; anything left unmodified might lead to errors during deployment.

Note: The modified artifacts for the example SHINE Application can be accessed with the link.
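If a project contains many table types, the `.hdbtabletype` files described above could be rendered from a simple column list. The following is a sketch under the assumption that the column names and SQL types have already been collected by hand; the helper `renderTableType` is hypothetical and writes nothing to disk.

```javascript
// Hypothetical helper: renders the content of a .hdbtabletype file
// from a type name and a list of { name, type } column descriptions.
function renderTableType(typeName, columns) {
  const columnLines = columns
    .map(({ name, type }) => `  "${name}" ${type}`)
    .join(",\n");
  return `TYPE "${typeName}" AS TABLE (\n${columnLines}\n);`;
}

// Columns of the table type shown in this guide.
const ddl = renderTableType("PROCEDURES_TT_ERRORS", [
  { name: "HTTP_STATUS_CODE", type: "INTEGER" },
  { name: "ERROR_MESSAGE", type: "VARCHAR(5000)" },
  { name: "DETAIL", type: "NVARCHAR(100)" },
]);

console.log(ddl);
```

The resulting text would be saved next to the other native artifacts, with the type name matching what the procedures reference after the rename.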

Step-3: CAP Application Deployment with hdbcds and hdbtable format to the Source SAP HANA 2.0/XSA HDI Container.

To retain the data in the container, we have to first deploy the CAP Application with the hdbcds format and then with hdbtable format.

The hdbcds deployment will map the CAP entities to the existing entities in the SAP HANA 2.0 HDI container.

- In the package.json file in the root folder of the CAP application, the deploy-format should be changed to "hdbcds" and the kind to "hana", as below.

  Note: The migration script covers this part.

  ```json
  "cds": {
    "hana": {
      "deploy-format": "hdbcds"
    },
    "requires": {
      "db": {
        "kind": "hana"
      }
    }
  }
  ```

- Next, we need to remove the rename procedure and the default_access_role, as they are no longer required. Delete the RENAME_HDBCDS_TO_PLAIN.hdbprocedure from the "procedures" folder and also delete the "defaults" folder. Add the paths of these files to the undeploy.json file like this:

  ```json
  [
    "src/defaults/*",
    "src/procedures/RENAME_HDBCDS_TO_PLAIN.hdbprocedure"
  ]
  ```

- Build the CAP application by running the command `cds build --production`. This will generate the database artifacts with the hdbcds format.

- Deploy the CAP db module to the SAP HANA 2.0 HDI container. The deployment can be done either by using the SAP HDI Deployer or by using the MTA.

Note: The CAP db module of the example SHINE Application with hdbcds format can be accessed with the link
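Because the deploy format is switched more than once during the migration, it can help to change package.json programmatically instead of by hand. The sketch below works on an already parsed package.json object; the helper `withDeployFormat` and the project name are hypothetical, and reading or writing the file is left out.

```javascript
// Hypothetical helper: returns a copy of a parsed package.json with
// cds.hana["deploy-format"] and cds.requires.db.kind set.
// All other settings are preserved and the input object is not modified.
function withDeployFormat(pkg, deployFormat, kind = "hana") {
  const cds = pkg.cds ?? {};
  return {
    ...pkg,
    cds: {
      ...cds,
      hana: { ...cds.hana, "deploy-format": deployFormat },
      requires: {
        ...cds.requires,
        db: { ...(cds.requires?.db ?? {}), kind },
      },
    },
  };
}

// Hypothetical starting point, e.g. right after `cds init`.
const pkg = { name: "shine-cap", cds: { requires: { db: { kind: "sql" } } } };
const hdbcdsPhase = withDeployFormat(pkg, "hdbcds");

console.log(JSON.stringify(hdbcdsPhase.cds, null, 2));
```

The same helper could be called with `"hdbtable"` before the second deployment, so that only the deploy format differs between the two builds.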

As SAP HANA Cloud doesn't support the hdbcds format, the hdbtable deployment converts the mapped entities to hdbtables, which can then be migrated to SAP HANA Cloud.

- In the package.json file in the root folder of the CAP application, the deploy-format should be changed to "hdbtable".

  ```json
  "cds": {
    "hana": {
      "deploy-format": "hdbtable"
    },
    "requires": {
      "db": {
        "kind": "hana"
      }
    }
  }
  ```

  Note: The migration script covers this part. Once the script is running, provide the below parameters to execute the script.

- Change the calculated field to a stored calculated element, as in the example below.

  ```cds
  Entity Employees {
    key ID: Integer;
    NAME {
      FIRST: String;
      LAST: String;
    };
    FULLNAME: String(100) = (NAME.FIRST || ' ' || NAME.LAST) stored;
  };
  ```

  Note: Stored calculated elements are supported from cds version 7 onwards.

- Modify the `@sql.append` annotations above the entity to remove the technical configuration blocks. E.g.:

  ```cds
  @sql.append:'PARTITION BY HASH ( "PARTNERID" ) PARTITIONS GET_NUM_SERVERS()'
  Entity BusinessPartner {}
  ```

  Note: Technical configurations for row store: since we are converting the temporary table to a table, it will be stored as a column table by default, so the row store configuration can be removed. Technical configurations for column store: this is the default, so it can also be removed.

- Open the undeploy.json file in the db folder and update it to undeploy all the hdbcds database artifacts, so that they are replaced with .hdbtable database artifacts, like this:

  ```json
  [
    "src/gen/*.hdbcds"
  ]
  ```

- Build the CAP application by running the command `cds build --production`. This will generate the db artifacts with the hdbtable format.

- Deploy the CAP db module to the SAP HANA 2.0 HDI container. The deployment can be done either by using the SAP HDI Deployer or by using the MTA.

Note: The CAP db module of the example SHINE Application with hdbtable format can be accessed with the link
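Rewriting the `@sql.append` annotations from a technical configuration block to a plain SQL clause, as described in the list above, is mostly a matter of keeping the partition clause and dropping the wrapper. A minimal sketch, assuming the block contains at most one `partition by ...;` statement (the helper `partitionClause` is hypothetical):

```javascript
// Hypothetical helper: pulls the "partition by ..." statement out of an
// hdbcds technical configuration block and returns it as a plain SQL clause.
// Returns null when the block has no partition statement (e.g. only store settings).
function partitionClause(technicalConfig) {
  const match = /partition\s+by\s+([^;]+);/i.exec(technicalConfig);
  return match ? `PARTITION BY ${match[1].trim()}` : null;
}

const block = `technical configuration {
  partition by HASH (PARTNERID) Partitions GET_NUM_SERVERS();
}`;

const clause = partitionClause(block);
console.log(`@sql.append: '${clause}'`);
```

Blocks for which the helper returns `null` correspond to the row store and column store cases from the note, where the annotation can simply be removed.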

Step-4: Migration of SAP HANA 2.0 HDI Container using Self-Service Migration for SAP HANA Cloud and Connect the CAP application to the migrated container.

Migrate the SAP HANA 2.0 HDI container to an SAP HANA Cloud container and connect the existing CAP application to this migrated container.

Use the Self-Service Migration for SAP HANA Cloud to migrate the SAP HANA 2.0 container to an SAP HANA Cloud container. This link can be used to access the Migration Guide for the detailed flow.

Bind and deploy the existing CAP application to the migrated container.

- In the CAP application, open the package.json file in the root folder and change the kind to "hana-cloud". Note: `deploy-format` can be removed, as `hdbtable` is the default deploy format for SAP HANA Cloud.

  ```json
  "cds": {
    "requires": {
      "db": {
        "kind": "hana-cloud"
      }
    }
  }
  ```

- Bind or connect the CAP application to the SAP HANA Cloud container created by the migration service.

- Build and deploy the CAP db module to the SAP HANA Cloud container. The deployment can be done either by using the SAP HDI Deployer or by using the MTA.

Migrate the SRV and the UI layer of the XSA Application to CAP.

Migrate the srv module from xsodata and xsjs to CAP.

- For an SRV module based on an exposed xsodata service, and where the annotation `@odata.publish:true` is written, write your own services on top of the entities to expose them in the srv module. One approach to verify that the services behave as expected is test-driven development: write tests for the XSA application, then run the same tests against the deployed CAP application and verify that both behave in the same manner. E.g.: service.cds

- If your existing XSA project is running with OData V2, then make the changes in your CAP application by following the given link.

- Authentication and authorization can be migrated as per the business needs by following the CAP documentation.
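For the test-driven comparison mentioned above, responses from the XSA service and the CAP service usually cannot be compared byte for byte, because OData V2 wraps collections in `d.results` and adds `__metadata`, while OData V4 returns them under `value` with `@odata.*` annotations. A minimal sketch of a comparison helper follows; the function names and the sample payloads are hypothetical, and fetching the responses is left out.

```javascript
// Reduces an OData collection response to plain records:
// V2 shape { d: { results: [...] } } or V4 shape { value: [...] }.
// Protocol metadata is dropped and keys are sorted for a stable comparison.
function normalizeEntitySet(payload) {
  const rows = payload?.d?.results ?? payload?.value ?? [];
  return rows.map((row) => {
    const record = {};
    for (const key of Object.keys(row).sort()) {
      if (key === "__metadata" || key.startsWith("@odata.")) continue;
      record[key] = row[key];
    }
    return record;
  });
}

// True when both services returned the same records in the same order.
function sameEntitySet(xsaPayload, capPayload) {
  return (
    JSON.stringify(normalizeEntitySet(xsaPayload)) ===
    JSON.stringify(normalizeEntitySet(capPayload))
  );
}

// Hypothetical responses for the same request against both applications.
const xsaResponse = {
  d: { results: [{ __metadata: { type: "BusinessPartner" }, PARTNERID: "100000000", COMPANYNAME: "SAP" }] },
};
const capResponse = {
  "@odata.context": "$metadata#BusinessPartner",
  value: [{ COMPANYNAME: "SAP", PARTNERID: "100000000" }],
};

console.log(sameEntitySet(xsaResponse, capResponse)); // true
```

This sketch compares values as they arrive. Date, decimal and 64-bit integer values are serialized differently in V2 and V4, so a real test suite would likely need per-type normalization and an explicit `$orderby` in the requests.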

We can reuse the same code for the UI layer. Just modify the OData routes and REST endpoints to point to the exposed CAP application services and endpoints. E.g.: service.js.

Once all the changes are made, build and deploy the CAP application to the SAP BTP/SAP HANA Cloud environment using the MTA deployment descriptor. E.g.: mta.yml.

- Build the application using the command `mbt build -p=cf` from the root folder of the target CAP application.

- Once the build is complete, an mta_archives folder will be generated. Navigate inside the mta_archives folder.

- Deploy the target CAP application to the SAP BTP/SAP HANA Cloud environment using the command below.

  ```sh
  cf deploy <Generated_MTAR_Name>.mtar
  ```

- Parts of the XSJS JavaScript code can be reused in CAP, but this is not the recommended approach, as there are sync/async problems for Node.js > 16 and the xsjs module is not supported for Node.js > 14.

- There is no option to select specific containers to migrate, so all the containers in the given space will be migrated.

- CAP Documentation.

- SHINE for XSA.

- SAP Hana Cloud.

- SAP HANA Cloud Migration Guide.

- Migration Guidance for Incompatible Features.

- Compatibility with Other SAP HANA Products.

- The Self-Service Migration Tool for SAP HANA Cloud.

- Manual Migration of XSA to CAP.

- SAPUI5.

- Fiori Elements.

- Partition a table in HANA Deployment Infrastructure(HDI)

- Deploy Your Multi-Target Application (MTA)

Create an issue in this repository if you find a bug or have questions about the content.

For additional support, ask a question in SAP Community.

If you wish to contribute code, offer fixes or improvements, please send a pull request. Due to legal reasons, contributors will be asked to accept a DCO when they create the first pull request to this project. This happens in an automated fashion during the submission process. SAP uses the standard DCO text of the Linux Foundation.

Copyright (c) 2023 SAP SE or an SAP affiliate company. All rights reserved. This project is licensed under the Apache Software License, version 2.0 except as noted otherwise in the LICENSE file.