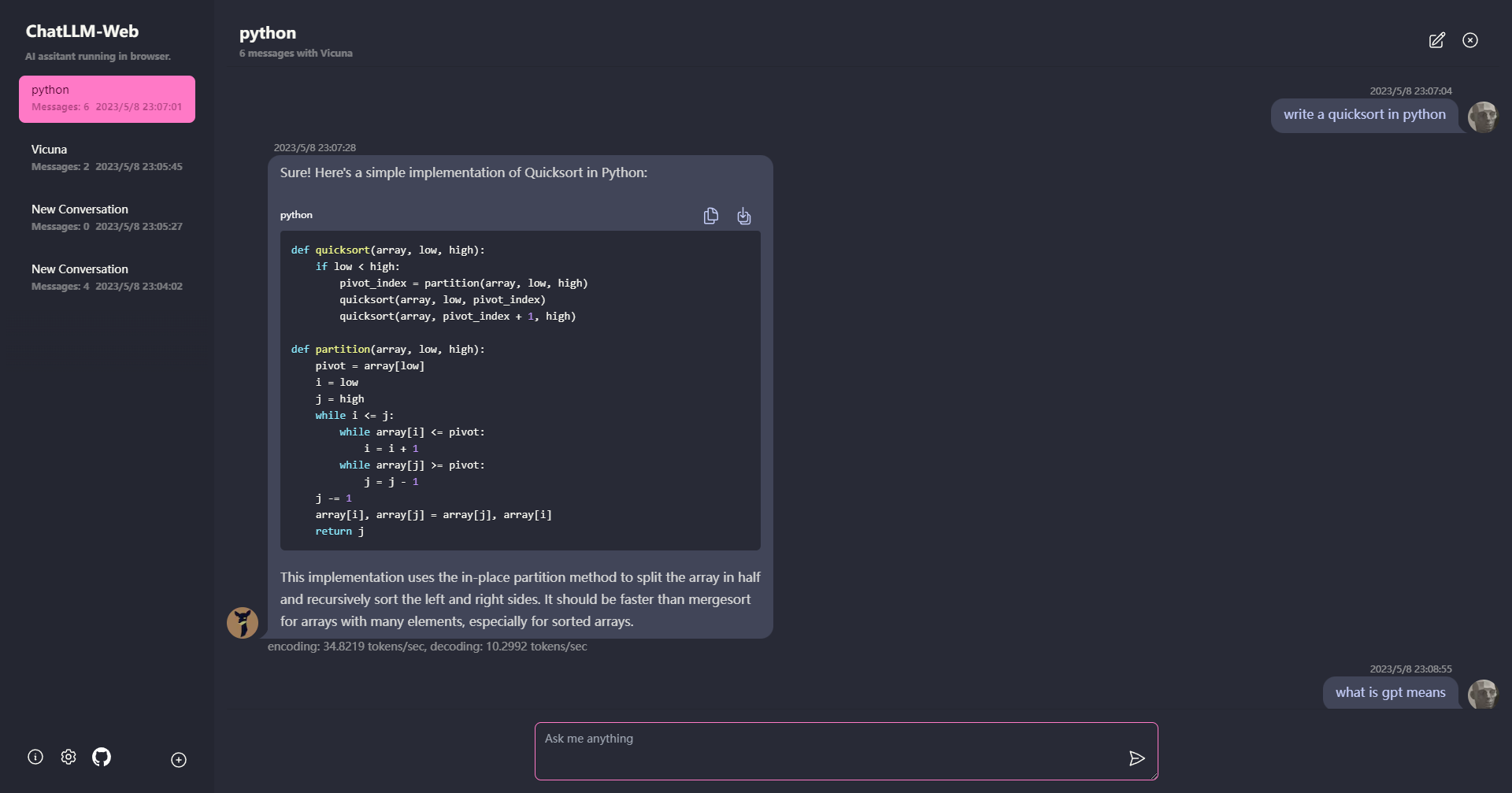

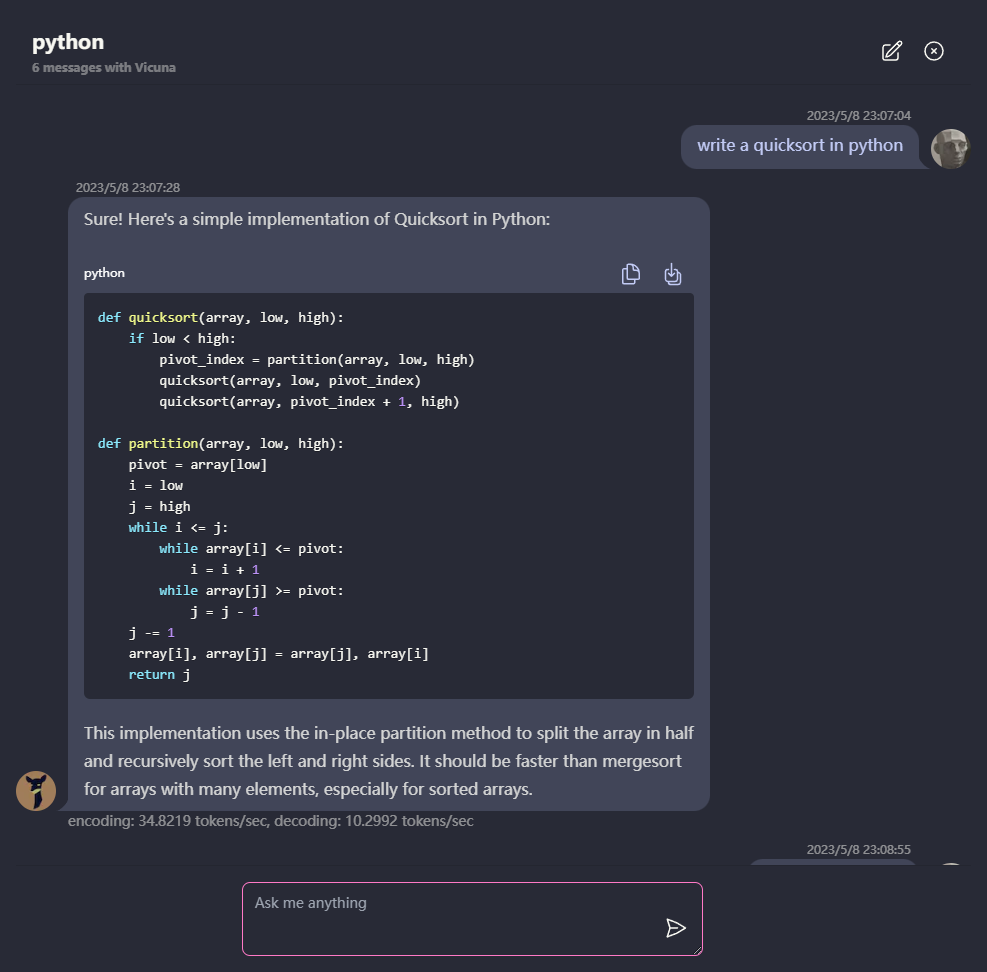

🗣️ Chat with LLMs like Vicuna entirely in your browser with WebGPU: safely, privately, and with no server. Powered by web-llm.

- 🤖 Everything runs inside the browser with no server support and is accelerated with WebGPU.
- ⚙️ The model runs in a web worker, so it never blocks the user interface and the app stays responsive.
- 🚀 Easy to deploy for free: one click on Vercel, and in under a minute you have your own ChatLLM Web.
- 💾 Model caching is supported, so you only need to download the model once.
- 💬 Multi-conversation chat, with all data stored locally in the browser for privacy.
- 📝 Markdown rendering and streaming responses, including math and code highlighting.
- 🎨 Responsive, well-designed UI, including dark mode.
- 💻 PWA support: install it and run it fully offline.
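
The web-worker design above boils down to a small message protocol between the UI thread and the worker that owns the model. The following is a minimal, hypothetical sketch: the message names (`init`, `generate`, `token`, `done`, `error`), `createWorkerHandler`, and `loadModel` are illustrative assumptions, not ChatLLM Web's or web-llm's actual API, and the model is replaced by a fake synchronous token generator (real model loading and generation are asynchronous).

```typescript
// Hypothetical messages sent from the UI thread to the model worker.
type ToWorker =
  | { type: "init"; model: string }
  | { type: "generate"; prompt: string };

// Hypothetical messages the worker posts back to the UI thread.
type FromWorker =
  | { type: "ready" }
  | { type: "token"; text: string }
  | { type: "done" }
  | { type: "error"; message: string };

// Stand-in for a loaded model: returns the tokens of a reply.
// In the real app this role is played by web-llm, asynchronously.
type Generate = (prompt: string) => string[];

// Builds the worker's message handler. `load` and `post` are injected so the
// same logic can run inside a Worker or in a plain test.
function createWorkerHandler(
  load: (model: string) => Generate,
  post: (msg: FromWorker) => void,
): (msg: ToWorker) => void {
  let generate: Generate | null = null;
  return (msg) => {
    switch (msg.type) {
      case "init": {
        generate = load(msg.model);
        post({ type: "ready" });
        break;
      }
      case "generate": {
        const gen = generate;
        if (!gen) {
          post({ type: "error", message: "model not initialized" });
          break;
        }
        // Post one message per token so the UI can render the reply incrementally.
        for (const text of gen(msg.prompt)) post({ type: "token", text });
        post({ type: "done" });
        break;
      }
    }
  };
}

// Inside a real worker file it could be wired up roughly as follows
// (`loadModel` is a hypothetical loader):
//   const handle = createWorkerHandler(loadModel, (m) => self.postMessage(m));
//   self.onmessage = (e) => handle(e.data);
```

Keeping the handler free of `self` and `postMessage` makes it testable outside a browser; only the two wiring lines touch the Worker API.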

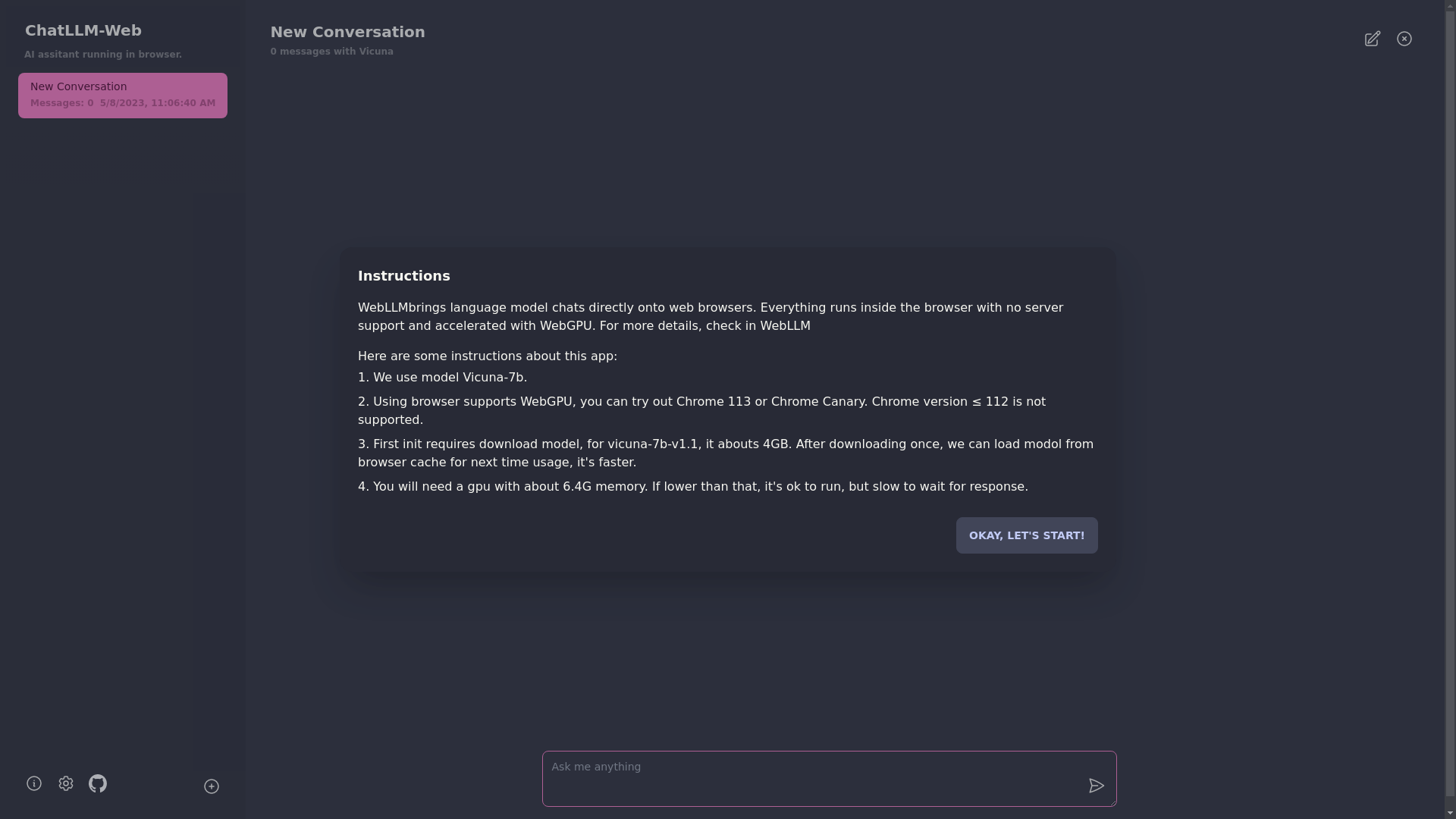

- 🌐 To use this app, you need a browser that supports WebGPU, such as Chrome 113 or Chrome Canary. Chrome 112 and earlier are not supported.
- 💻 You will need a GPU with about 6.4GB of memory. With less GPU memory the app still runs, but responses are slower.
- 📥 The first time you use the app, it downloads the model. For the Vicuna-7b model currently used, the download is about 4GB. After that, the model is loaded from the browser cache, so later sessions start faster.
- ℹ️ For more details, see mlc.ai/web-llm.
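
The browser requirement can be checked up front with the standard WebGPU entry point, `navigator.gpu.requestAdapter()`, which resolves to `null` when no suitable GPU adapter is available. This is a minimal sketch with hypothetical names (`checkWebGPU`, `NavigatorLike`, `GPULike`) and small structural types in place of the `@webgpu/types` package; it does not check the ~6.4GB memory requirement.

```typescript
// Minimal structural types so the sketch compiles without @webgpu/types.
interface GPULike {
  requestAdapter(): Promise<object | null>;
}
interface NavigatorLike {
  gpu?: GPULike;
}

type WebGPUStatus = "unsupported" | "no-adapter" | "ok";

// Hypothetical helper: reports whether the app can run in this browser.
async function checkWebGPU(nav: NavigatorLike): Promise<WebGPUStatus> {
  // Browsers without WebGPU (e.g. Chrome 112 and earlier) expose no navigator.gpu.
  if (!nav.gpu) return "unsupported";
  // requestAdapter() resolves to null when no suitable GPU adapter is found.
  const adapter = await nav.gpu.requestAdapter();
  return adapter ? "ok" : "no-adapter";
}

// In the browser:
//   checkWebGPU(navigator as unknown as NavigatorLike).then((s) => console.log(s));
```

Taking the navigator as a parameter keeps the check testable with fake objects outside a browser.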

- [✅] LLM: use a web worker to create an LLM instance and generate answers.
- [✅] Conversations: multi-conversation support.
- [✅] PWA
- [] Settings:
  - UI: dark/light theme
  - Device:
    - GPU device selection
    - Cache usage and management
  - Model:
    - Multiple models: vicuna-7b✅ RedPajama-INCITE-Chat-3B []
    - Parameter configuration: temperature, max-length, etc.
    - Model export & import

To run ChatLLM Web locally for development:

    git clone https://github.com/Ryan-yang125/ChatLLM-Web.git
    cd ChatLLM-Web
    npm i
    npm run dev