This repo is a concise summary and replacement of the Amazon Redshift by Edureka tutorial. Using the hyperlinks below is optional.

What is a data warehouse? - a repository where data generated from your organization's operational systems is collected, transformed, and stored.

What is Redshift? - a parallel, column-oriented database for analyzing data in your data warehouse.

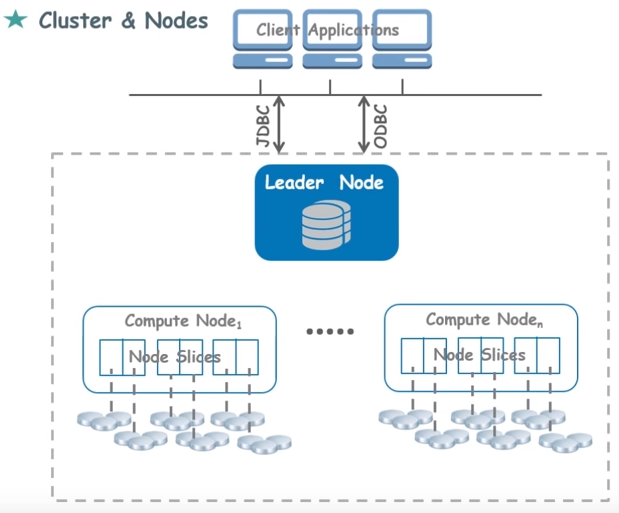

What are clusters and nodes? - nodes are a collection of compute resources. A group of nodes is called a cluster. Each cluster runs a Redshift engine, and it contains 1+ databases.

What is a leader node? - receives queries from client applications. It coordinates the parallel execution of a query using 1+ compute nodes. The leader node also aggregates the results from the compute nodes and sends it back to the client appliation.

What is a compute node? - compute resources that are used to process queries that the leader node sends it. Compute nodes can transfer data amongst themselves to solve queries.

What are node slices? - compute nodes are divided into node slices. Each slice receives memory and disk space. Slices work in parallel to perform operations.

How do clients communicate with Redshift? - client applications communicate with the Leader Node using either:

- JDBC (Java Database Connectivity Driver) - an API for Java, or

- ODBC (Other Database Connectivity Driver) - uses SQL to interact with Leader Node

What are some settings you select during Redshift cluster creation? - type of node you want, number of nodes, the VPC where you want to create your data warehouse, etc.

How does Redshift autoscale up and down? - it just increases or lowers the number of compute nodes.

Is Redshift row or column storage? - column storage.

What are some benefits of column storage? Since the data is stored next to each other, we get:

- Improved Data compression

- Faster queries and updates on columns

What is a Data Lake? - a storage repository that holds a vast amount of raw data in it's native format until it's needed (CSV, Parquet, TSV, RCFile, etc.)

What is ETL? - A sample ETL is when you Extract, Transform, Load data from a data lake into Redshift. This is time-consuming, compute-intensive, and costly (due to the need of growing your clusters in Redshift).

What is Amazon Redshift Spectrum? - Instead of doing ETL jobs, you can use Amazon RedShift Spectrum to directly query data in s3 or a data lake, without unnecessary data movement.

How is data backed up in Redshift? - Redshift offers backup and recovery. As soon as data is stored in Redshift, a copy of that data is sent to s3 through a secure connection. If you lose your data, you can restore it easily using s3.

Does Redshift provide encryption? - yes.

What software do you need to install to use Redshift?

- SQL Workbench - this is where you do queries

- JDBC Driver - enables the client application to communicate with Redshift

- Also, Java Runtime should be enabled on your OS

How create a Redshift cluster in AWS Console? - in the AWS Console, go to Amazon Redshift and click Quick launch cluster (fastest way) or Launch cluster (more versatile way)

When creating a Redshift cluster, what is the default port? - they set it to 5439 for us. We use this port number later.

How is a database created in Redshift? - when we used the "Quick launch cluster" option, a default database called dev is created for us.

What URL does SQL workbench need to connect to the cluster - either a JDBC URL or ODBC URL. Both can be found in AWS Console's Redshift dashboard, under Clusters.

How create tables in Redshift? - can use a sample database from AWS documentation (which is a bunch of create table commands to copy/paste)

How copy data for a database from s3 into Redshift?

You can do something like:

copy users from 's3://awssamplebuswest2/tickit/allusers_pipe.txt'

credentials 'aws_iam_role=<iam-role-arn>'

delimiter '|' region 'us-west-2';- This copies to the table

usersfrom a given path. - You also have to provide an IAM role as credentials

- The

delimiteris what separates the fields of the columns regionis where your s3 bucket is located

How perform queries on this new data? - use SQL workbench.

How see previous queries you've performed? - In AWS Console, go to your Redshift cluster and click the Queries tab.

How much data should you have for Redshift to be a good idea? - 100 GB or more. Otherwise, use MySQL.

Is Redshift good for BLOB data? - No. Use s3 instead.

How is backup/restore achieved? The compute nodes asynchronously backup to s3 (currently this is every 5 GB of changed data, or 8 hours)

What is the primary goal when distributing data across nodes? - to distribute the data evenly. This maximizes parallel processing.

What are the distribution styles: DISTKEY, ALL, EVEN?

- DISTKEY - hashes a key to distribute data.

- ALL - puts a copy of the table on every node.

- EVEN - splits a table evenly across 2+ nodes

What's the best distribution style (DISTKEY, ALL, EVEN) for small tables? - ALL. For scenarios where a big table is being joined with a small table, it's beneficial that the small table exists on the same node, so no data transfer happens between nodes.

If JOINs are being performed often on a column (that has distinct values), how should you distribute data? - DISTKEY will evenly distribute the data across nodes. In addition, since JOINs are being performed often on a certain column, using that column as the key will spread out that column's data evenly across nodes.

What if you have a huge table, and Column1 and Column2 are each accessed 50% of the time? How can you do distribution? - Create 2 of this table, one with a DISTKEY for Column1, and one with a DISTKEY for Column2.

When do you use the EVEN distribution style? - when you have no insights into the data, or which columns are accessed frequently, you can just distribute the data evenly across the nodes.

Does Redshift enforce primary key or foreign keys? - No, but defining them can speed up queries.

What's a good way to copy data into redshift, using files? - Instead of using 1 big file, split the data into multiple files and use 1 COPY command to copy data from multiple files. Each slice in a node will process 1 file, so if you have 960 slices, use 960 files.

What is vacuuming? - When you perform a delete, rows are marked for deletion, but not removed. Redshift automatically runs a VACUUM DELETE operation (during periods of reduced load) to actually delete this rows.

Instead of deleting old data, what's a better idea? - Deleting data causes vacuuming, which is slow (could take hours). Instead, split the data into tables by time (such as month). You can delete old data by simply using DROP TABLE on the table.

What are subqueries? When are they okay to use? - A subquery is a query within a query. Use subqueries in cases where one table in the query is used only for predicate conditions. A subquery in a query results in nested loops, so only use it when a subquery returns a small number of rows (like less than 200).

What are blocks? - Column data is persisted to 1 MB immutable blocks. When factoring in compression, there can be 1 million values in a single block.

What is a Redshift Sort Key? - Redshift Sort Key determines the order in which rows in a table are stored. Query performance is improved when Sort keys are properly used as it enables query optimizer to read fewer chunks of data filtering out the majority of it.

- Youtube: Amazon Redshift Tutorial | AWS Tutorial for Beginners | AWS Certification Training | Edureka - good beginner tutorial.

- YouTube: Deep Dive on Amazon Redshift - AWS Online Tech Talks - advanced, high-level tutorial for users who already know Redshift. Mediocre.

- A Cloud Guru: Hands on with AWS Redshift: Table Design - assumes AWS Knowledge (Security Groups, VPC, etc.). Everything starting from "Load data and run sql queries" was not well explained. Very confusing tutorial.