Run Our Colab Notebook And Get Started In Less Than 10 Lines Of Code!

For guides and tutorials on how to use this package, visit https://docs.relevance.ai/docs.

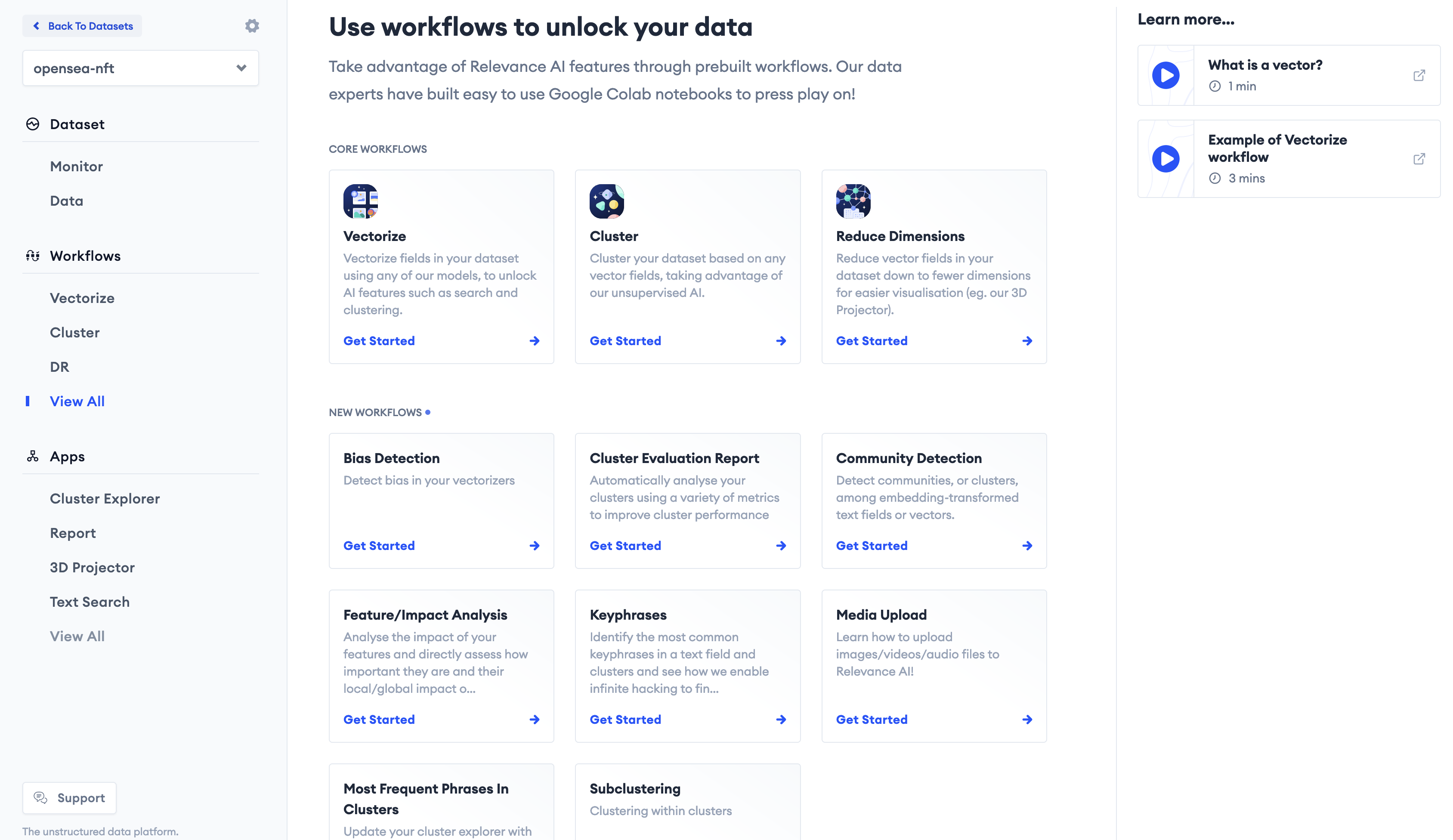

This is a home for all of RelevanceAI's workflows as seen in the dashboard. Sign up and get started here!

Workflows guide users through running the relevant code in Relevance AI, providing a guided interface to Relevance AI features.

Some of these features include:

- Clustering

- Dimensionality Reduction

- Labelling/Tagging

- Launching Projectors

How to add a workflow

- Add a subfolder, move the notebook into it, and push the notebook.

- Modify scripts/manual_add_to_db.py: add a new document to the DOCS variable, then commit and push the script. Check how the existing documents are structured and copy that structure, otherwise things may error!

- Create a PR.
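The first step (creating the subfolder and moving the notebook into it) can be scripted. This is a minimal sketch using only the standard library; the helper name move_notebook_to_subfolder and the example paths are hypothetical, and where the subfolder should live is an assumption, so check where the existing workflow notebooks sit. Pushing, editing DOCS, and opening the PR still happen as described above.

```python
import shutil
from pathlib import Path


def move_notebook_to_subfolder(notebook: Path, repo_root: Path, subfolder: str) -> Path:
    """Create repo_root/subfolder if needed, move the notebook into it, return the new path.

    Hypothetical helper: the folder layout is an assumption, not taken from the repo.
    """
    target_dir = repo_root / subfolder
    target_dir.mkdir(parents=True, exist_ok=True)
    destination = target_dir / notebook.name
    # str() keeps shutil.move happy on older Python versions (the repo targets ^3.7).
    shutil.move(str(notebook), str(destination))
    return destination


# Example (hypothetical paths):
#   move_notebook_to_subfolder(Path("my_workflow.ipynb"), Path("."), "my_workflow")
# then git add / commit / push the new folder as usual.
```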

- Fast vector search with a free dashboard to preview and visualise results

- Vector clustering with support for libraries like scikit-learn and easy built-in customisation

- Store nested documents with support for multiple vectors and metadata in one object

- Multi-vector search with filtering, facets, weighting

- Hybrid search with support for weighting keyword matching and vector search ... and more!
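The multi-vector and hybrid search bullets above describe combining several similarity signals into one ranking score. The sketch below is a generic toy illustration of that idea in plain Python, not Relevance AI's API or its actual scoring: the field names, the weights, the use of cosine similarity, and the crude keyword-overlap score are all assumptions chosen for illustration.

```python
import math
from typing import Mapping, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def keyword_score(query: str, text: str) -> float:
    """Fraction of query terms that appear as whole words in the text."""
    terms = query.lower().split()
    words = set(text.lower().split())
    return sum(term in words for term in terms) / len(terms) if terms else 0.0


def hybrid_score(doc: Mapping, query: str, query_vectors: Mapping[str, Sequence[float]],
                 vector_weights: Mapping[str, float], text_field: str,
                 keyword_weight: float) -> float:
    """Weighted sum of per-field vector similarities plus a weighted keyword match."""
    vector_part = sum(weight * cosine(query_vectors[field], doc[field])
                      for field, weight in vector_weights.items())
    return vector_part + keyword_weight * keyword_score(query, doc[text_field])


# One document holding several vectors plus metadata (hypothetical field names).
doc = {
    "title": "red running shoes",
    "title_vector": [1.0, 0.0],
    "image_vector": [0.0, 1.0],
    "brand": "acme",
}
score = hybrid_score(
    doc,
    query="running shoes",
    query_vectors={"title_vector": [1.0, 0.0], "image_vector": [1.0, 0.0]},
    vector_weights={"title_vector": 0.5, "image_vector": 0.5},
    text_field="title",
    keyword_weight=1.0,
)
# 0.5 * 1.0 (title) + 0.5 * 0.0 (image) + 1.0 * 1.0 (keywords) = 1.5
```

Raising keyword_weight favours exact term matches, while shifting vector_weights changes which vector field dominates the ranking.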

- Python ^3.7.0

- AWS CLI v2 - if you need to upload new workflows to prod

To get started with development, install the dev dependencies:

❯ make install

Make sure your AWS SSO profile and credentials are configured in ~/.aws/config.

Install yawsso (or a similar tool) to sync the API credentials that CDK needs with your SSO profile.

Set the AWS_PROFILE environment variable so you don't have to pass --profile on every AWS CLI call.
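If you drive the upload from Python rather than the shell, the same variables can be handed to the child process through its environment, which is what the one-line shell command does. This is a minimal sketch; the helper names upload_env and run_upload are hypothetical, and it assumes `make upload` picks up ENVIRONMENT and AWS_PROFILE from its environment as the shell command implies.

```python
import os
import subprocess
from typing import Dict, Mapping, Optional


def upload_env(environment: str, aws_profile: str,
               base: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return a copy of base (default: os.environ) with the upload variables set."""
    env = dict(os.environ if base is None else base)
    env["ENVIRONMENT"] = environment
    # The AWS CLI reads AWS_PROFILE, so commands launched with this environment
    # use the profile without an explicit --profile flag.
    env["AWS_PROFILE"] = aws_profile
    return env


def run_upload(environment: str = "development",
               aws_profile: str = "Relevance-AI.WorkflowsAdminAccess") -> None:
    """Run `make upload` with the variables above; raises CalledProcessError on failure."""
    subprocess.run(["make", "upload"], env=upload_env(environment, aws_profile), check=True)
```

Copying the parent environment rather than starting from an empty dict keeps PATH and the synced AWS credentials visible to make and the AWS CLI.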

❯ ENVIRONMENT=development AWS_PROFILE=Relevance-AI.WorkflowsAdminAccess make upload

Then run the tests as follows:

Don't forget to set your test credentials!

export TEST_ACTIVATION_TOKEN=<YOUR_ACTIVATION_TOKEN>

## For testing core workflows

export WORKFLOW_TOKEN_CLUSTER_YOUR_DATA_WITH_RELEVANCE_AI=<DASHBOARD_BASE64_TOKEN_FROM_CLUSTER_WORKFLOW>

export WORKFLOW_TOKEN_VECTORIZE_YOUR_DATA_WITH_RELEVANCE_AI=<DASHBOARD_BASE64_TOKEN_FROM_VECTORIZE_WORKFLOW>

export WORKFLOW_TOKEN_REDUCE_THE_DIMENSIONS_OF_YOUR_DATA_WITH_RELEVANCE_AI=<DASHBOARD_BASE64_TOKEN_FROM_DR_WORKFLOW>

export WORKFLOW_TOKEN_CORE_SUBCLUSTERING=<DASHBOARD_BASE64_TOKEN_FROM_SUBCLUSTERING_WORKFLOW>

Run the test script:

- tests all notebooks in workflows

- writes errors to notebook_error.log

❯ python scripts/test_notebooks.py
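If a test run fails straight away, an unset token is one possible cause. The sketch below is a small pre-flight check that lists which of the variables from the export lines above are unset or empty. The helper name missing_test_vars is hypothetical, and it is an assumption that every run needs all five tokens; trim the list to the workflows you are actually testing.

```python
import os
from typing import Iterable, List, Mapping, Optional

# Names copied from the export lines in this README.
REQUIRED_TEST_VARS = (
    "TEST_ACTIVATION_TOKEN",
    "WORKFLOW_TOKEN_CLUSTER_YOUR_DATA_WITH_RELEVANCE_AI",
    "WORKFLOW_TOKEN_VECTORIZE_YOUR_DATA_WITH_RELEVANCE_AI",
    "WORKFLOW_TOKEN_REDUCE_THE_DIMENSIONS_OF_YOUR_DATA_WITH_RELEVANCE_AI",
    "WORKFLOW_TOKEN_CORE_SUBCLUSTERING",
)


def missing_test_vars(names: Iterable[str] = REQUIRED_TEST_VARS,
                      env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the names that are unset or empty in env (defaults to os.environ)."""
    env = os.environ if env is None else env
    return [name for name in names if not env.get(name)]


# Example (hypothetical usage) before running scripts/test_notebooks.py:
#   missing = missing_test_vars()
#   if missing:
#       raise SystemExit("Missing test credentials: " + ", ".join(missing))
```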

## Testing an individual notebook

❯ python scripts/test_notebooks.py --notebooks subclustering/core_subclustering.ipynb

❯ make help

Available rules:

clean Delete all compiled Python files

install Install dependencies

lint Lint using flake8

test Test dependencies

update Update dependencies

upload Upload notebooks to S3 and update ds