This environment has been created for the sole purpose of providing an easy-to-deploy and easy-to-consume Red Hat OpenShift Container Platform v3.x environment as a sandpit.

This install will create a 'Minimal Viable Setup', which anyone can extend to their needs and purpose.

Use at your own pleasure and risk!

OpenShift Container Platform requires a valid Red Hat subscription for Red Hat OpenShift Container Platform! Access to the access.redhat.com website is also required to download RHEL 7.x cloud images.

If you want to provide additional features, please feel free to contribute via pull requests or any other means. We are happy to track and discuss ideas, topics and requests via 'Issues'.

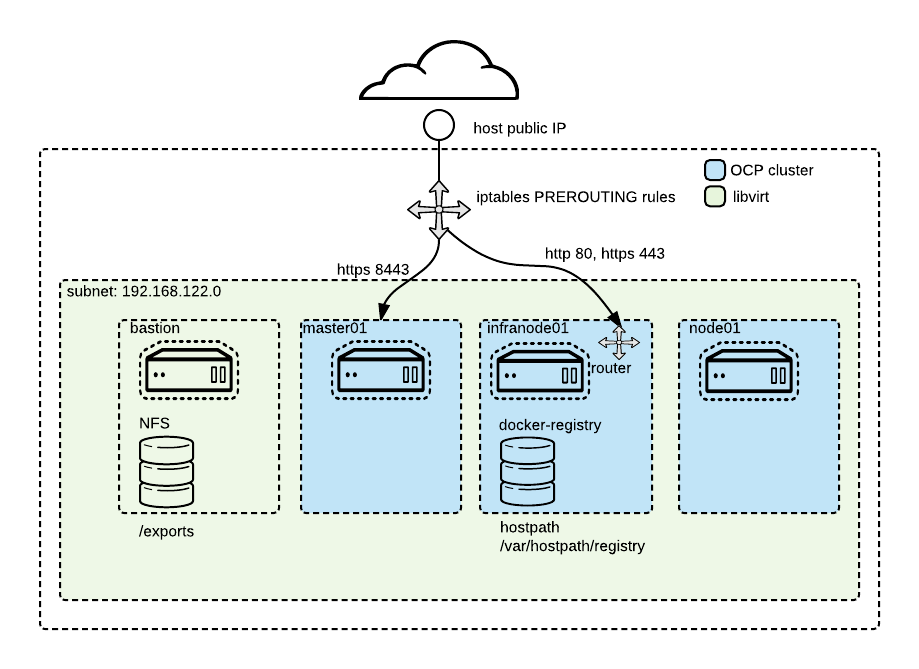

When you follow these steps, you will end up with a setup similar to the one shown in the accompanying diagram.

NOTE: Our instructions are based on the Root-Server as provided by https://www.hetzner.com/ , please feel free to adapt them to the needs of your preferred hosting provider. We are happy to get pull requests for updated documentation that makes this setup easy to consume on other hosting providers as well.

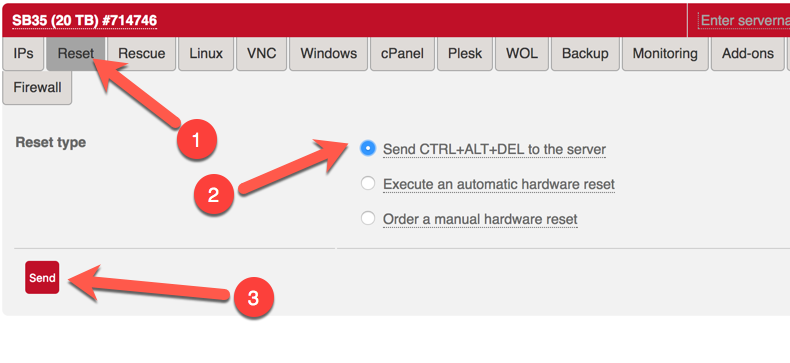

When you get your server, it comes without an OS and is booted into rescue mode, where you decide how it will be configured.

When you log in to the machine, it will be running a Debian-based rescue system, and the welcome screen will look something like this:

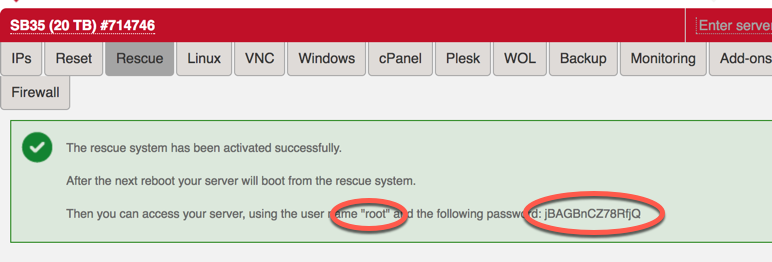

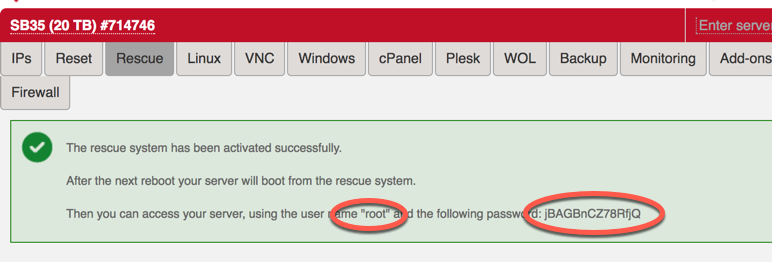

NOTE: If your system is not in rescue mode anymore, you can activate it from https://robot.your-server.de/server. Select your server and open the "Rescue" tab. There, select Linux, 64 bit, and your public key if there is one.

This will delete whatever you had on your system earlier and will bring the machine into its rescue mode. Please do not forget your new root password.

After resetting your server, you are ready to connect to your system via ssh.

When you log in to your server, the rescue system will display some hardware specifics for you:

-------------------------------------------------------------------

Welcome to the Hetzner Rescue System.

This Rescue System is based on Debian 8.0 (jessie) with a newer

kernel. You can install software as in a normal system.

To install a new operating system from one of our prebuilt

images, run 'installimage' and follow the instructions.

More information at http://wiki.hetzner.de

-------------------------------------------------------------------

Hardware data:

CPU1: Intel(R) Core(TM) i7 CPU 950 @ 3.07GHz (Cores 8)

Memory: 48300 MB

Disk /dev/sda: 2000 GB (=> 1863 GiB)

Disk /dev/sdb: 2000 GB (=> 1863 GiB)

Total capacity 3726 GiB with 2 Disks

Network data:

eth0 LINK: yes

MAC: 6c:62:6d:d7:55:b9

IP: 46.4.119.94

IPv6: 2a01:4f8:141:2067::2/64

RealTek RTL-8169 Gigabit Ethernet driver

From this information, the following items are important to note:

- Number of disks (2 in this case)

- Memory

- Cores

The guest VM setup uses the hypervisor's vg0 volume group for guest storage, so leave as much free space as possible on vg0.

The installimage tool is used to install CentOS. It takes its instructions from a text file.

Create a new config.txt file:

vi config.txt

Copy the content below into that file as a template:

DRIVE1 /dev/sda

DRIVE2 /dev/sdb

SWRAID 1

SWRAIDLEVEL 1

BOOTLOADER grub

HOSTNAME CentOS-76-64-minimal

PART /boot ext3 512M

PART lvm vg0 all

LV vg0 root / ext4 200G

LV vg0 swap swap swap 5G

LV vg0 tmp /tmp ext4 10G

LV vg0 home /home ext4 40G

IMAGE /root/.oldroot/nfs/install/../images/CentOS-76-64-minimal.tar.gz

There are some things that you will probably have to change:

- Do not allocate all vg0 space to /, swap, /tmp and /home.

- If you have a single disk, remove the line DRIVE2 and the lines SWRAID*.

- If you have more than two disks, add DRIVE3...

- If you don't need RAID, just change SWRAID to 0.

- Valid values for SWRAIDLEVEL are 0, 1 and 10. 1 means mirrored disks.

- Configure the LV sizes so that they match your total disk size. In this example I have 2 x 2 TB disks in RAID 1, so the total disk space available is 2 TB (1863 GiB).

- If you like, you can add more volume groups and logical volumes.
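The sizing advice above can be checked with a little arithmetic before you run installimage. The following is a minimal sketch and not part of this project: the helper name vg_free_gib is hypothetical, and it assumes you pass the usable size of vg0 in GiB followed by the LV sizes in GiB taken from config.txt. The 512M /boot partition and LVM metadata are ignored, so the real figure will be slightly lower.

```shell
# Hypothetical helper: estimate the space left on vg0 for guest VMs.
# Usage: vg_free_gib <usable vg0 size in GiB> [<LV size in GiB> ...]
vg_free_gib() (
  total=$1
  shift
  used=0
  # Add up every LV size given on the command line.
  for size in "$@"; do
    used=$((used + size))
  done
  echo $((total - used))
)

# Example with the template above: 1863 GiB usable (2 x 2 TB in RAID 1)
# and LVs of 200, 5, 10 and 40 GiB.
vg_free_gib 1863 200 5 10 40   # prints 1608
```

With the template values, roughly 1600 GiB would remain free on vg0 for guest disks.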

When you are happy with the file content, save and exit the editor via :wq and start the installation with the following command:

installimage -a -c config.txt

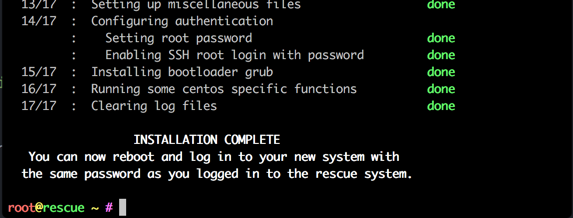

If there are errors, you will be informed about them and you need to fix them. At completion, the final output should be similar to

You are now ready to reboot your system into the newly installed OS.

reboot now

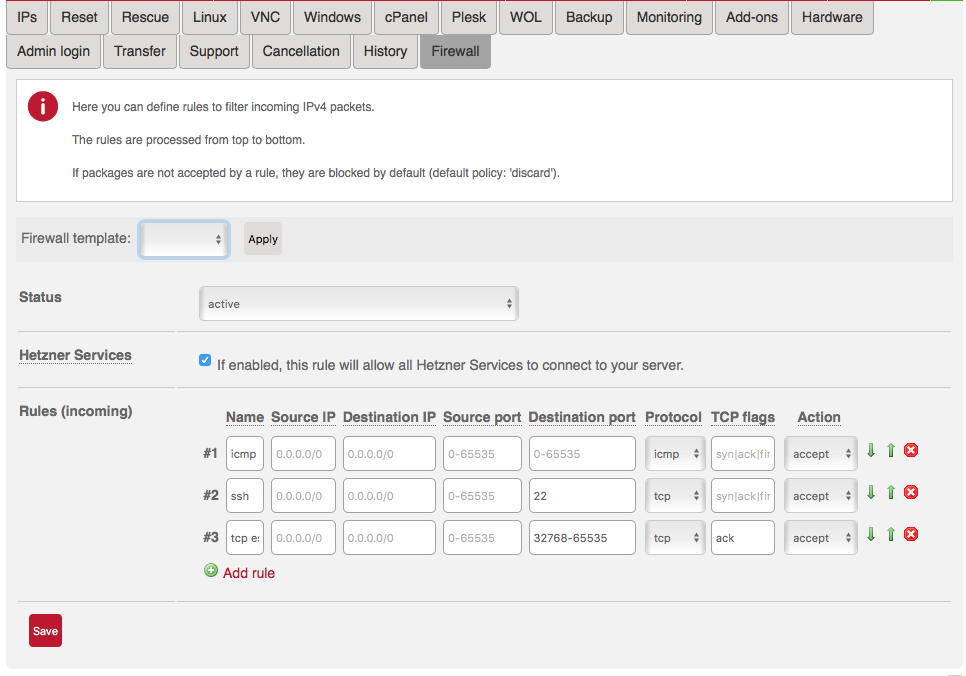

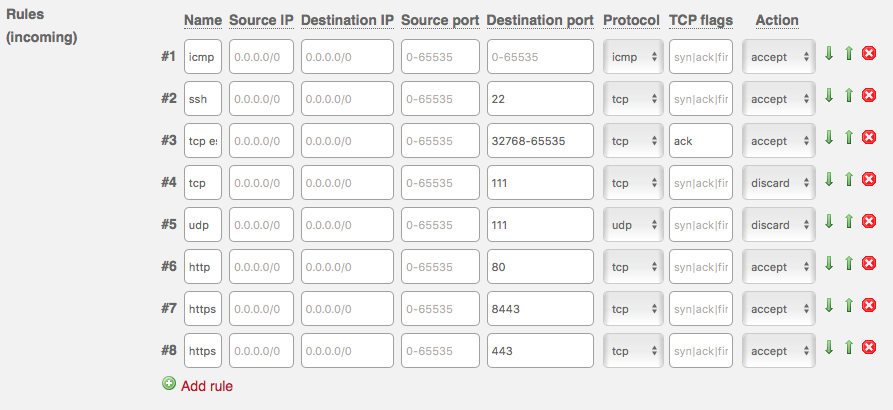

While the server is rebooting, you can modify your server's firewall rules so that OCP traffic can get in. By default the firewall allows port 22 for SSH and nothing else. See the image below for how the firewall setup looks. Firewall settings can be modified under your server's settings in Hetzner's web UI https://robot.your-server.de/server.

You need to add ports 80, 443 and 8443 to the rules and also block 111. If you don't block that port, you need to stop and disable rpcbind.service and rpcbind.socket. Add new rules so that the rule listing matches the screenshot below.
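If you choose not to block port 111 in the firewall, the rpcbind units mentioned above can be stopped and disabled with systemctl. The sketch below is an illustration only: the wrapper name disable_rpcbind and the APPLY variable are hypothetical. By default it only prints the commands; set APPLY=1 and run it as root on the hypervisor to execute them.

```shell
# Hypothetical wrapper: print (default) or run the commands that stop
# and disable rpcbind.service and rpcbind.socket.
disable_rpcbind() (
  for action in stop disable; do
    cmd="systemctl $action rpcbind.service rpcbind.socket"
    if [ "${APPLY:-0}" = "1" ]; then
      $cmd          # really execute the command
    else
      echo "$cmd"   # dry run: only show what would be executed
    fi
  done
)

# Dry run: shows the two systemctl commands without changing anything.
disable_rpcbind
```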

Install ansible and git

[root@CentOS-73-64-minimal ~]# yum install -y ansible git wget screen

You are now ready to clone this project to your CentOS system.

git clone https://github.com/RedHat-EMEA-SSA-Team/hetzner-ocp.git

We are now ready to install libvirt as our hypervisor, provision VMs and prepare those for OCP.

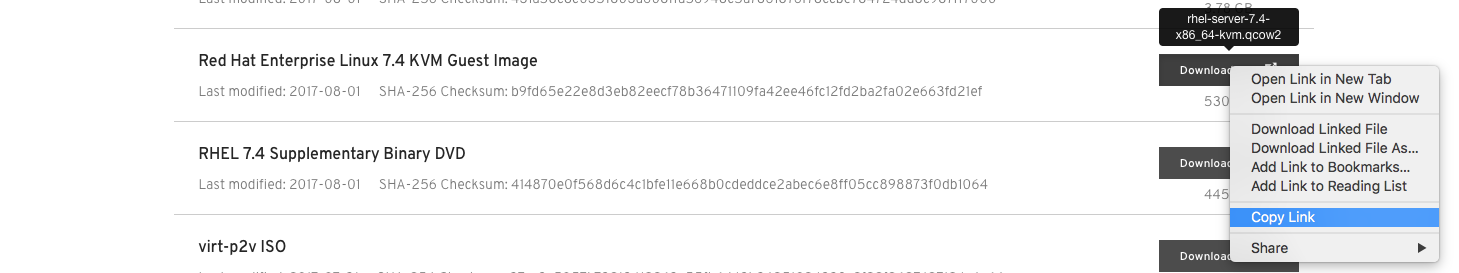

- Go to RHEL downloads page https://access.redhat.com/downloads/content/69/ver=/rhel---7/7.6/x86_64/product-software

- Copy download link from image Red Hat Enterprise Linux 7.6 KVM Guest Image to your clipboard

Download image

wget -O /root/rhel-kvm.qcow2 "PASTE_URL_HERE"

or

# Download the image onto your notebook first, then copy it to the server

scp rhel-server-7.6-x86_64-kvm.qcow2 root@5.9.77.247:/root/rhel-kvm.qcow2

With our hypervisor installed and ready, we can now proceed with the creation of the VMs, which will then host our OpenShift installation.

Check playbooks/vars/guests.yml and modify it to match your environment. By default, the following VMs are installed:

- bastion

- master01

- infranode01

- node01

Here is a sample guest definition that fits a 64 GB setup well.

guests:

- name: bastion

  cpu: 1

  mem: 2048

  disk_os_size: 40g

  node_group: bastion

- name: master01

  cpu: 2

  mem: 18384

  disk_os_size: 40g

  ocs_host: 'true'

  node_group: node-config-master

- name: infranode01

  cpu: 2

  mem: 18384

  disk_os_size: 40g

  ocs_host: 'true'

  node_group: node-config-infra

- name: node01

  cpu: 2

  mem: 18384

  disk_os_size: 40g

  ocs_host: 'true'

  node_group: node-config-compute

Basically, you only need to change the number of VMs and/or the cpu and mem values.
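Before provisioning, it can help to confirm that the guests' combined memory fits into the host. The following is a rough sketch, not part of this project: the helper name guests_mem_total is hypothetical, it assumes the mem values are given in MB, and it simply adds up every line whose first field is mem: rather than parsing the YAML properly.

```shell
# Hypothetical helper: sum all "mem:" values of a guests.yml-style file.
# Reads the files given as arguments, or standard input if there are none.
guests_mem_total() {
  awk '$1 == "mem:" { total += $2 } END { print total + 0 }' "$@"
}

# Example: the four guests from the sample definition above.
printf '%s\n' 'mem: 2048' 'mem: 18384' 'mem: 18384' 'mem: 18384' | guests_mem_total   # prints 57200
```

On the hypervisor you could run guests_mem_total playbooks/vars/guests.yml and compare the result with the total shown by free -m. The sample definition adds up to 57200, which is more than the 48300 MB of the example server shown earlier, so the mem values would need to be reduced on that machine.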

Provision the VMs and prepare them for OCP. The password for all hosts is p.

Be careful to pick the right subscription-pool-id: check that there are enough RHEL entitlements left in this pool.

[root@CentOS-73-64-minimal ~]# cd hetzner-ocp

[root@CentOS-73-64-minimal hetzner-ocp]# export ANSIBLE_HOST_KEY_CHECKING=False; ansible-playbook playbooks/setup.yml

Provisioning the hosts takes a while, and they stay in the running state until provisioning and preparation are finished. Maybe time for another cup of coffee?

When the playbook has finished successfully, you should have 4 VMs running.

[root@CentOS-73-64-minimal hetzner-ocp]# virsh list

Id Name State

----------------------------------------------------

34 bastion running

35 master01 running

36 infranode01 running

37 node01 running
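The check above can also be scripted. This is a small sketch under the assumption that the last column of the virsh list output is the state, as in the listing above; the helper name count_running is hypothetical.

```shell
# Hypothetical helper: count the domains reported as "running" in
# `virsh list` output read from standard input.
count_running() {
  awk '$NF == "running" { n++ } END { print n + 0 }'
}

# Example using the listing shown above.
count_running <<'EOF'
 Id    Name                           State
----------------------------------------------------
 34    bastion                        running
 35    master01                       running
 36    infranode01                    running
 37    node01                         running
EOF
# prints 4
```

On the hypervisor, the equivalent call would be virsh list | count_running, which should report 4 for the default guest definition.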

Installation of OCP is done on the bastion host, so you need to ssh to bastion. It is done with the normal OCP installation playbooks. Start the installation on bastion with the following commands:

The chmod enables cloud-user to write a retry file.

Additionally, check the gluster-fs versions in the file playbooks/roles/inventory/templates/hosts.j2 against https://access.redhat.com/solutions/3617551

NOTE: If you want to use valid TLS certificates, issue the certificates before installation. Check the section Adding valid certificates from Let's Encrypt.

[root@CentOS-73-64-minimal hetzner-ocp]# ssh bastion -l cloud-user

[cloud-user@bastion ~]# sudo chmod 777 /usr/share/ansible/openshift-ansible/playbooks

[cloud-user@bastion ~]# ansible-playbook /usr/share/ansible/openshift-ansible/playbooks/prerequisites.yml

[cloud-user@bastion ~]# ansible-playbook /usr/share/ansible/openshift-ansible/playbooks/deploy_cluster.yml

After installation there are no users in OpenShift. You can use the following playbooks on the hypervisor to create users.

Create a normal user:

[root@CentOS-73-64-minimal hetzner-ocp]# ansible-playbook playbooks/utils/add_user.yml

Create a cluster admin user:

[root@CentOS-73-64-minimal hetzner-ocp]# ansible-playbook playbooks/utils/add_cluster_admin.yml

If you are NOT using OCS, you need to provide some kind of persistent storage for the registry. Use the following playbook to add a hostpath volume to the registry. The playbook assumes that you have a single infranode named infranode01; if you have several, you have to use a node selector to deploy the registry to the correct node.

[root@CentOS-73-64-minimal hetzner-ocp]# ansible-playbook playbooks/utils/registry_hostpath.yml

After a successful installation of OpenShift, you will be able to log in with the details below. The password is the one that you entered when you added the new user(s).

URL: https://master.<your hypervisors IP>.xip.io:8443

User: <username>

Password: <password>

If you have installed OCS, you don't need to add any other storage.

Note: For now this works only if you have a single node :)

Check how much disk space you have left with df -h. If you have plenty, you can change the PV disk size by modifying the var named size in playbooks/hostpath.yml. You can also increase the size of PVs by modifying the array values; remember to change both.

To start the hostpath setup, execute the following on the hypervisor:

[root@CentOS-73-64-minimal hetzner-ocp]# ansible-playbook playbooks/utils/hostpath.yml

By default the bastion host is set up as the NFS server. To create the correct directories and PV objects, execute the following playbook on the hypervisor:

[root@CentOS-73-64-minimal hetzner-ocp]# ansible-playbook playbooks/utils/nfs.yml

To clean up the installation, execute the following on the hypervisor:

[root@CentOS-73-64-minimal hetzner-ocp]# ansible-playbook playbooks/clean.yml

The cleanup will also remove the iptables rules.

By default, guest VMs are provisioned using default settings. If you need to modify the guest options to better suit your needs, you can do so by modifying playbooks/vars/guests.yml

Make your modifications and start the installation process. The installer will automatically use the file named guests.yml. Remember to clean the old installation with ansible-playbook playbooks/clean.yml

Let's Encrypt can provide free TLS certificates for API and apps traffic. In this example the domain is registered with Amazon Route 53 and acme.sh (https://github.com/Neilpang/acme.sh) is used to issue the certificates. If you use another DNS provider, follow the documentation in the acme.sh repo.

The certificate is issued on the root machine.

Install socat

yum install -y socat

Install acme.sh

curl https://get.acme.sh | sh

Create a public zone for your domain. You need three A records (master, apps and the apps wildcard) that point to your machine's public IP. In this example I use my ocp.ninja domain.
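As an illustration of the three records, the sketch below prints them in a zone-file-like form. The helper name dns_records is hypothetical, and the IP address is the one from the rescue system output shown earlier; substitute your own domain and public IP.

```shell
# Hypothetical helper: list the three A records needed for a domain.
# Usage: dns_records <domain> <public IP>
dns_records() (
  domain=$1
  ip=$2
  for name in master apps '*.apps'; do
    printf '%s.%s. A %s\n' "$name" "$domain" "$ip"
  done
)

# Example with the ocp.ninja domain used in this section.
dns_records ocp.ninja 46.4.119.94
```

The output lists master.ocp.ninja., apps.ocp.ninja. and *.apps.ocp.ninja., each pointing to the same address.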

Add your AWS API key and secret to the environment. If you don't have those, follow this documentation: https://github.com/Neilpang/acme.sh/wiki/How-to-use-Amazon-Route53-API

export AWS_ACCESS_KEY_ID=...

export AWS_SECRET_ACCESS_KEY=....

Issue certificate

cd .acme.sh/

./acme.sh --issue -d master.ocp.ninja -d apps.ocp.ninja -d '*.apps.ocp.ninja' --dns dns_aws --debug

After the issue process is done, your certificate files are in the /root/.acme.sh/DOMAIN directory (/root/.acme.sh/master.ocp.ninja/)

ls -la /root/.acme.sh/master.ocp.ninja

total 36

drwxr-xr-x 2 root root 4096 Mar 7 11:22 .

drwx------ 6 root root 4096 Mar 7 11:21 ..

-rw-r--r-- 1 root root 1648 Mar 7 11:22 ca.cer

-rw-r--r-- 1 root root 3608 Mar 7 11:22 fullchain.cer

-rw-r--r-- 1 root root 1960 Mar 7 11:22 master.ocp.ninja.cer

-rw-r--r-- 1 root root 761 Mar 7 11:22 master.ocp.ninja.conf

-rw-r--r-- 1 root root 1025 Mar 7 11:21 master.ocp.ninja.csr

-rw-r--r-- 1 root root 251 Mar 7 11:21 master.ocp.ninja.csr.conf

-rw-r--r-- 1 root root 1675 Mar 7 11:21 master.ocp.ninja.key

Copy cert files to bastion

[root@...]# scp -r /root/.acme.sh/master.ocp.ninja cloud-user@bastion:/home/cloud-user

Modify playbooks/group_vars/all so that the installer finds the certificates and uses the proper domain.

[root@C...]# vi /root/hetzner-ocp/playbooks/group_vars/all

Uncomment the vars apps_dns and master_dns (remove the #) and change their values to match your domain.

apps_dns: apps.ocp.ninja

master_dns: master.ocp.ninja

Uncomment the cert file locations and change them to match your cert files.

cert_router_certfile: "/home/cloud-user/master.ocp.ninja/master.ocp.ninja.cer"

cert_router_keyfile: "/home/cloud-user/master.ocp.ninja/master.ocp.ninja.key"

cert_router_cafile: "/home/cloud-user/master.ocp.ninja/ca.cer"

cert_master_certfile: "/home/cloud-user/master.ocp.ninja/master.ocp.ninja.cer"

cert_master_keyfile: "/home/cloud-user/master.ocp.ninja/master.ocp.ninja.key"

cert_master_cafile: "/home/cloud-user/master.ocp.ninja/ca.cer"

The file locations refer to the bastion file system.

Save and exit.

For some reason, each node needs to have search cluster.local in its /etc/resolv.conf. This entry is set by the installer, but resolv.conf is always rewritten when a VM restarts.

If you have restarted VMs, or for some other reason, you may get the following error message during a build:

Pushing image docker-registry.default.svc:5000/test/test:latest ...

Registry server Address:

Registry server User Name: serviceaccount

Registry server Email: serviceaccount@example.org

Registry server Password: «non-empty»

error: build error: Failed to push image: Get https://docker-registry.default.svc:5000/v1/_ping: dial tcp: lookup docker-registry.default.svc on 192.168.122.48:53: no such host

If so, run this on the hypervisor:

[root@CentOS-73-64-minimal hetzner-ocp]# ansible-playbook /root/hetzner-ocp/playbooks/fixes/resolv_fix.yml

NOTE: This fix is lost if the VM is restarted. To make the change persistent, you need to do the following on all nodes.

- ssh to host

- vi /etc/NetworkManager/dispatcher.d/99-origin-dns.sh

- Add below line after line 113

echo "search cluster.local" >> ${NEW_RESOLV_CONF}

Save and exit and then restart NetworkManager with following command

systemctl restart NetworkManager
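To verify on a node that the entry is present, a check along the following lines could be used. This is a sketch only: the helper name has_cluster_search is hypothetical, and the sample file is made up for the demonstration (its nameserver address is taken from the error message above).

```shell
# Hypothetical helper: succeed if the given resolv.conf has a "search"
# line that lists cluster.local. Defaults to /etc/resolv.conf.
has_cluster_search() {
  awk '$1 == "search" { for (i = 2; i <= NF; i++) if ($i == "cluster.local") found = 1 }
       END { exit !found }' "${1:-/etc/resolv.conf}"
}

# Demonstration against a temporary sample file.
sample=$(mktemp)
printf 'search cluster.local\nnameserver 192.168.122.48\n' > "$sample"
if has_cluster_search "$sample"; then
  echo "search cluster.local is present"
else
  echo "search cluster.local is missing"
fi
rm -f "$sample"
```

On a node, calling has_cluster_search without arguments checks /etc/resolv.conf directly.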

Directory permissions and SELinux magic might not be set up correctly during installation. If that happens, you will encounter Error 500 during a build. If you experience that error, you should verify it in the logs of the docker-registry pod.

You can get the logs from the docker registry with these commands from the master01 host:

[root@CentOS-73-64-minimal hetzner-ocp]# ssh master01

[root@localhost ~]# oc project default

[root@localhost ~]# oc logs dc/docker-registry

If you see 'permission denied' in the registry logs, you need to run the following playbook on the hypervisor and restart the registry pod.

Playbook for fixing permissions

[root@CentOS-73-64-minimal hetzner-ocp]# ansible-playbook /root/hetzner-ocp/playbooks/fixes/registry_hostpath.yml

Restart docker-registry pod

[root@CentOS-73-64-minimal hetzner-ocp]# ssh master01

[root@localhost ~]# oc delete po -l deploymentconfig=docker-registry

Sometimes the OCP installation fails due to master access denied problems. In that case you might see an error message like the following.

FAILED - RETRYING: Verify API Server (1 retries left).

fatal: [master01]: FAILED! => {

"attempts": 120,

"changed": false,

"cmd": [

"curl",

"--silent",

"--tlsv1.2",

"--cacert",

"/etc/origin/master/ca-bundle.crt",

"https://master01:8443/healthz/ready"

],

"delta": "0:00:00.050985",

"end": "2017-08-31 12:39:30.218688",

"failed": true,

"rc": 0,

"start": "2017-08-31 12:39:30.167703"

}

STDOUT:

{

"kind": "Status",

"apiVersion": "v1",

"metadata": {},

"status": "Failure",

"message": "User \"system:anonymous\" cannot \"get\" on \"/healthz/ready\"",

"reason": "Forbidden",

"details": {},

"code": 403

}

The solution is to uninstall the current installation from the bastion host, prepare the guests again and reinstall.

Uninstall the current installation.

[root@CentOS-73-64-minimal hetzner-ocp]# ssh bastion

[root@localhost ~]# ansible-playbook /usr/share/ansible/openshift-ansible/playbooks/openshift_glusterfs/uninstall.yml

[root@localhost ~]# ansible-playbook /usr/share/ansible/openshift-ansible/playbooks/adhoc/uninstall.yml

You can also clean up everything with playbooks/clean.yml if you want a really fresh start. The clean.yml playbook is executed on the hypervisor.

Prepare guests again

[root@CentOS-73-64-minimal ~]# cd hetzner-ocp

[root@CentOS-73-64-minimal hetzner-ocp]# export ANSIBLE_HOST_KEY_CHECKING=False

[root@CentOS-73-64-minimal hetzner-ocp]# ansible-playbook playbooks/setup.yml

Start installation again

[root@CentOS-73-64-minimal hetzner-ocp]# ssh bastion

ansible-playbook /usr/share/ansible/openshift-ansible/playbooks/prerequisites.yml

ansible-playbook /usr/share/ansible/openshift-ansible/playbooks/deploy_cluster.yml