The Open-Source AI Development Platform Built for Self-Hosting

AI-powered development environment with advanced agent orchestration - designed for complete data sovereignty and infrastructure control.


Includes support for llama.cpp, LM Studio, Ollama, OpenRouter, and any provider you choose.

Infrastructure-first AI development platform designed for complete ownership and control.

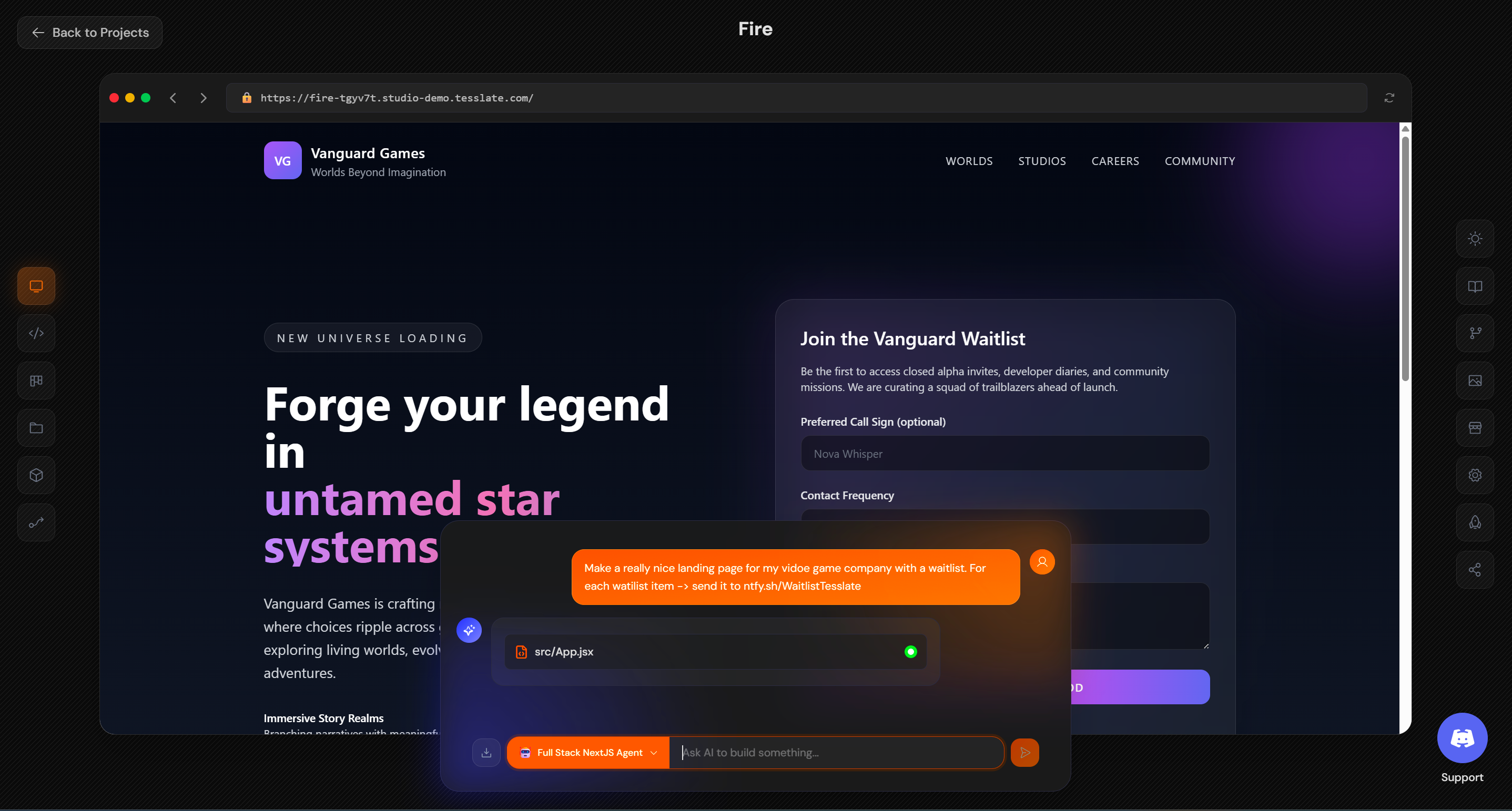

Tesslate Studio isn't just another code generation tool - it's a complete development platform architected from the ground up for self-hosting and data sovereignty:

- Run anywhere: Your machine, your cloud, your datacenter

- Container isolation: Each project runs in its own sandboxed Docker container

- Subdomain routing: Clean URLs (project.studio.localhost) for easy project access

- Data sovereignty: Your code never leaves your infrastructure

- Iterative Agents: Autonomous "think-act-reflect" loops that debug, research, and iterate independently

- Tool Registry: File operations (read/write/patch), persistent shell sessions, web fetch, planning tools

- Command Validation: Security sandboxing with allowlists, blocklists, and injection protection

- (Coming soon) Multi-agent orchestration: Built on the TframeX framework - agents collaborate across frontend, backend, and database concerns

- (Coming soon) Model Context Protocol (MCP): Inter-agent communication for complex task coordination

- JWT authentication with refresh token rotation and revocable sessions

- Encrypted credential storage using Fernet encryption for API keys and tokens

- Audit logging: Complete command history for compliance

- Container isolation: Projects run in isolated environments

- Command sanitization: AI-generated shell commands validated before execution

- Kanban project management: Built-in task tracking with priorities, assignees, and comments

- Architecture visualization: AI-generated Mermaid diagrams of your codebase

- Git integration: Full version control with commit history, branching, and GitHub push/pull

- Agent marketplace: Pluggable architecture - fork agents, swap models, customize prompts

- Database integration: PostgreSQL with migration scripts and schema management

- Tesslate Forge: Train, fine-tune, and deploy custom models as agents

- Open source agents: All 10 marketplace agents are forkable and modifiable

- Model flexibility: OpenAI, Anthropic, Google, local LLMs via Ollama/LM Studio

- Platform customization: Fork the entire platform for proprietary workflows
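The command validation mentioned above (allowlists, blocklists, and injection protection applied before an AI-generated shell command runs) can be sketched roughly like this. It is a minimal illustration under stated assumptions, not Tesslate Studio's actual validator: `validate_command`, the allowlist contents, and the blocked patterns are all hypothetical.

```python
import re
import shlex

# Hypothetical policy for illustration only; not Tesslate Studio's real allowlist/blocklist.
ALLOWED_COMMANDS = {"ls", "cat", "git", "npm", "node", "python", "pytest", "pip"}
BLOCKED_PATTERNS = [
    re.compile(r"\brm\s+-rf\s+/(\s|$)"),        # wiping the filesystem root
    re.compile(r"\bcurl\b.*\|\s*(sh|bash)\b"),  # piping a download straight into a shell
]
# Characters that let a command chain, pipe, redirect, or substitute other commands.
INJECTION_CHARS = re.compile(r"[;&|`$<>\n]")


def validate_command(command: str) -> tuple[bool, str]:
    """Return (allowed, reason) for an AI-generated shell command."""
    for pattern in BLOCKED_PATTERNS:
        if pattern.search(command):
            return False, f"blocked pattern: {pattern.pattern}"
    if INJECTION_CHARS.search(command):
        return False, "shell metacharacters are not allowed"
    try:
        argv = shlex.split(command)
    except ValueError as exc:  # e.g. an unterminated quote
        return False, f"unparseable command: {exc}"
    if not argv:
        return False, "empty command"
    if argv[0] not in ALLOWED_COMMANDS:
        return False, f"command not in allowlist: {argv[0]}"
    return True, "ok"


print(validate_command("npm run build"))  # allowed
print(validate_command("ls; rm -rf /"))   # rejected by the blocklist
```

The checks are layered: a command must clear the blocklist and the metacharacter filter before its first word is compared against the allowlist, so `ls; rm -rf /` is rejected even though `ls` on its own would pass.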

Built for:

- Developers who want complete control over their AI development environment

- Teams needing data privacy and on-premises deployment

- Regulated industries (healthcare, finance, government) requiring data sovereignty

- Organizations building AI-powered internal tools

- Engineers wanting to customize the platform itself

Get running in 3 steps, 3 minutes:

# 1. Clone and configure

git clone https://github.com/TesslateAI/Studio.git

cd Studio

cp .env.example .env

# 2. Add your API keys (OpenAI, Anthropic, etc.) to .env

# Edit .env: Set SECRET_KEY and LITELLM_MASTER_KEY

# 3. Start everything

docker compose up -d

That's it! Open http://studio.localhost

What's included:

- 10 AI agents ready to use

- 3 project templates pre-loaded

- Live preview with hot reload

- Authentication system ready

First time with Docker?

Install Docker:

- Windows/Mac: Docker Desktop

- Linux: curl -fsSL https://get.docker.com | sh

Generate secure keys:

# SECRET_KEY

python -c "import secrets; print(secrets.token_urlsafe(32))"

# LITELLM_MASTER_KEY

python -c "import secrets; print('sk-' + secrets.token_urlsafe(32))"

Natural language to full-stack applications. Describe what you want, then watch it build in real time with streaming responses.

Every project gets its own subdomain (your-app.studio.localhost) with hot module replacement. See changes instantly as AI writes code.
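In the actual deployment, Traefik performs this subdomain routing. The helper below only illustrates the mapping from an incoming `Host` header to a project slug; `project_from_host`, the slug rules, and the default domain are assumptions rather than Tesslate Studio's code.

```python
import re
from typing import Optional

APP_DOMAIN = "studio.localhost"  # assumed to match APP_DOMAIN in .env
# One DNS label: lowercase letters, digits, and inner hyphens, at most 63 characters.
LABEL_RE = re.compile(r"^[a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?$")


def project_from_host(host: str, app_domain: str = APP_DOMAIN) -> Optional[str]:
    """Hypothetical helper: map a Host header to a project slug, or None if it is not a project URL."""
    hostname = host.split(":", 1)[0].lower().rstrip(".")  # drop the port and any trailing dot
    suffix = "." + app_domain
    if not hostname.endswith(suffix):
        return None  # e.g. studio.localhost itself, or an unrelated host
    slug = hostname[: -len(suffix)]
    # Reject nested subdomains (a.b.studio.localhost) and labels DNS would not accept.
    if "." in slug or not LABEL_RE.match(slug):
        return None
    return slug
```

For example, a request for `todo-app.studio.localhost:80` maps to the slug `todo-app`, while `studio.localhost` itself maps to no project.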

10 pre-built, open-source agents: Stream Builder, Full Stack Agent, Code Analyzer, Test Generator, API Designer, and more. Fork them, swap models (GPT-5, Claude, local LLMs), edit prompts - it's your code.
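Forking an agent and swapping its model can be pictured as copying a configuration record and overriding a field. The `AgentConfig` schema, its field names, and the tool names below are hypothetical; the marketplace's real agent format is not described here. The model string `ollama/llama3` follows LiteLLM's `provider/model` naming for Ollama models.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AgentConfig:
    """Hypothetical agent definition; the marketplace's actual schema may differ."""

    name: str
    model: str  # any model name your LiteLLM gateway is configured to serve
    system_prompt: str
    tools: tuple[str, ...] = ()


def fork(agent: AgentConfig, **changes) -> AgentConfig:
    """Copy an agent, overriding only the given fields; the original stays unchanged."""
    return replace(agent, **changes)


builder = AgentConfig(
    name="Stream Builder",
    model="gpt-5",
    system_prompt="You build web apps from natural-language descriptions.",
    tools=("read_file", "write_file", "shell"),  # hypothetical tool names
)
# Same prompt and tools, but running on a local model served through Ollama.
local_builder = fork(builder, name="Stream Builder (local)", model="ollama/llama3")
```

Making the record immutable (`frozen=True`) means a fork can never accidentally change the agent it was copied from.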

Start fast with ready templates:

- Next.js 15 (App Router, SSR, API routes)

- Vite + React + FastAPI (Python backend)

- Vite + React + Go (high-performance backend)

Self-hosted architecture:

- One-command deployment: docker compose up -d

- Container per project: Isolated development environments

- PostgreSQL for persistent data

- Traefik ingress with subdomain routing

- JWT authentication, audit logging, secrets management
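Refresh token rotation makes every refresh token single-use: exchanging it returns a new one, and replaying an already-used token is treated as a sign of theft, so the whole session is revoked. The following is a minimal in-memory sketch that uses opaque random tokens instead of JWTs; `RefreshTokenStore` and its methods are hypothetical names, and Tesslate Studio's actual implementation and storage are not shown here.

```python
import hashlib
import secrets
from dataclasses import dataclass, field


def _digest(token: str) -> str:
    # Keep only a hash so a leaked store does not expose usable tokens.
    return hashlib.sha256(token.encode()).hexdigest()


@dataclass
class RefreshTokenStore:
    """Hypothetical in-memory store showing single-use refresh tokens and revocable sessions."""

    current: dict[str, str] = field(default_factory=dict)  # session id -> hash of its valid token
    used: dict[str, str] = field(default_factory=dict)     # hash of a rotated-out token -> session id
    revoked: set[str] = field(default_factory=set)         # revoked session ids

    def issue(self, session_id: str) -> str:
        token = secrets.token_urlsafe(32)
        self.current[session_id] = _digest(token)
        return token

    def rotate(self, session_id: str, presented: str) -> str:
        """Exchange a refresh token for a new one; the presented token stops working."""
        if session_id in self.revoked:
            raise PermissionError("session revoked")
        digest = _digest(presented)
        if self.current.get(session_id) != digest:
            if self.used.get(digest) == session_id:
                # A token that was already rotated out is being replayed: assume theft.
                self.revoke(session_id)
            raise PermissionError("invalid refresh token")
        self.used[digest] = session_id
        return self.issue(session_id)

    def revoke(self, session_id: str) -> None:
        """Log out a session: no refresh token for it will be accepted again."""
        self.revoked.add(session_id)
        self.current.pop(session_id, None)
```

In a complete setup, each successful `rotate` would also mint a short-lived JWT access token; that part is omitted to keep the rotation logic visible.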

Full VSCode-like editing experience in the browser. Syntax highlighting, IntelliSense, multi-file editing.

Your code never leaves your infrastructure. GitHub OAuth, encrypted secrets, comprehensive audit logs, role-based access control.

Why we built this:

We needed an AI development platform that could run on our own infrastructure without sacrificing data sovereignty or architectural control. Every existing solution required choosing between convenience and control - cloud platforms were fast but locked us in, while local tools lacked the sophistication we needed.

So we built Tesslate Studio as infrastructure-first: Docker for simple deployment, container isolation for project sandboxing, and enterprise security built-in. It's designed for developers and organizations that need the power of AI-assisted development while maintaining complete ownership of their code and data.

The name "Tesslate" comes from tessellation - the mathematical concept of tiles fitting together perfectly without gaps. That's our architecture: AI agents, human developers, isolated environments, and scalable infrastructure working together seamlessly.

Open source from the start: We believe critical development infrastructure should be transparent, auditable, and owned by the teams using it - not controlled by vendors who can change terms overnight.

Tesslate Studio creates isolated containerized environments for each project:

┌─────────────────────────────────────────────────────┐
│ Your Machine / Your Cloud / Your Datacenter         │
├─────────────────────────────────────────────────────┤
│                                                     │
│  ┌──────────────────────────────────────────┐       │
│  │ Tesslate Studio (You control this)       │       │
│  │                                          │       │
│  │ • FastAPI Orchestrator (Python)          │       │
│  │ • React Frontend (TypeScript)            │       │
│  │ • PostgreSQL Database                    │       │
│  │ • AI Agent Marketplace                   │       │
│  └───────────┬──────────────────────────────┘       │
│              │                                      │
│              ▼                                      │
│  ┌──────────────────────────────────────────┐       │
│  │ Project Containers (Isolated)            │       │
│  │                                          │       │
│  │   todo-app.studio.localhost              │       │
│  │   dashboard.studio.localhost             │       │
│  │   prototype.studio.localhost             │       │
│  └──────────────────────────────────────────┘       │
│                                                     │
│  ┌──────────────────────────────────────────┐       │
│  │ Your AI Models (You choose)              │       │
│  │                                          │       │
│  │ • OpenAI GPT-5 (API)                     │       │
│  │ • Anthropic Claude (API)                 │       │
│  │ • Local LLMs via Ollama                  │       │
│  │ • Or any LiteLLM-compatible provider     │       │
│  └──────────────────────────────────────────┘       │
└─────────────────────────────────────────────────────┘

Key Architecture Principles:

- Container-per-project - True isolation, no conflicts

- Subdomain routing - Clean URLs, easy project access

- Bring your own models - No vendor lock-in for AI

- Self-hosted - Complete infrastructure control

Prerequisites:

- Docker Desktop (Windows/Mac) or Docker Engine (Linux)

- 8GB RAM minimum (16GB recommended)

- OpenAI or Anthropic API key (or run local LLMs with Ollama)

Step 1: Clone the repository

git clone https://github.com/TesslateAI/Studio.git

cd Studio

Step 2: Configure environment

cp .env.example .env

Edit .env and set these required values:

# Generate with: python -c "import secrets; print(secrets.token_urlsafe(32))"

SECRET_KEY=your-generated-secret-key

# LiteLLM master key. Generate with: python -c "import secrets; print('sk-' + secrets.token_urlsafe(32))"

LITELLM_MASTER_KEY=sk-your-litellm-key

# AI provider API keys (at least one required)

OPENAI_API_KEY=sk-your-openai-key

ANTHROPIC_API_KEY=sk-your-anthropic-key

Step 3: Start Tesslate Studio

docker compose up -d

Step 4: Create your account

Open http://studio.localhost and sign up. The first user becomes admin automatically.

Step 5: Start building

- Click "New Project" → Choose a template

- Describe what you want in natural language

- Watch AI generate your app in real-time

- Open the live preview at {your-project}.studio.localhost

Full Docker (Recommended for most users)

docker compose up -d

Everything runs in containers. One command, fully isolated.

Hybrid Mode (Fastest for active development)

# Start infrastructure

docker compose up -d traefik postgres

# Run services natively (separate terminals)

cd orchestrator && uv run uvicorn app.main:app --reload

cd app && npm run dev

Native services for instant hot reload, Docker for infrastructure.

Tesslate uses LiteLLM as a unified gateway. This means you can use:

- OpenAI (GPT-5, GPT-4, GPT-3.5)

- Anthropic (Claude 3.5, Claude 3)

- Google (Gemini Pro)

- Local LLMs (Ollama, LocalAI)

- 100+ other providers
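Because LiteLLM exposes an OpenAI-compatible API, switching providers is mostly a matter of changing the `model` string. The sketch below only builds the HTTP request with the standard library. The proxy URL (`http://localhost:4000`, LiteLLM's usual default port) and the `build_chat_request` helper are assumptions; the address inside Tesslate's Docker network may differ.

```python
import json
import urllib.request

# Assumption: where the LiteLLM proxy is reachable; adjust for your deployment.
LITELLM_BASE_URL = "http://localhost:4000"


def build_chat_request(model: str, prompt: str, api_key: str,
                       base_url: str = LITELLM_BASE_URL) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request; only `model` changes per provider."""
    body = {
        "model": model,  # e.g. "claude-3-haiku", or a local Ollama model configured in LiteLLM
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


req = build_chat_request("claude-3-haiku", "Write a haiku about containers", "sk-your-litellm-key")
# Sending it requires a running proxy:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

Swapping `"claude-3-haiku"` for another configured model name is the only change needed to target a different provider through the gateway.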

Configure in .env:

# Default models

LITELLM_DEFAULT_MODELS=gpt-5-mini,claude-3-haiku,gemini-pro

# Per-user budget (USD)

LITELLM_INITIAL_BUDGET=10.0

Database in development: PostgreSQL runs in Docker automatically.

Database in production: Use a managed database:

DATABASE_URL=postgresql+asyncpg://user:pass@your-postgres:5432/tesslate

Domain for local development:

APP_DOMAIN=studio.localhost

Domain for production:

APP_DOMAIN=studio.yourcompany.com

APP_PROTOCOL=https

Projects will be accessible at {project}.studio.yourcompany.com
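Putting `APP_PROTOCOL` and `APP_DOMAIN` together, a project's preview URL is the project slug prepended to the domain. This is a hypothetical helper that assumes `http` when `APP_PROTOCOL` is not set (as in the local example) and that project names are reduced to DNS-safe labels; neither rule is confirmed by this README, and `slugify`/`project_url` are illustrative names.

```python
import re

# One DNS label: lowercase letters, digits, and inner hyphens, at most 63 characters.
LABEL_RE = re.compile(r"^[a-z0-9]([a-z0-9-]{0,61}[a-z0-9])?$")


def slugify(name: str) -> str:
    """Hypothetical naming rule: turn a project name into a DNS-safe label."""
    slug = re.sub(r"[^a-z0-9]+", "-", name.lower()).strip("-")
    return slug[:63].rstrip("-")


def project_url(slug: str, app_domain: str, app_protocol: str = "http") -> str:
    """Compose a project's preview URL from APP_PROTOCOL and APP_DOMAIN."""
    if app_protocol not in ("http", "https"):
        raise ValueError(f"unsupported APP_PROTOCOL: {app_protocol!r}")
    if not LABEL_RE.match(slug):
        raise ValueError(f"invalid project slug: {slug!r}")
    return f"{app_protocol}://{slug}.{app_domain}"


print(project_url(slugify("My Todo App"), "studio.yourcompany.com", "https"))
# https://my-todo-app.studio.yourcompany.com
```

Validating the slug matters because it becomes a DNS label: uppercase letters, underscores, or dots would produce hostnames that a wildcard certificate or router rule would not match.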

We'd love your help making Tesslate Studio better!

- Fork the repo and clone your fork

- Create a branch: git checkout -b feature/amazing-feature

- Make your changes and test locally

- Commit: git commit -m 'Add amazing feature'

- Push: git push origin feature/amazing-feature

- Open a Pull Request with a clear description

New to the project? Check out issues labeled good first issue.

# Clone your fork

git clone https://github.com/YOUR-USERNAME/Studio.git

cd Studio

# Start in hybrid mode (fastest for development)

docker compose up -d traefik postgres

cd orchestrator && uv run uvicorn app.main:app --reload

cd app && npm run dev

- Tests: Add tests for new features

- Docs: Update documentation if you change functionality

- Commits: Use clear, descriptive commit messages

- PRs: One feature per PR, keep them focused

Before submitting:

- Run tests: npm test (frontend), pytest (backend)

- Update docs if needed

- Test with docker compose up -d

Visit our complete documentation at docs.tesslate.com

- Self-Hosting Quickstart - Get running in 5 minutes

- Configuration Guide - All environment variables explained

- Production Deployment - Deploy with custom domains and SSL

- Architecture Overview - How everything works under the hood

- Development Setup - Contributor and developer guide

- API Documentation - Backend API reference

- Getting Started - Cloud version quickstart

- Working with Projects - Create and manage projects

- AI Agents Guide - Understanding and using AI agents

- FAQ - Frequently asked questions

We take security seriously. Found a vulnerability?

Please DO NOT open a public issue. Instead:

Email us: security@tesslate.com

We'll respond within 24 hours and work with you to address it.

Security features:

- JWT authentication with refresh tokens

- Encrypted secrets storage (GitHub tokens, API keys)

- Audit logging (who did what, when)

- Role-based access control (admin, user, viewer)

- Container isolation (projects can't access each other)

- HTTPS/TLS in production (automatic Let's Encrypt)
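Role-based access control and audit logging fit together naturally: each permission check can also emit a record of who did what, and when. The permission matrix below is hypothetical (only the three role names come from the list above), as are `is_allowed` and `authorize`; it sketches the idea rather than Tesslate Studio's implementation.

```python
import json
from datetime import datetime, timezone
from enum import Enum


class Role(str, Enum):
    ADMIN = "admin"
    USER = "user"
    VIEWER = "viewer"


# Hypothetical permission matrix; only the role names come from the list above.
PERMISSIONS: dict[Role, frozenset[str]] = {
    Role.VIEWER: frozenset({"project:read"}),
    Role.USER: frozenset({"project:read", "project:write", "agent:run"}),
    Role.ADMIN: frozenset({"project:read", "project:write", "agent:run", "user:manage"}),
}


def is_allowed(role: Role, action: str) -> bool:
    """Deny by default: anything not explicitly granted is refused."""
    return action in PERMISSIONS.get(role, frozenset())


def authorize(user: str, role: Role, action: str, audit_log: list[str]) -> bool:
    """Check a permission and append a JSON audit record (who, what, when, outcome)."""
    allowed = is_allowed(role, action)
    audit_log.append(json.dumps({
        "who": user,
        "role": role.value,
        "action": action,
        "allowed": allowed,
        "when": datetime.now(timezone.utc).isoformat(),
    }))
    return allowed
```

Logging denied attempts as well as granted ones is what makes the trail useful for compliance reviews.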

Tesslate Studio is Apache 2.0 licensed. See LICENSE.

What this means:

- Commercial use - Build paid products with it

- Modification - Fork and customize freely

- Distribution - Share your modifications

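- Patent grant - Contributors grant you a license to any of their patents that cover their contributions

- Trademark - The "Tesslate" name is reserved and not covered by the license

- Liability - Provided "as is", without warranty (standard for open source)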

This project uses open-source software. Full attributions in THIRD-PARTY-NOTICES.md.

Coming soon:

- Multi Multi Agent ;)

- Local to Cloud Agent marketplace

- Two Way Git Sync

- Plugin system for custom integrations

Have an idea? Open a feature request

Q: Do I need to pay for OpenAI/Claude API?

A: You bring your own API keys. Tesslate Studio doesn't charge for AI - you pay your provider directly (usually pennies per request). You can also use free local models via Ollama.

Q: Can I use this commercially?

A: Yes! Apache 2.0 license allows commercial use. Build SaaS products, internal tools, whatever you want.

Q: Is my code/data sent to Tesslate's servers?

A: No. Tesslate Studio is self-hosted - everything runs on YOUR infrastructure. We never see your code or data.

Q: Can I modify the AI agents?

A: Absolutely! All 10 agents are open source. Fork them, edit prompts, swap models (GPT → Claude → local LLM), or create entirely new agents.

Q: Can I run this without Docker?

A: While Docker is recommended, you can run services natively. You'll need to set up PostgreSQL and Traefik yourself and configure networking manually.

Q: What hardware do I need?

A: Minimum 8GB RAM, 16GB recommended. Works on Windows, Mac, and Linux. An internet connection is needed for AI API calls (unless using local models).

- Documentation - Comprehensive guides

- GitHub Discussions - Ask questions, share ideas

- Issues - Report bugs, request features

- Email - Direct support (response within 24h)

- Star this repo to get notified of updates

- Watch releases for new versions

- Follow on Twitter/X - News and tips

Contributions are welcome and encouraged! See our Development Guide for setup instructions and contribution guidelines.


Tesslate Studio wouldn't exist without these amazing open-source projects:

- FastAPI - Modern Python web framework

- React - UI library

- Vite - Lightning-fast build tool

- Monaco Editor - VSCode's editor

- LiteLLM - Unified AI gateway

- Traefik - Cloud-native proxy

- PostgreSQL - Reliable database

Built by developers who believe critical infrastructure should be open