This repo compares different data loading methods for TensorFlow (tf.data, Keras Sequence, pure Python generator) and provides a performance benchmark.

This is still ongoing work. If you spot an error or a possible improvement, open an issue.

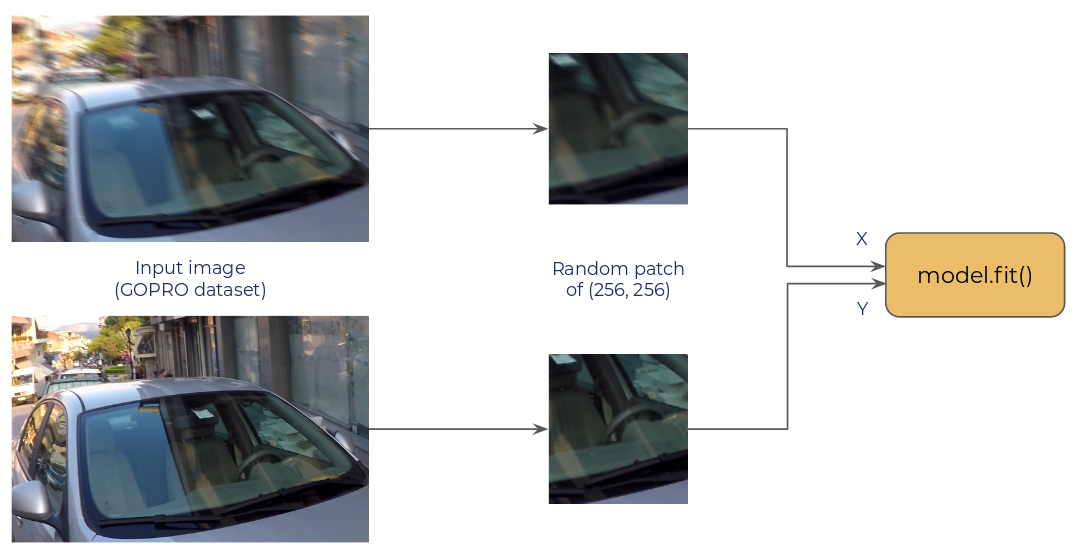

The target task is deblurring: for each input image, two random crops are passed to the model for training.

Training time is measured on an RTX 2080 (CUDA 10.0, tensorflow-gpu==2.0.0) with the following settings:

- num_epochs: 5

- steps_per_epoch: 200

- batch_size: 4

- patch_size: (256, 256)

The dataset used is the GOPRO Dataset.

Results for the different loaders are shown below. The differences between the loaders are explained after the table.

| Loaders | Eager Mode Enabled (s) | Eager Mode Disabled (s) |
|---|---|---|
| BasicPythonGenerator | 410 | 71 |
| BasicTFDataLoader | 184 | 47 |
| TFDataNumParallelCalls | 110 | 46 |
| TFDataPrefetch | 106 | 46 |
| TFDataGroupedMap | 103 | 46 |
| TFDataCache | 95 | 46 |
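To make the table easier to read as relative gains, the timings can be turned into speedup factors over the `BasicPythonGenerator` baseline. The snippet below is only a small arithmetic sketch over the numbers reported above; the `speedups` helper and the `TIMINGS` dictionary are illustrative names, not part of the repo.

```python
# Timings copied from the results table: (eager enabled, eager disabled), in seconds.
TIMINGS = {
    "BasicPythonGenerator": (410, 71),
    "BasicTFDataLoader": (184, 47),
    "TFDataNumParallelCalls": (110, 46),
    "TFDataPrefetch": (106, 46),
    "TFDataGroupedMap": (103, 46),
    "TFDataCache": (95, 46),
}


def speedups(timings, baseline="BasicPythonGenerator"):
    """Return {loader: (eager speedup, graph speedup)} relative to the baseline."""
    base_eager, base_graph = timings[baseline]
    return {
        name: (round(base_eager / eager, 2), round(base_graph / graph, 2))
        for name, (eager, graph) in timings.items()
    }


if __name__ == "__main__":
    for name, (eager_gain, graph_gain) in speedups(TIMINGS).items():
        print(f"{name:<24} eager x{eager_gain:<5} graph x{graph_gain}")
```

With eager mode enabled, `TFDataCache` comes out roughly 4.3 times faster than the Python generator; with eager mode disabled the gap narrows to about 1.5 times, and all the `tf.data` variants land within a second of each other.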

- `BasicPythonGenerator`:
  - Implement a simple `yield`
  - Operations to load images use `tf.io` and `tf.image`
- `BasicTFDataLoader`:
  - Use the `tf.data` API
  - Perform operations with `tf.io` and `tf.image`
- `TFDataNumParallelCalls`:
  - Add `num_parallel_calls=tf.data.experimental.AUTOTUNE` to each `.map` operation
- `TFDataPrefetch`:
  - Add prefetching to the dataset with `dataset = dataset.prefetch(tf.data.experimental.AUTOTUNE)`
- `TFDataGroupedMap`:
  - Group all `tf.io` and `tf.image` operations to avoid spawning too many processes
- `TFDataCache`:
  - Cache the dataset before selecting random crops with `dataset.cache()`
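The loaders described above build on one another, so the last one (`TFDataCache`) can be sketched as a single pipeline. This is a minimal sketch, not the repo's actual code. It assumes paired sharp and blurred PNG images of identical size, cropped at the same location; the function names (`load_pair`, `random_crop_pair`, `build_dataset`), the stacking trick used to align the two crops, and the `.repeat()` call are all assumptions made for illustration.

```python
import tensorflow as tf

AUTOTUNE = tf.data.experimental.AUTOTUNE


def load_image(path):
    """Read one PNG file and convert it to float32 in [0, 1]."""
    raw = tf.io.read_file(path)
    image = tf.image.decode_png(raw, channels=3)
    return tf.image.convert_image_dtype(image, tf.float32)


def load_pair(sharp_path, blur_path):
    """Grouped map: all tf.io and tf.image loading ops live in one function."""
    return load_image(sharp_path), load_image(blur_path)


def random_crop_pair(sharp, blur, patch_size):
    """Crop both images at the same random location (assumes equal image sizes)."""
    stacked = tf.stack([sharp, blur], axis=0)
    cropped = tf.image.random_crop(
        stacked, size=[2, patch_size[0], patch_size[1], 3]
    )
    return cropped[0], cropped[1]


def build_dataset(sharp_paths, blur_paths, batch_size=4, patch_size=(256, 256)):
    dataset = tf.data.Dataset.from_tensor_slices((sharp_paths, blur_paths))
    # Decode images in parallel with a single grouped map.
    dataset = dataset.map(load_pair, num_parallel_calls=AUTOTUNE)
    # Cache decoded images BEFORE the random crop, so crops differ on every pass.
    dataset = dataset.cache()
    # Assumption: repeat so steps_per_epoch can exceed the number of images.
    dataset = dataset.repeat()
    dataset = dataset.map(
        lambda sharp, blur: random_crop_pair(sharp, blur, patch_size),
        num_parallel_calls=AUTOTUNE,
    )
    dataset = dataset.batch(batch_size)
    # Overlap data preparation with training.
    return dataset.prefetch(AUTOTUNE)
```

Placing `cache()` after the crop instead would freeze the first set of crops and replay them on every pass, which defeats the augmentation. Note that an in-memory cache of full decoded images can be large, so the cache placement is a trade-off between memory and decoding time.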

- Check for drops in GPU utilization with `nvidia-smi` and TensorBoard profiling
- Add `num_parallel_calls=tf.data.experimental.AUTOTUNE` for optimal parallelization
- Group `.map()` operations to avoid spawning too many processes
- Cache your dataset at the right time (before data augmentation)
- Disable eager mode with `tf.compat.v1.disable_eager_execution()` once you're sure of your training pipeline
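As a small illustration of the last point, eager mode can be toggled once at program start, before any model or dataset is built. The `configure_execution` helper below is a hypothetical name used for this sketch; only `tf.compat.v1.disable_eager_execution()` and `tf.executing_eagerly()` are TensorFlow calls.

```python
import tensorflow as tf


def configure_execution(enable_eager):
    """Disable eager execution if requested; return the resulting mode.

    TensorFlow 2.x runs eagerly by default, so nothing is done when
    enable_eager is True. Call this before building any model or dataset.
    """
    if not enable_eager:
        tf.compat.v1.disable_eager_execution()
    return tf.executing_eagerly()
```

Keeping eager mode on while developing makes debugging easier, since tensors can be inspected directly; switching it off afterwards is what yields the shorter timings in the right-hand column of the results table.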

- Create a virtual environment

- Download the GOPRO Dataset

`python datasets_comparison.py --epochs 5 --steps_per_epoch 200 --batch_size 4 --dataset_path /path/to/gopro/train --n_images 600 --enable_eager True`

`python run_keras_sequence.py --epochs 5 --steps_per_epoch 200 --batch_size 4 --dataset_path /path/to/gopro/train --n_images 600 --enable_eager True`