Work-in-progress repository that implements multi-class text classification with a CNN (Convolutional Neural Network) for a Deep Learning university exam, using PyTorch 1.3, TorchText 0.5 and Python 3.7. It also integrates TensorboardX, a module that lets PyTorch log to TensorBoard, TensorFlow's web server for visualizing the training progress of a neural network. With TensorboardX we can visualize embeddings, PR curves and Loss/Accuracy curves.

I started this project by following this awesome tutorial, which shows how to perform sentiment analysis with PyTorch. The convolutional approach to sentence classification takes inspiration from Yoon Kim's paper.

The goal of this particular application of convolutional neural networks to text classification is to categorize Italian Math/Calculus exercises into 7 different classes, using a small but balanced dataset. For example, given a 4D optimization Calculus exercise as input, the NN should categorize it as a 4D optimization problem.

- Export datasets with a folder hierarchy (e.g. test/label_1/point.txt) from txt to JSON files (with TEXT and LABEL as fields)

- Import custom datasets

- Train, evaluate and save the model's state

- Make a prediction from the user's input

- Print info about the dataset and the model

- Test NLPAug (NLP Data Augmentation)

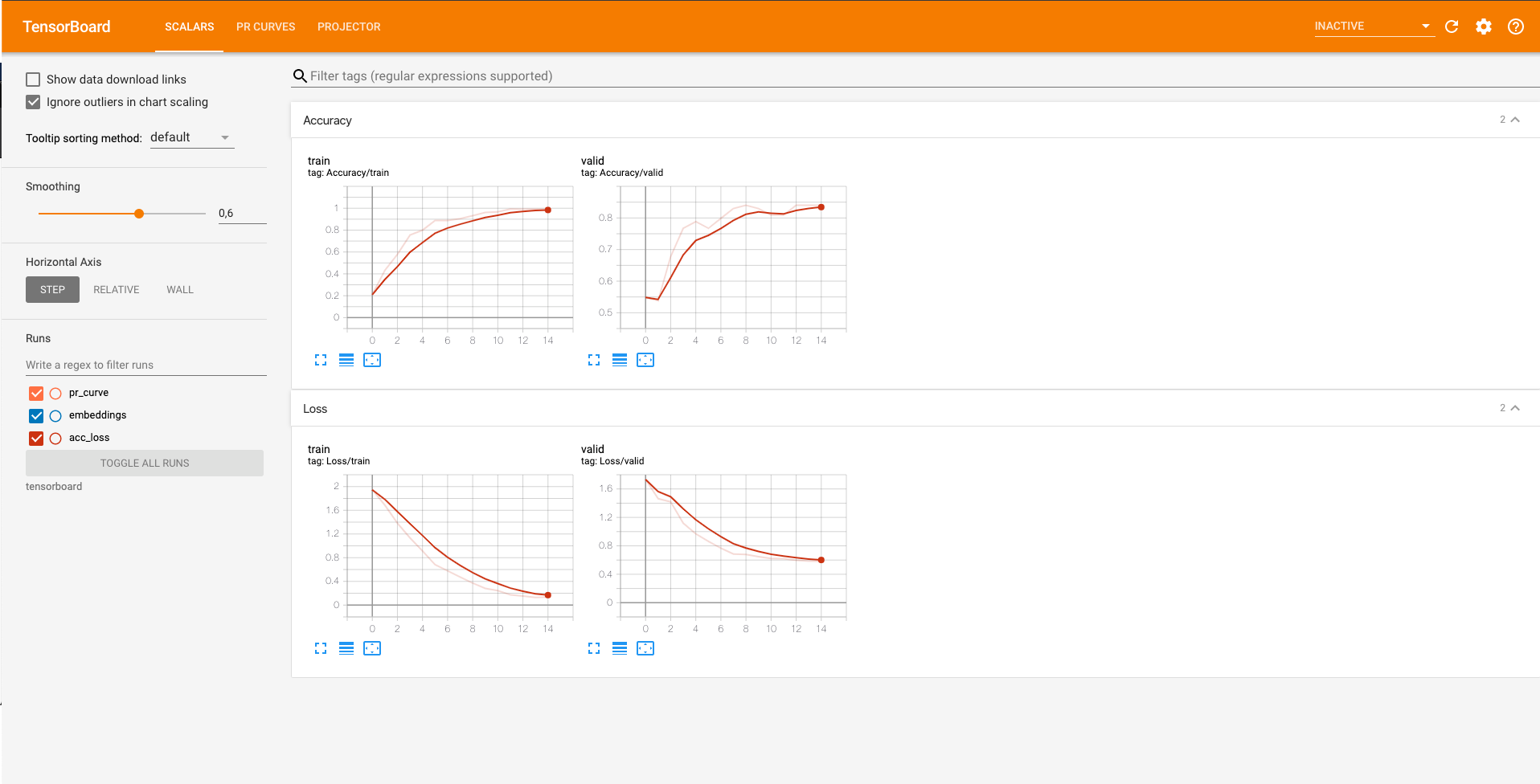

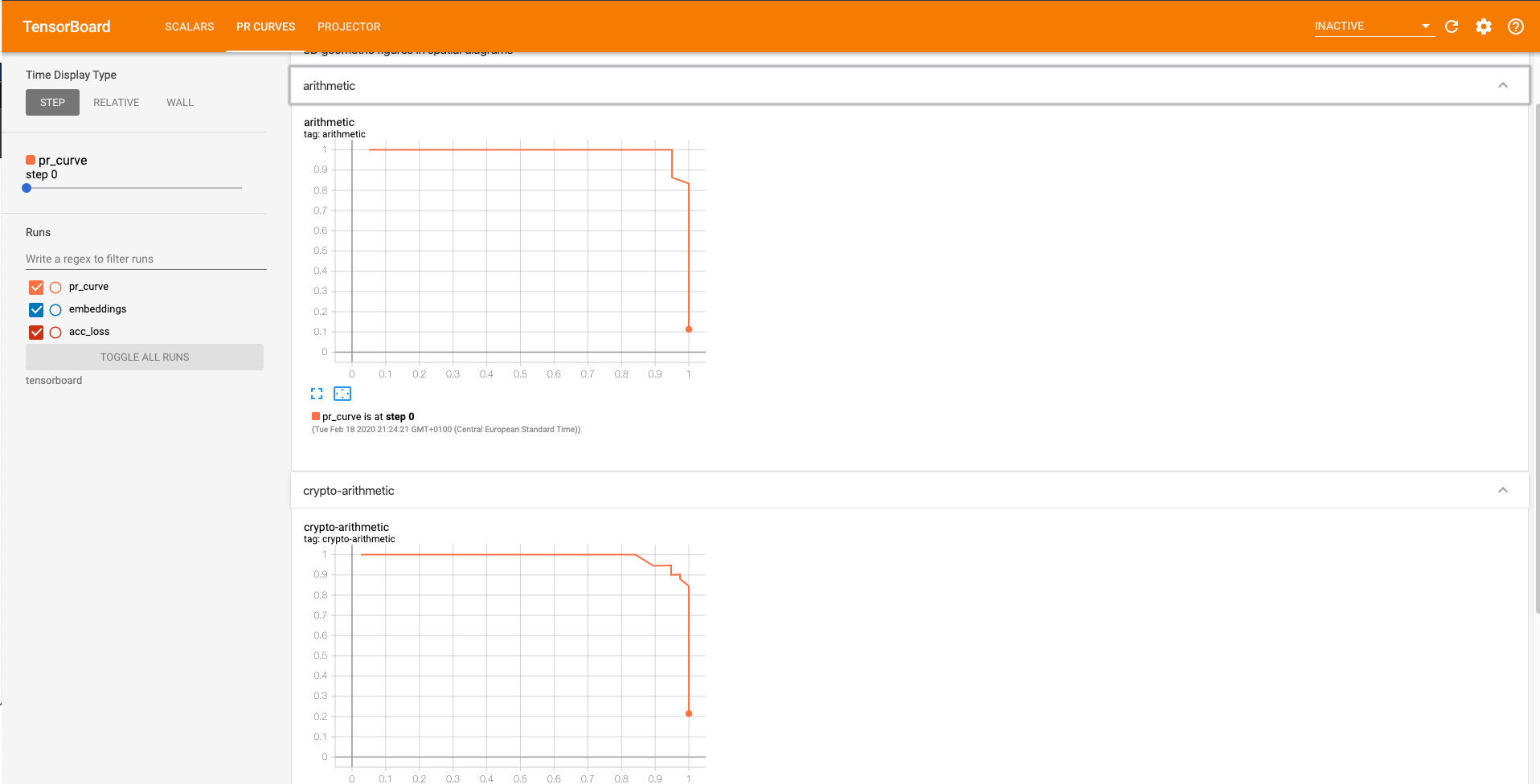

- Plot Accuracy, Loss and PR curves - TensorboardX

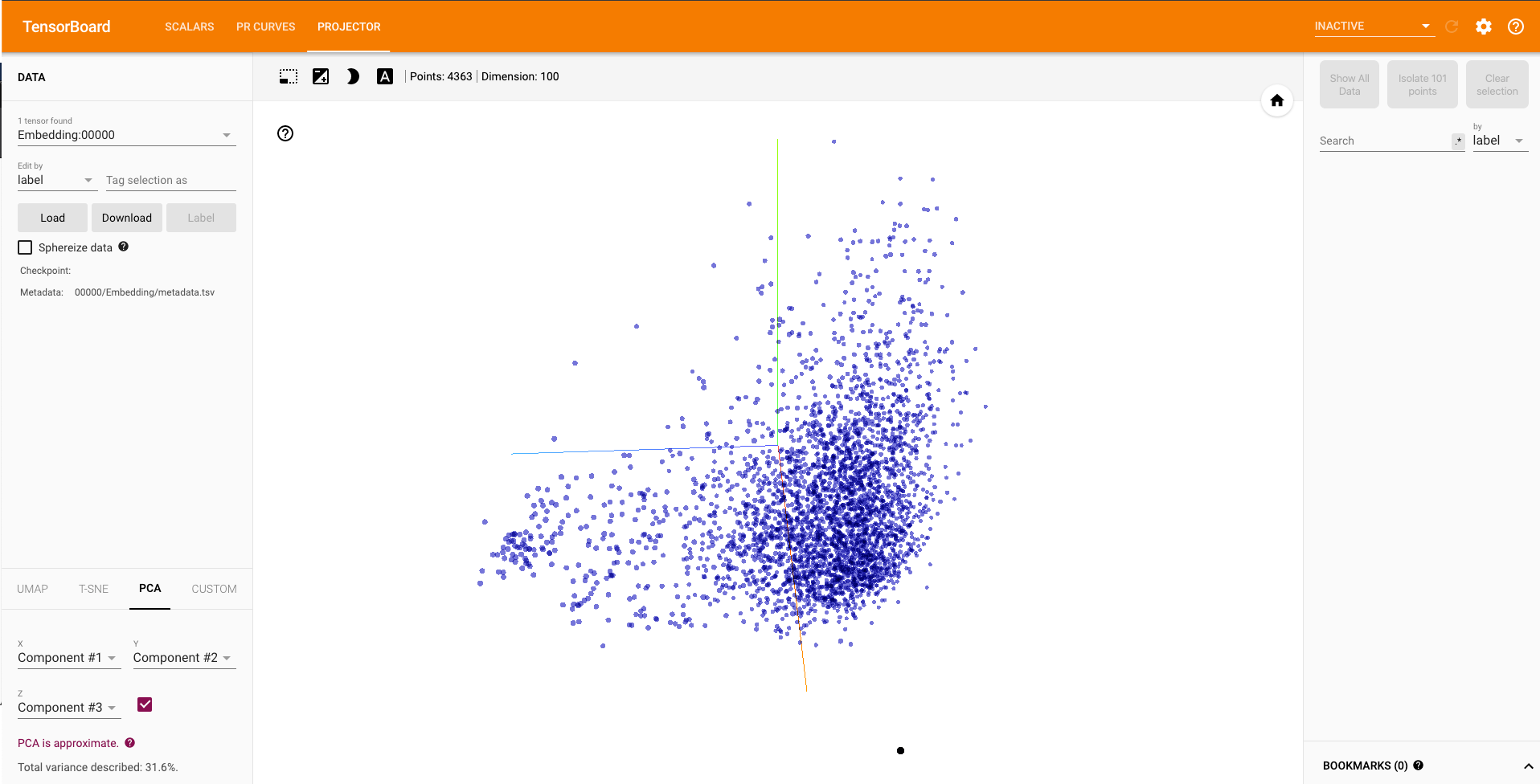

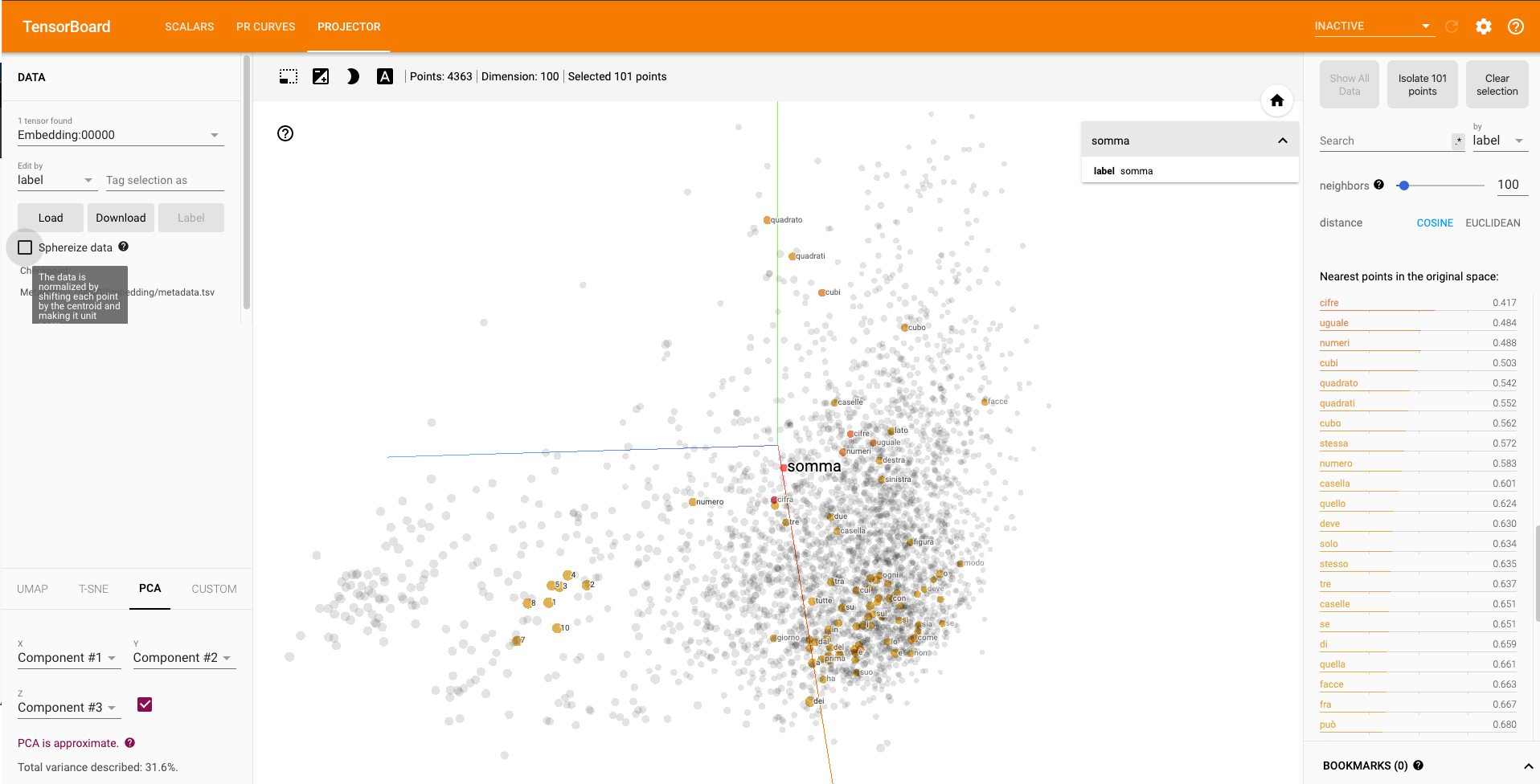

- Visualize the embedding space projection - TensorboardX

Embedding dimension: 100

Number of filters: 400

Vocabulary size: 4363

Filter sizes: [2, 3, 4]

Batch size: 32

Categories:

- 3D geometric figures in spatial diagrams

- arithmetic

- crypto-arithmetic

- numbers in spatial diagrams

- temporal reasoning

- spatial reasoning

- geometric figures in spatial diagrams OR puzzle

| Layer (type) | Output Shape | Param # |
|---|---|---|
| Embedding-1 | [-1, 32, 100] | 436,300 |
| Conv2d-2 | [-1, 400, 31, 1] | 80,400 |
| Conv2d-3 | [-1, 400, 30, 1] | 120,400 |
| Conv2d-4 | [-1, 400, 29, 1] | 160,400 |
| Dropout-5 | [-1, 1200] | 0 |
| Linear-6 | [-1, 7] | 8,407 |

Total params: 805,907

Trainable params: 805,907

Non-trainable params: 0
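
The parameter counts and output shapes in the summary can be re-derived from the hyperparameters listed earlier. The following is a minimal sketch in plain Python (no PyTorch needed). It assumes each convolution has a single input channel, a kernel spanning the full embedding width, stride 1 and no padding, and that the summary was generated with a 32-token input, as the Embedding output shape suggests. The helper names are illustrative and are not taken from the repository.

```python
# Hyperparameters as listed in this README.
VOCAB_SIZE = 4363
EMBEDDING_DIM = 100
N_FILTERS = 400
FILTER_SIZES = [2, 3, 4]
N_CLASSES = 7
SEQ_LEN = 32  # assumption: read off the Embedding output shape [-1, 32, 100]


def conv_params(filter_size):
    # One input channel, kernel of shape (filter_size, EMBEDDING_DIM),
    # plus one bias term per filter.
    return N_FILTERS * (1 * filter_size * EMBEDDING_DIM) + N_FILTERS


def conv_output_len(filter_size):
    # Assumes stride 1 and no padding.
    return SEQ_LEN - filter_size + 1


embedding = VOCAB_SIZE * EMBEDDING_DIM
convs = [conv_params(fs) for fs in FILTER_SIZES]
# After max-pooling over time, the pooled filters are concatenated.
linear_in = N_FILTERS * len(FILTER_SIZES)
linear = linear_in * N_CLASSES + N_CLASSES  # weights + biases
total = embedding + sum(convs) + linear
output_lens = [conv_output_len(fs) for fs in FILTER_SIZES]

print(embedding, convs, linear_in, linear, total)
print(output_lens)
```

Under these assumptions the numbers match the summary: 436,300 for the embedding, 80,400 / 120,400 / 160,400 for the three convolutions, 8,407 for the linear layer and 805,907 in total, with convolution output lengths of 31, 30 and 29.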

Best Test Loss achieved: 0.654

Best Test Accuracy achieved: 83.33%

To install PyTorch, see installation instructions on the PyTorch website.

Install TorchText, Inquirer, TorchSummary* and TensorboardX:

pip install inquirer

pip install tensorboardX

pip install torchsummary

pip install torchtext

SpaCy is required to tokenize our data. To install spaCy, follow the instructions here, making sure to install the Italian (or other language) model with:

python -m spacy download it_core_news_sm

I've tried two different Italian embeddings, used to build the vocab and load the pre-trained word embeddings. You can download them here:

- Human Language Technologies - CNR

- [Suggested] Italian CoNLL17 corpus (filtering by language)

You should extract one of them to the vector_cache folder and load it from dataset.py. For example:

vectors = vocab.Vectors(name='model.txt', cache='vector_cache/word2vec_CoNLL17')

I'd suggest using Word2Vec models anyway, as I've found them easier to integrate with libraries such as nlpaug (Data Augmentation for NLP).
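
Before pointing dataset.py at a downloaded file, it can help to check that it parses and has the expected dimension. The sketch below assumes that model.txt is in the word2vec text format (a "count dim" header line followed by one "token v1 ... vdim" row per line). The function read_word2vec_text is a hypothetical helper written for this illustration and is not part of the repository or of TorchText. It runs on a tiny in-memory sample so that it is self-contained.

```python
import io


def read_word2vec_text(handle, limit=None):
    """Parse word2vec text format: a 'count dim' header, then one vector per line."""
    header = handle.readline().split()
    count, dim = int(header[0]), int(header[1])
    vectors = {}
    for line in handle:
        parts = line.rstrip().split(" ")
        if len(parts) != dim + 1:
            continue  # skip blank or malformed rows
        vectors[parts[0]] = [float(x) for x in parts[1:]]
        if limit is not None and len(vectors) >= limit:
            break  # only read the first `limit` vectors of a large file
    return count, dim, vectors


# Tiny made-up sample standing in for a real embedding file.
sample = io.StringIO(
    "3 4\n"
    "esercizio 0.1 0.2 0.3 0.4\n"
    "calcolo 0.5 0.6 0.7 0.8\n"
    "funzione -0.1 0.0 0.1 0.2\n"
)
count, dim, vectors = read_word2vec_text(sample)
print(count, dim, sorted(vectors))
```

For a real file, replace the in-memory sample with something like `open('vector_cache/word2vec_CoNLL17/model.txt', encoding='utf-8')` and pass a small `limit`. The reported dimension should match the embedding dimension used by the model.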

After performing any TensorboardX-related operation, remember to run:

tensorboard --logdir=tensorboard

\* Due to a Torchsummary issue with embeddings, you should change the dtype from FloatTensor to LongTensor in its source file in order to get a Keras-like summary of the model.

This project works on a custom private dataset. You can import your own .txt dataset by adopting the following folder layout:

/ezmath/

...

...

|-- dataset_folder

| |-- test

| |-- train

| |-- validation

| | |-- whatever_label_1

| | |-- whatever_label_2

| | |-- ...

| | |-- ...

| | |-- whatever_label_X

| | | |-- whatever_1.txt

| | | |-- whatever_2.txt

| | | |-- ...

| | | |-- ...

| | | |-- whatever_Y.txt

load_dataset() will create a data folder with three JSON files: test.json, train.json and validation.json.

Every JSON file will contain Y entries. Every entry will have 2 fields: text and label.
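
The export step described above can be sketched with the standard library alone. This is not the project's load_dataset(): export_split is a hypothetical helper, whitespace splitting stands in for the spaCy tokenizer, and writing one JSON object per line is an assumption about the file layout. The demo builds a throwaway dataset in a temporary directory so that it runs anywhere.

```python
import json
import tempfile
from pathlib import Path

SPLITS = ("train", "validation", "test")


def export_split(dataset_dir, split, out_dir, tokenize=str.split):
    """Write <out_dir>/<split>.json with one {"text": [...], "label": ...} object per line."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    n = 0
    with (out_dir / f"{split}.json").open("w", encoding="utf-8") as out:
        split_dir = Path(dataset_dir) / split
        # Each sub-folder name is used as the label of the .txt files inside it.
        for label_dir in sorted(p for p in split_dir.iterdir() if p.is_dir()):
            for txt in sorted(label_dir.glob("*.txt")):
                tokens = tokenize(txt.read_text(encoding="utf-8"))
                entry = {"text": tokens, "label": label_dir.name}
                out.write(json.dumps(entry, ensure_ascii=False) + "\n")
                n += 1
    return n


# Tiny self-contained demo in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp) / "dataset_folder"
    for split in SPLITS:
        label_dir = root / split / "whatever_label_X"
        label_dir.mkdir(parents=True)
        (label_dir / "whatever_1.txt").write_text("This was a .txt", encoding="utf-8")
    data_dir = Path(tmp) / "data"
    counts = {s: export_split(root, s, data_dir) for s in SPLITS}
    train_lines = (data_dir / "train.json").read_text(encoding="utf-8").splitlines()
    first_entry = json.loads(train_lines[0])

print(counts)
print(first_entry)
```

Swapping `tokenize` for a spaCy-based tokenizer would bring the sketch closer to what the project does with its Italian model.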

For example:

{"text": ["This", "was", "a", ".txt"], "label": "whatever_label_X"}

- http://anie.me/On-Torchtext/

- https://github.com/lanpa/tensorboardX

- https://radimrehurek.com/gensim/models/word2vec.html#module-gensim.models.word2vec

- https://stats.stackexchange.com/questions/164876/tradeoff-batch-size-vs-number-of-iterations-to-train-a-neural-network

- https://magmax.org/python-inquirer/examples.html

- https://pytorch.org/docs/stable/tensorboard.html?highlight=tensorboard

- http://www.erogol.com/use-tensorboard-pytorch/

- https://github.com/sksq96/pytorch-summary

- https://arxiv.org/abs/1408.5882