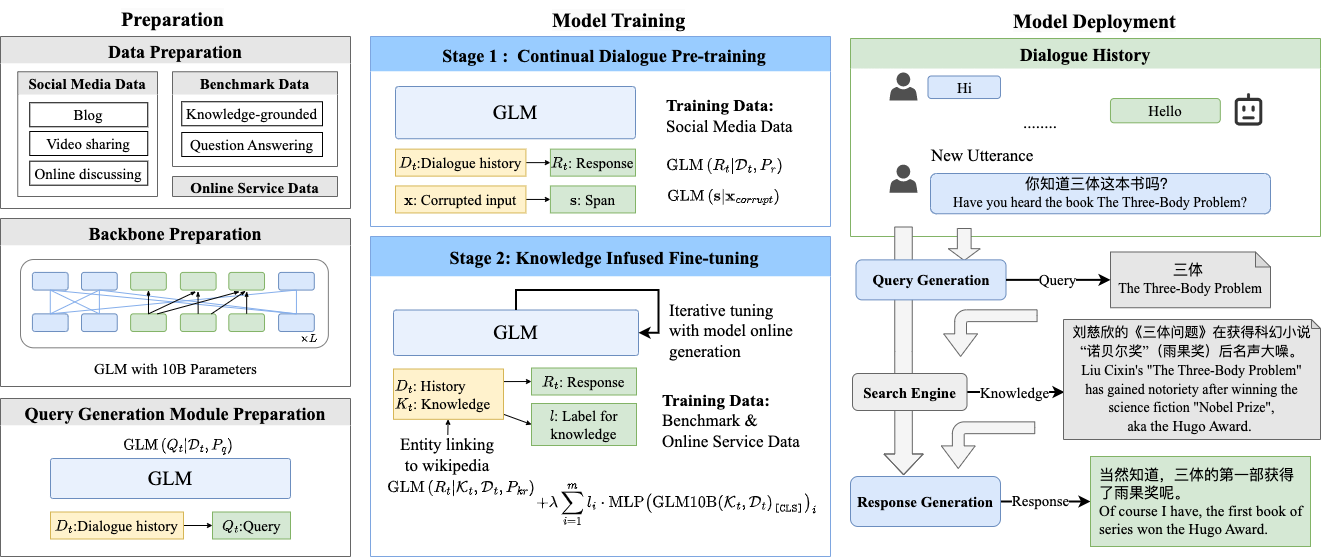

GLM-Dialog is a large language model (LLM) with 10B parameters that supports knowledge-grounded conversation in Chinese, using a search engine to access knowledge from the Internet. It is obtained by fine-tuning GLM10B, an open-source, pre-trained Chinese LLM with 10B parameters. GLM-Dialog offers:

- a set of practical techniques for exploiting external knowledge, both helpful and noisy, enabling robust knowledge-grounded dialogue LLMs to be built from limited suitable datasets;

- a novel evaluation platform for comparing dialogue models in real-world applications;

- a large-scale, open-source dialogue model for building downstream dialogue services;

- an easy-to-use toolkit that includes short-text entity linking, query generation, and helpful knowledge classification, plus an online service on the WeChat platform for convenient use and hands-on experience.
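The query generation and helpful knowledge classification tools fit into a retrieve-then-generate loop: the query generation module turns the dialogue history into a search query, a search engine returns knowledge snippets, and the classifier decides whether that knowledge should be used before a response is generated. The sketch below shows only this control flow, under stated assumptions. Every name in it (`respond`, `generate_query`, `search`, `is_helpful`, `generate_response`) is hypothetical and is not the repository's API, and it assumes the classifier judges each snippet independently.

```python
from typing import Callable, List

# Hypothetical signatures for the four stages; none of these names come
# from the GLM-Dialog codebase.
QueryGenerator = Callable[[List[str]], str]
SearchEngine = Callable[[str], List[str]]
HelpfulnessClassifier = Callable[[List[str], str], bool]
ResponseGenerator = Callable[[List[str], List[str]], str]


def respond(
    history: List[str],
    generate_query: QueryGenerator,
    search: SearchEngine,
    is_helpful: HelpfulnessClassifier,
    generate_response: ResponseGenerator,
) -> str:
    """Run one knowledge-grounded dialogue turn (illustrative sketch)."""
    # 1. Query generation: dialogue history -> search query.
    query = generate_query(history)
    # 2. Retrieval: search query -> candidate knowledge snippets.
    snippets = search(query)
    # 3. Helpful knowledge classification (assumed per snippet): keep only
    #    snippets judged helpful so noisy knowledge is filtered out.
    knowledge = [s for s in snippets if is_helpful(history, s)]
    # 4. Generation: an empty `knowledge` list means the reply is produced
    #    without external knowledge.
    return generate_response(history, knowledge)


# Toy stubs standing in for the real models and search engine.
reply = respond(
    ["Who wrote Dream of the Red Chamber?"],
    generate_query=lambda h: h[-1],
    search=lambda q: ["Cao Xueqin wrote Dream of the Red Chamber.", "Unrelated ad."],
    is_helpful=lambda h, s: "Cao Xueqin" in s,
    generate_response=lambda h, k: k[0] if k else "I'm not sure.",
)
print(reply)
```

Passing the stages in as functions keeps the control flow testable without loading a 10B model; in a real deployment each stub would be replaced by the corresponding model or search service.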

We provide two ways to configure the environment: a Dockerfile or manual installation. First, clone the repository:

```bash
git clone https://github.com/RUCKBReasoning/GLM-dialog
cd GLM-dialog
```

Docker Image: We provide a Docker image based on CUDA 11.2, defined in `docker/cuda112.dockerfile`. Build and run it with:

```bash
docker build -f cuda112.dockerfile . -t dailglm-cuda112
docker run --gpus all --rm -it --ipc=host dailglm-cuda112
```

Manual Installation: First install PyTorch (we use 1.9.0) and apex, then install the remaining dependencies:

```bash
pip install -r requirements.txt
```

We currently do not support multi-GPU inference. Loading the 10B model in FP16 mode requires at least 19GB of GPU memory, so please make sure you have a GPU with 24GB of memory or more, such as the RTX 3090.
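The memory figure follows from simple arithmetic. FP16 stores each parameter in 2 bytes, so 10 × 10^9 parameters need 2 × 10^10 bytes for the weights alone: 20 GB in decimal units, or about 18.6 GiB, consistent with the ~19GB minimum stated above. Activations and the CUDA context come on top of that, which is presumably why a 24GB card is recommended. The helper below is a minimal sketch of this estimate; the round count of 10 × 10^9 parameters is an assumption, since the exact parameter count of GLM10B is not given here.

```python
# Bytes used to store one parameter in each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2}


def weight_memory_gib(num_params: float, dtype: str = "fp16") -> float:
    """Memory needed to hold the model weights alone, in GiB (2**30 bytes).

    Ignores activations, optimizer state, and CUDA context overhead.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


# Assumption: a round 10e9 parameters for GLM10B.
print(f"{weight_memory_gib(10e9):.1f} GiB")  # -> 18.6 GiB
```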

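The trained checkpoint for our final model can be downloaded from google_drive or pan. Move the archive into `model_ckpt` and unzip it:

```bash
mkdir -p model_ckpt && mv glm-dialog.zip model_ckpt/ && cd model_ckpt && unzip glm-dialog.zip
```

Then, from the repository root, run the deployment script:

```bash
cd infer && python deploy.py
```

See train.md for training instructions.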

Toolkit:

- **HOSMEL**: a hot-swappable, modularized entity linking toolkit for short Chinese text.

- **Query generation**: takes the dialogue history as input and generates an appropriate search query, which is passed to an online search engine to retrieve dialogue-relevant knowledge snippets.

  ```bash
  cd tools && bash query_generation.sh
  ```

- **Helpful knowledge classifier**: decides whether or not to use the retrieved external knowledge.

  ```bash
  cd tools && bash knowledge_classification.sh
  ```

Under traditional human evaluation, our model (listed as DialGLM10B in the tables below) achieves the best score on most metrics, while PLATO-2 has the lowest Hallucination score in all four settings.

Human evaluation on 50 chit-chat self-chat dialogues:

| Model | Coherence | Informativeness | Safety | Inspiration | Hallucination | Engagingness | Faithfulness |
|---|---|---|---|---|---|---|---|
| CDial-GPT | 0.860 | 0.851 | 0.913 | 0.515 | 0.291 | 0.500 | 0.473 |
| PLATO-2 | 1.455 | 1.438 | 1.448 | 1.129 | 0.062 | 1.260 | 1.220 |
| EVA2.0 | 1.386 | 1.336 | 1.362 | 0.902 | 0.068 | 1.213 | 1.093 |
| GLM10B | 1.371 | 1.296 | 1.539 | 0.932 | 0.130 | 1.187 | 1.160 |
| DialGLM10B | 1.515 | 1.517 | 1.656 | 1.171 | 0.098 | 1.383 | 1.383 |

Human evaluation on 100 knowledge-grounded self-chat dialogues:

| Model | Coherence | Informativeness | Safety | Inspiration | Hallucination | Engagingness | Faithfulness |
|---|---|---|---|---|---|---|---|
| CDial-GPT | 1.140 | 1.069 | 1.478 | 0.591 | 0.221 | 0.603 | 0.690 |
| PLATO-2 | 1.698 | 1.614 | 1.793 | 1.090 | 0.032 | 1.420 | 1.413 |
| EVA2.0 | 1.488 | 1.413 | 1.674 | 0.832 | 0.089 | 1.230 | 1.223 |
| GLM10B | 1.513 | 1.497 | 1.669 | 1.157 | 0.093 | 1.460 | 1.340 |
| DialGLM10B | 1.759 | 1.742 | 1.816 | 1.223 | 0.046 | 1.550 | 1.473 |

Human evaluation on 50 chit-chat human-bot chat dialogues:

| Model | Coherence | Informativeness | Safety | Inspiration | Hallucination | Engagingness | Faithfulness |
|---|---|---|---|---|---|---|---|
| CDial-GPT | 1.138 | 0.984 | 1.310 | 0.690 | 0.272 | 0.696 | 0.660 |
| PLATO-2 | 1.725 | 1.610 | 1.741 | 1.239 | 0.068 | 1.392 | 1.316 |
| EVA2.0 | 1.690 | 1.494 | 1.743 | 1.107 | 0.077 | 1.312 | 1.292 |
| GLM10B | 1.439 | 1.436 | 1.513 | 1.249 | 0.164 | 1.236 | 1.208 |
| GLM130B | 1.232 | 1.179 | 1.378 | 1.000 | 0.257 | 0.816 | 0.784 |
| DialGLM10B | 1.660 | 1.641 | 1.688 | 1.376 | 0.127 | 1.440 | 1.460 |

Human evaluation on 100 knowledge-grounded human-bot chat dialogues:

| Model | Coherence | Informativeness | Safety | Inspiration | Hallucination | Engagingness | Faithfulness |
|---|---|---|---|---|---|---|---|
| CDial-GPT | 0.956 | 0.777 | 1.194 | 0.543 | 0.363 | 0.562 | 0.542 |
| PLATO-2 | 1.585 | 1.387 | 1.650 | 1.086 | 0.129 | 1.244 | 1.128 |
| EVA2.0 | 1.524 | 1.275 | 1.616 | 0.961 | 0.151 | 1.150 | 1.096 |
| GLM10B | 1.543 | 1.528 | 1.570 | 1.329 | 0.174 | 1.324 | 1.282 |
| GLM130B | 1.177 | 1.128 | 1.315 | 0.954 | 0.303 | 0.852 | 0.832 |
| DialGLM10B | 1.668 | 1.624 | 1.688 | 1.393 | 0.134 | 1.412 | 1.368 |
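When reading these tables, note that the metrics do not all point the same way. The sketch below assumes Hallucination is lower-is-better and every other metric higher-is-better, and picks the best model per metric from the knowledge-grounded human-bot table above (values copied verbatim). The helper name `best_per_metric` is hypothetical and not part of the released evaluation code.

```python
from typing import Dict, List

METRICS = ["Coherence", "Informativeness", "Safety", "Inspiration",
           "Hallucination", "Engagingness", "Faithfulness"]
# Assumption: a lower Hallucination score is better; all others higher is better.
LOWER_IS_BETTER = {"Hallucination"}

# Human evaluation on 100 knowledge-grounded human-bot chat dialogues.
SCORES: Dict[str, List[float]] = {
    "CDial-GPT":  [0.956, 0.777, 1.194, 0.543, 0.363, 0.562, 0.542],
    "PLATO-2":    [1.585, 1.387, 1.650, 1.086, 0.129, 1.244, 1.128],
    "EVA2.0":     [1.524, 1.275, 1.616, 0.961, 0.151, 1.150, 1.096],
    "GLM10B":     [1.543, 1.528, 1.570, 1.329, 0.174, 1.324, 1.282],
    "GLM130B":    [1.177, 1.128, 1.315, 0.954, 0.303, 0.852, 0.832],
    "DialGLM10B": [1.668, 1.624, 1.688, 1.393, 0.134, 1.412, 1.368],
}


def best_per_metric(scores: Dict[str, List[float]]) -> Dict[str, str]:
    """Return the best-scoring model for each metric, respecting its direction."""
    best = {}
    for i, metric in enumerate(METRICS):
        pick = min if metric in LOWER_IS_BETTER else max
        best[metric] = pick(scores, key=lambda model: scores[model][i])
    return best


for metric, model in best_per_metric(SCORES).items():
    print(f"{metric:16s} {model}")
```

For this table, DialGLM10B comes out best on every metric except Hallucination, where PLATO-2 scores slightly lower (0.129 vs. 0.134).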

Evaluation platform:

We also provide an implicit human evaluation strategy that lets a human converse with several dialogue models at once and implicitly compare the bots during the conversation. You can access the evaluation platform here; it currently includes CDial-GPT, EVA2.0, PLATO-2, GLM10B, GLM130B, and GLM-Dialog. We release the code for automatic evaluation, traditional human evaluation, and the proposed implicit human evaluation at DialEvaluation.
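The implicit comparison can be illustrated with a simple tally. This sketch assumes that at each turn the human sees replies from several bots, continues the conversation with the one they prefer, and that this choice counts as an implicit vote; each bot's score is then its share of selected replies. The function `selection_rates` and the log format are hypothetical, and this is not the scoring code released in DialEvaluation.

```python
from collections import Counter
from typing import Dict, List


def selection_rates(choices: List[str], bots: List[str]) -> Dict[str, float]:
    """Fraction of turns in which each bot's reply was the one the human picked.

    `choices` holds one entry per dialogue turn: the name of the bot whose
    reply the human chose to continue with (hypothetical logging format).
    """
    counts = Counter(choices)
    total = len(choices)
    return {bot: counts[bot] / total if total else 0.0 for bot in bots}


bots = ["CDial-GPT", "EVA2.0", "PLATO-2", "GLM10B", "GLM130B", "GLM-Dialog"]
# Toy log of 5 turns; these selections are made up for illustration only.
log = ["GLM-Dialog", "PLATO-2", "GLM-Dialog", "EVA2.0", "GLM-Dialog"]
rates = selection_rates(log, bots)
print(rates)
```

Because the human only ever picks one reply per turn, the rates sum to 1 over all bots, which makes bots directly comparable within a session.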

This is the official implementation of the paper [GLM-Dialog: Noise-tolerant Pre-training for Knowledge-grounded Dialogue Generation](https://arxiv.org/pdf/2302.14401.pdf) (KDD 2023 ADS).

If you find this repository helpful, please cite the following paper:

```bibtex
@inproceedings{zhangkdd2023,
  author    = {Jing Zhang and Xiaokang Zhang and Daniel Zhang-Li and Jifan Yu and Zijun Yao and Zeyao Ma and Yiqi Xu and Haohua Wang and Xiaohan Zhang and Nianyi Lin and Sunrui Lu and Juanzi Li and Jie Tang},
  title     = "GLM-Dialog: Noise-tolerant Pre-training for Knowledge-grounded Dialogue Generation",
  booktitle = "KDD",
  year      = "2023"
}
```

GLM-Dialog is CC-BY-NC 4.0 licensed.

Please open a GitHub issue or contact Xiaokang Zhang (zhang2718@ruc.edu.cn) with any questions.