Kalyani Marathe*, Mahtab Bigverdi*, Nishat Khan, Tuhin Kundu, Aniruddha Kembhavi, Linda G. Shapiro, Ranjay Krishna

Welcome to the official repository of MIMIC! MIMIC is a data curation method that can be used to mine multiview image pairs from videos and synthetic 3D environments. It does not require any annotations such as 3D meshes, point clouds, or camera parameters. Using MIMIC, we curate MIMIC-3M, a dataset of 3.1M image pairs, and train models with the MAE and CroCo objectives.

In this repository, we provide the scripts and instructions to download and curate MIMIC-3M. We also provide the code to train CroCo and open-source the checkpoint trained using MIMIC-3M.

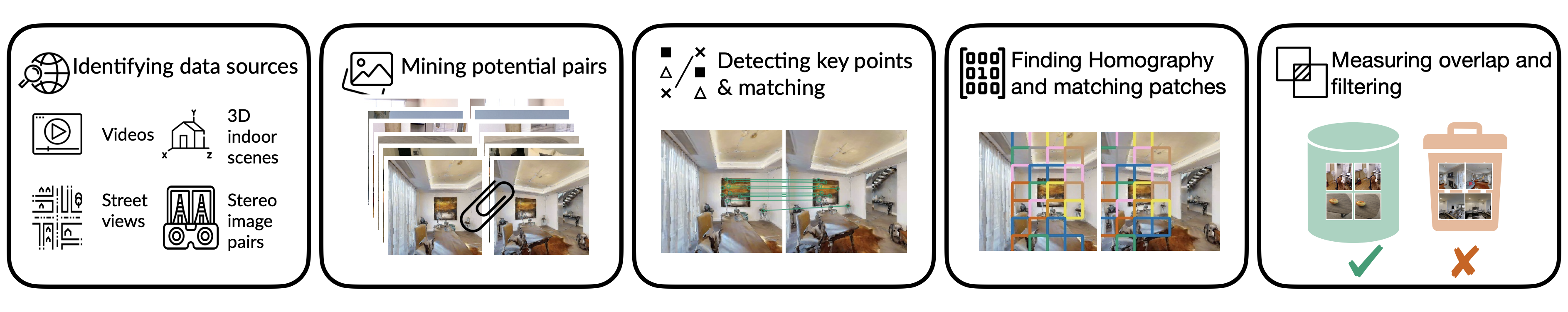

The figure below shows the end-to-end pipeline of MIMIC. MIMIC first identifies data sources that can yield potential multiview images: videos of indoor scenes, people, and objects; 3D indoor environments; outdoor street views; and stereo pairs. Next, it uses traditional computer vision methods such as SIFT keypoint detection and homography transformation to locate corresponding patches. Finally, it keeps only pairs whose overlap exceeds a threshold, ensuring that a substantial percentage of pixels match between the two views.
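The last two steps of the pipeline can be illustrated with a minimal sketch. It assumes a 3x3 homography `H` has already been estimated (for example from matched SIFT keypoints), a 224x224 image, and 16x16 patches. The function names, the centre-based matching rule, and the `min_overlap=0.5` threshold are hypothetical choices for illustration; the scripts in this repository may match patches and measure overlap differently.

```python
def apply_homography(H, x, y):
    """Map point (x, y) through a 3x3 homography given as nested lists."""
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if abs(w) < 1e-12:
        return None  # the point is mapped to infinity
    u = (H[0][0] * x + H[0][1] * y + H[0][2]) / w
    v = (H[1][0] * x + H[1][1] * y + H[1][2]) / w
    return u, v


def patch_correspondences(H, image_size=224, patch_size=16):
    """Match each patch of view 1 to the view-2 patch containing its warped centre.

    Patches are indexed row by row, with index 0 at the top left.
    This centre-based rule is a simplification used for illustration.
    """
    per_row = image_size // patch_size
    matches = {}
    for index in range(per_row * per_row):
        row, col = divmod(index, per_row)
        centre_x = (col + 0.5) * patch_size
        centre_y = (row + 0.5) * patch_size
        mapped = apply_homography(H, centre_x, centre_y)
        if mapped is None:
            continue
        u, v = mapped
        # Keep only patches whose centre lands inside the second view.
        if 0 <= u < image_size and 0 <= v < image_size:
            matches[index] = int(v // patch_size) * per_row + int(u // patch_size)
    return matches


def keep_pair(matches, num_patches, min_overlap=0.5):
    """Keep a pair when enough view-1 patches have a match (hypothetical threshold)."""
    return len(matches) / num_patches >= min_overlap


# A 16-pixel horizontal shift moves every patch one column to the right.
shift = [[1, 0, 16], [0, 1, 0], [0, 0, 1]]
shifted = patch_correspondences(shift)
print(len(shifted), keep_pair(shifted, 14 * 14))
```

Here the fraction of matched patches stands in for the pixel-level overlap described above, which keeps the sketch dependency-free.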

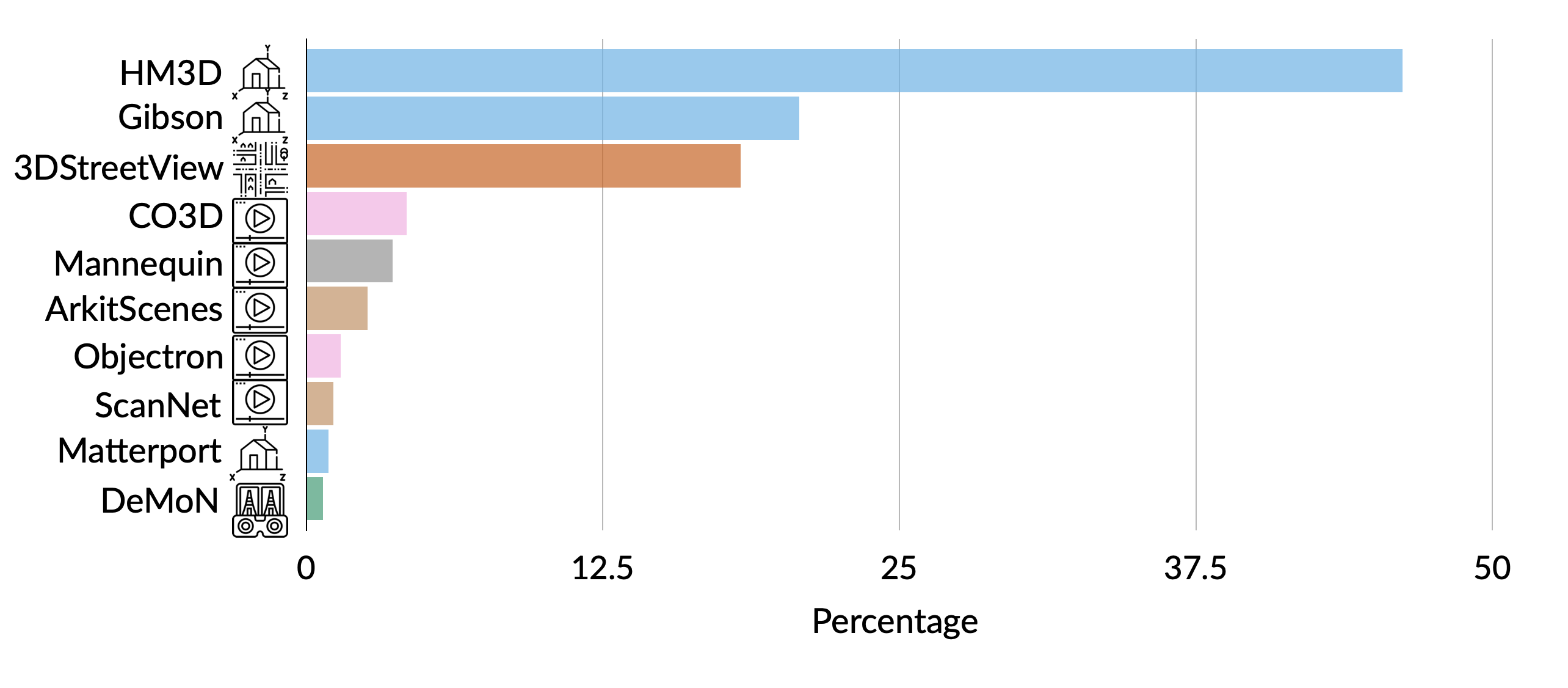

The figure below shows the distribution of MIMIC-3M. Real data sources, including DeMoN, ScanNet, ArkitScenes, Objectron, CO3D, Mannequin, and 3DStreetView, make up 32% of MIMIC-3M. The remaining portion consists of synthetic sources, namely HM3D, Gibson, and Matterport.

The instructions to mine potential pairs are provided in DATASET.md. You can also use these scripts to mine potential pairs from your own video datasets or synthetic environments. To set up a conda environment for mining MIMIC-3M, refer to dataset.yml.

You can download the zip file of image pairs with metadata from different sources here: HM3D, Gibson, Matterport, Mannequin, ArkitScenes, CO3D, Objectron, 3DStreetView, DeMoN, ScanNet. The whole dataset can be downloaded here. The CSV file containing paths for all 3 million image pairs is here. After unzipping the files, you will find the following folder structure:

    <MIMIC>
        <Dataset_Name>
            <Video/Scene_ID>
                <Pair_Number>
                    <0000.jpg>           ## view 1
                    <0001.jpg>           ## view 2
                    <corresponding.npy>  ## correspondence info
            <Video/Scene_ID>
                <Pair_Number>
                    <0000.jpg>           ## view 1
                    <0001.jpg>           ## view 2
                    <corresponding.npy>  ## correspondence info
            ...
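As a rough sketch of how this layout could be traversed, the helper below walks the unzipped folder and yields the three files of every complete pair. It assumes that `<Video/Scene_ID>` is a single directory level and that the file names match the listing above; `iter_pairs` is a hypothetical helper, not part of this repository.

```python
from pathlib import Path


def iter_pairs(root):
    """Yield (view1, view2, correspondences) paths for every complete pair.

    Expects <root>/<Dataset_Name>/<Video or Scene ID>/<Pair_Number>/ folders.
    """
    root = Path(root)
    for view1 in sorted(root.glob("*/*/*/0000.jpg")):
        pair_dir = view1.parent
        view2 = pair_dir / "0001.jpg"
        correspondences = pair_dir / "corresponding.npy"
        # Skip folders in which one of the three files is missing.
        if view2.is_file() and correspondences.is_file():
            yield view1, view2, correspondences
```

For example, `sum(1 for _ in iter_pairs("/path/to/MIMIC"))` counts the complete pairs on disk, which can be compared against the number of rows in the CSV file.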

The file corresponding.npy contains the patch correspondences between view 1 and view 2. It is a Python dictionary, e.g. {1: 2, 15: 100, 121: 4}, in which the keys are the patch numbers in the first view (0000.jpg) and the values are the patch numbers in the second view (0001.jpg). We assign index 0 to the top-left patch and traverse row by row.
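The indexing convention can be made concrete with a small sketch. It assumes the dictionary was written with `np.save`, so it has to be read back with `allow_pickle=True` and unwrapped with `.item()`, and it assumes 224x224 images split into 16x16 patches (14 patches per row). Both helper names are hypothetical, and the image and patch sizes should be adjusted to the actual data.

```python
def load_correspondences(path):
    """Load the patch dictionary, assuming it was stored with np.save."""
    import numpy as np  # imported here so patch_box below works without NumPy

    return np.load(path, allow_pickle=True).item()


def patch_box(index, image_size=224, patch_size=16):
    """Return the (left, top, right, bottom) pixel box of a patch index.

    Index 0 is the top-left patch and indices increase row by row.
    """
    per_row = image_size // patch_size
    row, col = divmod(index, per_row)
    left, top = col * patch_size, row * patch_size
    return left, top, left + patch_size, top + patch_size


# The example dictionary from the text above.
example = {1: 2, 15: 100, 121: 4}
for source, target in example.items():
    print(source, patch_box(source), "->", target, patch_box(target))
```

Under these assumptions, patch 15 in view 1 sits in the second row and second column, covering pixels (16, 16) to (32, 32).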

Refer to mae.yml for creating a conda environment for pretraining. The command to train CroCo on MIMIC is provided below. The effective batch size (batch_size_per_gpu * nodes * gpus_per_node * accum_iter) is equal to 4096.
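The batch-size arithmetic can be checked with a short sketch. The single-node values (128 per GPU, 8 GPUs, 4 accumulation steps) come from the command in this section. The 8 GPUs per node for the two-node setup and the learning-rate rule `lr = blr * effective_batch_size / 256` are assumptions carried over from the MAE codebase, so verify them against the scripts before relying on the numbers.

```python
def effective_batch_size(batch_size_per_gpu, nodes, gpus_per_node, accum_iter):
    """Number of samples that contribute to one optimizer step."""
    return batch_size_per_gpu * nodes * gpus_per_node * accum_iter


def absolute_lr(blr, eff_batch_size, base=256):
    """Linear scaling rule as used in MAE (assumed to apply here unchanged)."""
    return blr * eff_batch_size / base


single_node = effective_batch_size(128, nodes=1, gpus_per_node=8, accum_iter=4)
# 8 GPUs per node is an assumption for the two-node setup.
multi_node = effective_batch_size(64, nodes=2, gpus_per_node=8, accum_iter=4)

print(single_node, multi_node)            # both are 4096
print(absolute_lr(1.5e-4, single_node))   # about 2.4e-3
```

Halving the per-GPU batch size while doubling the number of nodes leaves the effective batch size, and therefore the scaled learning rate, unchanged.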

    torchrun --nproc_per_node=8 main_pretrain.py --multiview \
        --batch_size 128 \
        --accum_iter 4 \
        --model mae_vit_base_patch16 \
        --norm_pix_loss \
        --mask_ratio 0.9 \
        --epochs 200 \
        --blr 1.5e-4 \
        --warmup_epochs 20 \
        --train_path_csv /path/to/csv \
        --base_data_path /path/to/MIMIC/data/folder

To train models in a multi-node setup, refer to the following command:

    python submitit_pretrain.py \
        --job_dir job_dir \
        --base_data_path base_data_path \
        --train_path_csv /path/to/csv \
        --multiview \
        --accum_iter 4 \
        --nodes 2 \
        --batch_size 64 \
        --model mae_vit_base_patch16 \
        --norm_pix_loss \
        --mask_ratio 0.9 \
        --epochs 200 \
        --warmup_epochs 20 \
        --blr 1.5e-4 \
        --account account \
        --partition gpu-a40 \
        --timeout timeout \
        --report_to_wandb \
        --wandb_project croco \
        --wandb_entity entity \
        --run_name croco_200_vitb

The following table provides the pre-trained checkpoint on MIMIC-3M used in the paper.

| | ViT-Base |
|---|---|
| pre-trained checkpoint | download |

For fine-tuning, refer to MAE for linear probing, MultiMAE for semantic segmentation and depth estimation, and ViTPose for pose estimation. For the depth estimation and semantic segmentation tasks, make sure to convert the checkpoint to the MultiMAE format before evaluation using the following converter: link

The log files of the fine-tuning runs are in finetune.

The code for model training is built on top of MAE, uses cross-attention blocks from CroCo, and refers to MultiMAE for evaluations. We thank them all for open-sourcing their work.

This project is under the CC BY-NC-SA 4.0 license. See LICENSE for details.

If you find this work useful, please consider citing us:

    @article{marathe2023mimic,
      title={MIMIC: Masked Image Modeling with Image Correspondences},
      author={Marathe, Kalyani and Bigverdi, Mahtab and Khan, Nishat and Kundu, Tuhin and Kembhavi, Aniruddha and Shapiro, Linda G and Krishna, Ranjay},
      journal={arXiv preprint arXiv:2306.15128},
      year={2023}
    }