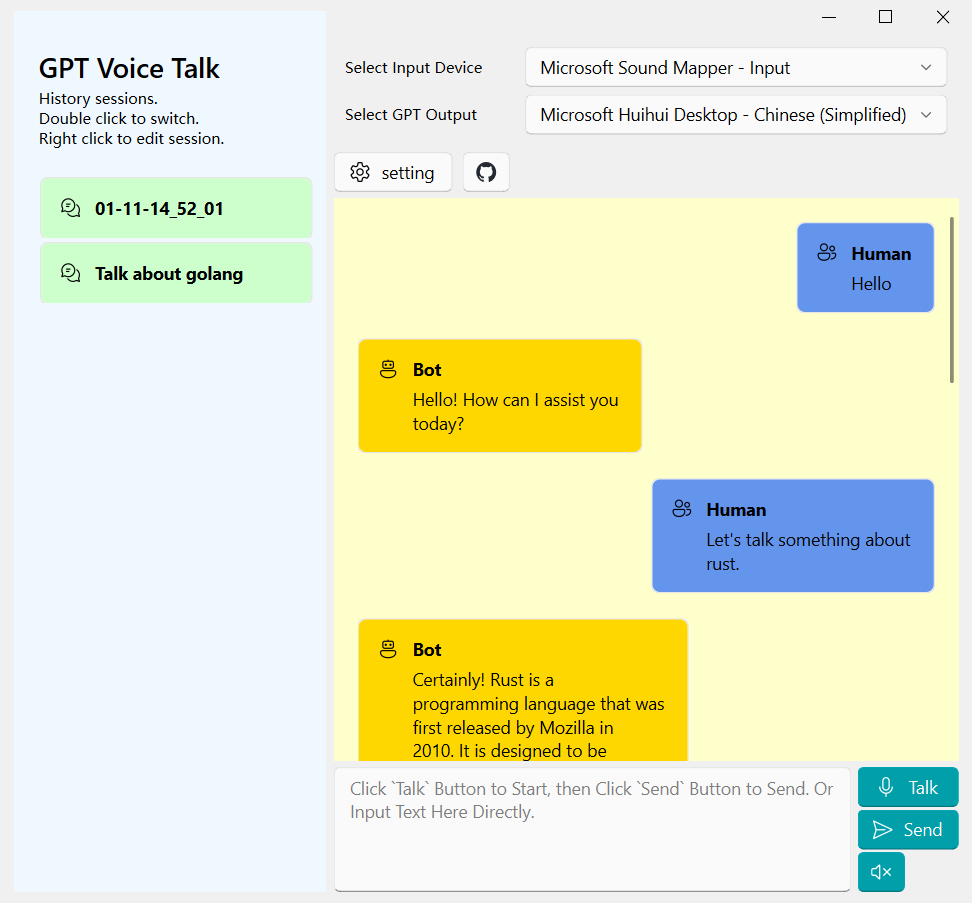

A real-time voice chat tool for GPT, based on Whisper and PyQt (PySide6), with support for conversation history. Enjoy chatting with GPT by voice without relying on ChatGPT Plus. 🐔🐔

Python >= 3.10

Windows 10+, Linux (tested only on Ubuntu, where it works), macOS (should work in theory by following the Linux steps, but not tested).

Running the Whisper base model requires less than 1GB of available memory. With no background noise and clearly spoken input, its results are passable, with an accuracy of around 90%. The Whisper large model requires over 8GB of available memory, but it performs excellently: even my poor spoken English is recognized fairly accurately, and it handles long speech segments and interruptions quite well.

In summary, the base model is easier to run, but the large model is recommended if your hardware allows. When recognition errors occur, you can edit the recognized text directly in the GUI.
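The trade-off above can be turned into a small helper. This is only a sketch: `pick_whisper_model` is a hypothetical function, not part of this project, and its thresholds are simply the rough memory figures quoted above rather than measured limits.

```python
def pick_whisper_model(available_gb: float) -> str:
    """Suggest a Whisper model size from the free memory, in GB.

    The thresholds mirror the rough figures quoted in this README
    (base: under 1 GB, large: over 8 GB). They are guidance only.
    """
    if available_gb > 8:
        return "large"
    if available_gb >= 1:
        return "base"
    raise ValueError("less than 1 GB available; even the base model may not fit")


if __name__ == "__main__":
    # Example values are assumptions chosen for illustration.
    for free_gb in (12, 4):
        print(free_gb, "GB ->", pick_whisper_model(free_gb))
```

How you measure the free memory is up to you, since it depends on whether Whisper runs on the CPU or the GPU.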

Clone repo

git clone https://github.com/QureL/ChatGPTVoice.git

cd ChatGPTVoice

Create and activate a virtual environment. (The commands below are for PowerShell; in Bash, you may need to run a script such as activate instead.)

mkdir venv

python -m venv .\venv\

.\venv\Scripts\Activate.ps1

Install dependencies.

pip install -r requirements.txt

On Linux, you also need to run the following commands to install the required system dependencies.

apt install portaudio19-dev python3-pyaudio

apt install espeak

Run the program directly inside the virtual environment.

python ./main.py

I have a Linux host with 12GB of GPU memory and a laptop with a weak 1650 GPU. To run the Whisper large model in a setup like this, you can host Whisper on the Linux machine and let the client communicate with it over WebSocket.

Linux:

python scrpit/whisper_server.py --model large-v2

Client:

python .\main.py --whisper_mode remote --whisper_address ws://{Your Linux IP}:3001
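To make the two client flags concrete, here is a minimal sketch of how they could be parsed and sanity-checked. It is not the project's actual `main.py`: the flag names come from the command above, while the `local` choice, the defaults, the helper names and the example IP address are assumptions made for illustration.

```python
import argparse
from urllib.parse import urlparse


def build_parser() -> argparse.ArgumentParser:
    # Flag names follow the README; choices and defaults are assumed.
    parser = argparse.ArgumentParser(description="Sketch of the client flags")
    parser.add_argument("--whisper_mode", choices=["local", "remote"], default="local")
    parser.add_argument("--whisper_address", default=None)
    return parser


def check_args(args: argparse.Namespace) -> argparse.Namespace:
    # Remote mode only makes sense with a ws:// or wss:// address.
    if args.whisper_mode == "remote":
        parsed = urlparse(args.whisper_address or "")
        if parsed.scheme not in ("ws", "wss") or not parsed.hostname:
            raise ValueError("remote mode needs --whisper_address like ws://host:3001")
    return args


# Hypothetical address standing in for {Your Linux IP}.
args = check_args(build_parser().parse_args(
    ["--whisper_mode", "remote", "--whisper_address", "ws://192.168.1.20:3001"]
))
print(args.whisper_mode, args.whisper_address)
```

Validating the address up front gives a clear error before any audio is recorded, instead of a connection failure later on.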

python .\main.py --proxy http://127.0.0.1:10809

After enabling the proxy, all OpenAI GPT requests and model downloads will pass through the proxy node.
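One common way for a Python program to route its traffic through a proxy is to export the standard `http_proxy` and `https_proxy` environment variables, which `urllib` and many HTTP libraries read. Whether this project applies `--proxy` in this way is not stated here, so treat the sketch below as an assumption; `apply_proxy` is a hypothetical helper.

```python
import os
import urllib.request


def apply_proxy(proxy_url: str) -> dict:
    """Export the proxy for HTTP and HTTPS and return what urllib now sees."""
    for name in ("http_proxy", "https_proxy"):
        os.environ[name] = proxy_url
    # getproxies_environment() reads the *_proxy variables set above.
    return urllib.request.getproxies_environment()


# The address is the one used in the README command.
proxies = apply_proxy("http://127.0.0.1:10809")
print(proxies["http"], proxies["https"])
```

Setting the variables at startup, before any network library is used, means the requests made afterwards in the same process can pick them up.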

PyQt-Fluent-Widgets: a fluent design widgets library based on PyQt5

- Internationalization support

- Chat UI improvements

- Support for other TTS engines

- Message editing

- Local LangChain vector store