NeurIPS 2024 Paper

He Qiyuan1,

Wang Jinghao2,

Liu Ziwei2,

Angela Yao1,✉;

Computer Vision & Machine Learning Group, National University of Singapore 1

S-Lab, Nanyang Technological University 2

✉ Corresponding Author

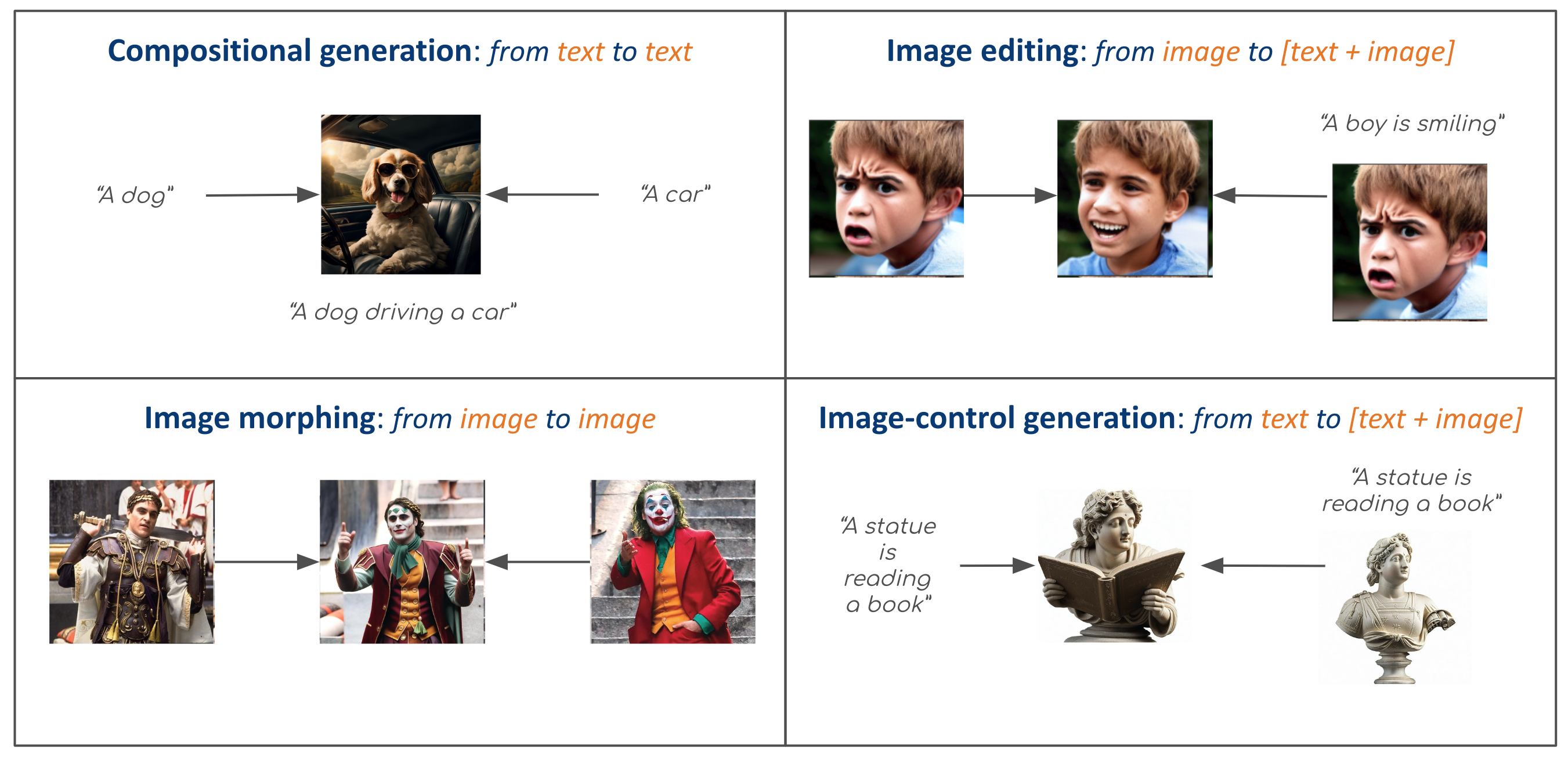

[10/2024] We now support various applications, including compositional generation, image morphing, image editing, and image-control generation (based on IP-Adapter); try play_sdxl_application.ipynb and play_sd.ipynb. These are also available in our Hugging Face Space AID-v2. Have fun!

[10/2024] We now support interpolating between images via IP-Adapter!

[10/2024] We added a dynamic selection pipeline to further improve smoothness; try play_sdxl_trial.ipynb!

[10/2024] PAID has been accepted as a conference paper at NeurIPS 2024!

[03/2024] Code and paper are publicly available.

TL;DR: AID (Attention Interpolation via Diffusion) is a training-free method that enables text-to-image diffusion models to generate interpolations between different conditions with high consistency, smoothness, and fidelity. Its variant, PAID, provides further control over the interpolation via prompt guidance.
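To make "attention interpolation" concrete, here is a minimal pure-Python sketch (single query, no batching) of two ways to interpolate cross-attention between two conditions with weight `t`: mixing the keys and values before the softmax ("inner") or mixing the two attention outputs ("outer"). The names echo the inner/outer interpolated attention described in the abstract, but the exact formulation and every function name here are illustrative assumptions, not the repository's code. Both conditions are assumed to have the same number of tokens (e.g., padded text embeddings).

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]  # one row per token


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Vector, keys: Matrix, values: Matrix) -> Vector:
    """Scaled dot-product attention for a single query vector."""
    scale = 1.0 / math.sqrt(len(q))
    weights = softmax([scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]


def lerp_rows(a: Matrix, b: Matrix, t: float) -> Matrix:
    """Row-wise (1 - t) * a + t * b; both matrices must have the same shape."""
    return [[(1.0 - t) * x + t * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def inner_interpolated_attention(q, k1, v1, k2, v2, t):
    """Hypothetical 'inner' variant: mix keys/values first, so the mix happens inside the softmax."""
    return attention(q, lerp_rows(k1, k2, t), lerp_rows(v1, v2, t))


def outer_interpolated_attention(q, k1, v1, k2, v2, t):
    """Hypothetical 'outer' variant: attend to each condition separately, then mix the outputs."""
    o1 = attention(q, k1, v1)
    o2 = attention(q, k2, v2)
    return [(1.0 - t) * a + t * b for a, b in zip(o1, o2)]


# Toy example: one query and two tokens per condition.
Q = [1.0, 0.0]
K1, V1 = [[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]]
K2, V2 = [[0.0, 1.0], [1.0, 0.0]], [[0.0, 1.0], [1.0, 0.0]]

if __name__ == "__main__":
    print(inner_interpolated_attention(Q, K1, V1, K2, V2, 0.5))  # [0.5, 0.5]
    print(outer_interpolated_attention(Q, K1, V1, K2, V2, 0.5))  # roughly [0.67, 0.33]
```

Both variants agree at the endpoints (`t = 0` and `t = 1`) but can differ in between, because the softmax is non-linear; that is why where the interpolation happens matters.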

Abstract:

Conditional diffusion models can create unseen images in various settings, aiding image interpolation. Interpolation in latent spaces is well-studied, but interpolation with specific conditions like text or poses is less understood. Simple approaches, such as linear interpolation in the space of conditions, often result in images that lack consistency, smoothness, and fidelity. To that end, we introduce a novel training-free technique named Attention Interpolation via Diffusion (AID). Our key contributions include 1) proposing an inner/outer interpolated attention layer; 2) fusing the interpolated attention with self-attention to boost fidelity; and 3) applying a beta distribution to selection to increase smoothness. We also present a variant, Prompt-guided Attention Interpolation via Diffusion (PAID), that considers interpolation as a condition-dependent generative process. This method enables the creation of new images with greater consistency, smoothness, and efficiency, and offers control over the exact path of interpolation. Our approach demonstrates effectiveness for conceptual and spatial interpolation.

Compositional generation: Given a prompt that involves multiple components (e.g., "A dog driving a car"), we use the compositional description as the guidance prompt, with each related component (e.g., "A dog" and "A car") serving as the prompt at one endpoint of the interpolation. Under this setting, we apply PAID and then select the image from the interpolation sequence that achieves the highest CLIP score with respect to the compositional description.
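The two selection steps mentioned above can be sketched in plain Python under stated assumptions. First, one plausible reading of "applying a beta distribution to selection" is to place the interpolation coefficients at evenly spaced quantiles of a Beta(a, b) distribution instead of spacing them uniformly; how AID actually chooses `a` and `b` is described in the paper and is not reproduced here, and this sketch assumes a, b ≥ 1. Second, for compositional generation, the candidate with the highest CLIP score is kept; the sketch ranks candidates by cosine similarity between precomputed CLIP image and text embeddings (in practice these could come from `CLIPModel.get_image_features` and `get_text_features` in Hugging Face Transformers), which gives the same ordering as the usual positively rescaled CLIP score when similarities are positive. All function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def beta_pdf(x: float, a: float, b: float) -> float:
    """Beta(a, b) density on [0, 1]; assumes a, b >= 1 so it is finite at the endpoints."""
    log_norm = math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
    return math.exp(log_norm) * x ** (a - 1.0) * (1.0 - x) ** (b - 1.0)


def beta_cdf(x: float, a: float, b: float, steps: int = 256) -> float:
    """CDF via composite Simpson's rule on [0, x]; `steps` must be even."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    h = x / steps
    total = beta_pdf(0.0, a, b) + beta_pdf(x, a, b)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * beta_pdf(i * h, a, b)
    return total * h / 3.0


def beta_quantile(p: float, a: float, b: float) -> float:
    """Invert the (monotone) CDF by bisection."""
    lo, hi = 0.0, 1.0
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        if beta_cdf(mid, a, b) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def beta_coefficients(n: int, a: float, b: float) -> List[float]:
    """n >= 2 interpolation coefficients at evenly spaced Beta(a, b) quantiles, endpoints fixed."""
    return [0.0] + [beta_quantile(i / (n - 1), a, b) for i in range(1, n - 1)] + [1.0]


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(u, v))
    return dot / (math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v)))


def select_by_clip_score(
    image_embeddings: Sequence[Sequence[float]], text_embedding: Sequence[float]
) -> Tuple[int, float]:
    """Return (index, score) of the candidate image that best matches the text embedding."""
    scores = [cosine_similarity(e, text_embedding) for e in image_embeddings]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores[best]


if __name__ == "__main__":
    print([round(c, 3) for c in beta_coefficients(5, 2.0, 2.0)])  # [0.0, 0.326, 0.5, 0.674, 1.0]
    print(select_by_clip_score([[1.0, 0.0], [0.6, 0.8], [0.0, 1.0]], [0.6, 0.8])[0])  # 1
```

With a = b = 1 the coefficients are uniform; a = b = 2 concentrates them toward the middle of the path, which shows how the shape parameters redistribute where the sequence is sampled.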

Image editing: We can use P2P or EDICT to first invert the generation process of a given image, and then set the endpoint conditions to the original prompt and the editing prompt, respectively, to control the editing level of the image.
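P2P and EDICT each have their own inversion procedure, and neither is reproduced here. As a minimal sketch of what "inverting the generation process" means, the block below implements plain deterministic DDIM inversion (the η = 0 DDIM update run from clean to noisy), the basic scheme that P2P-style real-image editing builds on; EDICT instead uses coupled, exactly invertible updates. `eps_fn` is a hypothetical stand-in for the UNet's noise prediction conditioned on the original prompt, and the latent is a single float for readability (real latents are tensors and the arithmetic is elementwise). After inversion, the noisy latent would be denoised with AID between the original-prompt and editing-prompt endpoints, which is not shown.

```python
import math
from typing import Callable, Sequence

# Hypothetical noise predictor: eps_fn(latent, step_index) -> predicted noise.
EpsFn = Callable[[float, int], float]


def ddim_step(x: float, eps: float, alpha_from: float, alpha_to: float) -> float:
    """Deterministic DDIM update (eta = 0) between cumulative alphas (alpha-bar values)."""
    x0_pred = (x - math.sqrt(1.0 - alpha_from) * eps) / math.sqrt(alpha_from)
    return math.sqrt(alpha_to) * x0_pred + math.sqrt(1.0 - alpha_to) * eps


def ddim_invert(x0: float, eps_fn: EpsFn, alphas: Sequence[float]) -> float:
    """Map a clean latent towards noise; `alphas` runs from clean (1.0) to noisy (small)."""
    x = x0
    for i in range(len(alphas) - 1):
        # DDIM inversion approximation: reuse the noise predicted at step i for step i + 1.
        x = ddim_step(x, eps_fn(x, i), alphas[i], alphas[i + 1])
    return x


def ddim_sample(x_t: float, eps_fn: EpsFn, alphas: Sequence[float]) -> float:
    """Standard DDIM denoising from the noisiest alpha back to the clean one."""
    x = x_t
    for i in range(len(alphas) - 1, 0, -1):
        x = ddim_step(x, eps_fn(x, i), alphas[i], alphas[i - 1])
    return x


def constant_eps(x: float, i: int) -> float:
    """Toy predictor that ignores its inputs, so inversion and sampling are exact inverses."""
    return 0.3


TOY_ALPHAS = [1.0, 0.9, 0.5, 0.1]

if __name__ == "__main__":
    noisy = ddim_invert(0.7, constant_eps, TOY_ALPHAS)
    print(noisy, ddim_sample(noisy, constant_eps, TOY_ALPHAS))  # second value recovers 0.7 up to float error
```

With a real UNet the predicted noise depends on the latent, so the round trip is only approximate; this gap is what inversion methods such as EDICT are designed to close.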

Image morphing: Using IP-Adapter, we set the two images as the conditions at the two endpoints of the interpolation sequence. A text prompt can additionally be provided to refine the generated images at the endpoints.
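A minimal pure-Python sketch of how two images could serve as interpolation endpoints, assuming IP-Adapter's decoupled cross-attention: text and image tokens get separate keys/values, and the image branch is weighted by the adapter scale. Here only the image-branch keys and values are interpolated between image A and image B, while the text branch stays fixed, mirroring the note that a text prompt can refine the endpoints. This is an illustration, not the repository's implementation, and all names are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[Vector]       # one row per token
KV = Tuple[Matrix, Matrix]  # (keys, values) for one condition


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Vector, keys: Matrix, values: Matrix) -> Vector:
    """Scaled dot-product attention for a single query vector."""
    scale = 1.0 / math.sqrt(len(q))
    weights = softmax([scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]


def lerp_rows(a: Matrix, b: Matrix, t: float) -> Matrix:
    """Row-wise (1 - t) * a + t * b; both matrices must have the same shape."""
    return [[(1.0 - t) * x + t * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def decoupled_cross_attention(q: Vector, text: KV, image: KV, scale: float) -> Vector:
    """IP-Adapter-style: attend to text and image tokens separately, add image output times `scale`."""
    text_out = attention(q, *text)
    image_out = attention(q, *image)
    return [a + scale * b for a, b in zip(text_out, image_out)]


def morphing_cross_attention(
    q: Vector, text: KV, image_a: KV, image_b: KV, t: float, scale: float = 1.0
) -> Vector:
    """Hypothetical morphing step: the image branch moves from image A (t = 0) to image B (t = 1)."""
    image_t = (lerp_rows(image_a[0], image_b[0], t), lerp_rows(image_a[1], image_b[1], t))
    return decoupled_cross_attention(q, text, image_t, scale)


# Toy tokens: one text token and two image tokens per endpoint image.
Q = [1.0, 0.0]
TEXT: KV = ([[0.5, 0.5]], [[0.2, 0.2]])
IMAGE_A: KV = ([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
IMAGE_B: KV = ([[0.0, 1.0], [1.0, 0.0]], [[0.0, 1.0], [1.0, 0.0]])

if __name__ == "__main__":
    for t in (0.0, 0.5, 1.0):
        print(t, morphing_cross_attention(Q, TEXT, IMAGE_A, IMAGE_B, t))
```

Keeping the text branch untouched means the prompt acts on every frame of the morph, while `t` only moves the visual condition.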

Image-control generation: Given a text prompt and an image, AID gives better control over the IP-Adapter scale. To achieve this, one endpoint is conditioned on the text prompt alone, while the other is conditioned on both the text and the image. This provides smoother control over the scale of IP-Adapter.
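A short worked equation shows why this setup is compared with the scale knob. Assuming IP-Adapter's decoupled cross-attention, in which text keys/values $K_t, V_t$ and image keys/values $K_i, V_i$ are attended separately and the image output is weighted by the adapter scale $\lambda$, the two endpoints differ only in the image term. A naive linear mix of the endpoint outputs with weight $\alpha \in [0, 1]$ is then identical to setting the scale to $\alpha\lambda$:

```latex
\begin{aligned}
Z_{\mathrm{text}} &= \operatorname{Attn}(Q, K_t, V_t), \\
Z_{\mathrm{text+img}} &= \operatorname{Attn}(Q, K_t, V_t) + \lambda\,\operatorname{Attn}(Q, K_i, V_i), \\
(1-\alpha)\,Z_{\mathrm{text}} + \alpha\,Z_{\mathrm{text+img}} &= \operatorname{Attn}(Q, K_t, V_t) + \alpha\lambda\,\operatorname{Attn}(Q, K_i, V_i).
\end{aligned}
```

So a plain output mix adds nothing beyond a scale sweep; any extra smoothness AID provides has to come from the parts of its interpolation that are not a linear mix of outputs (see the paper for the exact formulation).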

Try PAID directly with Stable Diffusion 2.1 or SDXL using Google's free GPU!

- Clone the repository and install the requirements:

git clone https://github.com/QY-H00/attention-interpolation-diffusion.git

cd attention-interpolation-diffusion

pip install -r requirements.txt

- Go to play.ipynb or play_sdxl.ipynb and have fun!

- Install Gradio:

pip install gradio

- Launch the Gradio interface:

gradio gradio_src/app.py

| Model Name | Hugging Face Model ID |
|---|---|
| Stable Diffusion 1.5-512 | stable-diffusion-v1-5/stable-diffusion-v1-5 |
| Realistic Vision V4.0 | SG161222/Realistic_Vision_V4.0_noVAE |
| Stable Diffusion 2.1-768 | stabilityai/stable-diffusion-2-1 |
| Stable Diffusion XL-1024 | stabilityai/stable-diffusion-xl-base-1.0 |
| Animagine XL 3.1 | cagliostrolab/animagine-xl-3.1 |
| Realistic Vision XL V4.0 | SG161222/RealVisXL_V5.0 |
| Playground v2.5 – 1024 | playgroundai/playground-v2.5-1024px-aesthetic |
| Juggernaut XL v9 | RunDiffusion/Juggernaut-XL-v9 |

If you find this repository or our paper useful, please consider citing:

@article{he2024aid,
title={AID: Attention Interpolation of Text-to-Image Diffusion},
author={He, Qiyuan and Wang, Jinghao and Liu, Ziwei and Yao, Angela},
journal={arXiv preprint arXiv:2403.17924},
year={2024}
}

We thank the following repositories for their great work: diffusers, transformers, IP-Adapter, P2P and EDICT.