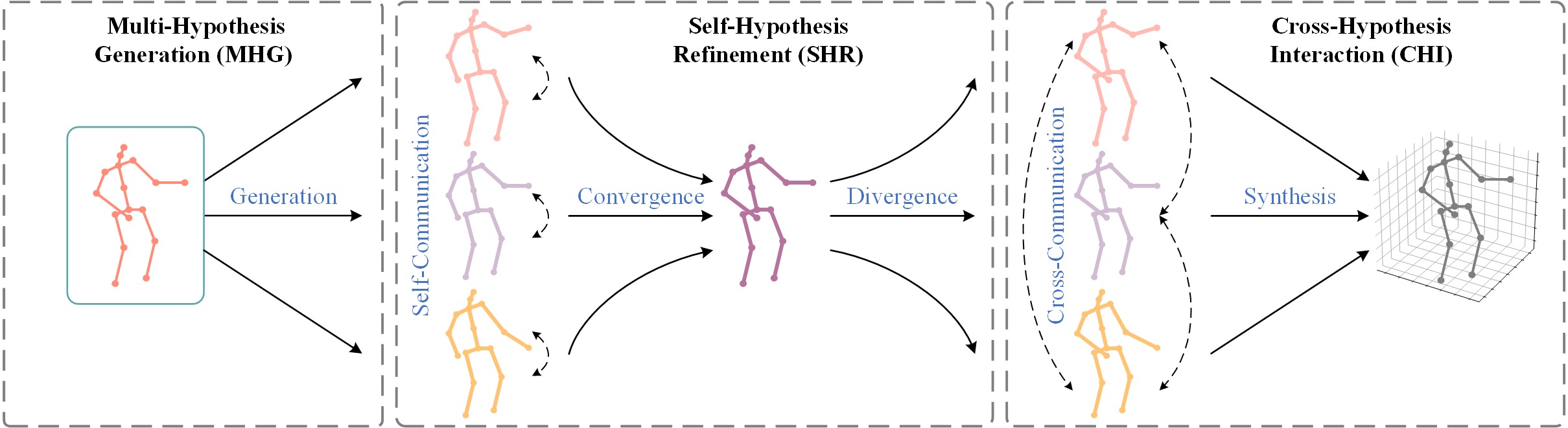

MHFormer_ResiDual: Multi-Hypothesis Transformer for 3D Human Pose Estimation [CVPR 2022] + ResiDual: Transformer with Dual Residual Connections

MHFormer: Multi-Hypothesis Transformer for 3D Human Pose Estimation,

Wenhao Li, Hong Liu, Hao Tang, Pichao Wang, Luc Van Gool,

In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2022


ResiDual: Transformer with Dual Residual Connections, Shufang Xie, Huishuai Zhang, Junliang Guo, Xu Tan, Jiang Bian, Hany Hassan Awadalla, Arul Menezes, Tao Qin, Rui Yan, arXiv preprint arXiv:2304.14802 (2023).

- Modified the Self-Hypothesis Refinement (SHR) module of MHFormer, adding branches that follow the ResiDual method.
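To make the ResiDual idea concrete, here is a minimal NumPy sketch of a stack of sublayers with dual residual connections: one stream is normalized after every residual add (Post-LN style), the other accumulates the raw sublayer outputs (Pre-LN style), and the two are combined at the end. This is only an illustration of the general scheme, not the code in this repository. The function names, the layer norm without learnable scale and bias, and the choice to initialise the dual stream from the input are all assumptions of the sketch.

```python
import numpy as np


def layer_norm(x, eps=1e-5):
    """Normalize over the last axis (no learnable scale or bias in this sketch)."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def residual_dual_forward(x, sublayers):
    """Run a stack of sublayers with ResiDual-style dual residual connections.

    x         : array of shape (..., channels)
    sublayers : list of callables mapping an array to an array of the same shape
                (hypothetical stand-ins for attention / MLP blocks)
    """
    x_post = x  # Post-LN stream: normalized after every residual add
    x_dual = x  # dual stream: assumption, initialised from the input
    for f in sublayers:
        out = f(x_post)                    # the sublayer only sees the Post-LN stream
        x_post = layer_norm(x_post + out)  # Post-LN residual update
        x_dual = x_dual + out              # un-normalized accumulation
    # The dual stream is normalized once, at the very end, and added back.
    return x_post + layer_norm(x_dual)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    tokens = rng.normal(size=(2, 351, 16))  # (batch, frames, channels), illustrative sizes
    weights = [rng.normal(scale=0.1, size=(16, 16)) for _ in range(3)]
    layers = [lambda h, w=w: np.tanh(h @ w) for w in weights]
    print(residual_dual_forward(tokens, layers).shape)
```

The sublayers read only the normalized stream, while the accumulated stream gives gradients a path that skips the per-layer normalization.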

MHFormer:

MHFormer_ResiDual:

- My paper will soon be uploaded to arXiv.

- Create a conda environment:

conda create -n mhformer python=3.9

pip3 install -r requirements.txt

Please download the dataset from the Human3.6M website and refer to VideoPose3D to set up the Human3.6M dataset (the './dataset' directory). Alternatively, you can download the processed data from here.

${POSE_ROOT}/

|-- dataset

| |-- data_3d_h36m.npz

| |-- data_2d_h36m_gt.npz

| |-- data_2d_h36m_cpn_ft_h36m_dbb.npz

The pretrained model can be found here; please download it and put it in the './checkpoint/pretrained' directory.

To test a pretrained 351-frame model on Human3.6M:

python main.py --test --previous_dir 'checkpoint/pretrained/351' --frames 351

Here, we compare MHFormer with recent state-of-the-art methods on the Human3.6M dataset. The evaluation metric is Mean Per Joint Position Error (MPJPE), in mm.
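Before training or testing, it can help to confirm that the three dataset files listed in the tree above are in place. The following is a small sketch of such a check. The helper name `missing_dataset_files` is hypothetical and is not part of this repository; it only assumes the `dataset` directory sits directly under the project root.

```python
from pathlib import Path

# File names taken from the directory tree above.
EXPECTED_FILES = (
    "data_3d_h36m.npz",
    "data_2d_h36m_gt.npz",
    "data_2d_h36m_cpn_ft_h36m_dbb.npz",
)


def missing_dataset_files(pose_root="."):
    """Return the expected dataset files that are absent under <pose_root>/dataset."""
    dataset_dir = Path(pose_root) / "dataset"
    return [name for name in EXPECTED_FILES if not (dataset_dir / name).is_file()]


if __name__ == "__main__":
    missing = missing_dataset_files(".")
    if missing:
        print("Missing dataset files:", ", ".join(missing))
    else:
        print("All expected dataset files were found.")
```

Run it from the project root; an empty result means the layout matches the tree.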

| Models | MPJPE (mm) |
|---|---|
| VideoPose3D | 46.8 |
| PoseFormer | 44.3 |
| MHFormer | 43.0 |
| MHFormer_ResiDual | |
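For readers unfamiliar with the metric, MPJPE is the Euclidean distance between each predicted joint and its ground-truth position, averaged over all joints and frames. A minimal NumPy sketch follows. The function name and the array layout `(frames, joints, 3)` are assumptions for illustration, and this is not necessarily how the evaluation code in this repository is written.

```python
import numpy as np


def mpjpe(predicted, target):
    """Mean Per Joint Position Error.

    predicted, target : arrays of shape (..., joints, 3) holding 3D joint positions.
    The result is in the same unit as the inputs (e.g. mm if the inputs are in mm).
    """
    predicted = np.asarray(predicted, dtype=np.float64)
    target = np.asarray(target, dtype=np.float64)
    if predicted.shape != target.shape:
        raise ValueError("predicted and target must have the same shape")
    # Per-joint Euclidean distance, then the mean over every joint and frame.
    return float(np.linalg.norm(predicted - target, axis=-1).mean())


if __name__ == "__main__":
    gt = np.zeros((4, 17, 3))            # 4 frames, 17 joints (illustrative sizes)
    pred = gt + np.array([3.0, 4.0, 0.0])  # every joint is off by a 3-4-5 triangle
    print(mpjpe(pred, gt))
```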

To train a 351-frame model on Human3.6M:

python main.py --frames 351 --batch_size 128

To train an 81-frame model on Human3.6M:

python main.py --frames 81 --batch_size 256

First, download the YOLOv3 and HRNet pretrained models here and put them in the './demo/lib/checkpoint' directory. Then put your in-the-wild videos in the './demo/video' directory.

Run the command below:

python demo/vis.py --video sample_video.mp4

Sample demo output:

If you find our work useful in your research, please consider citing:

@inproceedings{li2022mhformer,

title={MHFormer: Multi-Hypothesis Transformer for 3D Human Pose Estimation},

author={Li, Wenhao and Liu, Hong and Tang, Hao and Wang, Pichao and Van Gool, Luc},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

pages={13147-13156},

year={2022}

}

@article{li2023multi,

title={Multi-Hypothesis Representation Learning for Transformer-Based 3D Human Pose Estimation},

author={Li, Wenhao and Liu, Hong and Tang, Hao and Wang, Pichao},

journal={Pattern Recognition},

pages={109631},

year={2023},

}

@article{xie2023residual,

title={ResiDual: Transformer with Dual Residual Connections},

author={Xie, Shufang and Zhang, Huishuai and Guo, Junliang and Tan, Xu and Bian, Jiang and Awadalla, Hany Hassan and Menezes, Arul and Qin, Tao and Yan, Rui},

journal={arXiv preprint arXiv:2304.14802},

year={2023}

}

Our code is extended from the following repositories. We thank the authors for releasing their code.

- ST-GCN

- VideoPose3D

- 3d-pose-baseline

- 3d_pose_baseline_pytorch

- StridedTransformer-Pose3D

- MHFormer

- ResiDual

This project is licensed under the terms of the MIT license.