1Chaofeng Chen, 1Sensen Yang, 1Haoning Wu, 1Liang Liao, 3Zicheng Zhang, 1Annan Wang, 2Wenxiu Sun, 2Qiong Yan, 1Weisi Lin

1S-Lab, Nanyang Technological University, 2Sensetime Research, 3Shanghai Jiao Tong University

- Release datasets

- Release test codes

- Release training codes

If you find this work useful, please consider citing our paper:

@inproceedings{chen2024qground,

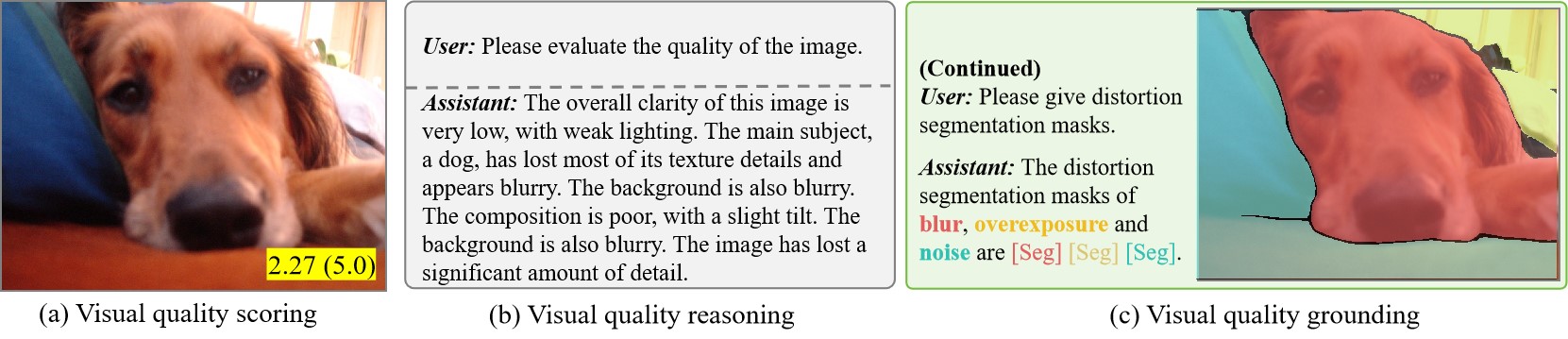

title={Q-Ground: Image Quality Grounding with Large Multi-modality Models},

author={Chaofeng Chen and Sensen Yang and Haoning Wu and Liang Liao and Zicheng Zhang and Annan Wang and Wenxiu Sun and Qiong Yan and Weisi Lin},

booktitle={ACM International Conference on Multimedia},

year={2024},

}

This project is based on PixelLM, LISA and LLaVA. Thanks to the authors for their great work!
