Yunsheng Ma, Liangqi Yuan, Amr Abdelraouf, Kyungtae Han, Rohit Gupta, Zihao Li, Ziran Wang

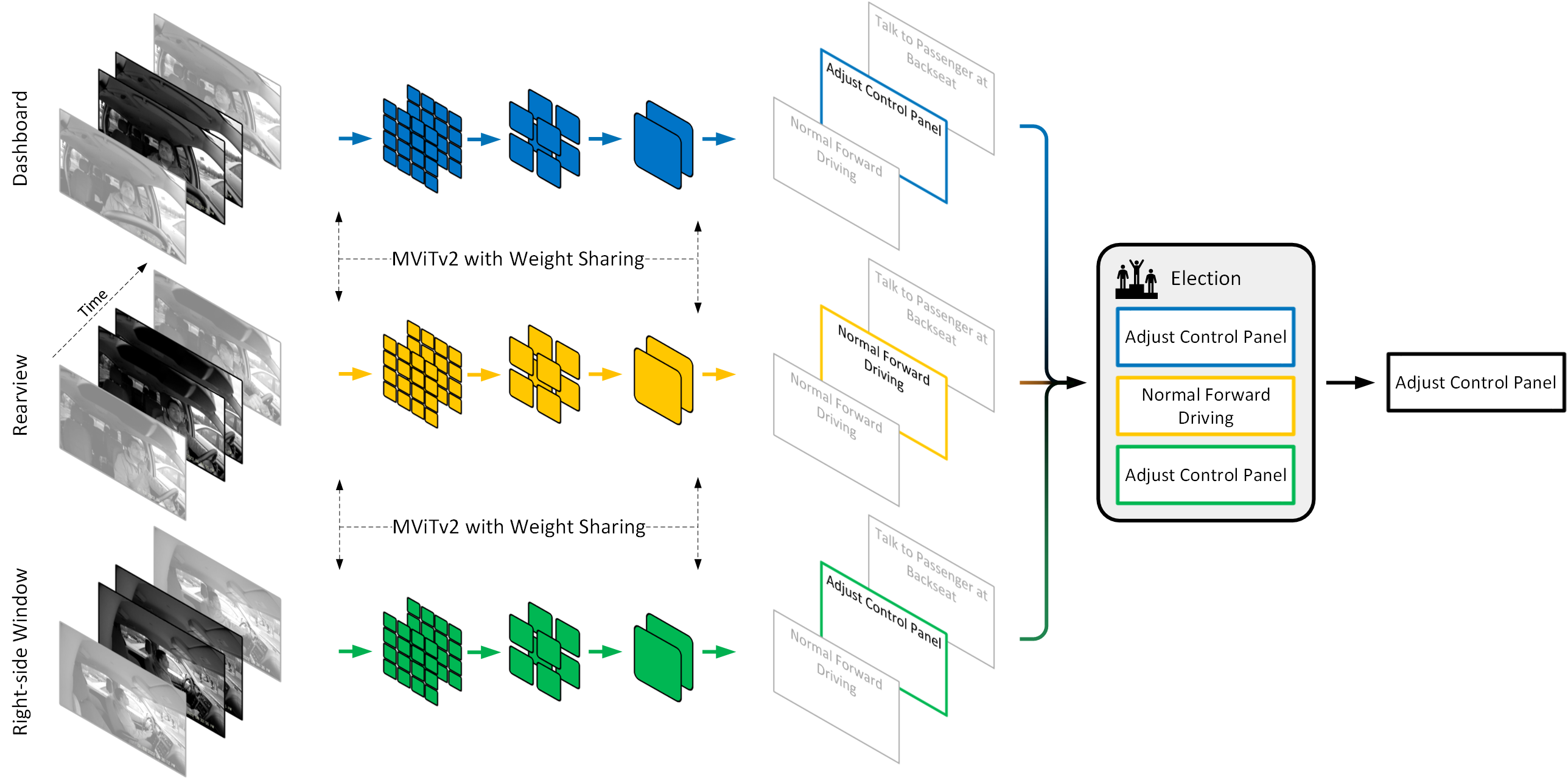

Ensuring traffic safety and preventing accidents is a critical goal in daily driving. Computer vision technologies can be leveraged to achieve this goal by detecting distracted driving behaviors. In this paper, we present M2DAR, a multi-view, multi-scale framework for naturalistic driving action recognition and localization in untrimmed videos.

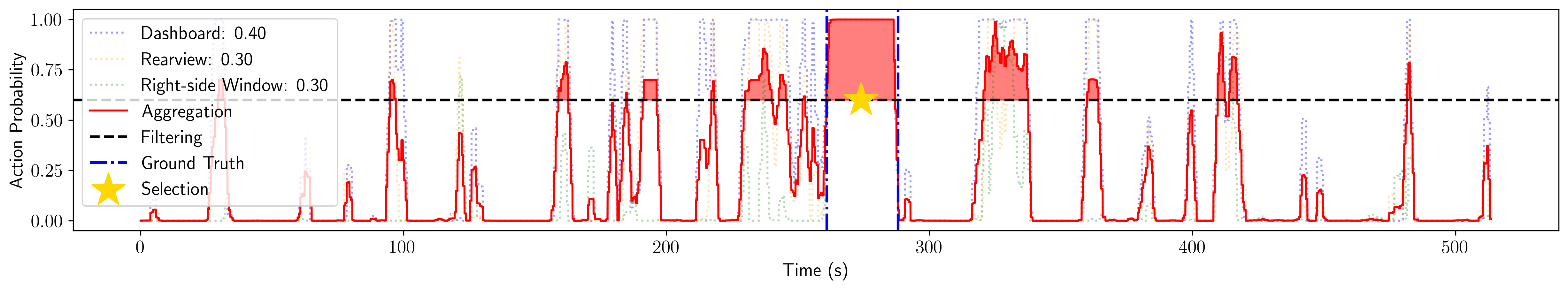

M2DAR's action recognition module is a weight-sharing, Multi-Scale Transformer-based network that learns robust hierarchical representations. The framework also features a novel election algorithm, consisting of aggregation, filtering, merging, and selection stages, that refines the preliminary results of the action recognition module across multiple camera views.
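The four election stages can be made concrete with a minimal Python sketch. It is not the code in `tools/inference/election.py`. The input layout (for each view, a list of per-time-step class probabilities), treating class 0 as background, the confidence threshold, the gap tolerance, and the rule of keeping one segment per class are all assumptions made for illustration, and every function name below is hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple

# Assumed input: view name -> per-time-step class probabilities (T x C).
ViewProbs = Dict[str, Sequence[Sequence[float]]]


@dataclass
class Segment:
    label: int    # action class ID
    start: int    # first time step (inclusive)
    end: int      # last time step (inclusive)
    score: float  # mean aggregated confidence over the segment


def aggregate(view_probs: ViewProbs) -> List[List[float]]:
    """Aggregation: average the class probabilities of all views at each step."""
    views = list(view_probs.values())
    num_steps, num_classes = len(views[0]), len(views[0][0])
    return [
        [sum(v[t][c] for v in views) / len(views) for c in range(num_classes)]
        for t in range(num_steps)
    ]


def filter_steps(probs: List[List[float]], threshold: float,
                 background: int = 0) -> List[Tuple[int, int, float]]:
    """Filtering: keep (step, label, score) where the top class is confident and not background."""
    kept = []
    for t, row in enumerate(probs):
        label = max(range(len(row)), key=row.__getitem__)
        if label != background and row[label] >= threshold:
            kept.append((t, label, row[label]))
    return kept


def merge(kept: List[Tuple[int, int, float]], max_gap: int = 1) -> List[Segment]:
    """Merging: join same-label steps into segments, bridging up to max_gap missing steps."""
    groups: List[list] = []  # each entry: [label, start, end, scores]
    for t, label, score in kept:
        if groups and groups[-1][0] == label and t - groups[-1][2] <= max_gap + 1:
            groups[-1][2] = t
            groups[-1][3].append(score)
        else:
            groups.append([label, t, t, [score]])
    return [Segment(lbl, s, e, sum(sc) / len(sc)) for lbl, s, e, sc in groups]


def select(segments: List[Segment]) -> List[Segment]:
    """Selection: keep the highest-scoring segment per label, ordered by start time."""
    best: Dict[int, Segment] = {}
    for seg in segments:
        if seg.label not in best or seg.score > best[seg.label].score:
            best[seg.label] = seg
    return sorted(best.values(), key=lambda s: s.start)


def elect(view_probs: ViewProbs, threshold: float = 0.5, max_gap: int = 1) -> List[Segment]:
    """Run aggregation -> filtering -> merging -> selection."""
    return select(merge(filter_steps(aggregate(view_probs), threshold), max_gap))


if __name__ == "__main__":
    # Two hypothetical views, six time steps, three classes (class 0 = background).
    views = {
        "dashboard": [[0.9, 0.05, 0.05], [0.2, 0.7, 0.1], [0.1, 0.8, 0.1],
                      [0.6, 0.3, 0.1], [0.1, 0.1, 0.8], [0.1, 0.2, 0.7]],
        "rear": [[0.8, 0.1, 0.1], [0.2, 0.6, 0.2], [0.2, 0.7, 0.1],
                 [0.7, 0.2, 0.1], [0.2, 0.1, 0.7], [0.2, 0.1, 0.7]],
    }
    for seg in elect(views):
        print(seg.label, seg.start, seg.end)  # → "1 1 2" then "2 4 5"
```

Averaging treats every camera view equally; a weighted average or a majority vote across views would be an equally plausible aggregation rule within this sketch.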


This project is based on the PySlowFast and AiCityAction codebases; please follow their instructions to set up the environment. Additionally, install the decord library by running the following command:

`pip install decord`

- Download the AI City Challenge 2023 Track 3 dataset and put it into `data/original`. The expected contents of this folder are:

  - `data/original/A1`
  - `data/original/A2`
  - `data/original/Distracted_Activity_Class_definition.txt`

- Create an annotation file, `annotation_A1.csv`, by running the following command:

  `python tools/data_converter/create_anno.py`

- Move all videos from both the A1 and A2 subsets into a single folder, `data/preprocessed/A1_A2_videos`, using the following command:

  `python tools/data_converter/relocate_video.py`

- Process the annotation file to fix errors in the original annotations, such as an action end time earlier than its start time or a wrong action label, by running the following command:

  `python tools/data_converter/process_anno.py`

- Cut the videos into clips using the following commands:

  `mkdir data/preprocessed/A1_clips`

  `parallel -j 4 <data/A1_cut.sh`

- Split `processed_annotation_A1.csv` into train and val subsets using the following command:

  `python tools/data_converter/split_anno.py`

- Make annotation files for training on the whole A1 set by concatenating the train and val splits into a single training file (the val split is kept for validation):

  `mkdir data/annotations/splits/full`

  `cat data/annotations/splits/splits_1/train.csv data/annotations/splits/splits_1/val.csv > data/annotations/splits/full/train.csv`

  `cp data/annotations/splits/splits_1/val.csv data/annotations/splits/full/`

- Download the MViTv2-S pretrained model from here and save it as `MViTv2_S_16x4_pretrained.pyth` under `data/ckpts`.
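The annotation-fixing step can be illustrated with a hypothetical sketch. The column names `start`, `end`, and `label`, the assumption that times are numeric seconds and labels are integer class IDs, and the two correction policies (swap reversed timestamps, drop unknown labels) are all assumptions; `process_anno.py` may parse the raw CSV differently and may correct wrong labels rather than drop them. The names `fix_rows` and `fix_file` are illustrative only.

```python
import csv
from typing import Dict, Iterable, List, Set

Row = Dict[str, str]


def fix_rows(rows: Iterable[Row], valid_labels: Set[int]) -> List[Row]:
    """Return cleaned copies of annotation rows (assumed columns: start, end, label)."""
    fixed = []
    for row in rows:
        if int(row["label"]) not in valid_labels:
            continue  # assumed policy: drop rows whose label is not a known class ID
        if float(row["end"]) < float(row["start"]):
            # assumed policy: reversed timestamps are treated as swapped
            row = {**row, "start": row["end"], "end": row["start"]}
        fixed.append(dict(row))  # copy so the caller's rows are never mutated
    return fixed


def fix_file(src: str, dst: str, valid_labels: Set[int]) -> None:
    """Read a CSV annotation file, clean it with fix_rows, and write the result."""
    with open(src, newline="") as f:
        reader = csv.DictReader(f)
        fieldnames = reader.fieldnames
        rows = fix_rows(reader, valid_labels)
    with open(dst, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(rows)
```

In this sketch, `valid_labels` would come from the class IDs listed in `Distracted_Activity_Class_definition.txt`.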

To train the model, set the working directory to the project root, then run:

`export PYTHONPATH=$PWD/:$PYTHONPATH`

`python tools/run_net.py --cfg configs/MVITV2_S_16x4_448.yaml`

To run inference on the A2 test set:

- Copy `video_ids.csv` from the original dataset to the annotation directory and rename it to `A2_video_ids.csv`:

  `cp data/original/A2/video_ids.csv data/annotations`

  `mv data/annotations/video_ids.csv data/annotations/A2_video_ids.csv`

- Create the test video list using the following command:

  `python tools/data_converter/create_test_video_lst.py`

- Download the backbone model checkpoint from here (the checkpoint that achieved an overlap score of 0.5921 on the A2 test set) and put it at `data/ckpts/MViTv2_S_16x4_aicity_200ep.pyth`.

- Run the DAR module using the following command, which generates preliminary results in pickle format under `data/runs/A2`:

  `python tools/inference/driver_action_rec.py`

- Run the election algorithm to refine the preliminary results from the DAR module using the following command:

  `python tools/inference/election.py`

The submission file is saved at `data/inferences/submit.txt` and achieves an overlap score of 0.5921 on the A2 test set.
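Before uploading, it can help to sanity-check the submission file. The sketch below assumes, without confirmation from this repository, that each line of `submit.txt` holds four whitespace-separated integers: video ID, activity ID, start time, and end time; adjust the parser if the actual format differs. `parse_submission` and `Prediction` are hypothetical names.

```python
from typing import List, NamedTuple


class Prediction(NamedTuple):
    video_id: int
    activity_id: int
    start: int  # assumed: start time in seconds
    end: int    # assumed: end time in seconds


def parse_submission(text: str) -> List[Prediction]:
    """Parse and validate submission lines; raise ValueError on malformed input."""
    preds = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # ignore blank lines
        fields = line.split()
        if len(fields) != 4:
            raise ValueError(f"line {lineno}: expected 4 fields, got {len(fields)}")
        pred = Prediction(*map(int, fields))  # int() raises ValueError on non-integers
        if pred.end < pred.start:
            raise ValueError(f"line {lineno}: end time before start time")
        preds.append(pred)
    return preds
```

For example: `with open("data/inferences/submit.txt") as f: preds = parse_submission(f.read())`.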

If you use this code for your research, please cite our paper:

    @inproceedings{ma_m2dar_2023,
      title = {{M2DAR}: {Multi}-{View} {Multi}-{Scale} {Driver} {Action} {Recognition} with {Vision} {Transformer}},
      booktitle = {2023 {IEEE}/{CVF} {Conference} on {Computer} {Vision} and {Pattern} {Recognition} {Workshops} ({CVPRW})},
      author = {Ma, Yunsheng and Yuan, Liangqi and Abdelraouf, Amr and Han, Kyungtae and Gupta, Rohit and Li, Zihao and Wang, Ziran},
      month = jun,
      year = {2023},
    }

This repo is based on the PySlowFast and AiCityAction repos. Many thanks!