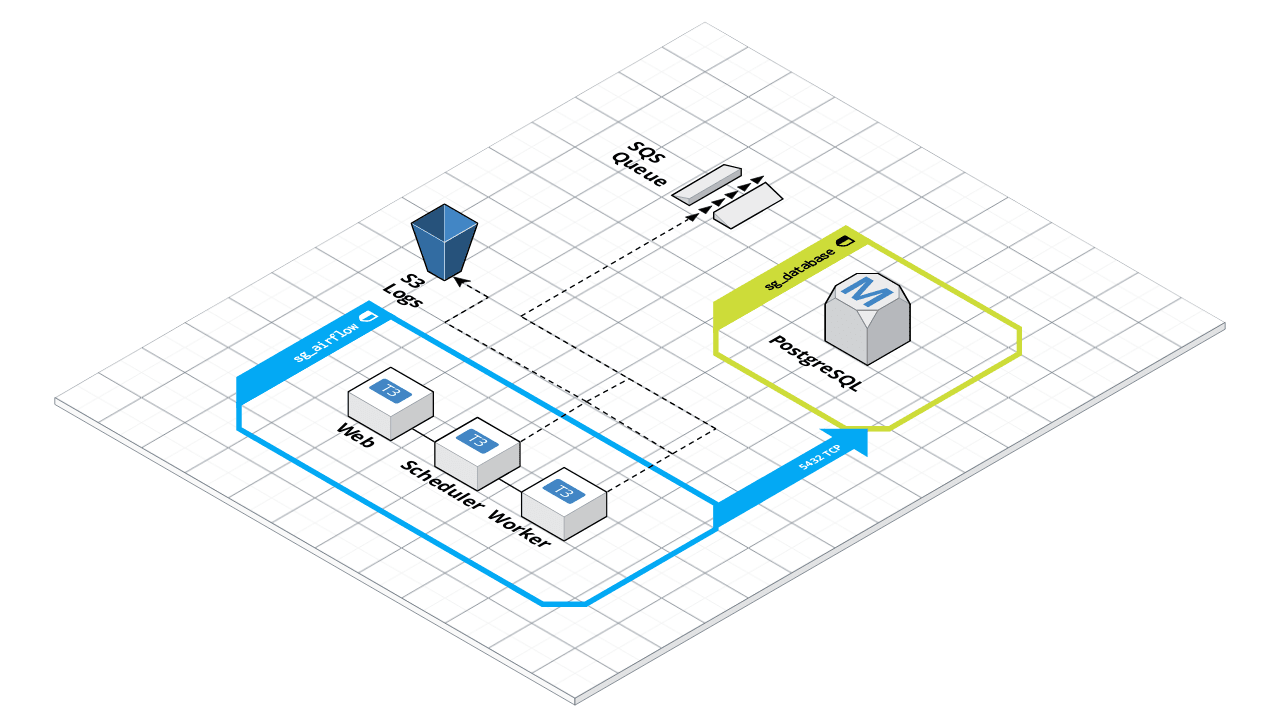

Terraform module to deploy an Apache Airflow cluster on AWS, backed by RDS PostgreSQL for metadata, S3 for logs, and SQS as the message broker for the CeleryExecutor.

| Terraform version | Tag |
|---|---|
| <= 0.11 | v0.7.x |
| >= 0.12 | >= v0.8.x |
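The compatibility table above can be expressed as a version constraint on the module block. This is a minimal sketch: it assumes the versions published to the Terraform Registry mirror the `v0.8.x` tags, which this README does not state, and the input values are placeholders.

```hcl
# Sketch for Terraform >= 0.12, which the table maps to tags >= v0.8.x.
module "airflow-cluster" {
  source = "powerdatahub/airflow/aws"

  # Assumption: registry versions follow the Git tags listed in the table.
  version = ">= 0.8.0"

  key_name     = "airflow-key"
  cluster_name = "my-airflow"
  db_password  = "your-rds-master-password" # placeholder
  fernet_key   = "your-fernet-key"          # placeholder
}
```

An open-ended `>=` constraint follows the table literally; pinning to an exact release is usually safer for repeatable deployments.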

You can use this module from the Terraform Registry:

    module "airflow-cluster" {
      # REQUIRED
      source        = "powerdatahub/airflow/aws"
      key_name      = "airflow-key"
      cluster_name  = "my-airflow"
      cluster_stage = "prod" # Default is 'dev'
      db_password   = "your-rds-master-password"
      fernet_key    = "your-fernet-key" # see https://airflow.readthedocs.io/en/stable/howto/secure-connections.html

      # OPTIONALS
      vpc_id              = "some-vpc-id"                     # Use default if not provided
      custom_requirements = "path/to/custom/requirements.txt" # See examples/custom_requirements for more details
      custom_env          = "path/to/custom/env"              # See examples/custom_env for more details
      ingress_cidr_blocks = ["0.0.0.0/0"]                     # List of IPv4 CIDR ranges to use on all ingress rules
      ingress_with_cidr_blocks = [ # List of computed ingress rules to create where 'cidr_blocks' is used
        {
          description = "List of computed ingress rules for Airflow webserver"
          from_port   = 8080
          to_port     = 8080
          protocol    = "tcp"
          cidr_blocks = "0.0.0.0/0"
        },
        {
          description = "List of computed ingress rules for Airflow flower"
          from_port   = 5555
          to_port     = 5555
          protocol    = "tcp"
          cidr_blocks = "0.0.0.0/0"
        }
      ]
      tags = {
        FirstKey  = "first-value" # Additional tags to use on resources
        SecondKey = "second-value"
      }
      load_example_dags  = false
      load_default_conns = false
      rbac               = true                  # See examples/rbac for more details
      admin_name         = "John"                # Only if rbac is true
      admin_lastname     = "Doe"                 # Only if rbac is true
      admin_email        = "admin@admin.com"     # Only if rbac is true
      admin_username     = "admin"               # Only if rbac is true
      admin_password     = "supersecretpassword" # Only if rbac is true
    }

The Airflow service runs under systemd, so logs are available through journalctl.

    $ journalctl -u airflow -n 50
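The usage example writes `db_password` and `fernet_key` as string literals. One way to keep them out of version control is to pass them through root-module input variables. The sketch below is illustrative only: the variable names are hypothetical and simply reuse the module's input names.

```hcl
# Hypothetical root-module variables; names chosen to match the module inputs.
variable "db_password" {
  type        = string
  description = "RDS master password passed to the Airflow module"
}

variable "fernet_key" {
  type        = string
  description = "Fernet key passed to the Airflow module"
}

module "airflow-cluster" {
  source = "powerdatahub/airflow/aws"

  key_name     = "airflow-key"
  cluster_name = "my-airflow"

  # Values come from variables instead of literals in the configuration.
  db_password = var.db_password
  fernet_key  = var.fernet_key
}
```

Terraform reads such variables from `TF_VAR_db_password` and `TF_VAR_fernet_key` environment variables or from a `.tfvars` file. The values are still stored in the Terraform state, so the state itself needs to be protected.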

- Run Airflow as a systemd service
- Provide a way to pass a custom requirements.txt file at the provisioning step
- Provide a way to pass a custom packages.txt file at the provisioning step
- RBAC
- Support for Google OAuth
- Flower
- Secure Flower install
- Provide a way to inject environment variables into Airflow
- Split services into multiple files
- Auto Scaling for workers
- Use Spot instances for workers
- Maybe use AWS Fargate to reduce costs

Special thanks to villasv/aws-airflow-stack, an incredible project, for the inspiration.

| Name | Version |
|---|---|
| terraform | >= 0.12 |

| Name | Version |
|---|---|
| aws | n/a |
| template | n/a |
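The Terraform version requirement and the `aws` provider listed above might be declared in the root module as follows. This is a sketch under assumptions: the README does not say how the provider should be configured, and the region shown is just the module's documented `aws_region` default.

```hcl
terraform {
  # Matches the documented requirement: terraform >= 0.12
  required_version = ">= 0.12"
}

provider "aws" {
  # Assumption: same region as the module's aws_region default.
  region = "us-east-1"
}
```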

| Name | Description | Type | Default | Required |
|---|---|---|---|---|
| admin_email | Admin email. Used only if RBAC is enabled; the user is created on the first run only. | `string` | `"admin@admin.com"` | no |
| admin_lastname | Admin last name. Used only if RBAC is enabled; the user is created on the first run only. | `string` | `"Doe"` | no |
| admin_name | Admin name. Used only if RBAC is enabled; the user is created on the first run only. | `string` | `"John"` | no |
| admin_password | Admin password. Used only if RBAC is enabled. | `string` | `false` | no |
| admin_username | Admin username used to authenticate. Used only if RBAC is enabled; the user is created on the first run only. | `string` | `"admin"` | no |
| ami | Default is Ubuntu Server 18.04 LTS (HVM), SSD Volume Type. | `string` | `"ami-0ac80df6eff0e70b5"` | no |
| aws_region | AWS region. | `string` | `"us-east-1"` | no |
| azs | Run the EC2 instances in these Availability Zones. | `map(string)` | `{` | no |
| cluster_name | The name of the Airflow cluster (e.g. airflow-xyz). This variable is used to namespace all resources created by this module. | `string` | n/a | yes |
| cluster_stage | The stage of the Airflow cluster (e.g. prod). | `string` | `"dev"` | no |
| custom_env | Path to a file with custom Airflow environment variables. | `string` | `null` | no |
| custom_requirements | Path to a custom requirements.txt. | `string` | `null` | no |
| db_allocated_storage | Database disk size. | `string` | `20` | no |
| db_dbname | PostgreSQL database name. | `string` | `"airflow"` | no |
| db_instance_type | Instance type for the PostgreSQL database. | `string` | `"db.t2.micro"` | no |
| db_password | PostgreSQL password. | `string` | n/a | yes |
| db_subnet_group_name | DB subnet group name. If set, the database is created in that subnet group; otherwise it is created in the default VPC. | `string` | `""` | no |
| db_username | PostgreSQL username. | `string` | `"airflow"` | no |
| fernet_key | Key for encrypting data in the database (see the Airflow docs). | `string` | n/a | yes |
| ingress_cidr_blocks | List of IPv4 CIDR ranges to use on all ingress rules. | `list(string)` | `[` | no |
| ingress_with_cidr_blocks | List of computed ingress rules to create where 'cidr_blocks' is used. | `list(object({` | `[` | no |
| instance_subnet_id | Subnet ID for the EC2 instances running Airflow. If not set, the first subnet in the VPC's subnet list is used. | `string` | `""` | no |
| key_name | AWS KeyPair name. | `string` | `null` | no |
| load_default_conns | Load the default connections initialized by Airflow. Most consider these unnecessary, which is why the default is to not load them. | `bool` | `false` | no |
| load_example_dags | Load the example DAGs distributed with Airflow. Useful if deploying a stack for demonstrating a few topologies, operators and scheduling strategies. | `bool` | `false` | no |
| private_key | Content of the SSH private key used to run the provisioner. | `string` | `null` | no |
| private_key_path | Path to the SSH private key used to run the provisioner. | `string` | `"~/.ssh/id_rsa"` | no |
| public_key | Content of the SSH public key used to run the provisioner. | `string` | `null` | no |
| public_key_path | Path to the SSH public key to add to AWS. | `string` | `"~/.ssh/id_rsa.pub"` | no |
| rbac | Enable support for Role-Based Access Control (RBAC). | `string` | `false` | no |
| root_volume_delete_on_termination | Whether the volume should be destroyed on instance termination. | `bool` | `true` | no |
| root_volume_ebs_optimized | If true, the launched EC2 instance will be EBS-optimized. | `bool` | `false` | no |
| root_volume_size | The size, in GB, of the root EBS volume. | `string` | `35` | no |
| root_volume_type | The type of volume. Must be one of: standard, gp2, or io1. | `string` | `"gp2"` | no |
| s3_bucket_name | S3 bucket in which to save Airflow logs. | `string` | `""` | no |
| scheduler_instance_type | Instance type for the Airflow Scheduler. | `string` | `"t3.micro"` | no |
| spot_price | The maximum hourly price to pay for EC2 Spot Instances. | `string` | `""` | no |
| tags | Additional tags passed to the terraform-terraform-labels module. | `map(string)` | `{}` | no |
| vpc_id | The ID of the VPC in which the nodes will be deployed. Uses the default VPC if not supplied. | `string` | `null` | no |
| webserver_instance_type | Instance type for the Airflow Webserver. | `string` | `"t3.micro"` | no |
| webserver_port | The port the Airflow webserver listens on. Ports below 1024 can be opened only with root privileges, and the airflow process does not run as root. | `string` | `"8080"` | no |
| worker_instance_count | Number of worker instances to create. | `string` | `1` | no |
| worker_instance_type | Instance type for the Celery Worker. | `string` | `"t3.small"` | no |
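As an illustration of how the sizing inputs in the table combine, the sketch below overrides several defaults for a larger cluster. The instance types, counts, and sizes are arbitrary example values, not recommendations from this module.

```hcl
module "airflow-cluster" {
  source = "powerdatahub/airflow/aws"

  # Required inputs (placeholders)
  cluster_name = "my-airflow"
  db_password  = "your-rds-master-password"
  fernet_key   = "your-fernet-key"

  # Example overrides of documented defaults; values are illustrative only.
  scheduler_instance_type = "t3.small"  # default "t3.micro"
  webserver_instance_type = "t3.small"  # default "t3.micro"
  worker_instance_type    = "t3.medium" # default "t3.small"
  worker_instance_count   = 3           # default 1

  db_instance_type     = "db.t3.small" # default "db.t2.micro"
  db_allocated_storage = 50            # default 20
  root_volume_size     = 50            # default 35, in GB
}
```

Several of these inputs are typed as `string` in the table; Terraform converts the numeric literals automatically.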

| Name | Description |
|---|---|
| database_endpoint | Endpoint to connect to the RDS metadata DB |
| database_username | Username to connect to the RDS metadata DB |
| this_cluster_security_group_id | The ID of the cluster security group |
| this_database_security_group_id | The ID of the database security group |
| webserver_admin_url | URL for the Airflow Webserver Admin |
| webserver_public_ip | Public IP address for the Airflow Webserver instance |
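The outputs above can be re-exported from the root module so they appear after `terraform apply`. This sketch assumes the module block is named `airflow-cluster`, as in the usage example; the output names on the left are hypothetical.

```hcl
# Hypothetical root-module outputs that surface values from the module.
output "airflow_webserver_url" {
  description = "URL for the Airflow Webserver Admin"
  value       = module.airflow-cluster.webserver_admin_url
}

output "airflow_webserver_public_ip" {
  description = "Public IP address of the Airflow Webserver instance"
  value       = module.airflow-cluster.webserver_public_ip
}

output "airflow_database_endpoint" {
  description = "Endpoint of the RDS metadata DB"
  value       = module.airflow-cluster.database_endpoint
}
```

After applying, `terraform output airflow_webserver_url` prints the value.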