Focal Inverse Distance Transform Map


An official implementation of the Focal Inverse Distance Transform map. We propose a novel map, the Focal Inverse Distance Transform (FIDT) map, which represents the location of each head.


Paper Link

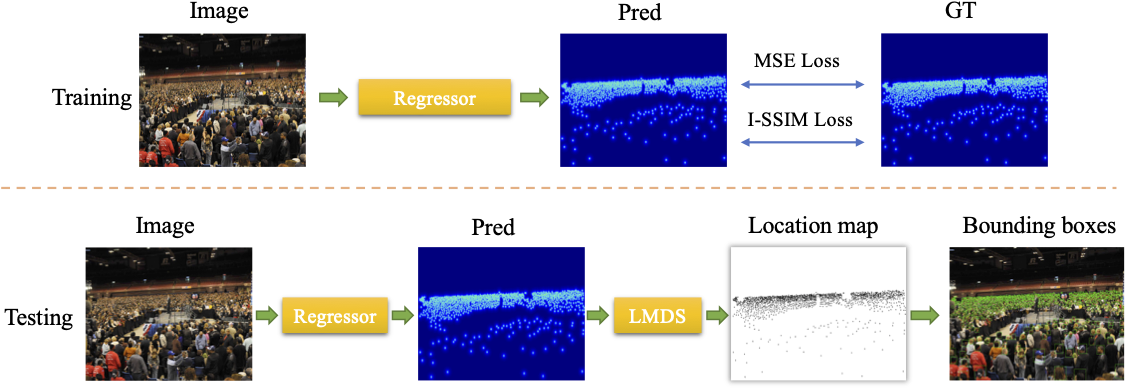

Overview

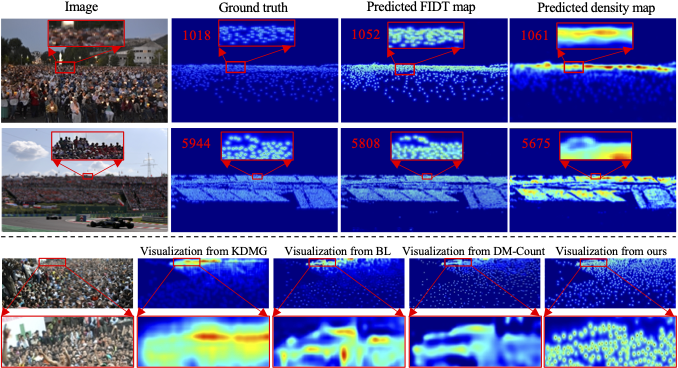

Visualizations

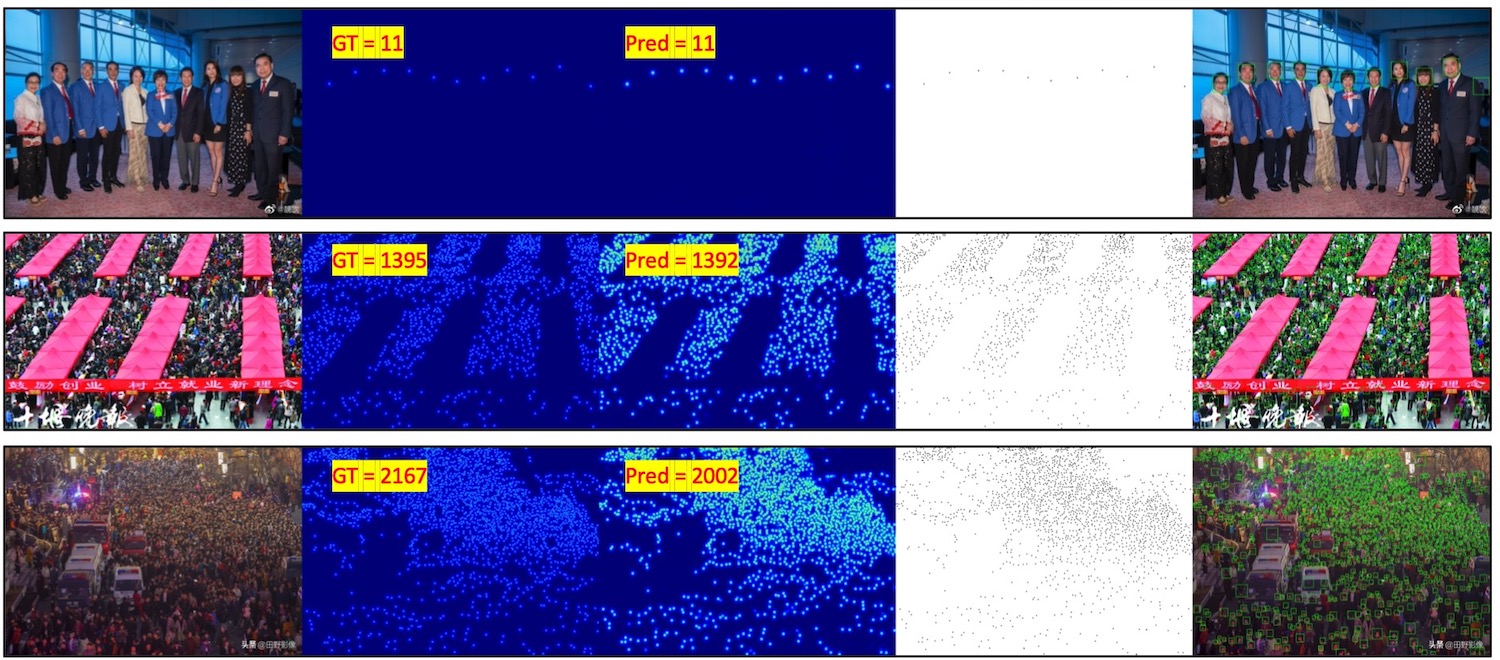

Visualizations for bounding boxes

Progress

- Testing code (2021.3.16)

- Baseline training code (2021.4.29)

- Pretrained models

  - ShanghaiA (2021.3.16)

  - ShanghaiB (2021.3.16)

  - UCF_QNRF (2021.4.29)

  - JHU-Crowd++ (2021.4.29)

- Bounding-box visualizations (2021.3.24)

- Video demo (2021.3.29)

Environment

python >=3.6

pytorch >=1.4

opencv-python >=4.0

scipy >=1.4.0

h5py >=2.10

pillow >=7.0.0

imageio >=1.18

nni >=2.0 (python3 -m pip install --upgrade nni)
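The version floors above can be checked from Python before running anything else. The sketch below is a hypothetical helper, not part of the repository. It assumes Python 3.8+ for `importlib.metadata` (newer than the 3.6 minimum) and assumes the distribution names `torch`, `opencv-python` and `Pillow`, so an OpenCV installed as `opencv-python-headless` will be reported as missing.

```python
from importlib import metadata  # available in Python 3.8+

# Minimum versions copied from the Environment list.
# Distribution names are assumptions: PyTorch installs as "torch", Pillow as "Pillow".
MIN_VERSIONS = {
    "torch": "1.4",
    "opencv-python": "4.0",
    "scipy": "1.4.0",
    "h5py": "2.10",
    "Pillow": "7.0.0",
    "imageio": "1.18",
    "nni": "2.0",
}


def version_tuple(version):
    """Turn '1.8.1+cu111' into (1, 8, 1); pre-release suffixes such as 'rc1' are ignored."""
    parts = []
    for piece in version.split("+")[0].split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        parts.append(int(digits) if digits else 0)
    return tuple(parts)


def meets_minimum(installed, required):
    """Compare versions numerically, padding the shorter tuple with zeros."""
    a, b = version_tuple(installed), version_tuple(required)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def check_environment():
    """Return {distribution: (installed_version_or_None, ok)}."""
    report = {}
    for dist, minimum in MIN_VERSIONS.items():
        try:
            installed = metadata.version(dist)
        except metadata.PackageNotFoundError:
            report[dist] = (None, False)
            continue
        report[dist] = (installed, meets_minimum(installed, minimum))
    return report


if __name__ == "__main__":
    for dist, (installed, ok) in check_environment().items():
        print(f"{dist:15s} {installed or 'missing':12s} {'OK' if ok else 'CHECK'}")
```

Run it as a script; any line marked CHECK points at a package to install or upgrade.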

Datasets

- Download the ShanghaiTech dataset from Baidu-Disk (password: cjnx) or Google-Drive

- Download the UCF-QNRF dataset from here

- Download the JHU-Crowd++ dataset from here

- Download the NWPU-Crowd dataset from Baidu-Disk (password: 3awa) or Google-Drive

Generate FIDT Ground-Truth

cd data

python fidt_generate_xx.py

Replace “xx” with the dataset name: sh, jhu, qnrf, or nwpu. Remember to change the dataset path in the script first.
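As we read the paper, the FIDT map is I(x, y) = 1 / (P(x, y)^(α·P(x, y) + β) + C), where P(x, y) is the Euclidean distance from pixel (x, y) to the nearest annotated head. The sketch below is a hypothetical re-implementation using SciPy's `distance_transform_edt`, not the code in `fidt_generate_xx.py`. The defaults α = 0.02, β = 0.75 and C = 1 are assumptions to verify against the script, as is clamping out-of-range points.

```python
import numpy as np
from scipy.ndimage import distance_transform_edt


def fidt_map(points, height, width, alpha=0.02, beta=0.75, c=1.0):
    """Sketch of an FIDT map: I = 1 / (P ** (alpha * P + beta) + c).

    points: iterable of (x, y) head coordinates in pixels.
    P is the Euclidean distance from each pixel to its nearest head.
    alpha, beta and c are assumed defaults; check fidt_generate_xx.py.
    """
    # Background mask: True everywhere except at annotated head pixels.
    mask = np.ones((height, width), dtype=bool)
    for x, y in points:
        # Clamp coordinates into the image (assumption; the real script may skip them).
        col = min(max(int(x), 0), width - 1)
        row = min(max(int(y), 0), height - 1)
        mask[row, col] = False
    if mask.all():
        # No heads: the map is all zeros.
        return np.zeros((height, width), dtype=np.float64)
    # For every True pixel, distance to the nearest False (head) pixel; heads get 0.
    dist = distance_transform_edt(mask)
    # The exponent grows with distance, so the response decays faster far from heads.
    return 1.0 / (np.power(dist, alpha * dist + beta) + c)
```

With C = 1 each head pixel gets the value 1, and a pixel one step away gets 1 / (1 + 1) = 0.5, so heads stay sharp, well-separated peaks even in dense crowds.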

Model

Download the pretrained models from Baidu-Disk (password: gqqm) or OneDrive

Quick test

git clone https://github.com/dk-liang/FIDTM.git

Download the datasets and the pretrained models

Generate the FIDT map ground truth

Generate the image file list: python make_npydata.py

Test example:

python test.py --dataset ShanghaiA --pre ./model/ShanghaiA/model_best.pth --gpu_id 0

python test.py --dataset ShanghaiB --pre ./model/ShanghaiB/model_best.pth --gpu_id 1

python test.py --dataset UCF_QNRF --pre ./model/UCF_QNRF/model_best.pth --gpu_id 2

python test.py --dataset JHU --pre ./model/JHU/model_best.pth --gpu_id 3
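Since the FIDT map peaks at each head location, a count can be read off a predicted map by keeping its local maxima. The following NumPy/SciPy sketch shows such a local-maximum count. `count_heads` is a hypothetical name, and the 3x3 window, the relative threshold of 100/255 of the peak and the 0.1 cutoff for an empty image are assumptions to be checked against `test.py`.

```python
import numpy as np
from scipy.ndimage import maximum_filter


def count_heads(fidt, rel_threshold=100.0 / 255.0, min_peak=0.1):
    """Count heads as local maxima of a predicted FIDT map.

    Returns (count, points) where points is an (N, 2) integer array of (x, y).
    rel_threshold and min_peak are assumed values for illustration.
    """
    peak = float(fidt.max()) if fidt.size else 0.0
    if peak < min_peak:
        # A very weak map is treated as containing no heads.
        return 0, np.empty((0, 2), dtype=np.intp)
    # A pixel is a local maximum if it equals the maximum of its 3x3 window.
    local_max = maximum_filter(fidt, size=3, mode="constant", cval=0.0)
    # Discard weak maxima relative to the strongest response in the image.
    keep = (fidt == local_max) & (fidt >= rel_threshold * peak)
    rows, cols = np.nonzero(keep)
    points = np.stack([cols, rows], axis=1)
    return len(points), points
```

The returned points double as localization predictions, which is what the bounding-box and evaluation steps consume.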

If you want to generate bounding boxes,

python test.py --test_dataset ShanghaiA --pre model_best.pth --visual True

(remember to change the dataset path in test.py)

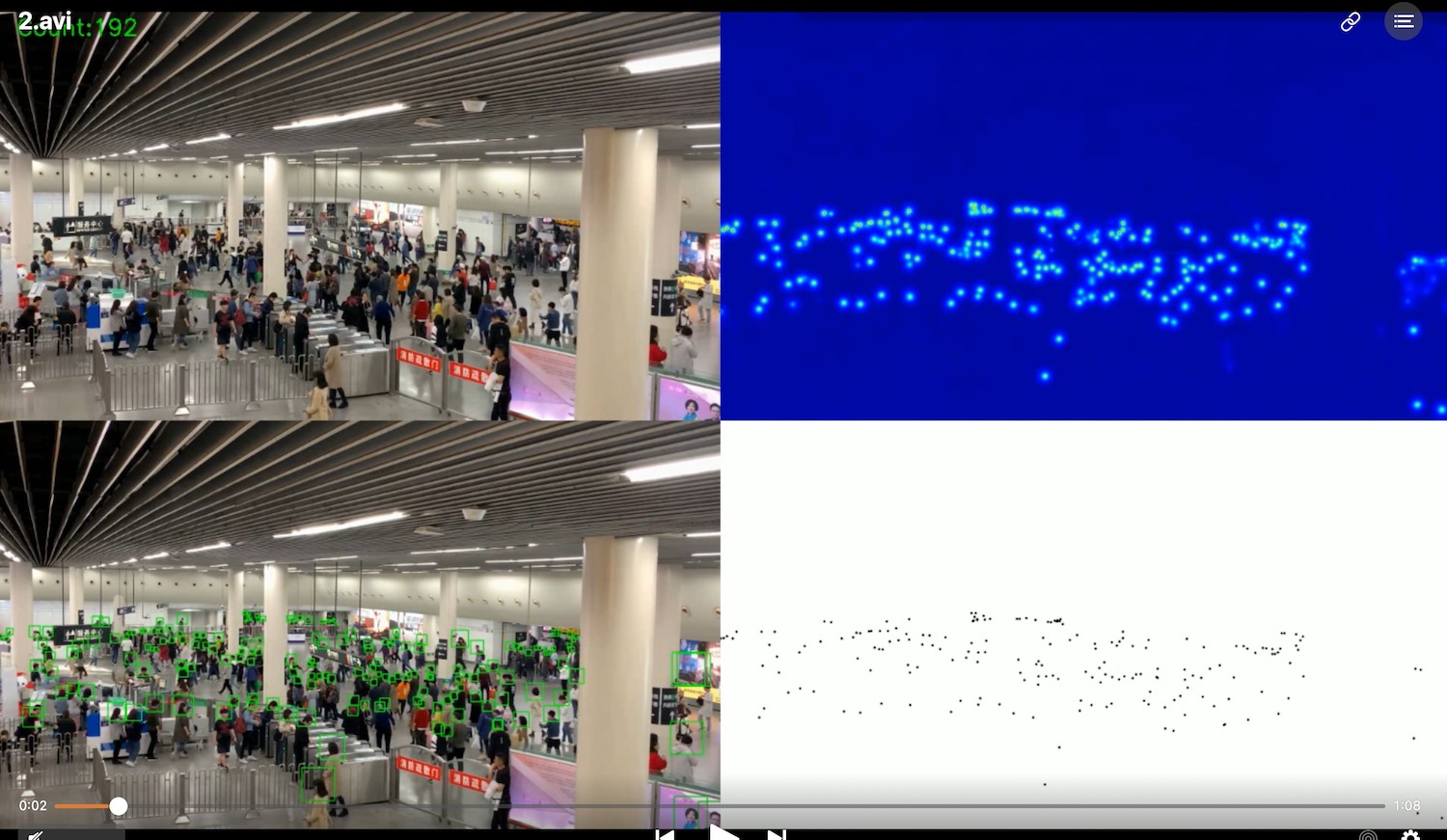
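Bounding boxes can be derived from the predicted head points alone. One simple approach, sketched below under our own assumptions, sizes a square box around each point from the mean distance to its k nearest neighbouring heads, so boxes shrink in dense regions. `knn_boxes`, k = 3, the 0.3 scale and the single-point fallback are illustrative choices, not values taken from `test.py`.

```python
import numpy as np
from scipy.spatial import cKDTree


def knn_boxes(points, k=3, scale=0.3, fallback=8.0):
    """Square boxes centred on head points, sized by nearest-neighbour distance.

    points: (N, 2) array-like of (x, y). Returns (N, 4) boxes as (x1, y1, x2, y2).
    k, scale and fallback are illustrative assumptions.
    """
    pts = np.asarray(points, dtype=float).reshape(-1, 2)
    if len(pts) == 0:
        return np.empty((0, 4))
    if len(pts) == 1:
        # No neighbours to measure against: use a fixed half-size.
        half = np.array([fallback])
    else:
        kk = min(k, len(pts) - 1)
        # Ask for kk + 1 neighbours because each point's nearest neighbour is itself.
        dists, _ = cKDTree(pts).query(pts, k=kk + 1)
        half = scale * dists[:, 1:].mean(axis=1)
    x, y = pts[:, 0], pts[:, 1]
    return np.stack([x - half, y - half, x + half, y + half], axis=1)
```

Each row can be passed to `cv2.rectangle` as the two corners `(x1, y1)` and `(x2, y2)` after rounding to integers.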

If you want to test a video,

python video_demo.py --pre model_best.pth --video_path demo.mp4

(The output video is saved as ./demo.avi. By default, frames are downscaled by a factor of two for inference; you can change the input size in video_demo.py.)

Visit bilibili or YouTube to watch the video demonstration.


More configuration options are listed in config.py.

Evaluating localization performance

cd ./local_eval

Generate the ground-truth coordinates (remember to change the dataset path):

python A_gt_generate.py

python eval.py

We use two thresholds (4 and 8) for evaluation. The evaluation code is taken from NWPU.
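To make the thresholds concrete: in the sketch below, a prediction counts as a true positive when it can be paired one-to-one with a ground-truth point no farther than the threshold, and precision, recall and F1 follow from the number of such pairs. The hypothetical `localization_scores` uses Hungarian matching via `scipy.optimize.linear_sum_assignment`; it is a simplified stand-in, and the NWPU evaluation code used by `eval.py` may differ in its matching rules.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment
from scipy.spatial.distance import cdist


def localization_scores(pred, gt, threshold):
    """Precision, recall and F1 for point localization at one distance threshold.

    pred, gt: (N, 2) and (M, 2) array-likes of (x, y) points.
    Simplified stand-in, not the NWPU evaluation code itself.
    """
    pred = np.asarray(pred, dtype=float).reshape(-1, 2)
    gt = np.asarray(gt, dtype=float).reshape(-1, 2)
    tp = 0
    if len(pred) and len(gt):
        dist = cdist(pred, gt)
        # Cost 0 for a pair within the threshold, 1 otherwise, so the optimal
        # assignment maximises the number of one-to-one matches within it.
        cost = (dist > threshold).astype(float)
        rows, cols = linear_sum_assignment(cost)
        tp = int(np.sum(dist[rows, cols] <= threshold))
    precision = tp / len(pred) if len(pred) else 0.0
    recall = tp / len(gt) if len(gt) else 0.0
    f1 = 2 * precision * recall / (precision + recall) if tp else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "tp": tp}
```

Calling it once with `threshold=4` and once with `threshold=8` gives the two operating points reported above.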

Training

The training strategy is simple: in any regressor, replace the density map with the FIDT map as the training target.

If you want to train with HRNet, first download the ImageNet-pretrained HRNet models from the official link, then set the pretrained model path in HRNET/config.py (__C.PRE_HR_WEIGHTS).

We currently provide the baseline training code; the I-SSIM loss will be released once the review is complete.

Training baseline example:

python train_baseline.py --dataset ShanghaiA --crop_size 256 --save_path ./save_file/ShanghaiA

python train_baseline.py --dataset ShanghaiB --crop_size 256 --save_path ./save_file/ShanghaiB

python train_baseline.py --dataset UCF_QNRF --crop_size 512 --save_path ./save_file/UCF_QNRF

python train_baseline.py --dataset JHU --crop_size 512 --save_path ./save_file/JHU
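The `--crop_size` flag suggests that training samples are random crops. Whatever the regressor, such a crop must cut the same window from the image and from its FIDT map so that the peaks stay aligned with the heads. Below is a minimal NumPy sketch; `paired_random_crop` is a hypothetical helper, it assumes the FIDT map has the image's height and width, and how `train_baseline.py` actually crops, pads or augments is not covered here.

```python
import numpy as np


def paired_random_crop(image, fidt, crop_size, rng=None):
    """Cut the same random window out of an image (H, W, C) and its FIDT map (H, W).

    If the image is smaller than crop_size along an axis, the whole axis is kept
    (an assumption; a training script may pad or resize instead).
    """
    if image.shape[:2] != fidt.shape:
        raise ValueError("image and FIDT map must have the same height and width")
    rng = np.random.default_rng() if rng is None else rng
    h, w = fidt.shape
    ch, cw = min(crop_size, h), min(crop_size, w)
    # The upper bound of integers() is exclusive, so every valid corner can be drawn.
    top = int(rng.integers(0, h - ch + 1))
    left = int(rng.integers(0, w - cw + 1))
    window = (slice(top, top + ch), slice(left, left + cw))
    return image[window], fidt[window]
```

Passing a seeded `np.random.default_rng(seed)` makes the crops reproducible across runs.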

For ShanghaiTech, a single GPU with 8 GB of memory is enough for training. For the other datasets, use a single GPU with 24 GB of memory or multiple GPUs.

We have reorganized the code, and it usually gives better results than those reported in the original manuscript.

Reference

If you find this project useful for your research, please cite:

@article{liang2021focal,
  title={Focal Inverse Distance Transform Maps for Crowd Localization and Counting in Dense Crowd},
  author={Liang, Dingkang and Xu, Wei and Zhu, Yingying and Zhou, Yu},
  journal={arXiv preprint arXiv:2102.07925},
  year={2021}
}