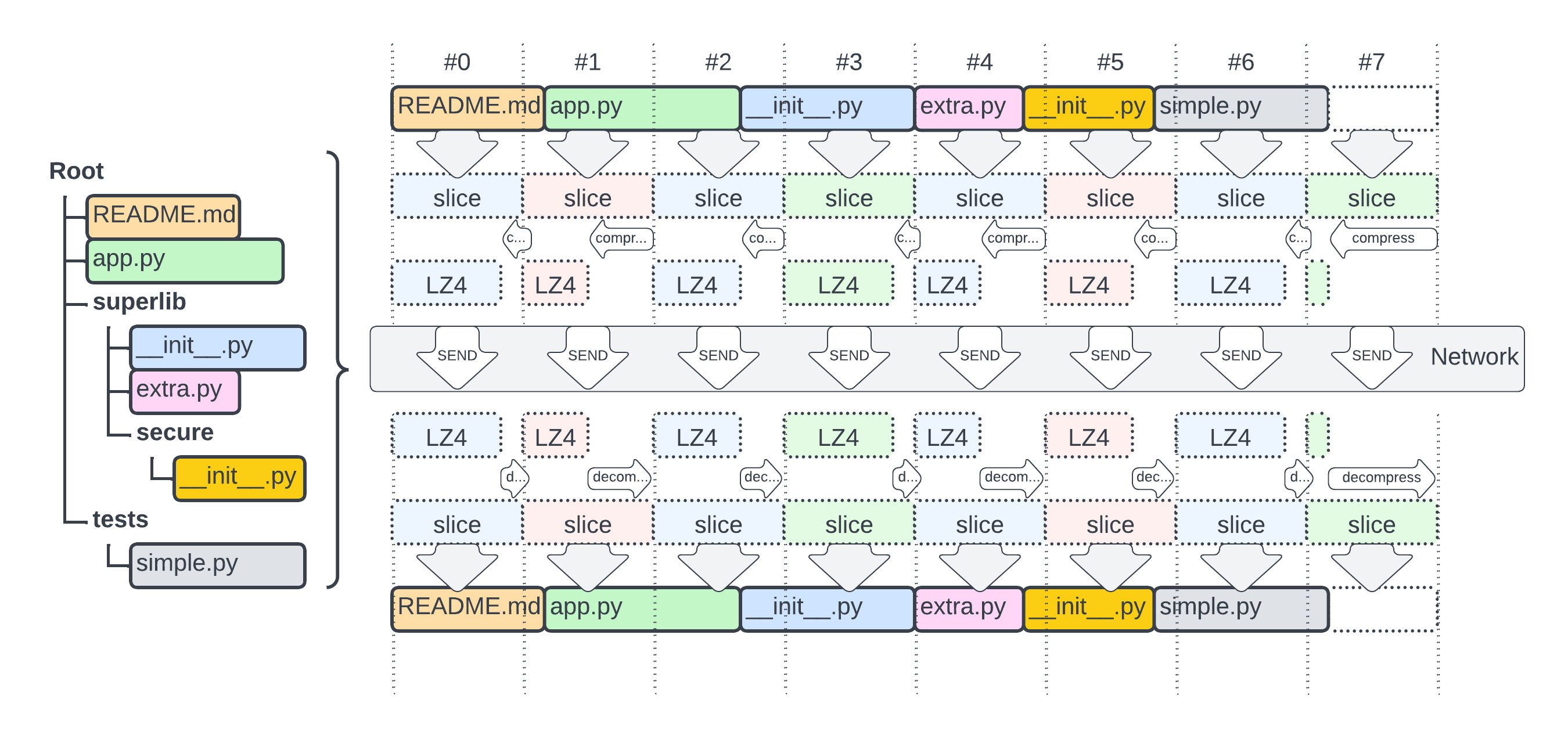

The first image shows how the data are treated. All existing files are scanned, and their size and hash (md5) are collected and put into a DB, which forms a virtual tar file of concatenated files.

This tar_like archive is then virtually split into blocks of arbitrary size and can be transferred over to the receiving side. You can use any number of uploading agents as long as you are able to coordinate them.
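The virtual split can be sketched as plain interval arithmetic. The snippet below is a minimal illustration, not the project's code: the `Entry` type and the `block_slices` helper are hypothetical names, and it assumes `offset_end` is exclusive (i.e. `offset + size`).

```python
from typing import List, NamedTuple, Tuple


class Entry(NamedTuple):
    """One indexed file (hypothetical type, for illustration only)."""
    path: str
    offset: int      # first byte of the file within the virtual archive
    offset_end: int  # assumed exclusive: offset + size


def block_slices(entries: List[Entry], block_no: int,
                 block_size: int) -> List[Tuple[str, int, int]]:
    """Return (path, start_in_file, length) pieces that make up one block."""
    start = block_no * block_size
    end = start + block_size
    pieces = []
    for e in entries:
        # Intersect the block's byte range with the file's byte range.
        lo = max(start, e.offset)
        hi = min(end, e.offset_end)
        if lo < hi:
            pieces.append((e.path, lo - e.offset, hi - lo))
    return pieces


if __name__ == "__main__":
    demo = [Entry("a.txt", 0, 6), Entry("b.txt", 6, 10), Entry("c.txt", 10, 25)]
    for n in range(4):
        print(n, block_slices(demo, n, 8))
```

A block may therefore contain several small files, and a large file may span several blocks; the last block is simply shorter than `block_size`.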

At the time of writing there is only a naïve way of doing that, via the Python multiprocessing.Pool.map function. Some multi-host mechanism should be employed to enable scale-out (e.g. Redis or AMQP).

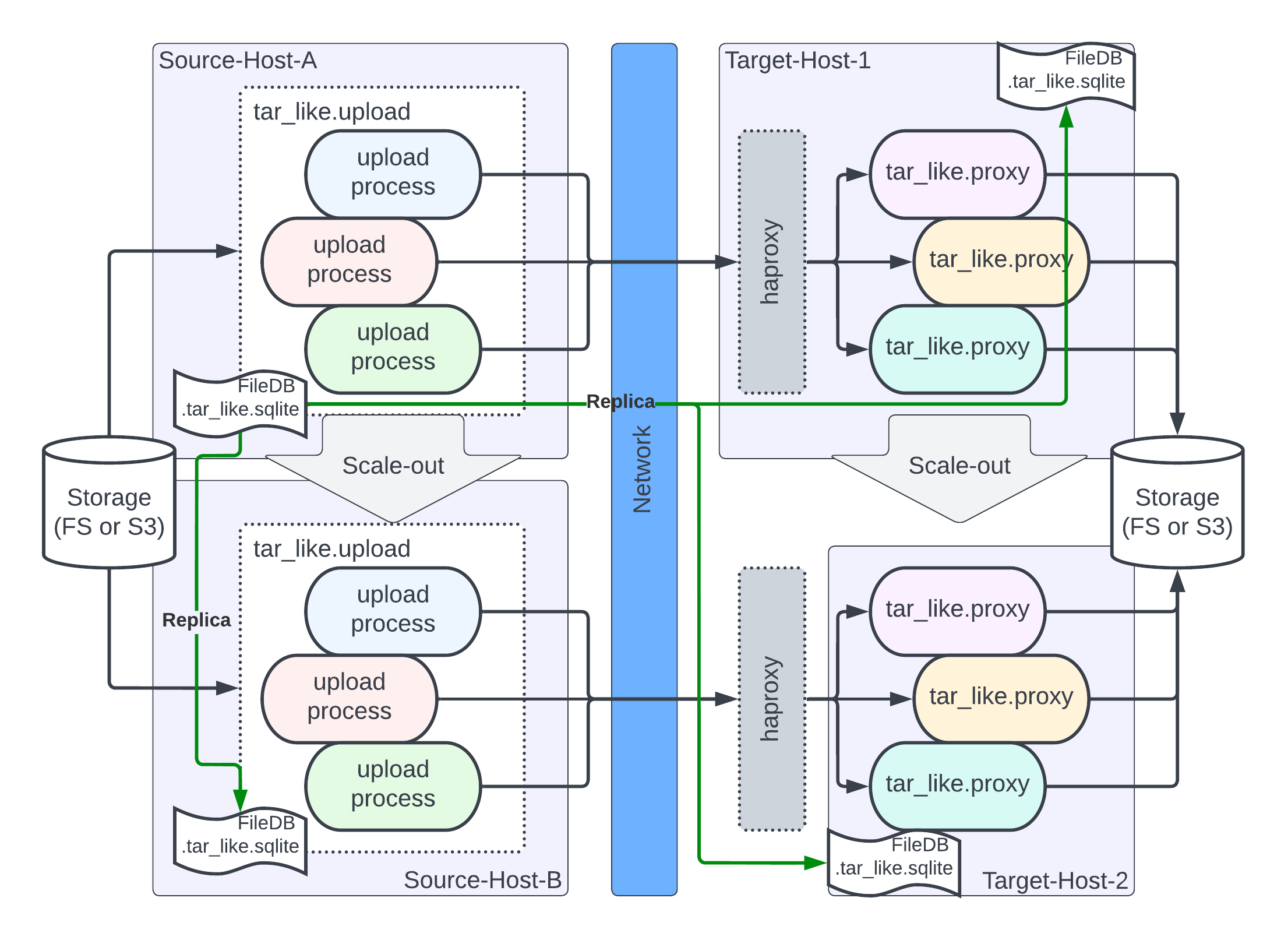

There is also a process and data-source/sink perspective, which is depicted in the second image. Scale-up, i.e. adding more processes on the upload side, is pretty mature. Scale-out to more hosts is possible, but there is no mechanism to split the list of blocks to upload across multiple hosts. Data-source sharding and collocation should also be considered. On the receiving side there is much more room for improvement, although the key caveat at the moment is the need for a way to share the FileDB, as it is the only shared resource needed for decoding the arriving data/segments.
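Until a real coordination mechanism exists, one manual way to scale out is to give each host its own contiguous range of blocks. The sketch below only computes such ranges; `shard_blocks` is a hypothetical helper, and it assumes that the last block number passed to the uploader is inclusive, which should be verified against the actual tool.

```python
from typing import List, Tuple


def shard_blocks(total_size: int, block_size: int,
                 hosts: int) -> List[Tuple[int, int]]:
    """Split block numbers 0..n-1 into contiguous (first, last) ranges."""
    n_blocks = -(-total_size // block_size)  # ceiling division
    base, extra = divmod(n_blocks, hosts)
    ranges = []
    first = 0
    for h in range(hosts):
        # The first `extra` hosts take one additional block each.
        count = base + (1 if h < extra else 0)
        if count == 0:
            continue  # more hosts than blocks
        ranges.append((first, first + count - 1))
        first += count
    return ranges


if __name__ == "__main__":
    block_size = 10_000_000
    for first, last in shard_blocks(1_000_000_000, block_size, 3):
        # Assumption: -l is an inclusive last block number.
        print(f"python -mtar_like.upload -f {first} -l {last} -s {block_size}")
```

Each printed command would then be run on a different host, all of them holding the same index DB.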

Folder transfer in a tar-like fashion. It consists of a few components that let the process be streamlined.

- `index` phase: create an index of files to be transferred, capturing their path, size and hash (md5)

- `proxy`: the receiving end, which has the DB created above at hand

- `upload`: the sending end, which also has the DB created above at hand

- `check`: optional tool that verifies that the files match the hashes in the DB

The whole process creates a virtual tar-like file inside the DB, which is for now just a simple concatenation of files.

In particular it just computes the position of each file within the concatenated file (offset and offset_end).
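The offset computation can be illustrated with a short sketch. The table name `tar` and the `offset`/`offset_end` columns appear in this document; the remaining column names, the traversal order, and the `build_index` helper itself are assumptions made for illustration and may differ from what `tar_like.index` really does.

```python
import hashlib
import os
import sqlite3


def md5_of(path: str, chunk: int = 1 << 20) -> str:
    """Hash a file in chunks so large files do not need to fit in memory."""
    h = hashlib.md5()
    with open(path, "rb") as f:
        for part in iter(lambda: f.read(chunk), b""):
            h.update(part)
    return h.hexdigest()


def build_index(base_folder: str, db_path: str) -> sqlite3.Connection:
    """Walk base_folder and record path, size, md5 and archive offsets."""
    con = sqlite3.connect(db_path)
    # Assumed schema; only `tar`, `offset` and `offset_end` come from the text.
    con.execute(
        "CREATE TABLE IF NOT EXISTS tar ("
        "path TEXT PRIMARY KEY, size INTEGER, md5 TEXT, "
        "offset INTEGER, offset_end INTEGER)"
    )
    con.execute("DELETE FROM tar")  # comparable to the -c (clean) option
    offset = 0
    for root, dirs, files in os.walk(base_folder):
        dirs.sort()  # deterministic traversal order
        for name in sorted(files):
            full = os.path.join(root, name)
            size = os.path.getsize(full)
            rel = os.path.relpath(full, base_folder)
            con.execute(
                "INSERT INTO tar VALUES (?, ?, ?, ?, ?)",
                (rel, size, md5_of(full), offset, offset + size),
            )
            offset += size  # the next file starts where this one ends
    con.commit()
    return con
```

The running `offset` is the only state needed: each file's `offset_end` becomes the next file's `offset`.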

This virtual tar file (an SQLite database) is then shared between the sender and the receiver.

The final step is to start a tar_like.proxy on the receiving end and tar_like.upload on the sending side.

The sender tar_like.upload collects the files into a segment in memory (of a certain arbitrary block_size, e.g. 10'000'000 = 10MB). This segment is then compressed using LZ4 and uploaded with the PUT method to tar_like.proxy. As the proxy knows the structure, it looks up the segment index from the URL provided and, after decompression, splits the segment back into files.
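The receiving step of scattering a segment back into files can be sketched as follows. This is a simplified illustration under stated assumptions: the HTTP handling and LZ4 decompression are left out, `write_segment` is a hypothetical helper, and `entries` stands for `(path, offset, offset_end)` rows read from the shared DB with an exclusive `offset_end`.

```python
import os
from typing import Iterable, Tuple


def write_segment(base_folder: str,
                  entries: Iterable[Tuple[str, int, int]],
                  block_no: int, block_size: int, data: bytes) -> None:
    """Write one already decompressed segment into the files it covers."""
    start = block_no * block_size
    end = start + len(data)  # the last segment may be shorter
    for path, offset, offset_end in entries:
        lo = max(start, offset)
        hi = min(end, offset_end)
        if lo >= hi:
            continue  # this file is not part of the segment
        target = os.path.join(base_folder, path)
        os.makedirs(os.path.dirname(target) or ".", exist_ok=True)
        # Open without truncating so that segments may arrive in any order.
        mode = "r+b" if os.path.exists(target) else "wb"
        with open(target, mode) as f:
            f.seek(lo - offset)                      # position inside the file
            f.write(data[lo - start:hi - start])     # slice inside the segment
```

Because every write goes to an absolute position within its file, segments uploaded by parallel agents do not need to arrive in order.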

Note: the segments are of a fixed size; however, as the structure of a segment is pulled from the DB dynamically, the proxy can accommodate other sizes. In addition, the size of a segment is part of the URL.

It is expected that the files do not change during the upload; otherwise the hashes will not match. This in itself is not a problem, as you can retry, but delta transfer is not yet considered and/or developed (it would need a new index, delta computation, a new upload, and resizing or deleting files as needed).

For ease of execution and portability, the app was also containerized. It uses Docker for this purpose (but Podman should do as well).

As a preparation step, run mkDockerImage.sh to prepare a python:3-based image with dependencies.

Note on containers: an issue was spotted while running in a Docker container, which is most likely caused by too little memory for the Docker VM. It shows up as an error about the read block size not matching the expected (Content-Length) block size.

The following subsections describe the process from indexing, through reception and sending, up to the optional hash check.

CLI Parameters:

    usage: tar_like.index [-h] [-b BASE_FOLDER] [-db DATABASE] [-x EXCLUDE] [-c]

    options:
      -h, --help            show this help message and exit
      -b BASE_FOLDER, --base-folder BASE_FOLDER
                            Specify base folder
      -db DATABASE, --database DATABASE
                            Specify database file (SQLite)
      -x EXCLUDE, --exclude EXCLUDE
                            Exclude regex (repeat as needed)
      -c, --clean           Clean the DB before inserting

An example of how to make an index of this folder:

    python -mtar_like.index -x .git -x __pycache__ -x tmp/ -c

or dockerized: `./r01-index.sh`

- `-x`: exclude a regex pattern matched against the file path

- `-c`: clean the DB before inserting values

To check the resulting SQLite DB:

    sqlite3 ~/.tar_like.sqlite '.mode table' 'SELECT * FROM tar LIMIT 40;'

CLI Parameters:

    usage: tar_like.proxy [-h] [-b BASE_FOLDER] [-db DATABASE] [port]

    positional arguments:
      port                  Specify alternate port [default: 8000]

    options:
      -h, --help            show this help message and exit
      -b BASE_FOLDER, --base-folder BASE_FOLDER
                            Specify base folder
      -db DATABASE, --database DATABASE
                            Specify database file (SQLite)

On the receiving end run:

    python -mtar_like.proxy

or dockerized: `./r02-proxy.sh`

CLI Parameters:

    usage: tar_like.upload [-h] [-b BASE_FOLDER] [--use-s3] [--ignore-done] [--ignore-ca] [-db DATABASE] [-dbp DATABASE_PROGRESS] [-u UPLOAD_URL] [-p NO_PROCESSES]
                           [-f FIRST_BLOCK] [-l LAST_BLOCK] [-s BLOCK_SIZE]

    options:
      -h, --help            show this help message and exit
      -b BASE_FOLDER, --base-folder BASE_FOLDER
                            Specify base folder
      --use-s3              Use S3 backend instead of filesystem (incl. HCP)
      --ignore-done         ignore that something was already uploaded
      --ignore-ca           ignore invalid/unverifiable TLS CA
      -db DATABASE, --database DATABASE
                            Specify database file (SQLite)
      -dbp DATABASE_PROGRESS, --database-progress DATABASE_PROGRESS
                            Specify database file to track progress (SQLite)
      -u UPLOAD_URL, --upload-url UPLOAD_URL
                            Specify upload URL base
      -p NO_PROCESSES, --no-processes NO_PROCESSES
                            Specify number of processes for upload
      -f FIRST_BLOCK, --first-block FIRST_BLOCK
                            Specify first block
      -l LAST_BLOCK, --last-block LAST_BLOCK
                            Specify last block
      -s BLOCK_SIZE, --block-size BLOCK_SIZE
                            Specify block size

On the sending end run:

    python -mtar_like.upload -f 0 -s 100000

or dockerized: `./r03-upload.sh`

where:

- `-f 0` is the number of the first block to send

- `-s 100000` is the size of a block in bytes

There is also an alternative that uses S3 (or a similar interface, e.g. HCP, Hitachi Content Platform) as the source of files. Using Docker, run `./r05-upload_s3.sh`; it requires some environment variables, see .tar_like_rc.example.

CLI Parameters:

    usage: tar_like.check [-h] [-b BASE_FOLDER] [-db DATABASE] [-f FIRST_BLOCK] [-l LAST_BLOCK] [-s BLOCK_SIZE]

    options:
      -h, --help            show this help message and exit
      -b BASE_FOLDER, --base-folder BASE_FOLDER
                            Specify base folder
      -db DATABASE, --database DATABASE
                            Specify database file (SQLite)
      -f FIRST_BLOCK, --first-block FIRST_BLOCK
                            Specify first block
      -l LAST_BLOCK, --last-block LAST_BLOCK
                            Specify last block
      -s BLOCK_SIZE, --block-size BLOCK_SIZE
                            Specify block size

To check the checksums of files on the filesystem against those in the DB:

    python -mtar_like.check

or dockerized: `./r04_check.sh`
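What such a verification amounts to can be sketched in a few lines. This is an illustration only, not the `tar_like.check` implementation: `find_mismatches` is a hypothetical helper, the column names `path`, `size` and `md5` are assumed, and the sketch checks whole files rather than a block range.

```python
import hashlib
import os
import sqlite3
from typing import List


def find_mismatches(base_folder: str, con: sqlite3.Connection) -> List[str]:
    """Return paths that are missing, have a wrong size, or a wrong md5."""
    bad = []
    # Assumed columns: path, size, md5 (plus offset, used for ordering).
    rows = con.execute("SELECT path, size, md5 FROM tar ORDER BY offset")
    for path, size, md5 in rows:
        full = os.path.join(base_folder, path)
        # A cheap size comparison first avoids hashing obviously wrong files.
        if not os.path.isfile(full) or os.path.getsize(full) != size:
            bad.append(path)
            continue
        h = hashlib.md5()
        with open(full, "rb") as f:
            for part in iter(lambda: f.read(1 << 20), b""):
                h.update(part)
        if h.hexdigest() != md5:
            bad.append(path)
    return bad
```

An empty result means every indexed file exists on the receiving side with the recorded size and hash.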

Containers are the easiest way of deploying the tar_like proxy (and other parts). There are ready-made scripts that can be used. To make things customisable, there are a number of environment variables that can override the default values and behavior, as well as a number of parameters that the individual parts accept when run from the command line (including use inside Docker); for those, see the CLI Parameters sections above.

The environment variables with their default values as used by the scripts are:

| Name | Default value | Purpose |
|---|---|---|
| `DDIR` | `$PWD/tmp` | Destination directory (R/W); stores data as well as the .tar_like.sqlite |
| `EX_PYCACERT` | `~/.python-cacert.pem` | The proxy CA bundle (or use `--ignore-ca`) |
| `IN_PYCACERT` | `ca-cert.pem` | The S3/HCP source CA |
| `LOCAL_BIND` | `8000` | The `[IP:]PORT` at which Docker should listen for HTTP access, forwarded to port 8000 inside the tar_like.proxy container |
| `LOCAL_BIND_HTTPS` | `8443` | The `[IP:]PORT` at which Docker should listen for HTTPS access, forwarded to port 8443 inside the haproxy container, which in turn contacts the tar_like.proxy locally via 127.0.0.1:8000 inside the container (same net-ns) |
| `SDIR` | `$PWD` | Source directory where tar_like.index and tar_like.upload look for files (R/O) |
| `UDIR` | `/data/rw/recv-test` | Where the tar_like.proxy puts the uploaded data (R/W) inside the container |
| `UPLOAD_URL` | `http://172.17.0.1:8000/tar` | Used by tar_like.upload to know where to contact the tar_like.proxy |

Note: it is expected that in most cases only these variables need to be modified, not the config files or scripts. The most frequent modification should be `LOCAL_BIND_HTTPS`, eventually `DDIR` along with `SDIR`.

Note: `r06-proxy_with_haproxy.sh` combines two containers, `tar_like.proxy` and `haproxy`, within the same net-ns (network namespace). The `haproxy` container refers to the net-ns by the name of the container, so if you update the first, please also update the second in haproxy/run_ha.sh at the line `--network container:...`.

TODOs:

- add an intermediate hash layer, where hashes (e.g. SHA1) rather than paths are transferred and then reverse-mapped to files on the target machine

- extend the hashes to merkle trees (Merkle DAGs: Structuring Data for the Distributed Web and Merkle Directed Acyclic Graphs)

- consider Rust, Go or C++ for better server/proxy side

- alternative transports (Wiki:Tsunami UDP Protocol also @ Source Forge Proj, UDP-Based Protocol (UDT) also @ Source Forge Web evolution to SRT - Secure Reliable Transport, ref. IBM Aspera FASP or Data Expedition Fast File Transfer)

- SyncThing, Resilio (formerly BTSync, aka BitTorrent Sync)

- Multicast PGM and Clonezilla deprecated: partimage, fsarchiver, partclone

- rclone cloud backends