A minimal PyTorch implementation of VGG conv-based perceptual loss (not the VGG relu-based perceptual loss).
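The difference between conv-based and ReLU-based features can be illustrated with a small sketch. It assumes a toy two-layer stack (`features`) standing in for VGG16 and a hypothetical helper `collect`; it is not the implementation used by `VGG16ConvLoss`. Conv-based features are read right after a convolution, before the activation, so they can be negative. ReLU-based features are read after the activation and are clamped at zero.

```python
import torch
import torch.nn as nn

# Toy stand-in for the first VGG layers (assumption: the real VGG16 is much deeper).
# inplace=False matters here: an in-place ReLU would overwrite the stored conv output.
features = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(inplace=False),
    nn.Conv2d(8, 8, kernel_size=3, padding=1),
    nn.ReLU(inplace=False),
)


def collect(x, layer_type):
    """Run x through `features` and keep the output of every layer of `layer_type`."""
    outs = []
    for layer in features:
        x = layer(x)
        if isinstance(layer, layer_type):
            outs.append(x)
    return outs


torch.manual_seed(0)
x = torch.rand(1, 3, 64, 64) * 2 - 1  # dummy image in [-1, 1]

with torch.no_grad():
    conv_feats = collect(x, nn.Conv2d)  # pre-activation features (signed)
    relu_feats = collect(x, nn.ReLU)    # post-activation features (non-negative)

print("min conv feature:", conv_feats[0].min().item())
print("min relu feature:", relu_feats[0].min().item())
```

If a network uses `nn.ReLU(inplace=True)`, the conv output would need to be cloned before the activation runs, otherwise the stored tensor ends up holding the ReLU output.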

# pip

pip install git+https://github.com/PeterouZh/HRInversion.git

# local

git clone https://github.com/PeterouZh/HRInversion.git

pip install -e HRInversion

import torch

import torch.nn.functional as F

from hrinversion import VGG16ConvLoss

bs = 1

# Note that the shortest edge of the image must be larger than 32 pixels.

img_size = 1024

# Dummy data

target = (torch.rand(bs, 3, img_size, img_size).cuda() - 0.5) * 2 # [-1, 1]

pred = (torch.rand(bs, 3, img_size, img_size).cuda() - 0.5) * 2 # [-1, 1]

pred.requires_grad_(True)

# VGG conv-based perceptual loss

percep_loss = VGG16ConvLoss().cuda().requires_grad_(False)

# high-level perceptual loss: d_h
# percep_loss = VGG16ConvLoss(fea_dict={'features_2': 0., 'features_7': 0., 'features_14': 0.,
#                                       'features_21': 0.0002, 'features_28': 0.0005,
#                                       }).cuda().requires_grad_(False)

fea_target = percep_loss(target)

fea_pred = percep_loss(pred)

loss = F.mse_loss(fea_pred, fea_target, reduction='sum') / bs # normalized by batch size

loss.backward()

Inversion (please zoom in to see artifacts.)

inversion.mp4

LSO for ukiyo_e

lso_ukiyo_e_compressed.mp4

LSO for disney

lso_disney_compressed.mp4

Panorama

HRInversion_panorama.mp4

- Prepare models

# Download StyleGAN2 models

wget https://nvlabs-fi-cdn.nvidia.com/stylegan2-ada-pytorch/pretrained/transfer-learning-source-nets/ffhq-res1024-mirror-stylegan2-noaug.pkl -P datasets/pretrained/

wget https://nvlabs-fi-cdn.nvidia.com/stylegan2-ada-pytorch/pretrained/metrics/vgg16.pt -P datasets/pretrained/

tree datasets/

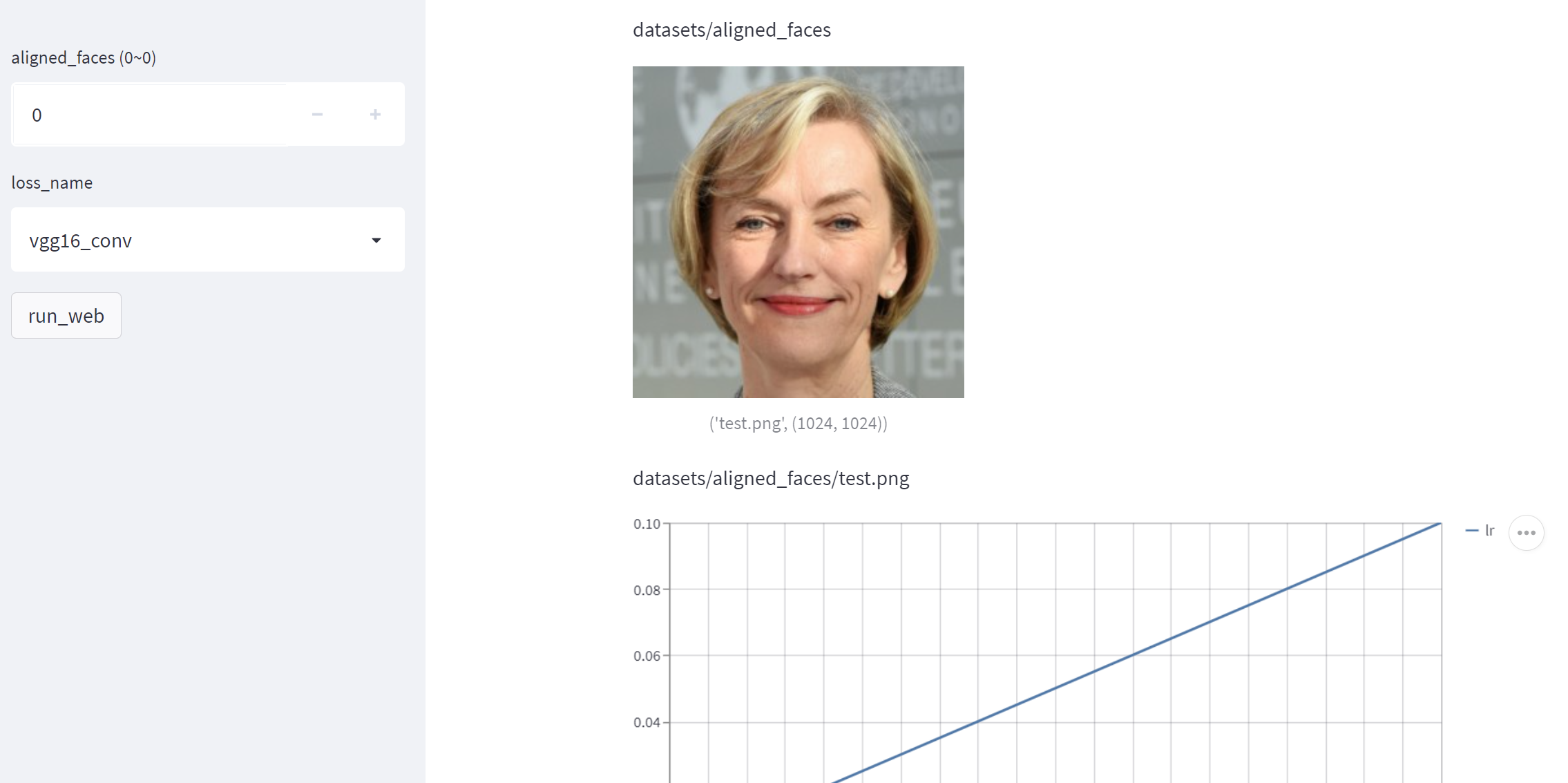

datasets/
├── aligned_faces
│   └── test.png
└── pretrained
    ├── ffhq-res1024-mirror-stylegan2-noaug.pkl
    └── vgg16.pt
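Before starting the demo, it can help to confirm that the layout above is in place. The following is a minimal sketch using only the standard library; the helper name `missing_files` is hypothetical and not part of the repository, and the file list is copied from the tree listing.

```python
from pathlib import Path

# Relative paths taken from the tree listing above.
EXPECTED_FILES = [
    "aligned_faces/test.png",
    "pretrained/ffhq-res1024-mirror-stylegan2-noaug.pkl",
    "pretrained/vgg16.pt",
]


def missing_files(root="datasets"):
    """Return the expected files that are absent under `root`."""
    root = Path(root)
    return [rel for rel in EXPECTED_FILES if not (root / rel).is_file()]


if __name__ == "__main__":
    missing = missing_files()
    if missing:
        print("Missing files:", ", ".join(missing))
    else:
        print("All expected files are present.")
```

Run it from the repository root, so that the relative `datasets` path resolves to the directory shown above.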

- Start a web demo:

streamlit run --server.port 8501 \
  hrinversion/scripts/projector_web.py -- \
  --cfg_file hrinversion/configs/projector_web.yaml \
  --command projector_web \
  --outdir results/projector_web

- (optional) Debug the script with this command:

python hrinversion/scripts/projector_web.py \
  --cfg_file hrinversion/configs/projector_web.yaml \
  --command projector_web \
  --outdir results/projector_web \
  --debug True
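Both commands hand the same flags to the script; in the `streamlit run` form, everything after the bare `--` is forwarded to the script rather than consumed by Streamlit. As a rough illustration of how flags of this shape can be parsed, here is a sketch with `argparse`. It is an assumption for illustration only and does not reproduce the actual argument handling in `projector_web.py`; `str2bool` and `build_parser` are hypothetical names.

```python
import argparse


def str2bool(value):
    """Convert 'True'/'False'-style strings to bool (argparse's type=bool would not)."""
    v = value.strip().lower()
    if v in {"true", "1", "yes"}:
        return True
    if v in {"false", "0", "no"}:
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


def build_parser():
    """Hypothetical parser mirroring the flags used in the commands above."""
    parser = argparse.ArgumentParser(description="flag-parsing sketch")
    parser.add_argument("--cfg_file", required=True)
    parser.add_argument("--command", required=True)
    parser.add_argument("--outdir", required=True)
    parser.add_argument("--debug", type=str2bool, default=False)
    return parser


# Parse the same flags as the debug command above.
args = build_parser().parse_args([
    "--cfg_file", "hrinversion/configs/projector_web.yaml",
    "--command", "projector_web",
    "--outdir", "results/projector_web",
    "--debug", "True",
])
print(args.command, args.outdir, args.debug)
```

The explicit `str2bool` converter is needed because `--debug True` passes a string, and `bool("False")` would evaluate to `True`.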

- Results

TBD

- stylegan2-ada from https://github.com/NVlabs/stylegan2-ada-pytorch