Click-BERT: Clickbait Detector with Bidirectional Encoder Representations from Transformers

Course Project for EECS 498 NLP, Winter 2021

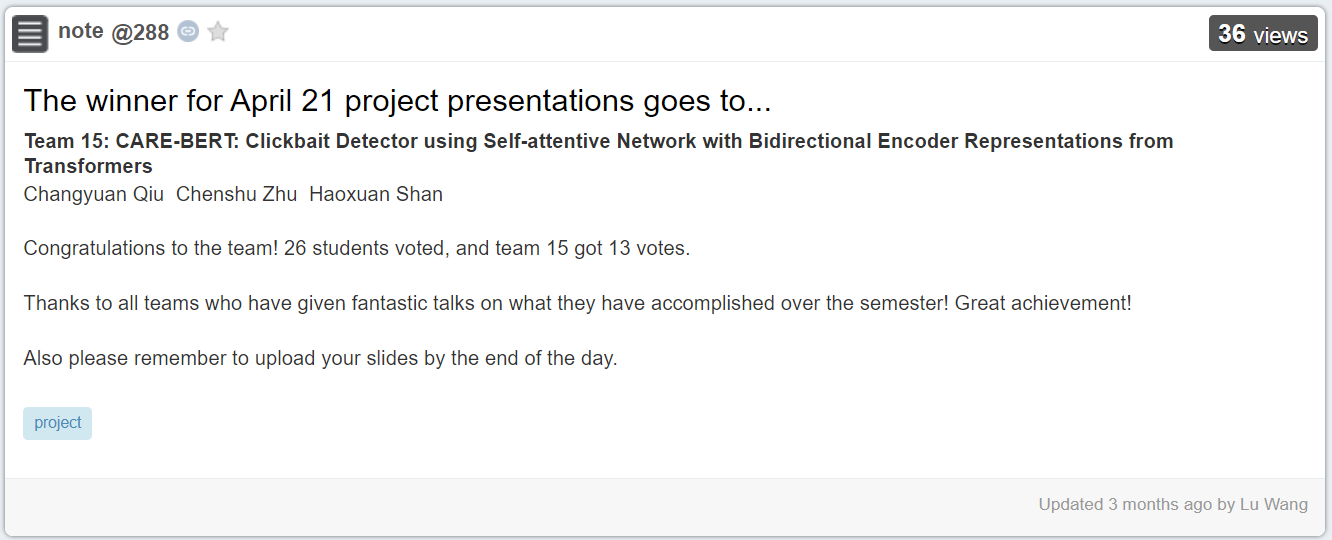

Team Members: Changyuan Qiu (@PeterQiu0516), Chenshu Zhu (@Jupiter), Haoxuan Shan (@shanhx2000)

Clickbait is a rampant problem for online readers, and detecting it in tweets remains a challenge. We propose Click-BERT (Clickbait Detector with Bidirectional Encoder Representations from Transformers), which identifies clickbait by combining recent pre-trained models (BERT and Longformer) with recurrent neural networks (Bi-LSTM and Bi-GRU) in a parallel model structure. Our model supports end-to-end training without any hand-crafted features and achieves new state-of-the-art results on the Webis 17 dataset.

- Final Report released.
- Presentation Slides released.
- Our baseline2 (headline Bi-LSTM with BERT) achieved a new SOTA on accuracy and MSE on Webis 17! See our progress report for details.
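
For readers unfamiliar with the two metrics mentioned above, here is a minimal sketch of how MSE and accuracy could be computed for a clickbait scorer. It assumes that each post has a predicted clickbait score and a ground-truth score in [0, 1], and that a post counts as clickbait when its score exceeds 0.5. The function names, the threshold, and the toy values are illustrative assumptions, not taken from this project's code or results.

```python
from typing import Sequence


def mean_squared_error(predicted: Sequence[float], truth: Sequence[float]) -> float:
    """Average squared difference between predicted and true scores."""
    if len(predicted) != len(truth) or len(predicted) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum((p - t) ** 2 for p, t in zip(predicted, truth)) / len(predicted)


def accuracy(
    predicted: Sequence[float],
    truth: Sequence[float],
    threshold: float = 0.5,  # assumed cut-off between clickbait and non-clickbait
) -> float:
    """Fraction of posts whose thresholded prediction matches the thresholded truth."""
    if len(predicted) != len(truth) or len(predicted) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    hits = sum((p > threshold) == (t > threshold) for p, t in zip(predicted, truth))
    return hits / len(predicted)


if __name__ == "__main__":
    # Toy values for illustration only; these are not results from the project.
    toy_predicted = [0.9, 0.2, 0.6, 0.1]
    toy_truth = [1.0, 0.0, 0.4, 0.2]
    print("MSE:", mean_squared_error(toy_predicted, toy_truth))
    print("Accuracy:", accuracy(toy_predicted, toy_truth))
```

Lower MSE and higher accuracy are better. Keeping the threshold as a parameter makes it easy to check how sensitive the accuracy figure is to the chosen cut-off.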