Source code for the paper "Graph Neural Networks with Learnable Structural and Positional Representations" by Vijay Prakash Dwivedi, Anh Tuan Luu, Thomas Laurent, Yoshua Bengio and Xavier Bresson.

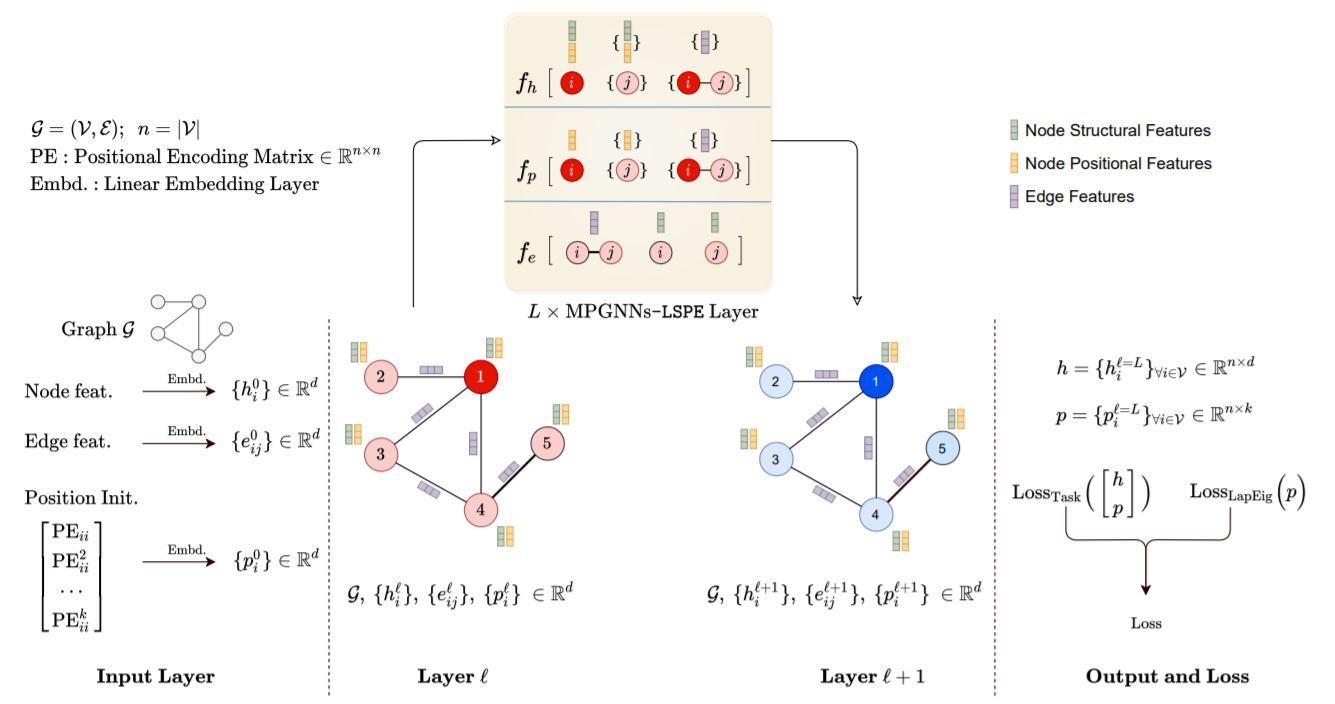

We propose a novel GNN architecture in which the structural and positional representations are decoupled and learnt separately, so that each of these two essential properties is captured by its own representation. The architecture, named MPGNNs-LSPE (MPGNNs with Learnable Structural and Positional Encodings), is generic in that it can be applied to any GNN model that fits into the popular 'message-passing framework', including Transformers.
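The decoupling can be illustrated with a minimal NumPy sketch of one layer in this spirit. It is not the repository's implementation. It assumes a plain mean-aggregation message-passing step in place of the GNN backbones used in the paper, a ReLU on the structural stream and a tanh on the positional stream. The names `mean_aggregate`, `lspe_layer`, `w_h` and `w_p` are hypothetical. The structural update consumes the concatenation of both representations, while the positional update sees only positional features.

```python
import numpy as np


def mean_aggregate(adj: np.ndarray, x: np.ndarray) -> np.ndarray:
    """Average each node's neighbour features; isolated nodes receive zeros."""
    deg = adj.sum(axis=1, keepdims=True)
    return (adj @ x) / np.maximum(deg, 1.0)


def lspe_layer(adj, h, p, w_h, w_p):
    """One hypothetical message-passing layer with decoupled representations.

    h: (n, d_h) structural features, p: (n, d_p) positional features,
    w_h: (d_h + d_p, d_h) and w_p: (d_p, d_p) are illustrative weight matrices.
    Assumed activations: ReLU for h, tanh for p (sketch only, not the repo code).
    """
    # Structural stream: messages carry both h and p, concatenated per node.
    hp = np.concatenate([h, p], axis=1)
    h_new = np.maximum(0.0, mean_aggregate(adj, hp) @ w_h)  # ReLU
    # Positional stream: messages carry p only; tanh keeps values in [-1, 1].
    p_new = np.tanh(mean_aggregate(adj, p) @ w_p)
    return h_new, p_new


# Usage: a 4-node cycle graph with random features and weights.
rng = np.random.default_rng(0)
adj = np.array([[0, 1, 0, 1],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [1, 0, 1, 0]], dtype=float)
h = rng.normal(size=(4, 3))
p = rng.normal(size=(4, 2))
w_h = rng.normal(size=(5, 3))
w_p = rng.normal(size=(2, 2))
for _ in range(2):  # stack two layers
    h, p = lspe_layer(adj, h, p, w_h, w_p)
print(h.shape, p.shape)
```

Because the positional stream never reads `h`, positional information is refined by its own update rule rather than being overwritten by structural features, while the structural stream still receives it at every layer.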

Follow these instructions to install the repo and set up the environment.

Proceed as follows to download the benchmark datasets.

Use this page to run the code and reproduce the published results.

📃 Paper on arXiv

@article{dwivedi2021graph,
  title={Graph Neural Networks with Learnable Structural and Positional Representations},
  author={Dwivedi, Vijay Prakash and Luu, Anh Tuan and Laurent, Thomas and Bengio, Yoshua and Bresson, Xavier},
  journal={arXiv preprint arXiv:2110.07875},
  year={2021}
}